Imaging without lenses

New imaging systems, microscopes, and video sensors produce digital images by computation rather than with traditional lenses.

Even medieval artisans knew how to make glass lenses and curved mirrors for projecting images. These components were used to build microscopes, camera obscuras, telescopes, and other instruments that let us see very small and very large objects, near and far, on Earth and in the sky. The next revolution in image formation came around the middle of the 19th century with the invention of photography. It became possible to capture "frozen moments", reproduce them, and make copies. Today the era of chemical photography is drawing to a close and a new era, digital imaging, is flourishing. Its roots lie in television technology, but we will date the start of the era to 1975, when the first digital camera appeared. Today, billions of webcams and mobile-phone cameras around the world take more than a trillion images a year, and many of them are posted online immediately. Despite the explosive growth in the number, variety, and uses of imaging systems, the task of optical engineers has remained largely unchanged: to create a high-quality optical image that accurately captures the scene, so that it “looks good.”

However, over the last 10 to 20 years a new paradigm has been emerging: computational imaging. It may not completely replace traditional approaches, but it calls centuries-old assumptions into question and opens up alternative ways of designing imaging systems. It has already given us imaging systems with new functions and form factors, including ultra-miniature devices for capturing macroscopic objects and microscopes without lenses.

Computational imaging

As the name implies, computation plays a key role in forming the final digital image. Digital image processing has long been used to improve images (removing red-eye from flash photographs, correcting colors, and so on), but lens designs were never made with that processing in mind. Digital signal processing can nevertheless correct optical distortions such as pincushion distortion, or the barrel distortion that wide-angle lenses produce at the edges of the frame. When the Hubble Space Telescope sent its first images to Earth in 1990, they turned out to be much blurrier than expected. It soon became clear that there was a flaw in the optics. NASA scientists worked out what was wrong and, until the telescope was repaired several years later, compensated for many of the defects with sophisticated digital processing algorithms.

In the mid-1990s, Wade Thomas Cathey and Edward R. Dowski, Jr. came up with the idea of designing lenses that form blurry, “degraded” optical images, degraded in such a way that digital processing can turn them into final images as good as, or even better than, those shot with traditional lenses. In particular, Cathey and Dowski addressed a characteristic limitation of all traditional cameras: limited depth of field. If you focus on an object at a medium distance, it looks sharp, but objects nearer and farther away are blurred. Depth of field is the range of distances within which all objects look acceptably sharp. The two scientists devised a new lens that blurs the optical images of objects at all distances almost equally. A dedicated algorithm then sharpens the entire image, yielding a depth of field unattainable with ordinary lenses. Although many researchers have since improved on the method, Cathey and Dowski's idea greatly advanced the discipline of computational imaging.

Another consequence of this work is that lens designs are now developed to create optical images for computers, not for people. Ironically, in our era of ubiquitous photography, very few people have ever seen the actual optical images that cameras produce. Long gone are the days when a photographer, bent over the camera under a thick dark cloth, pulled back the cover and, before inserting the film holder, saw on a ground-glass screen the “live” image formed directly by the lens. Today, what we see on our screens is the result of digitally processing the optical images captured by silicon sensors.

The next application of combining optics with digital processing was simplifying lens design. A smartphone camera lens may consist of 7 or 8 optical elements, and professional camera lenses sometimes have more than 15. So many elements are needed to correct image defects, the aberrations inherent in any optical system: chromatic (color fringes around objects) and geometric (distortion of the shape and proportions of objects). In other words, complex lens designs are needed to produce “good-looking” images. Combining optics with digital processing shifts part of the aberration correction to the digital side, which makes it possible to drop some optical elements without compromising the quality of the final digital image. The processing algorithms act as virtual optical elements. This approach has made it possible to build more compact and cheaper optical systems without loss of quality.

How far can these ideas be taken? How much of the task of image formation can be shifted from optics to computation? How simple can the optics be and still yield a decent image? Is it possible to get rid of lenses and mirrors altogether? Over the past few years this has been achieved in three ways, each of which eliminates lenses, and the optical images they form, entirely. The methods are based on diffraction, optical phase reconstruction, and compressive sensing. In each case, computation is used heavily to obtain a final image suitable for people.

Diffraction imaging

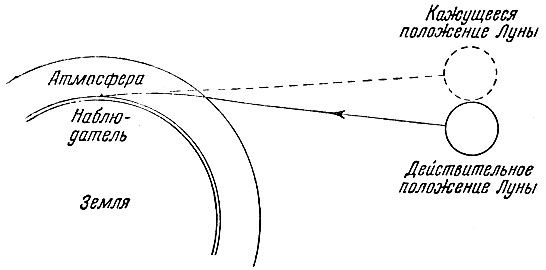

Traditional lenses focus a beam of light by refraction: light bends when it crosses an interface between media (such as air and glass) in which the speed of light differs. Refraction is why a pencil standing in a glass of water appears bent: light reflected from the pencil is refracted when it passes into the air on its way to your eyes, so we see the underwater part of the pencil in a place where it is not.

Incidentally, because of refraction at the boundary between space and the Earth's atmosphere, celestial objects appear somewhat higher in the sky than they really are.

Curved mirrors, like those used in large telescopes, form an image differently: by reflection. To understand the difference between refraction and reflection, it helps to picture light as rays (lines).

Two other physical phenomena can change the direction in which light propagates, and they rely on its wave nature (recall wave-particle duality): diffraction and interference. When two coherent light waves meet, they superpose, and their amplitudes add to give the amplitude of the resulting wave. If the maxima of one wave always coincide with the maxima of the other, the waves reinforce each other; this is constructive interference. If the maxima of one wave always coincide with the minima of the other, the waves cancel each other; this is destructive interference, and the light may vanish altogether.
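The two cases can be checked numerically. The following is a minimal sketch, not taken from the article: it assumes each wave can be written as a complex phasor, and the function name `combined_intensity` is made up for illustration.

```python
import cmath
import math

def combined_intensity(amplitude_a, amplitude_b, phase_difference):
    """Intensity of two superposed coherent waves written as complex phasors.

    The phasors are added first; the intensity is the squared magnitude of the sum.
    """
    wave_a = amplitude_a * cmath.exp(0j)
    wave_b = amplitude_b * cmath.exp(1j * phase_difference)
    return abs(wave_a + wave_b) ** 2

# Maxima coincide with maxima: the waves reinforce each other.
constructive = combined_intensity(1.0, 1.0, 0.0)
# Maxima coincide with minima (half a period apart): the waves cancel.
destructive = combined_intensity(1.0, 1.0, math.pi)
print(constructive, destructive)
```

Two equal waves in phase give four times the intensity of one wave, not twice, because amplitudes add before squaring; half a period out of phase, the sum is zero up to rounding.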

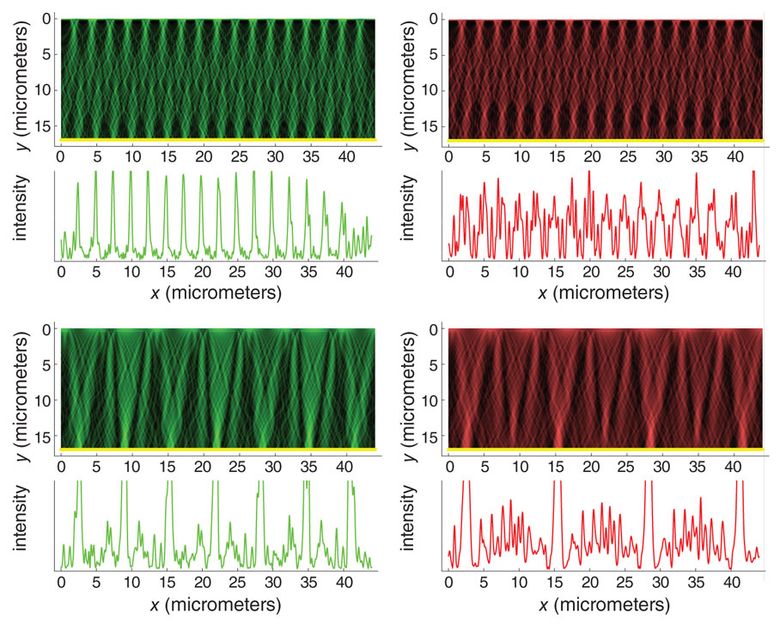

Light can be steered by diffraction by directing it onto a diffraction grating, a series of very fine rulings on a smooth surface. Because different wavelengths are diffracted in different directions, the light is spread into colors. For example, when white light reflects off the tiny grooves on the surface of a CD or DVD, we see rainbow bands. Because the diffraction angle depends on wavelength, it is impossible to make a diffraction grating that simply replaces a lens. An optical image formed by a grating will never look as good as one from a well-designed lens. Nevertheless, it is quite possible to produce acceptable digital images by combining diffractive optics with signal processing matched to those optics.
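The wavelength dependence follows from the standard grating equation for light at normal incidence, d·sin θ = m·λ, where d is the groove spacing and m the diffraction order. The sketch below applies it to three colors. The 1600 nm spacing (roughly a CD's track pitch) and the three wavelengths are illustrative assumptions, not values from the article.

```python
import math

def diffraction_angle_deg(wavelength_nm, pitch_nm, order=1):
    """Angle of a diffraction order at normal incidence, from d*sin(theta) = m*lambda.

    Returns None when the requested order does not propagate (|sin(theta)| > 1).
    """
    sine = order * wavelength_nm / pitch_nm
    if abs(sine) > 1:
        return None
    return math.degrees(math.asin(sine))

PITCH_NM = 1600.0  # assumed groove spacing, roughly the track pitch of a CD

for name, wavelength_nm in [("blue", 450.0), ("green", 550.0), ("red", 650.0)]:
    angle = diffraction_angle_deg(wavelength_nm, PITCH_NM)
    print(f"{name}: {angle:.1f} degrees")
```

Longer wavelengths leave the grating at larger angles, which is why white light fans out into a rainbow and why a single grating cannot bring all colors to the same focus the way a lens does.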

Imaging using diffraction

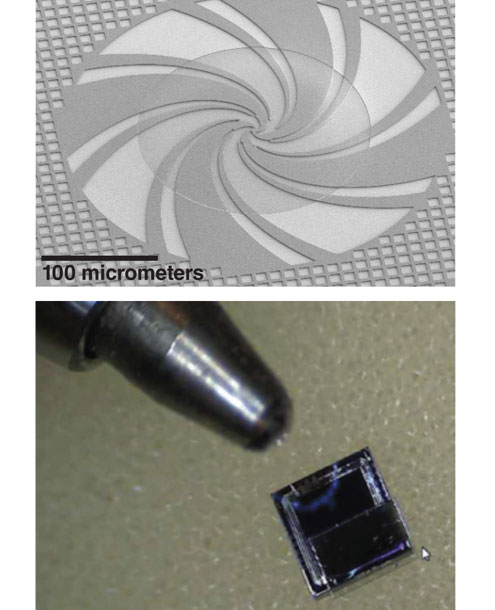

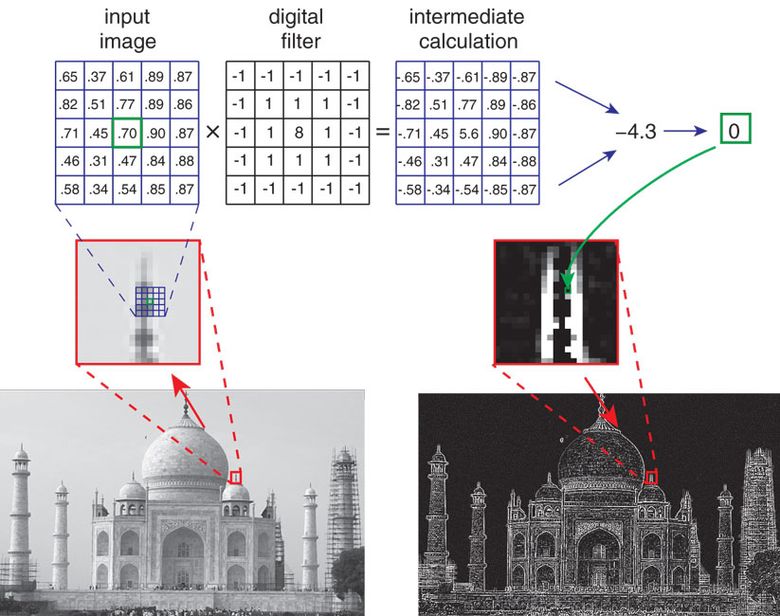

One class of lensless devices for macroscopic photography uses miniature diffraction gratings made as steps in the thickness of a transparent material (glass or silicate), which delay one portion of the incident light relative to another. The mathematical properties of the step pattern are such that the distribution of light in the material depends only weakly on wavelength, and hence on the slight variations in the thickness of the glass that inevitably arise during manufacture. The gratings are mounted on a photosensitive sensor like the one in an ordinary digital camera. Incident light passes through the grating and reaches the sensor already decomposed into "components" in a particular way. The result looks nothing like an ordinary image: it is a blurry blob, unintelligible to the human eye. Yet this blob contains enough visual information, albeit unusually distributed, to recreate the desired image by a computational process called image convolution.
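A toy one-dimensional version shows how a scene can be recovered from a blur whose shape is known. This is a minimal sketch under strong assumptions, not the algorithm of any real device: the sensor is assumed to record a circular convolution of the scene with a known response, and the scene is recovered with a regularized inverse filter (a step usually called deconvolution). The kernel values, the `regularization` parameter, and all function names are hypothetical.

```python
import cmath

def dft(x, inverse=False):
    """Naive discrete Fourier transform (O(n^2)); adequate for short signals."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(total / n if inverse else total)
    return out

def circular_convolve(signal, kernel):
    """Blur `signal` with `kernel` using wrap-around convolution."""
    n = len(signal)
    return [sum(signal[(i - j) % n] * kernel[j] for j in range(n)) for i in range(n)]

def deconvolve(blurred, kernel, regularization=1e-6):
    """Regularized inverse filter: X = B * conj(K) / (|K|^2 + regularization)."""
    B, K = dft(blurred), dft(kernel)
    X = [b * k.conjugate() / (abs(k) ** 2 + regularization) for b, k in zip(B, K)]
    return [value.real for value in dft(X, inverse=True)]

scene = [0, 0, 1, 0, 0, 3, 0, 0, 0, 2, 0, 0, 0, 0, 0, 0]
kernel = [0.5, 0.3, 0.2] + [0.0] * 13   # hypothetical, known optical response
blurred = circular_convolve(scene, kernel)   # what the sensor would record
recovered = deconvolve(blurred, kernel)      # computational reconstruction
print([round(value, 3) for value in recovered])
```

The regularization term keeps the division stable at frequencies where the response is weak. Raising it suppresses noise at the cost of sharpness.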

The reconstruction algorithm is somewhat sensitive to noise, such as random fluctuations in the number of photons or the electrical noise introduced when the sensor signal is converted to numbers (so-called quantization error). As a result, the image may look noisy. That quality is sufficient for a number of simple tasks (for example, counting the people in the frame), but a better image requires capturing more information about the scene. The straightforward solution is to use several miniature phase gratings, each designed to capture different information about the scene. Each grating forms a component digital image, and the components can then be processed together to produce a single, better image.

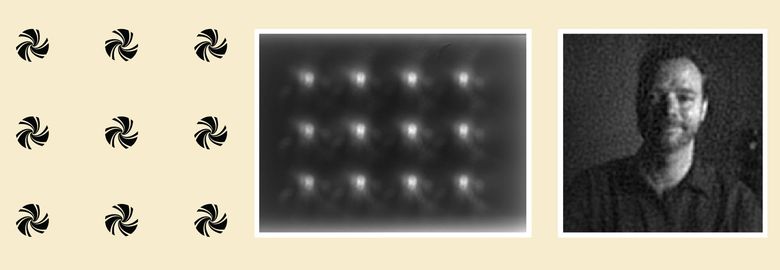

One type of lensless imaging system uses gratings that scatter light rather than focusing it as a lens does. In this example, an array of 12 binary phase microgratings (left) is designed to capture as much visual information about the scene as possible. Light passing through the array produces 12 blurry spots, none of which lets a person tell what was photographed (center). However, this optical image contains enough information for digital processing called “image convolution” to produce a perfectly recognizable portrait (right).

This approach can be used not only to form an image of a scene but also to analyze it: to detect visual properties (for example, whether a human face is present), to estimate the direction and speed of the overall motion of the scene (visual flow), or to count the people in a room. In such cases the diffraction gratings are designed to extract the required information, and the processing algorithm is tailored to the specific task. For example, to read a vertical bar code, we use a vertical diffraction grating and an algorithm that thresholds each pixel of the digital image: light pixels become white and dark pixels become black. The result is a black-and-white digital image that a barcode-reading algorithm can recognize.
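The thresholding step in the barcode example is simple enough to sketch directly. The pixel values and the threshold of 128 below are illustrative assumptions; a real reader would likely choose the threshold from the image itself.

```python
def binarize(image, threshold=128):
    """Map each grayscale pixel (0-255) to white (255) or black (0)."""
    return [[255 if pixel >= threshold else 0 for pixel in row] for row in image]

# Two rows of a hypothetical grayscale image of vertical bars.
gray = [
    [200, 190, 40, 35, 210, 60],
    [205, 180, 50, 30, 220, 55],
]
black_and_white = binarize(gray)
print(black_and_white)
```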

Microscopy using phase recovery

The approach to building lensless microscopes differs from that used for computational cameras for macroscopic objects, although it too relies on diffraction. Unlike a device that captures a scene under ordinary illumination from the Sun or lamps, a microscope lets you choose the illumination: coherent laser light or monochromatic light from one or more sources. This makes it possible to control the diffraction and interference of the light. Moreover, the objects of interest are so small that diffraction occurs as the light passes through the objects themselves rather than through an artificial diffraction grating.

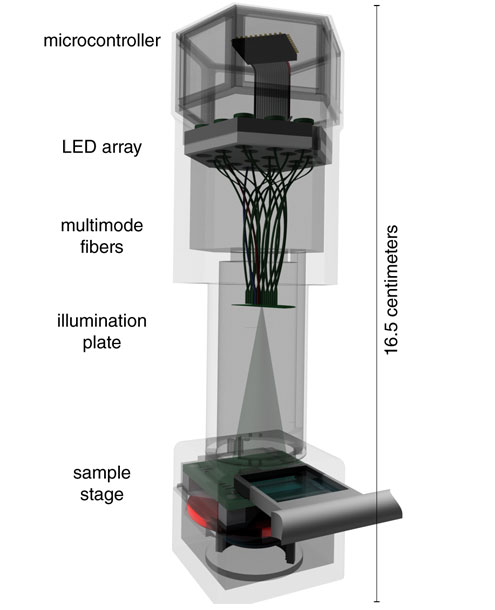

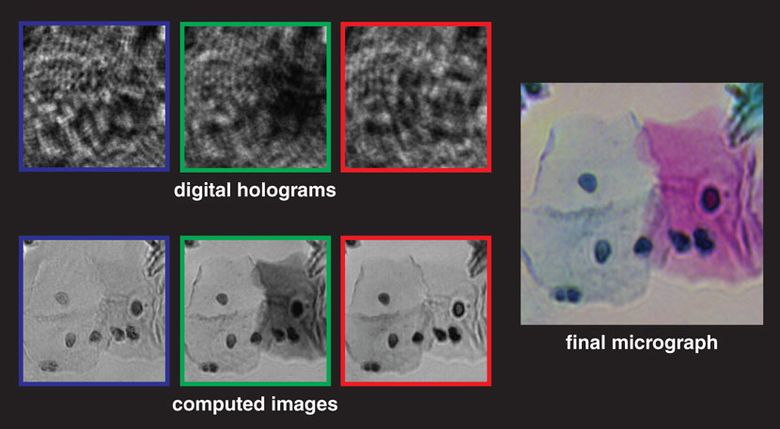

In such a microscope, the sample is placed on top of a photosensitive sensor with a large number of small pixels, for example a 10-megapixel sensor of the kind often found in digital cameras. This arrangement is also called an on-chip microscope, because the sample sits directly on the image sensor. Light from a laser or a spectrally pure colored LED falls on the sample and is scattered by the objects being imaged. The resulting diffracted waves, which form the object beam, are superimposed on the illumination that passes through the sample undisturbed, the reference beam. The result is a complex interference pattern that is recorded by the sensor; this technique is known as digital in-line holography. The raw image vaguely resembles microscopic shadows of the sample, and in some cases that is enough to roughly estimate the number and location of objects. But the raw holographic image is too murky and noisy, contains “ring artifacts”, and does not reveal the morphology of the objects. In short, it is a poor picture.

The interference pattern goes through several stages of digital processing, the most important of which is the phase reconstruction algorithm. Using the physics of optical interference, it infers the structure and location of the objects in the sample. In short, the algorithm recovers the optical phase information that was lost in the hologram, since the sensor records only the intensity of the interference pattern, not the phases of the individual light waves. The algorithm iteratively estimates the phase in the object beam that most likely produced the recorded interference pattern. Once the phase of the object beam is known, the algorithm propagates the beam backward in time to reconstruct an image of the objects, forming the final digital image.
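The last step, propagating the beam backward once its phase is known, can be sketched in one dimension with the angular-spectrum method. This is a minimal illustration under stated assumptions, not the microscope's actual algorithm. The iterative phase search is omitted, so the code simply reuses the complex field at the sensor. The wavelength, pixel pitch, distance, and the toy phase object are all made-up values.

```python
import cmath
import math

def dft(x, inverse=False):
    """Naive discrete Fourier transform (O(n^2)); adequate for short signals."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
        out.append(total / n if inverse else total)
    return out

def propagate(field, distance_um, wavelength_um, pitch_um):
    """Angular-spectrum propagation of a sampled 1-D complex field.

    A negative distance propagates the field backward.
    """
    n = len(field)
    propagated = []
    for k, value in enumerate(dft(field)):
        fx = (k if k < n // 2 else k - n) / (n * pitch_um)  # spatial frequency
        kz_squared = 1.0 / wavelength_um ** 2 - fx ** 2
        if kz_squared <= 0:
            propagated.append(0j)  # evanescent component, dropped
        else:
            phase = 2 * math.pi * distance_um * math.sqrt(kz_squared)
            propagated.append(value * cmath.exp(1j * phase))
    return dft(propagated, inverse=True)

# Hypothetical transparent object: it changes only the phase of the light.
object_field = [cmath.exp(1j * (1.0 if 12 <= i < 20 else 0.0)) for i in range(32)]
sensor_field = propagate(object_field, 100.0, 0.5, 1.0)
hologram = [abs(value) ** 2 for value in sensor_field]  # all a real sensor records
# Assumes the lost phase has already been recovered, so sensor_field is known.
recovered = propagate(sensor_field, -100.0, 0.5, 1.0)
```

In a real instrument only `hologram` is available, which is why the iterative phase-reconstruction stage has to come first.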

As with the macroscopic devices, resolution is increased by capturing several optical images, each containing slightly different information. For example, before each frame is recorded, the light source, the sample, or the sensor can be shifted slightly. The frames are then processed and combined into a single higher-resolution interference image (still unintelligible to humans), after which the phase-recovery and backward-propagation steps are performed.

Lensless on-chip microscopes have several advantages.

First, the sample area (that is, the field of view) can be extremely large; it is limited only by the size of the sensor on which the sample is placed. Modern sensors provide a field of view from 20 square millimeters to 20 square centimeters.

Second, lensless microscopes can image even transparent objects (for example, most bacteria in a layer of water), provided they change the phase of the light passing through them. Specialized lens-based optical microscopes can also image such “phase objects”, but with a far smaller field of view and overall sample size.

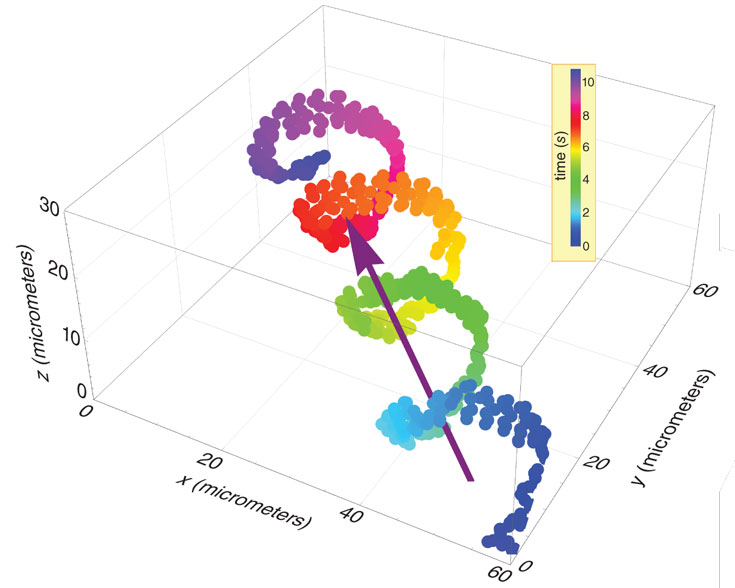

Third, digital processing of the optical image makes it possible to pick out different types of cells (for example, sperm cells or blood cells in capillaries) and track their movement, giving doctors and biologists important data.

Fourth, such microscopes are much cheaper and more compact than traditional ones. Lensless microscopes can be attached to a mobile phone and used in rural areas, and the digital data can be sent anywhere for more thorough analysis.

Compressive sensing technique

The third approach to lensless imaging builds on recent advances in mathematics and signal statistics: compressive sensing. The optical image on the sensor is a complex signal, represented as a list of numbers and processed by various algorithms. Just as a complex sound signal is made up of many simpler sounds, each added in the right proportion, a picture is built from a large number of simpler images. Such a set of simple images, or signals, is called a basis. For sound, the most common basis is a set of pure cosine tones. However complex the sound, whether a car horn or a Beethoven symphony, it can be created by adding together a large number of basis cosine waves, each with the appropriate amplitude and time shift.

What might play the same role for images? The two most popular and useful visual bases are two-dimensional cosine waves and multiresolution wavelet patterns. These basis elements are mathematically elegant and underlie the modern JPEG and JPEG 2000 image compression schemes. Instead of storing and transmitting the value of every pixel of a digital image, you use a file that describes the amplitudes of the component basis signals. As a result, the “compressed” file is much smaller than the image itself. For decades these bases have served faithfully as tools for processing digital images, but they did not lead to new methods of optical design, because no optical element makes it easy to implement a basis.
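The idea of storing amplitudes instead of samples can be tried on a short one-dimensional signal. The sketch below uses an orthonormal discrete cosine transform, the one-dimensional relative of the cosine basis used by JPEG. The test signal is constructed from exactly two basis functions, an assumption chosen so that two stored numbers suffice; real images are only approximately this compressible.

```python
import math

def dct(signal):
    """Orthonormal DCT-II: amplitudes of the cosine basis functions."""
    n = len(signal)
    return [
        math.sqrt((1 if k == 0 else 2) / n)
        * sum(signal[t] * math.cos(math.pi * (t + 0.5) * k / n) for t in range(n))
        for k in range(n)
    ]

def idct(coeffs):
    """Inverse of dct(): rebuild the signal by summing weighted cosines."""
    n = len(coeffs)
    return [
        sum(
            math.sqrt((1 if k == 0 else 2) / n) * coeffs[k]
            * math.cos(math.pi * (t + 0.5) * k / n)
            for k in range(n)
        )
        for t in range(n)
    ]

def keep_largest(coeffs, count):
    """Zero all but the `count` largest-magnitude coefficients."""
    order = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)
    kept = set(order[:count])
    return [c if k in kept else 0.0 for k, c in enumerate(coeffs)]

N = 16
signal = [
    3 * math.cos(math.pi * (t + 0.5) * 2 / N) + math.cos(math.pi * (t + 0.5) * 5 / N)
    for t in range(N)
]
compressed = keep_largest(dct(signal), 2)   # store 2 amplitudes instead of 16 samples
restored = idct(compressed)
```

Here the 16 samples are reproduced from two amplitudes. For signals that are not exact sums of a few basis functions, dropping small coefficients introduces a small error, which is the trade-off lossy compression accepts.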

Enter compressive sensing. Theoretical results from statistics show that, as long as the information in the scene is redundant (that is, the image is compressible), there is no need to measure the basis components directly; measurements of random samples are enough. Given such “coded measurements”, you can rely on the fact that the signal is well represented by basis elements (cosine waves or wavelets) and recover the image using compressive sensing techniques. Moreover, this new class of image recovery methods needs far fewer measurements than before.
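A toy example shows why far fewer measurements than unknowns can be enough. It is a sketch under stated assumptions: the scene has 16 unknowns, only two of them nonzero, and is observed through 6 random coded measurements. The Gaussian measurement matrix, the sizes, and the function names are illustrative. The exhaustive search over pairs stands in for the convex optimization used in practice, which scales to real image sizes.

```python
import itertools
import random

def measure(matrix, signal):
    """Each row of `matrix` produces one coded measurement of the signal."""
    return [sum(a * x for a, x in zip(row, signal)) for row in matrix]

def fit_two_columns(matrix, y, i, j):
    """Least-squares fit of y using only unknowns i and j (2x2 normal equations)."""
    ci = [row[i] for row in matrix]
    cj = [row[j] for row in matrix]
    aii = sum(a * a for a in ci)
    ajj = sum(b * b for b in cj)
    aij = sum(a * b for a, b in zip(ci, cj))
    bi = sum(a * v for a, v in zip(ci, y))
    bj = sum(b * v for b, v in zip(cj, y))
    det = aii * ajj - aij * aij
    xi = (bi * ajj - bj * aij) / det
    xj = (bj * aii - bi * aij) / det
    residual = sum((v - xi * a - xj * b) ** 2 for v, a, b in zip(y, ci, cj))
    return xi, xj, residual

def recover_two_sparse(matrix, y):
    """Find the signal with at most two nonzeros that best explains y."""
    n = len(matrix[0])
    candidates = (
        fit_two_columns(matrix, y, i, j) + (i, j)
        for i, j in itertools.combinations(range(n), 2)
    )
    xi, xj, _, i, j = min(candidates, key=lambda result: result[2])
    signal = [0.0] * n
    signal[i], signal[j] = xi, xj
    return signal

random.seed(0)
N_UNKNOWNS, N_MEASUREMENTS = 16, 6
matrix = [[random.gauss(0.0, 1.0) for _ in range(N_UNKNOWNS)] for _ in range(N_MEASUREMENTS)]
scene = [0.0] * N_UNKNOWNS
scene[3], scene[11] = 2.0, -1.5      # a "simple" scene: only two nonzero values
y = measure(matrix, scene)           # 6 numbers instead of 16
recovered = recover_two_sparse(matrix, y)
```

Six equations cannot pin down 16 arbitrary unknowns, but they can single out the one candidate that is both consistent with the measurements and simple.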

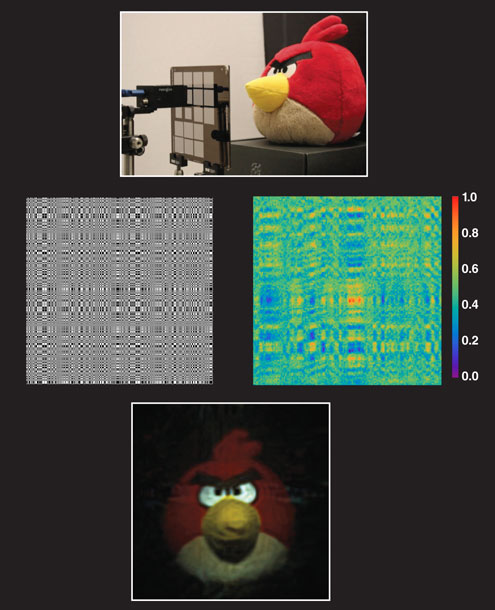

A compressive capture device (above) passes light from the scene through several two-dimensional masks. The middle pictures show the result for two different masks. The compressive reconstruction algorithm takes the information from all the masks and finds the “simplest” signal consistent with the set of measurements made by the sensor (left). The more complex the scene, or the higher the image quality required, the more component images must be captured.

This theoretical development made possible new approaches to the optical design of cameras, building on earlier advances in x-ray and gamma-ray imaging. Coded apertures (carefully designed fixed two-dimensional masks of transparent and opaque regions) make it possible to capture coded measurements of a scene with conventional light-sensitive sensors. One such design, called FlatCam, was developed by Ashok Veeraraghavan and his colleagues at Rice University. It consists of a simple amplitude mask placed over a conventional image sensor (see the illustration above). Light from the scene, in this case a character from Angry Birds, passes (and diffracts) through the transparent regions of the amplitude mask and hits the sensor. Note that there are no lenses, so no traditional optical image is formed. Instead, the sensor records a complex, chaotic-looking pattern of light that carries information about both the scene and the mask pattern. Each pixel of the sensor provides a different coded measurement of the scene. Then, using the mathematical and algorithmic methods of compressive sensing, the system finds the “simplest” scene consistent with all these measurements.

The lensless approach has several important advantages.

The cost of a conventional camera is largely determined by the cost of the lenses and their assembly, so eliminating the lens can greatly reduce the cost of the product. The whole camera, mask and sensor included, can be made using only conventional semiconductor manufacturing techniques, which improves scalability and lowers the price. Such cameras can also be thinner than 0.5 millimeter and weigh less than 0.2 grams, so they can be used where today's bulky devices cannot. In addition, the FlatCam design captures all the necessary information about the scene in a single frame, which makes real-time video of dynamic scenes possible.

The rules are changing

The engineering of imaging systems is entering a new era in which optical elements, which exploit the physical properties of light and tangible materials, can be designed together with digital algorithms, which operate on intangible information. Many familiar principles and unwritten rules of thumb that have guided optical engineers for centuries, including the need for lenses and curved mirrors or for structured digital bases such as cosine functions, are being overturned. Traditional optical images are so familiar and useful that we are reluctant to view them more abstractly, purely as information.

Future directions for macroscopic lensless devices include the development of specialized diffraction gratings and processing algorithms. For example, if the task is to determine whether a human face is in the frame, the grating itself should, as far as possible, extract only the visual information that indicates the presence of a face. It is also tempting to shift as much of the end-to-end computational load as possible onto the optics, in order to reduce the amount of computation and hence the power consumption. In lensless microscopy, spatial and temporal resolution need to be improved, and digital microscopes should be developed for diagnosing specific diseases, especially those common in developing countries.

Lenses and curved mirrors have served us for hundreds of years, and we are unlikely to abandon them completely. Nevertheless, the new paradigm of computational imaging offers other paths and opens up new applications for photo and video devices.

Source: https://habr.com/ru/post/410345/