The GANpaint graphical editor draws objects and demonstrates the capabilities of GANs.

One brush adds or removes trees, another does the same for people, and so on.

Generative adversarial networks (GANs) create strikingly realistic images, often indistinguishable from real ones. Since such networks were invented in 2014, a great deal of research has been carried out in this area and a number of applications have been built, including image manipulation and video prediction. Several GAN variants have been developed, and experimentation continues.

Despite this tremendous success, many questions remain. It is unclear what exactly causes badly unrealistic artifacts, what minimal knowledge is needed to generate specific objects, why one GAN variant works better than another, and what fundamental differences are encoded in their weights. To better understand the inner workings of GANs, researchers at MIT, the MIT-IBM Watson AI Lab, and IBM Research developed the GAN Dissection framework and the GANpaint program, a graphical editor built on a generative adversarial network.

The work is accompanied by a scientific paper that explains the framework's functionality in detail and discusses the questions the researchers are trying to answer. In particular, they study the internal representations of generative adversarial networks. The GAN Dissection framework, an “analytical framework for visualizing and understanding GANs at the unit, object and scene level”, is meant to help with this.

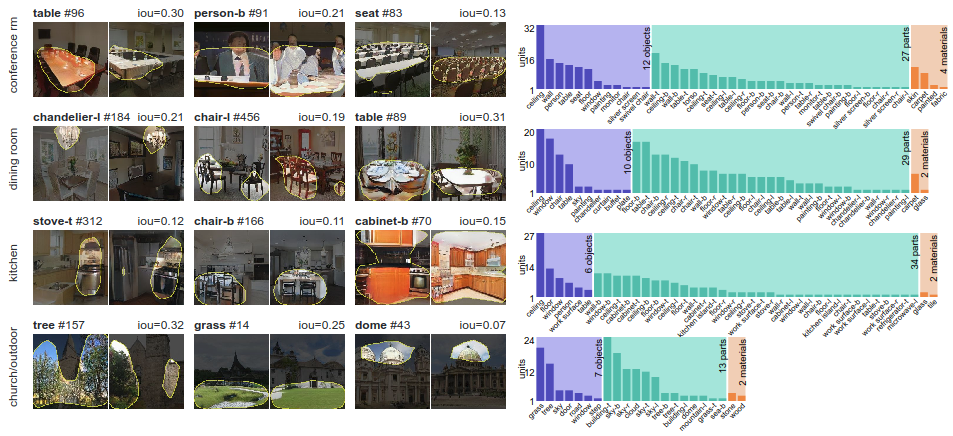

Using segmentation-based network dissection, the system identifies groups of “interpretable units” that are closely related to object concepts. It then quantifies the causal effect of these interpretable units "by measuring the ability of interventions to control objects in the output." Put simply, the researchers study the contextual relationship between specific objects and their surroundings by inserting the discovered object concepts into new images.
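The matching step can be illustrated with a small NumPy sketch. It is a simplification, not the authors' implementation: it assumes a single image, an activation map already resized to the resolution of the segmentation mask, and a fixed quantile threshold. The function names `unit_concept_iou` and `best_concept_per_unit` are hypothetical.

```python
import numpy as np


def unit_concept_iou(activation, concept_mask, quantile=0.99):
    """Intersection-over-union between a thresholded unit activation map
    and a boolean segmentation mask for one concept.

    Assumption: `activation` and `concept_mask` share the same (H, W) shape,
    and the threshold is a fixed quantile of the activation values.
    """
    threshold = np.quantile(activation, quantile)
    unit_mask = activation > threshold
    intersection = np.logical_and(unit_mask, concept_mask).sum()
    union = np.logical_or(unit_mask, concept_mask).sum()
    return float(intersection) / float(union) if union > 0 else 0.0


def best_concept_per_unit(activations, concept_masks, quantile=0.99):
    """For each unit in `activations` (shape: units x H x W), return the
    concept name with the highest IoU together with that IoU."""
    matches = []
    for activation in activations:
        scores = {
            name: unit_concept_iou(activation, mask, quantile)
            for name, mask in concept_masks.items()
        }
        best = max(scores, key=scores.get)
        matches.append((best, scores[best]))
    return matches
```

A unit whose strongest activations overlap the "tree" pixels far more than any other class would be labeled a tree unit under this scheme.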

The GAN Dissection framework shows that the neurons a GAN learns depend on the type of scene it learns to draw: for example, a jacket neuron emerges when the network learns conference rooms, and a plate neuron emerges when it learns to draw kitchens.

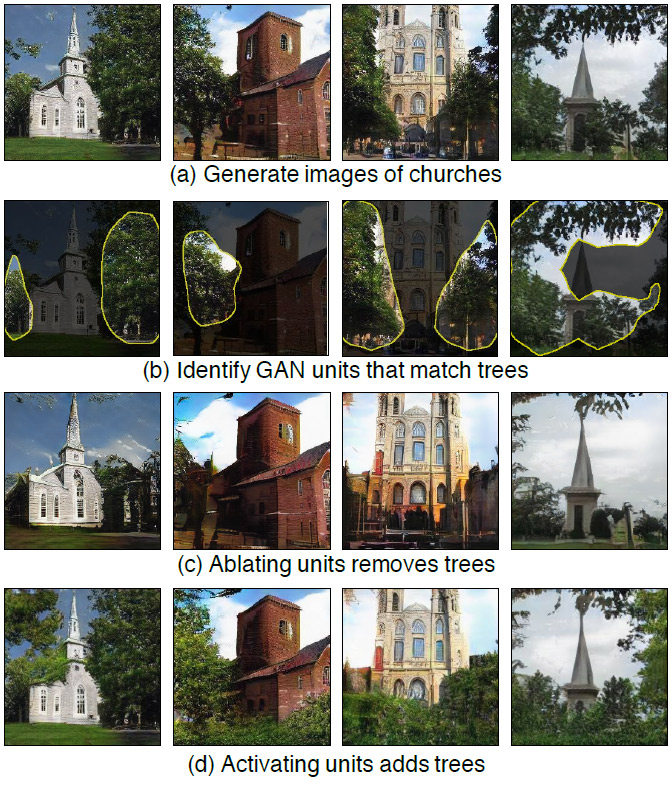

To verify that sets of neurons actually control the drawing of objects rather than merely correlating with them, the framework intervenes in the network, activating and deactivating neurons directly. This is how the GANpaint graphical editor works: it is a visual demonstration of the analytical framework.
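The idea of such an intervention can be sketched as follows. This is a toy illustration under stated assumptions, not the framework's code: `decode` stands in for the remaining generator layers, `concept_score` stands in for a segmentation-based measure of how much of the concept appears in the output, and `on_value` is an assumed constant activation level. All of these names are hypothetical.

```python
import numpy as np


def force_units(features, units, value):
    """Return a copy of `features` (shape: units x H x W) in which the
    selected units are set to a constant value at every location."""
    edited = features.copy()
    edited[list(units)] = value
    return edited


def causal_effect(features, units, decode, concept_score, on_value=1.0):
    """Difference in concept score between forcing the units on and
    forcing them off (ablation).

    `decode` and `concept_score` are caller-supplied placeholders for the
    rest of the generator and for a segmentation-based score.
    """
    ablated = decode(force_units(features, units, 0.0))
    inserted = decode(force_units(features, units, on_value))
    return concept_score(inserted) - concept_score(ablated)
```

A large positive difference suggests the units cause the concept to appear, while a difference near zero suggests they only correlate with it.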

GANpaint activates and deactivates neurons in a network trained to generate images. Each button in the left panel corresponds to a set of 20 neurons. There are seven buttons:

- tree;

- grass;

- door;

- sky;

- cloud;

- brick;

- dome.

GANpaint can add or remove such objects.
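A brush of this kind can be sketched as a local edit to an intermediate feature map. This is a minimal sketch, not GANpaint's code: the unit indices in `BRUSH_UNITS` are placeholders (the real unit sets are not given here), and `apply_brush` and `on_value` are hypothetical names.

```python
import numpy as np

# Hypothetical mapping from a button to its set of 20 units.
# The indices are placeholders, not the units GANpaint actually uses.
BRUSH_UNITS = {
    "tree": list(range(0, 20)),
    "door": list(range(20, 40)),
}


def apply_brush(features, concept, brush_mask, mode="draw", on_value=1.0):
    """Edit `features` (shape: units x H x W) only where `brush_mask` is True.

    mode="draw" forces the concept's units to `on_value` under the brush;
    mode="remove" forces them to zero. Other units and locations are untouched.
    """
    if mode not in ("draw", "remove"):
        raise ValueError("mode must be 'draw' or 'remove'")
    value = on_value if mode == "draw" else 0.0
    edited = features.copy()
    for unit in BRUSH_UNITS[concept]:
        edited[unit][brush_mask] = value
    return edited
```

The edited feature map would then be passed through the remaining generator layers to render the modified image.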

By switching neurons directly, you can observe the structure of the visual world that the neural network has learned to model.

Looking at the output of other generative adversarial networks, an outside observer may wonder: does the GAN really create a new image, or does it simply assemble the scene from objects it encountered during training? Maybe the network just memorizes images and then reproduces them unchanged? According to the authors, the paper and the GANpaint editor show that the networks have genuinely learned some aspects of composition.

One interesting finding is that the same neurons control a given class of objects in different contexts, even when the final appearance of the object varies greatly. The same neurons switch on the concept of a "door" whether you are adding a heavy door to a large stone wall or a small door to a tiny hut. The GAN also understands where objects can and cannot be created. For example, when the door neurons are activated on a building, a door appears in a suitable place. But if you do the same in the sky or on a tree, the attempt usually has no effect.

The paper “GAN Dissection: Visualizing and Understanding Generative Adversarial Networks” was published on November 26, 2018 on the preprint server arXiv.org (arXiv:1811.10597v2).

Interactive demos, videos, code, and data are published on GitHub and on the MIT website.

Source: https://habr.com/ru/post/436088/