Monorepositories: please don't (part 2)

Hello!

So, a new portion of the promised holivar about monorepositories. In the first part, we discussed the translation of an article by a respected engineer from Lyft (and earlier Twitter) about what are the disadvantages of monospositories and why they negate almost all the advantages of this approach. Personally, I largely agree with the arguments given in the original article. But, as promised, to put an end to this discussion, I would like to voice a few more points, in my opinion even more important and more practical.

I'll tell you a little about myself - I worked in small projects and in relatively large ones, used poly-repositories in a project with more than 100 microservices (and SLA 99.999%). At the moment, I am engaged in transferring a small mono-repository (in fact, no, just the front js + java backend) from maven to bazel. Did not work in Google, Facebook, Twitter, i.e. I didn’t have the pleasure of using a mono-repository properly tuned and hung up with a tuling.

So, for starters, what is a mono-repository? Comments to the translation of the original article showed that many people believe that the mono-repository is when all 5 developers of the company work on one repository and store the frontend and backend in it. Of course, it is not. A mono-repository is a way to store all the company's projects, libraries, tools for building, plug-ins for IDE, deployment scripts, and everything else in one large repository. Details here trunkbaseddevelopment.com .

How, then, is the approach called when a company is small and it simply does not have such a number of projects, modules, components? This is also a mono-repository, only small.

Naturally, the original article states that all the problems described begin to appear on a certain scale. Therefore, those who write that their mono-repository for 1.5 diggers works fine, of course, absolutely right.

So, the first fact that I would like to fix: a mono-repository is a great start for your new project . Putting all the code in one pile, at first you get only one advantage, because support for multiple repositories will certainly add a bit of overhead.

What is the problem then? And the problem, as noted in the original article, begins on a certain scale. And most importantly, do not miss the moment when such a scale has already arrived.

Therefore, I am inclined to say that, in essence, the problems that arise are not the problems of the approach itself “put all your code in one pile”, and these are problems of just large source code repositories. Those. If you assume that you have used poly repositories for different services / components, and one of these services has become so large (how much, we will discuss just below), then you will most likely get exactly the same problems, only without the advantages of monorepositions (if they are , of course have).

So, how big should the repository be to start to be considered problematic?

There are definitely 2 indicators on which it depends - the amount of code and the number of developers working with this code. If your project has terabytes of code, but at the same time 1-2 people work with it, then most likely they will barely notice the problems (or at least it will be easier to do nothing, even if they notice :)

How to determine that it is time to think about how to improve your repository? Of course, this is a subjective indicator, most likely your developers will begin to complain that something does not suit them. But the problem is that it may be too late to change something. I’ll give some numbers on my own behalf: if cloning your repository takes more than 10 minutes, if the project builds take more than 20-30 minutes, if the number of developers exceeds 50, and so on.

Now let's go through the list of problems that arise in large repositories (some of them were touched upon in the original article, some are not).

On the one hand, we can say that this is a one-time operation that the developer performs during the initial setup of his workstation. Personally, I often have situations when I want to clone a project into the next folder, dig deeper into it, and then delete it. However, if cloning takes more than 10-20 minutes, it will not be so comfortable.

But besides, you should not forget that before building a project on a CI server, you need to clone the repository for each build agent. And here you start to think about how to save this time, because if each assembly takes 10-20 minutes longer, and the result of the assembly appears 10-20 minutes later, it will not suit anyone. So the repository begins to appear in the images of the virtual machines from which the agents are deployed, additional complexity and additional costs to support this solution appear.

This is a fairly obvious point that has been discussed many times. In fact, if you have a lot of source codes, the build will take a lot of time anyway. A familiar situation when after changing one line of code you have to wait half an hour while the changes are reassembled and tested. In fact, there is only one way out - to use an assembly system built around caching results and incremental assembly.

There are not so many options here - despite the fact that the caching capabilities were added to the same gradle (unfortunately, they were not used in practice), they do not bring practical benefits due to the fact that traditional build systems do not have repeatable results. (reproducible builds). Those. Due to the side effects of the previous build, it will still be necessary to trigger caches at some point (standard

My current project is indexed in Intellij IDEA from 30 to 40 minutes. And yours? Of course, you can open only a part of the project or exclude all unnecessary modules from indexing, but ... The problem is that reindexing occurs every time you switch from one branch to another. That is why I like to clone the project in the next directory. Some come to start caching IDE cache :)

<Picture from D-Caprio with a narrowed eye>

Which CI server are you using? Does it provide a convenient interface for viewing and navigating in assembly logs of several gigabytes in size? Sorry, my not :(

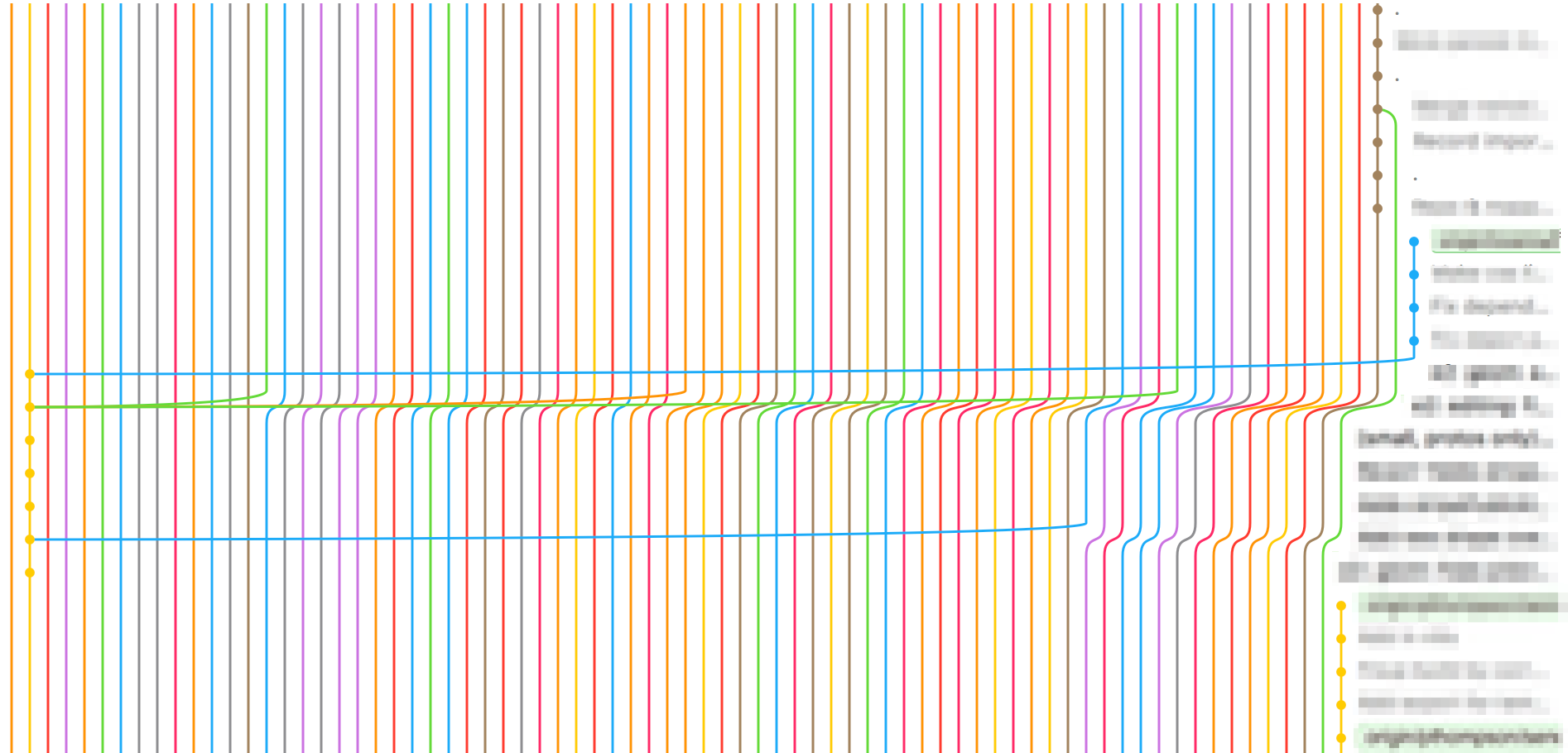

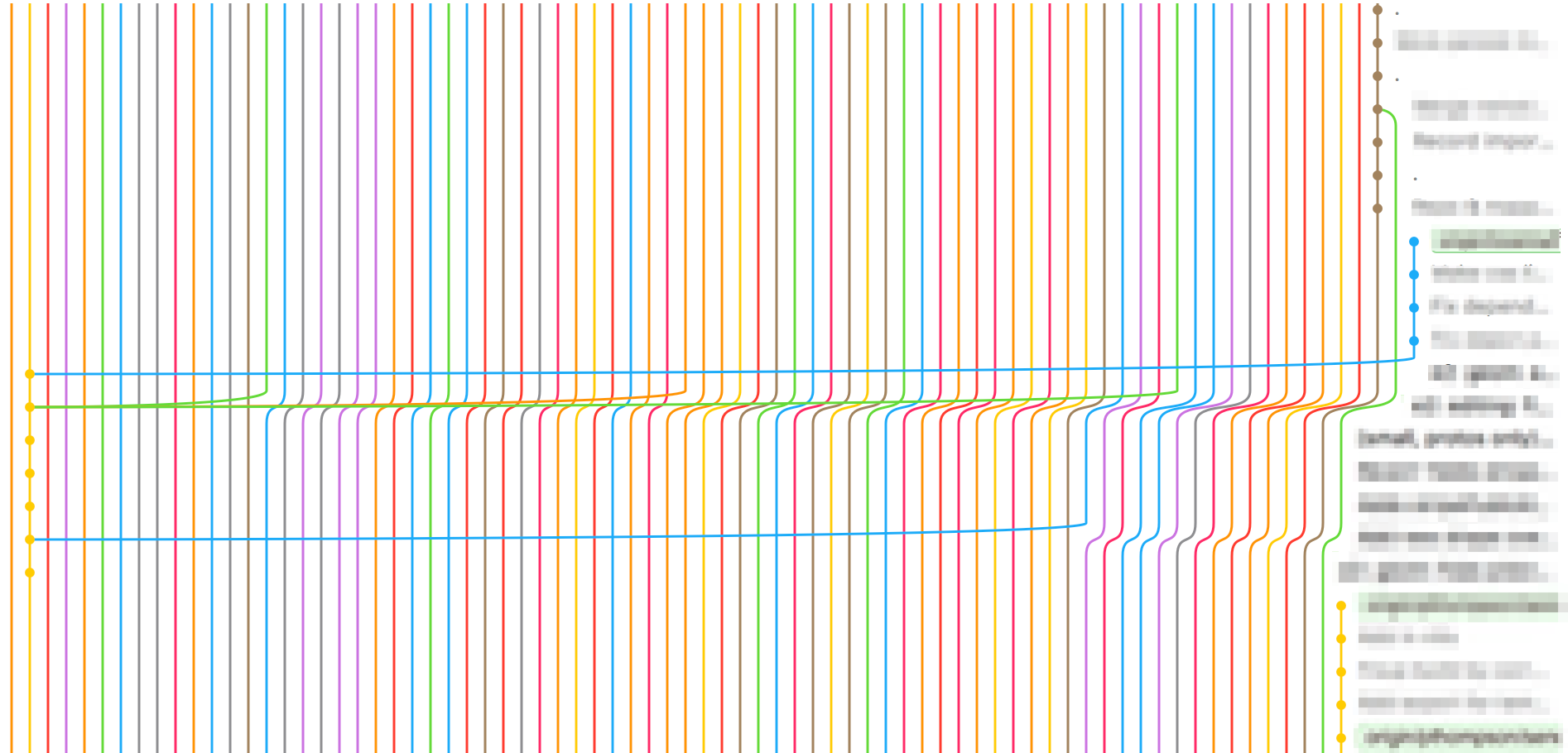

Do you like to watch commit history? I love it, especially in the GUI tool (I better perceive the information visually, do not scold :).

Like? Conveniently? I personally do not!

What happens if someone could run broken tests / non-compiled code into the master? You will of course say that your CI does not allow doing this. What about the unstable tests that are held by the author, and no one else? Now imagine that this code has spread to the machines of 300 developers, and none of them can build a project? What to do in this situation? Wait, when the author will notice and correct? Correct for him? Roll back changes? Of course, ideally, it is worth committing only good code, and writing right away without bugs. Then there will be no such problem.

(for those who are in the tank and did not understand the hints, it’s a negative effect if it happens in the repository with 10 developers and in the repository with 300 will be slightly different)

Ever heard of such a thing? Do you know why she is needed? You will laugh, but this is another tool that should not exist :) Imagine that the build time of your project is 30 minutes. And 100 developers are working on your project. Suppose that each of them shoots 1 commit per day. Now imagine an honest CI that allows merge changes to the master only after they have been applied to the most recent commit from the master (rebase).

Attention, the question: how many hours should be in a day so that such an honest CI-server can smadzhit changes from all developers? The correct answer is 50. Whoever answered correctly, can take the gingerbread from the shelf. Well, or imagine how you just debuted your commit to the most recent commit to the wizard, started the build, and when it was completed, the wizard had already left 20 commits ahead. All over again?

So, merge bot or merge queue is a service that automates the process of re-submitting all merge requests to the fresh master, running the tests and the merge directly, and can also merge commits into batch files and test them together. Very handy thing. See mergify.io , k8s test-infra prow from Google, bors-ng , etc. (I promise to write more about this in the future)

Now to less technical problems:

Honestly, it is still a mystery to me why collecting the entire mono-repository using one common assembly system. Why not build javascript Yarn, java - gradle, Scala - sbt, etc.? If someone knows the answer to this question (does not guess or assumes, and that is, knows) write in the comments.

Of course, it seems obvious that using one build system is better than several different ones. But they still understand that any universal thing is obviously worse than a specialized one, since it most likely has only a subset of all specialized functions. But what's even worse, different programming languages can have different paradigms in terms of assembly, dependency management, etc., which will be very difficult to wrap up in one common wrapper. I don’t want to go into details, give one example about bazel (wait for details in a separate article) - we found 5 independent implementations of javascript build rules for bazel from 5 different companies on GitHub, along with the official one from Google. Worth thinking.

In response to the original CTO article from Chef, Monorepo wrote his answer : please do! . In his reply, he argues that "the main thing in monorepo is that he makes talk and makes flaws visible." He means that when you want to change your API, you will have to find all its uses and discuss your changes with the maintainers of these pieces of code.

So my experience is exactly the opposite. It is clear that this very much depends on the engineering culture in the team, but I see in this approach solid minuses. Imagine that you have used a certain approach that faithfully served you for some time. And so you decided for some reason, solving a similar problem, to use a slightly different method, perhaps more modern. What is the likelihood that the addition of a new approach will pass a review?

In my recent past, I repeatedly received comments like “we already have a proven path, use it” and “if you want to implement a new approach, update the code in all 120 places where the old approach is used and get appruv from all the commands that are responsible for these pieces of code. " Usually this enthusiasm "innovator" ends.

And how much do you think it would cost to write a new service in a new programming language? In polyrepositories - not at all. Create a new repository and write, and even take the most suitable build system. And now the same thing in monorepositories?

I understand perfectly well that “standardization, reuse, sharing of code”, but the project should be developed. In my subjective opinion, a mono-repository rather prevents this.

Recently I was asked: “ are there any open source tools for mono-repositories? ” I replied: “The problem is that tools for mono-repositories, oddly enough, are developed within the mono-compository itself. Therefore, putting them in open source is quite difficult! ”

For an example, look at the project on Github with the bazel plugin for Intellij IDEA . Google develops it in its internal repository, and then “splashes out” parts of it in Github with the loss of commit history, without the ability to send a pull request, and so on. I do not think this is open source (here is an example of my small PR , which was closed instead of merge, and then changes appeared in the next version). By the way, this fact is mentioned in the original article, that mono-suppositories interfere with putting in open-source and creating a community around the project. I think many did not attach much importance to this argument.

Well, if we talk about what to do to avoid all these problems? The advice is exactly one — strive to have a repository as small as possible.

And what about the mono-repository here? And even though this approach makes it impossible for you to have small, light and independent repositories.

What are the disadvantages of the polyrepository approach? I see exactly 1: the inability to track who your API user is. This is especially true of the “nothing nothing” microservices approach, in which the code is not scrambled between microservices. (By the way, do you think this approach is used by anyone in mono-repositories?) This problem, unfortunately, must be solved either by organizational means, or by trying to use code browsing tools that support independent repositories (for example, https://sourcegraph.com / ).

What about comments like “we tried poly-repositories, but then we had to constantly implement features in several repositories at once, which was tiring and we merged everything into one boiler” ? The answer to this is very simple: “one should not confuse the problems of the approach with the incorrect decomposition” . No one argues that exactly one microservice should be in the repository and that's it. In my time of using polypositories we perfectly folded the family of closely related microservices into one repository. However, given that there were more than 100 services, there were more than 20 such repositories. The most important thing to think about in terms of decomposition is how these services will be deployed.

But what about the argument about the version? After all, monorepositories allow not having versions and deploying all from one commit! First, versioning is the simplest of all the problems voiced here. Even in such an old thing as maven there is a maven-version-plugin, which allows you to release a version with just one click. And second, and most importantly, does your company have mobile apps? If so, then you already have versions, and you will not get anywhere from this!

Well, there is still the most important argument in support of mono-repositories - it allows you to refactor the entire code base into one commit! Not really. As mentioned in the original article, due to the limitations that the warmth imposes. You should always keep in mind that for a long time (the duration depends on how your process is organized) you will have 2 versions of the same service in parallel. For example, on my past project, our system was in this state for several hours at every delay. This leads to the fact that it is impossible to conduct global refactorings affecting the interaction interfaces in one commit even in a mono-repository.

So, those respectable and few colleagues who work in Google, Facebook, etc. and they will come here to defend their monopositories, I would like to say: “Do not worry, you are doing everything right, enjoy your tuling, which was spent hundreds of thousands or millions of man hours. They have already been spent, so if you do not use it, then no one will. ”

And to all the rest: “You are not Google, do not use monorepositories!”

P.S. As noted by the highly respected Bobuk in the radio-T podcast when discussing the original article: “There are ~ 20 companies in the world that can do in a mono-repository. The rest should not even try . ”

So, here is the next installment of the promised holy war about monorepositories. In the first part we discussed a translation of an article by a respected engineer from Lyft (formerly Twitter) about the disadvantages of monorepositories and why they cancel out almost all the advantages of the approach. Personally, I largely agree with the arguments in the original article. But, as promised, to wrap up this discussion I would like to raise a few more points that are, in my opinion, even more important and more practical.

A little about myself: I have worked on small projects and on relatively large ones, and I used polyrepositories in a project with more than 100 microservices (and a 99.999% SLA). Right now I am migrating a small monorepository (well, not really one: just a JS frontend plus a Java backend) from Maven to Bazel. I have not worked at Google, Facebook, or Twitter, so I have never had the pleasure of using a properly tuned monorepository equipped with all the tooling.

So, to begin with, what is a monorepository? The comments on the translation of the original article showed that many people think a monorepository is when all 5 of a company's developers work in one repository and keep the frontend and backend in it. Of course, that is not it. A monorepository is a way of storing all of a company's projects, libraries, build tools, IDE plugins, deployment scripts, and everything else in one large repository. Details are at trunkbaseddevelopment.com.

What, then, do you call the approach when the company is small and simply does not have that many projects, modules, and components? That is also a monorepository, just a small one.

Naturally, the original article says that all the problems it describes start to appear at a certain scale. So those who write that their monorepository for one and a half developers works fine are, of course, absolutely right.

So, the first fact I would like to establish: a monorepository is a great start for your new project. By putting all the code in one pile, at first you get nothing but benefits, because maintaining several repositories certainly adds some overhead.

So what is the problem? The problem, as noted in the original article, starts at a certain scale. The main thing is not to miss the moment when that scale has arrived.

That is why I am inclined to say that the problems that arise are not really problems of the “put all your code in one pile” approach itself, but simply problems of large source code repositories. That is, suppose you used polyrepositories for different services and components, and one of those services grew that large (how large, we will discuss just below): you would most likely get exactly the same problems, only without the advantages of monorepositories (if there are any, of course).

So, how big does a repository have to be before it is considered problematic?

There are definitely 2 indicators it depends on: the amount of code and the number of developers working with that code. If your project has terabytes of code but only 1-2 people work on it, they will most likely barely notice the problems (or at least it will be easier to do nothing even if they do notice :)

How do you tell that it is time to think about fixing your repository? Of course, this is subjective: most likely your developers will start complaining that something bothers them. The problem is that by then it may be too late to change anything. So here are some numbers of my own: cloning the repository takes more than 10 minutes, building the project takes more than 20-30 minutes, the number of developers exceeds 50, and so on.
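As a rough illustration, the numbers above can be turned into a tiny check that a team could run against its own measurements. This is only a sketch: the `RepoStats` type, the function name, and the exact cut-offs (the lower bound of the 20-30 minute build range, for instance) are assumptions made for the example, not a standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RepoStats:
    clone_minutes: float  # time for a fresh clone
    build_minutes: float  # time for a full build
    developers: int       # people committing regularly


# Thresholds taken from the rough numbers in the text; tune them for your team.
MAX_CLONE_MINUTES = 10
MAX_BUILD_MINUTES = 20
MAX_DEVELOPERS = 50


def warning_signs(stats: RepoStats) -> List[str]:
    """Return the names of the thresholds that the repository already exceeds."""
    signs = []
    if stats.clone_minutes > MAX_CLONE_MINUTES:
        signs.append("clone time")
    if stats.build_minutes > MAX_BUILD_MINUTES:
        signs.append("build time")
    if stats.developers > MAX_DEVELOPERS:
        signs.append("team size")
    return signs


if __name__ == "__main__":
    print(warning_signs(RepoStats(clone_minutes=12, build_minutes=35, developers=40)))
```

The point of such a check is not the exact numbers but measuring them regularly, so that the moment when the scale "has arrived" does not go unnoticed.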

An interesting fact from personal practice:

I worked on a fairly large monolith in a team of about 50 developers, split into several small teams. Development was done in feature branches, and merging happened right before the feature freeze. Once I spent 3 days merging our team's branch after 6 other teams had merged ahead of me.

Now let's go through the list of problems that arise in large repositories (some of them were touched on in the original article, some were not).

1) Repository download time

On the one hand, you could say this is a one-time operation that a developer performs when first setting up a workstation. Personally, though, I often want to clone a project into a neighboring folder, dig around in it, and then delete it. If cloning takes more than 10-20 minutes, that is no longer so comfortable.

Besides, do not forget that before a project is built on a CI server, the repository has to be cloned on every build agent. At that point you start thinking about how to save that time, because nobody will be happy if every build takes 10-20 minutes longer and its result arrives 10-20 minutes later. So the repository starts showing up in the virtual machine images that the agents are deployed from, which brings extra complexity and extra cost to maintain that solution.

2) Build time

This is a fairly obvious point that has been discussed many times. If you have a lot of source code, the build will take a long time no matter what. It is a familiar situation: after changing one line of code you wait half an hour while the change is rebuilt and tested. There is really only one way out: use a build system designed around result caching and incremental builds.
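To make "built around caching results" a bit more concrete, here is a minimal sketch of the underlying idea: a build step is keyed by a hash of its inputs (its own sources plus the keys of its dependencies), and it is rebuilt only when that key changes. The names `cache_key` and `BuildCache` and the in-memory store are invented for this illustration; real tools work along similar lines but are far more involved.

```python
import hashlib
from typing import Callable, Dict, List, Tuple


def cache_key(sources: Dict[str, bytes], dep_keys: List[str]) -> str:
    """Hash everything the step depends on: its sources and its dependencies' keys."""
    digest = hashlib.sha256()
    for path in sorted(sources):
        content = sources[path]
        digest.update(path.encode("utf-8"))
        digest.update(b"\0")
        digest.update(len(content).to_bytes(8, "big"))
        digest.update(content)
    for key in sorted(dep_keys):
        digest.update(key.encode("utf-8"))
    return digest.hexdigest()


class BuildCache:
    """In-memory stand-in for a build cache (an assumption for this sketch)."""

    def __init__(self) -> None:
        self._store: Dict[str, bytes] = {}
        self.rebuilds = 0  # how many times the compile function actually ran

    def build(
        self,
        sources: Dict[str, bytes],
        dep_keys: List[str],
        compile_fn: Callable[[Dict[str, bytes]], bytes],
    ) -> Tuple[str, bytes]:
        key = cache_key(sources, dep_keys)
        if key not in self._store:
            # Inputs changed (or were never seen): this is the only case where we rebuild.
            self.rebuilds += 1
            self._store[key] = compile_fn(sources)
        return key, self._store[key]


def fake_compile(sources: Dict[str, bytes]) -> bytes:
    """Pretend compiler: just concatenates the sources in a stable order."""
    return b"".join(sources[path] for path in sorted(sources))


if __name__ == "__main__":
    cache = BuildCache()
    lib_key, _ = cache.build({"lib.py": b"x = 1"}, [], fake_compile)
    cache.build({"app.py": b"import lib"}, [lib_key], fake_compile)
    cache.build({"lib.py": b"x = 1"}, [], fake_compile)  # unchanged: cache hit
    print(cache.rebuilds)
```

Note that this only works if the compile step is a pure function of its declared inputs. As soon as a step reads something that is not part of the key, the cache starts returning stale results.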

There are not many options here. Although caching was added to Gradle, for example (unfortunately, I have not tried it in practice), it brings little practical benefit because traditional build systems do not produce repeatable results (reproducible builds). That is, because of side effects from previous builds, you will still have to reset the caches at some point (the standard Maven clean build approach). So the only remaining option is to use Bazel / Buck / Pants and the like. Why that is not so great, we will discuss a little below.

3) IDE Indexing

My current project takes 30 to 40 minutes to index in IntelliJ IDEA. And yours? Of course, you can open only part of the project or exclude all unneeded modules from indexing, but... The problem is that reindexing happens every time you switch from one branch to another. That is exactly why I like to clone the project into a neighboring directory. Some people even end up caching the IDE's cache :)

<Picture from D-Caprio with a narrowed eye>

4) Build logs

Which CI server do you use? Does it provide a convenient interface for viewing and navigating build logs several gigabytes in size? Unfortunately, mine does not :(

5) Commit history

Do you like looking at commit history? I love it, especially in a GUI tool (I take in information better visually, don't judge :).

This is the history of commits in my repository.

Do you like it? Is it convenient? Personally, I don't think so!

6) Broken Tests

What happens if someone manages to push broken tests or non-compiling code to master? Of course, you will say that your CI does not allow that. But what about flaky tests that pass for the author and for nobody else? Now imagine that this code has spread to the machines of 300 developers and none of them can build the project. What do you do in that situation? Wait for the author to notice and fix it? Fix it for them? Roll back the changes? Of course, ideally you should commit only good code and write it without bugs from the start. Then there would be no such problem.

(For those who missed the hint: the negative effect of this happening in a repository with 10 developers and in a repository with 300 will be slightly different.)

7) Merge bot

Ever heard of such a thing? Do you know why it is needed? You will laugh, but this is yet another tool that should not have to exist :) Imagine that your project takes 30 minutes to build, and 100 developers work on it. Suppose each of them makes 1 commit per day. Now imagine an honest CI that allows changes to be merged into master only after they have been rebased onto the most recent commit in master and have passed the build there.

Now, the question: how many hours would a day need to have for such an honest CI server to merge the changes from all the developers? The correct answer is 50 (100 commits at 30 minutes each, built one after another). Whoever got it right can take a cookie from the shelf. Or imagine that you have just rebased your commit onto the latest commit in master and started the build, and by the time it finishes, master has moved 20 commits ahead. Start all over again?
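The arithmetic behind that answer is easy to check. The sketch below assumes, as the thought experiment does, that builds run strictly one after another; the function names are made up for the example.

```python
def hours_to_merge_serially(developers: int, commits_per_dev: int, build_minutes: int) -> float:
    """Hours of CI time needed if every commit is rebased, built, and merged in turn."""
    total_commits = developers * commits_per_dev
    return total_commits * build_minutes / 60


def max_merges_per_day(build_minutes: int) -> int:
    """How many serial builds fit into a 24-hour day."""
    return (24 * 60) // build_minutes


if __name__ == "__main__":
    print(hours_to_merge_serially(developers=100, commits_per_dev=1, build_minutes=30))
    print(max_merges_per_day(build_minutes=30))
```

With a 30-minute build, only 48 serial merges fit into a day, so with 100 daily commits the queue grows by more than 50 commits every day.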

So, a merge bot or merge queue is a service that automates rebasing every merge request onto fresh master, running the tests, and doing the merge itself; it can also combine commits into batches and test them together. A very handy thing. See mergify.io, k8s test-infra Prow from Google, bors-ng, etc. (I promise to write more about this in the future.)
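The batching idea can be sketched in a few lines. This is a toy model, not how any of the tools above are actually implemented: `passes` stands in for "rebase onto master, build, and run the tests", and a failing batch is split in half until the offending commit is isolated.

```python
from typing import Callable, List, Sequence, Tuple


def merge_in_batches(
    queue: Sequence[str],
    batch_size: int,
    passes: Callable[[List[str]], bool],
) -> Tuple[List[str], List[str], int]:
    """Merge queued commits in batches; return (merged, rejected, builds_run)."""
    merged: List[str] = []
    rejected: List[str] = []
    builds = 0

    def try_merge(batch: List[str]) -> None:
        nonlocal builds
        if not batch:
            return
        builds += 1
        # Test the batch on top of everything that has already been merged.
        if passes(merged + batch):
            merged.extend(batch)
        elif len(batch) == 1:
            rejected.extend(batch)
        else:
            # The batch is broken: split it to find the offending commit(s).
            mid = len(batch) // 2
            try_merge(batch[:mid])
            try_merge(batch[mid:])

    for start in range(0, len(queue), batch_size):
        try_merge(list(queue[start:start + batch_size]))
    return merged, rejected, builds


if __name__ == "__main__":
    commits = ["a", "b", "c", "bad", "d", "e", "f", "g"]
    result = merge_in_batches(commits, batch_size=4, passes=lambda cs: "bad" not in cs)
    print(result)
```

In this example, eight commits with one broken commit among them need 6 builds instead of 8. When all commits are good, a batch of four costs a single build, which is what makes the 50-hour day from the previous paragraph fit into a real one.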

Now to less technical problems:

8) Using a single build tool

Honestly, it is still a mystery to me why the entire monorepository has to be built with one common build system. Why not build JavaScript with Yarn, Java with Gradle, Scala with sbt, and so on? If anyone knows the answer to this question (not guesses or assumes, but actually knows), write in the comments.

Of course, it seems obvious that using one build system is better than using several different ones. But everyone also understands that any universal thing is inevitably worse than a specialized one, since it most likely offers only a subset of each specialized tool's features. What is even worse, different programming languages can have different paradigms for builds, dependency management, and so on, which are very hard to fit under one common wrapper. I do not want to go into details, so here is just one example about Bazel (wait for details in a separate article): on GitHub we found 5 independent implementations of JavaScript build rules for Bazel from 5 different companies, alongside the official one from Google. Worth thinking about.

9) General approaches

In response to the original article, the CTO of Chef wrote his own reply: “Monorepo: please do!”. In it he argues that “the main thing about a monorepo is that it forces conversations and makes flaws visible.” He means that when you want to change your API, you will have to find all its usages and discuss your changes with the maintainers of those pieces of code.

Well, my experience is exactly the opposite. Clearly this depends a lot on the team's engineering culture, but I see nothing but downsides in this. Imagine that you have been using a certain approach that has served you faithfully for some time. Then, solving a similar problem, you decide for some reason to use a slightly different, perhaps more modern, method. What are the chances that adding the new approach will pass review?

In my recent past I repeatedly got comments like “we already have a proven way, use it” and “if you want to introduce a new approach, update the code in all 120 places where the old approach is used and get approval from all the teams responsible for those pieces of code.” That is usually where the “innovator's” enthusiasm ends.

And how much do you think it costs to write a new service in a new programming language? With polyrepositories, nothing at all. Create a new repository and write, and pick the most suitable build system while you are at it. And now the same thing in a monorepository?

I understand perfectly well the point about “standardization, reuse, code sharing”, but a project has to evolve. In my subjective opinion, a monorepository tends to get in the way of that.

10) Open source

Recently I was asked: “Are there any open source tools for monorepositories?” I answered: “The problem is that tools for monorepositories, oddly enough, are developed inside the monorepository itself. So releasing them as open source is quite difficult!”

For an example, look at the GitHub project with the Bazel plugin for IntelliJ IDEA. Google develops it in its internal repository and then “dumps” parts of it onto GitHub, losing the commit history, with no ability to send a pull request, and so on. I do not consider this open source (here is an example: my small PR was closed instead of merged, and then the changes appeared in the next version). By the way, the original article mentions this fact too: monorepositories get in the way of open-sourcing code and building a community around a project. I think many people did not give this argument enough weight.

Alternatives

So, what should you do to avoid all these problems? There is exactly one piece of advice: strive to keep each repository as small as possible.

And what does the monorepository have to do with it? Simply that this approach takes away your ability to have small, lightweight, independent repositories.

What are the disadvantages of the polyrepository approach? I see exactly 1: you cannot easily track who uses your API. This is especially true of the “share nothing” approach to microservices, in which no code is shared between microservices. (By the way, do you think anyone uses that approach in monorepositories?) Unfortunately, this problem has to be solved either by organizational means or by using code browsing tools that support independent repositories (for example, https://sourcegraph.com/).

What about comments like “we tried polyrepositories, but then we constantly had to implement features in several repositories at once, which was tiring, so we merged everything into one pot”? The answer is very simple: do not confuse problems of the approach with incorrect decomposition. Nobody is saying that a repository must contain exactly one microservice and nothing else. When I was using polyrepositories, we happily put each family of closely related microservices into a single repository. Still, given that there were more than 100 services, there were more than 20 such repositories. The most important thing to think about when decomposing is how these services will be deployed.

But what about the argument about versions? After all, monorepositories let you avoid versions altogether and deploy everything from a single commit! First, versioning is the simplest of all the problems raised here. Even something as old as Maven has the maven-version-plugin, which lets you release a version in a single click. And second, and most importantly: does your company have mobile apps? If so, you already have versions, and there is no getting away from that!

Well, there remains the most important argument in favor of monorepositories: they let you refactor the entire codebase in one commit! Not really. As mentioned in the original article, the reason is the limitations that deployment imposes. You should always keep in mind that for a considerable time (how long depends on how your process is set up) you will have 2 versions of the same service running in parallel. For example, on my previous project the system was in this state for several hours during every deploy. As a result, global refactorings that affect the interfaces between services cannot be done in one commit, even in a monorepository.
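In practice this means that an interface change has to go through an intermediate step in which the receiving side understands both the old and the new shape. A minimal sketch, assuming a hypothetical rename of a message field from `customer` to `customer_id` (both field names and the function are invented for this illustration):

```python
from typing import Any, Dict


def parse_order(message: Dict[str, Any]) -> Dict[str, Any]:
    """Accept both the old and the new message shape while two versions are live."""
    if "customer_id" in message:
        # New senders already use the renamed field.
        customer_id = message["customer_id"]
    else:
        # Old senders are still running until the rollout completes.
        customer_id = message["customer"]
    return {"customer_id": customer_id, "amount": message["amount"]}


if __name__ == "__main__":
    print(parse_order({"customer": 7, "amount": 10}))
    print(parse_order({"customer_id": 7, "amount": 10}))
```

Only after every sender has been redeployed can the old branch be deleted, which makes this at least two commits and two deploys, no matter how many repositories the code lives in.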

In place of a conclusion:

So, to those respected and few colleagues who work at Google, Facebook, etc. and will come here to defend their monorepositories, I would like to say: “Do not worry, you are doing everything right. Enjoy your tooling, on which hundreds of thousands or millions of person-hours have been spent. They have already been spent, so if you do not use it, nobody will.”

And to everyone else: “You are not Google, do not use monorepositories!”

P.S. As the highly respected Bobuk noted on the Radio-T podcast when discussing the original article: “There are ~20 companies in the world that can do a monorepository. The rest should not even try.”

Source: https://habr.com/ru/post/436264/