Top 9 Security Practices in Kubernetes

Translator's note: This is not the first article with general Kubernetes security recommendations that we have translated for our blog. However, its relevance (at least as a reminder of simple but important things that should not be neglected for lack of time) is only reinforced by the recent events the author mentions at the beginning. The author is Connor Gilbert, a product manager at StackRox, which offers a ready-made platform for securing applications deployed in Kubernetes clusters. So, here is his advice to readers of the CNCF blog...

NB : To make the article more informative, for some of the terms / methods mentioned by the author, we added links to relevant documentation.

Last month, Kubernetes, the world's most popular container orchestration system, discovered the first major security vulnerability that hit the project's ecosystem. Vulnerability CVE-2018-1002105 allows attackers to compromise clusters through the API-server Kubernetes, which allows to execute malicious code to install malware, etc.

Earlier in the same year, the incorrect configuration of the Kubernetes control panel led to the fact that a software for mining cryptocurrencies was installed on Tesla resources. Then, the attackers took advantage of the fact that one of the Kubernetes panels was not password-protected, which allowed them to access one of the pods with an account to access the larger Tesla infrastructure in AWS.

Organizations that force the process of introducing containers and their orchestration must also take mandatory steps to protect such a critical part of their infrastructure. Below are the nine best practices in Kubernetes based on customer data: follow them to better protect your infrastructure.

In each quarterly release [Kubernetes], there are not only bug fixes, but also new security features: we recommend working with the latest stable version to take advantage of them.

Using the latest release with the latest patches will be especially relevant in light of the recent detection of CVE-2018-1002105. Updates and support may be more difficult than the new features offered in releases, so plan your updates at least once a quarter.

Significantly simplify the update can use providers managed Kubernetes-solutions.

Use RBAC (Role-Based Access Control) to control who can access the Kubernetes API and what rights they have. Usually RBAC is enabled by default in Kubernetes version 1.6 and higher (or later in the case of some providers), but if you have been updated since then and did not change the configuration, you should double-check your settings. Because of the mechanism by which authorization controllers work together in Kubernetes (for a general sequence of operations, see the article “ What happens in Kubernetes when starting the kubectl run? Part 1 ”) , it is necessary to have RBAC and outdated ABAC enabled (Attribute-Based Access Control).

However, it is not enough to include RBAC - it still needs to be used effectively. In general, rights should be avoided for the entire cluster (cluster-wide) , giving preference to rights in certain namespaces. Avoid granting cluster administrator privileges to anyone even for debugging - it is much safer to allocate rights only on a case-by-case basis.

You can view cluster roles and just roles with the commands

If the application requires access to the Kubernetes API, create separate service accounts ( give more details about them in this material - approx. Transl. ) And give them the minimum set of rights required for each use case. This approach is much better than issuing too large rights to the default account in the namespace.

Most applications do not need access to the API at all: for them, you can set the

Creating separate namespaces is important as the first level of component isolation. It is much easier to adjust security settings — for example, network policies — when different types of workloads are deployed in separate namespaces.

Does your team use namespaces effectively? Check their list for non-standard (not created by default):

Good practice for limiting the potential consequences of a compromise is to run workloads with confidential data on a dedicated set of machines. This approach reduces the risk that an application with sensitive data will be accessed by a less secure application running in the same container environment or on the same host. For example, a kubelet of a compromised node usually has access to the contents of secrets only if they are mounted to pods that are scheduled to be executed on the same node. If important secrets can be found on the many nodes of the cluster, the attacker will have more opportunities to get them.

Separation can be done using node pools ( node pools (in the cloud or for on-premises)), as well as Kubernetes control mechanisms, such as namespaces, taints, tolerations, and others.

Sensitive metadata — for example, kubelet administrative credentials — can be stolen or used with malicious intent to escalate privileges in a cluster. For example, a recent find within Shop bounds from Shopify showed in detail how a user could exceed permissions by getting metadata from a cloud provider using specially crafted data for one of the microservices.

In GKE, the metadata concealment function of hiding metadata changes the cluster deployment mechanism in such a way as to avoid such a problem, and we recommend using it until a permanent solution is implemented.

Similar countermeasures may be required in other environments.

Network Policies - Network Policies - allow you to control network access to and from containerized applications. To use them, you must have a network provider with the support of such a resource; in the case of providers of managed Kubernetes solutions like Google Kubernetes Engine (GKE), you will need to enable support. (Enabling network policies for existing clusters in GKE will require a short rolling update.)

Once everything is ready, start with simple default network policies — say, blocking (by default) traffic from other namespaces.

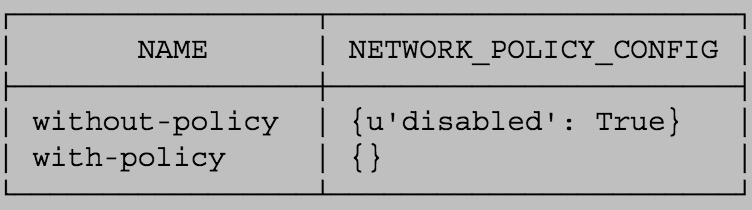

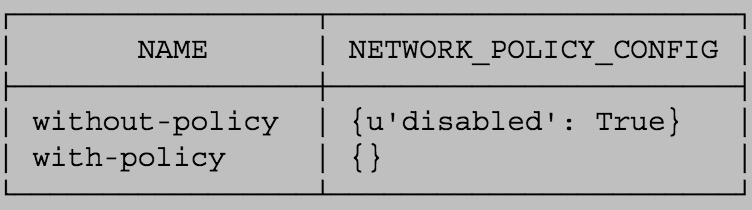

If you use the Google Container Engine, you can check if policy support is enabled in running clusters:

The pod's security policy sets the default values used to run workloads in the cluster. Consider defining the policy and turning on the ad security controller's admission. Pod Security Policy: the instructions for these steps vary depending on the cloud provider or deployment model used.

For starters, you can require disabling in

To improve node security, you can perform the following steps:

Make sure that the audit logs are enabled and that you are tracking the appearance of unusual or unwanted API calls in them, especially in the context of any authorization failures — such entries will have a message with the status “Forbidden”. Authorization failures can mean that the attacker is trying to use the received credentials.

Managed solution providers (including GKE) provide access to this data in their interfaces and can assist you in setting up notifications in case of authorization failures.

Follow these guidelines to make the Kubernetes cluster more secure. Remember that even after the cluster is configured safely, it is necessary to ensure the security in other aspects of the configuration and operation of the containers. To improve the security of the technology stack, examine the tools that provide a central system for managing deployed containers, continuously monitoring and protecting containers and cloud (native cloud) applications.

Read also in our blog:

NB: To make the article more informative, we have added links to the relevant documentation for some of the terms and methods the author mentions.

Last month, Kubernetes, the world's most popular container orchestration system, experienced the first major security vulnerability to hit the project's ecosystem. The vulnerability, CVE-2018-1002105, allows attackers to compromise clusters through the Kubernetes API server and then execute malicious code, for example to install malware.

Earlier the same year, a misconfigured Kubernetes dashboard led to cryptocurrency-mining software being installed on Tesla's resources. The attackers took advantage of the fact that one of Tesla's Kubernetes dashboards was not password-protected, which let them access a pod holding account credentials for Tesla's larger AWS infrastructure.

Organizations that are accelerating their adoption of containers and container orchestration must also take the necessary steps to protect such a critical part of their infrastructure. Below are nine Kubernetes security best practices based on customer data; follow them to better protect your infrastructure.

1. Update to the latest version.

Each quarterly Kubernetes release brings not only bug fixes but also new security features; to take advantage of them, we recommend running the latest stable version.

Running the latest release with the latest patches is especially important in light of the recent discovery of CVE-2018-1002105. Upgrades and support get harder the further behind you fall, so plan to upgrade at least once a quarter.

Using a managed Kubernetes provider can make upgrades significantly easier.
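Since a new minor version ships roughly every quarter, the number of minor versions your cluster lags behind the latest stable release is a rough count of quarters behind. A minimal Bash sketch of that check; the function name `minor_versions_behind` is hypothetical, and it assumes you pass both versions yourself as strings such as `v1.11.5` (the latest one taken from the Kubernetes release notes).

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints how many minor versions the first version string
# lags behind the second, e.g. v1.11.5 vs v1.13.1 -> 2.
# Suffixes such as "-gke.5" after the patch number are ignored.
minor_versions_behind() {
  local cur_major cur_minor lat_major lat_minor rest
  # Strip a leading "v", then split on dots; "rest" absorbs patch and suffix.
  IFS=. read -r cur_major cur_minor rest <<< "${1#v}"
  IFS=. read -r lat_major lat_minor rest <<< "${2#v}"
  if [[ "$cur_major" != "$lat_major" ]]; then
    echo "major versions differ: $1 vs $2" >&2
    return 1
  fi
  echo $(( lat_minor - cur_minor ))
}
```

For example, with `jq` installed (an assumption), `minor_versions_behind "$(kubectl version -o json | jq -r .serverVersion.gitVersion)" v1.13.1` compares the live API server against a release you name; a growing number is a signal to schedule the next upgrade.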

2. Enable role-based access control (RBAC)

Use RBAC (Role-Based Access Control) to control who can access the Kubernetes API and what permissions they have. RBAC is usually enabled by default in Kubernetes 1.6 and later (later still for some managed providers), but if you have upgraded since then without changing your configuration, you should double-check your settings. Because of the way Kubernetes authorizers work together (for the general sequence of operations, see the article “What happens in Kubernetes when you run kubectl run? Part 1”), you need to both enable RBAC and disable the legacy ABAC (Attribute-Based Access Control): a request is allowed as soon as any enabled authorizer approves it, so a permissive ABAC policy can grant access that RBAC would deny.

However, enabling RBAC is not enough; it also needs to be used effectively. In general, avoid cluster-wide permissions in favor of permissions in specific namespaces. Avoid granting cluster administrator privileges to anyone, even for debugging; it is much safer to grant permissions only as needed, case by case.
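Other ClusterRoleBindings besides the default one named cluster-admin can also point at the cluster-admin role, so it helps to search all of them. A minimal Bash sketch, assuming input in the three-column format produced by the `kubectl get ... -o custom-columns` command shown in the comment; the function name `cluster_admin_bindings` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical filter: reads "NAME ROLE SUBJECTS" rows without a header line
# and prints "binding: subjects" for every binding whose role is cluster-admin.
# Example usage (requires cluster access):
#   kubectl get clusterrolebindings --no-headers \
#     -o custom-columns='NAME:.metadata.name,ROLE:.roleRef.name,SUBJECTS:.subjects[*].name' \
#     | cluster_admin_bindings
cluster_admin_bindings() {
  awk '$2 == "cluster-admin" { print $1 ": " $3 }'
}
```

Each output line names a binding and its subjects; any entry beyond the expected system:masters group deserves a closer look.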

You can review cluster-wide and namespaced role bindings with `kubectl get clusterrolebinding` or `kubectl get rolebinding --all-namespaces`. Here, for example, is a quick way to check who has been granted the cluster-admin role (in this example, only the system:masters group):

```shell
$ kubectl describe clusterrolebinding cluster-admin
Name:         cluster-admin
Labels:       kubernetes.io/bootstrapping=rbac-defaults
Annotations:  rbac.authorization.kubernetes.io/autoupdate=true
Role:
  Kind:  ClusterRole
  Name:  cluster-admin
Subjects:
  Kind   Name            Namespace
  ----   ----            ---------
  Group  system:masters
```

If an application needs access to the Kubernetes API, create separate service accounts (we cover them in more detail in this article; translator's note) and give each one the minimum set of permissions its use case requires. This is much better than granting overly broad permissions to the namespace's default account.
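A minimal sketch covering both cases: a dedicated account bound to a narrow namespaced Role for applications that call the API, and an account without a mounted token for those that do not. Both are Bash functions that only print manifests. The function names, the example namespace and account names, and the restriction to core-API resources are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that only print manifests to stdout; review the output,
# then apply it with `kubectl apply -f -`.

# For workloads that never call the Kubernetes API: a dedicated service
# account whose token is not mounted into pods by default.
sa_without_api_access() {
  local ns="$1" name="$2"
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${name}
  namespace: ${ns}
automountServiceAccountToken: false
EOF
}

# For workloads that do need the API: a dedicated service account bound to a
# namespaced Role granting only the given verbs on one core-API resource type.
sa_with_minimal_role() {
  local ns="$1" name="$2" resource="$3" verbs="${4//,/, }"  # "get,list" -> "get, list"
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${name}
  namespace: ${ns}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ${name}
  namespace: ${ns}
rules:
- apiGroups: [""]        # "" is the core API group (pods, configmaps, ...)
  resources: [${resource}]
  verbs: [${verbs}]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ${name}
  namespace: ${ns}
subjects:
- kind: ServiceAccount
  name: ${name}
  namespace: ${ns}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ${name}
EOF
}
```

For instance, `sa_with_minimal_role team-a config-reader configmaps get,list | kubectl apply -f -` would create an account that can only read ConfigMaps in `team-a`; pods then select it via `spec.serviceAccountName`.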

Most applications do not need access to the API at all; for them, you can set `automountServiceAccountToken` to `false`.

3. Use namespaces to set security boundaries.

Creating separate namespaces is important as a first level of isolation between components. It is much easier to apply security controls, such as network policies, when different types of workloads are deployed in separate namespaces.

Does your team use namespaces effectively? Check the list for any non-default namespaces (ones that were not created automatically):

```shell
$ kubectl get ns
NAME          STATUS    AGE
default       Active    16m
kube-public   Active    16m
kube-system   Active    16m
```

4. Separate sensitive workloads.

A good practice for limiting the potential impact of a compromise is to run workloads that handle sensitive data on a dedicated set of machines. This reduces the risk that a sensitive application is accessed through a less secure application running in the same container runtime or on the same host. For example, a compromised node's kubelet can usually access the contents of secrets only if they are mounted into pods scheduled on that node. If important secrets are scheduled onto many nodes across the cluster, an attacker has more opportunities to steal them.

You can achieve this separation with node pools (in the cloud or on-premises) and with Kubernetes mechanisms such as namespaces, taints, tolerations, and others.
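A minimal sketch of combining these mechanisms: taint and label the dedicated nodes so other pods are kept away, then give sensitive pods a matching toleration and node selector. The `dedicated=sensitive` label and taint are a hypothetical convention, not Kubernetes defaults, and the helper only prints a Pod manifest.

```shell
#!/usr/bin/env bash
# One-time node setup (requires cluster access; <node-name> is a placeholder):
#   kubectl label nodes <node-name> dedicated=sensitive
#   kubectl taint nodes <node-name> dedicated=sensitive:NoSchedule

# Hypothetical helper: print a Pod manifest that tolerates the taint above and
# is pinned to nodes carrying the matching (hypothetical) label.
sensitive_pod_manifest() {
  local ns="$1" name="$2" image="$3"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
  namespace: ${ns}
spec:
  nodeSelector:
    dedicated: sensitive
  tolerations:
  - key: dedicated
    operator: Equal
    value: sensitive
    effect: NoSchedule
  containers:
  - name: ${name}
    image: ${image}
EOF
}
```

Both halves matter: the taint keeps ordinary pods off the dedicated nodes, while the node selector keeps the sensitive pod from being scheduled anywhere else, which the toleration alone would not do.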

5. Protect access to cloud metadata

Sensitive metadata, such as kubelet administrative credentials, can be stolen or misused to escalate privileges in a cluster. For example, a recent finding in Shopify's bug bounty program showed in detail how a user could escalate privileges by sending specially crafted data to one of the microservices, causing it to leak information from the cloud provider's metadata service.

GKE's metadata concealment feature changes the cluster deployment mechanism so as to avoid this exposure, and we recommend using it until a permanent solution is implemented.

Similar countermeasures may be required in other environments.
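Where no provider feature like metadata concealment is available, one possible countermeasure is a NetworkPolicy that blocks pod egress to the link-local metadata address 169.254.169.254, which AWS, Google Cloud, and Azure all use. A minimal sketch, assuming a network provider that enforces egress policies (see practice 6); the function and policy names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a NetworkPolicy that lets every pod in a namespace
# reach in-cluster pods and any IPv4 destination except the metadata address.
deny_metadata_egress() {
  local ns="$1"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-cloud-metadata
  namespace: ${ns}
spec:
  podSelector: {}            # every pod in the namespace
  policyTypes:
  - Egress
  egress:
  - to:
    - namespaceSelector: {}  # explicitly allow in-cluster pods (e.g. DNS)
  - to:
    - ipBlock:
        cidr: 0.0.0.0/0
        except:
        - 169.254.169.254/32
EOF
}
```

Such a policy may not cover pods that use `hostNetwork: true`, so it complements, rather than replaces, provider-level protection.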

6. Create and define cluster network policies

Network Policies - Network Policies - allow you to control network access to and from containerized applications. To use them, you must have a network provider with the support of such a resource; in the case of providers of managed Kubernetes solutions like Google Kubernetes Engine (GKE), you will need to enable support. (Enabling network policies for existing clusters in GKE will require a short rolling update.)

Once everything is ready, start with simple default network policies — say, blocking (by default) traffic from other namespaces.
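A minimal sketch of such a default policy, written as a Bash function that prints the manifest for one namespace; the function and policy names are hypothetical. An empty `podSelector` selects every pod, and a `from` rule containing only a `podSelector` matches pods in the policy's own namespace, so ingress from other namespaces is dropped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a NetworkPolicy that, once applied to a namespace,
# admits ingress to its pods only from pods in that same namespace.
deny_from_other_namespaces() {
  local ns="$1"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-from-other-namespaces
  namespace: ${ns}
spec:
  podSelector: {}          # applies to every pod in the namespace
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector: {}      # any pod, but only within this namespace
EOF
}
```

Applying it with `deny_from_other_namespaces team-a | kubectl apply -f -` also cuts off traffic from components that live in other namespaces, such as an ingress controller, so those need explicit allow rules.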

If you use Google Kubernetes Engine, you can check whether network policy support is enabled on your running clusters:

```shell
$ gcloud container clusters list \
    --format='table[box](name,addonsConfig.networkPolicyConfig)'
```

7. Set Cluster Pod Security Policy

A Pod Security Policy sets defaults for how workloads are allowed to run in your cluster. Consider defining a policy and enabling the PodSecurityPolicy admission controller; the instructions for these steps vary depending on your cloud provider or deployment model.
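A minimal sketch of a starting policy in the `policy/v1beta1` API that was current when this article was written: it forbids privileged containers and privilege escalation and requires every container to drop `NET_RAW`. The policy name is hypothetical, and the `RunAsAny` strategies are deliberately permissive placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a baseline PodSecurityPolicy (policy/v1beta1).
restricted_psp() {
  cat <<'EOF'
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: baseline-drop-net-raw
spec:
  privileged: false
  allowPrivilegeEscalation: false
  requiredDropCapabilities:
  - NET_RAW                 # blocks raw sockets used in some spoofing attacks
  # The four strategies below are required fields; RunAsAny keeps them permissive.
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
  - configMap
  - secret
  - emptyDir
  - projected
  - downwardAPI
  - persistentVolumeClaim
EOF
}
```

Once the admission controller is on, a pod is admitted only if the user or service account creating it is authorized to `use` a matching policy, so you also need RBAC rules granting that verb. PodSecurityPolicy was later deprecated and removed in Kubernetes 1.25; on current clusters, the same restriction is typically expressed through each container's `securityContext.capabilities.drop`.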

As a starting point, you can require that containers drop the NET_RAW capability to defend against certain types of network spoofing attacks.

8. Work on node security

To improve node security, you can perform the following steps:

- Make sure the host is secure and properly configured. One way to do this is to check it against the CIS Benchmarks; many products include an automated checker that assesses a system's conformance with these standards.

- Monitor network access to important ports. Make sure your network blocks access to the ports the kubelet uses, including 10250 and 10255. Consider restricting access to the Kubernetes API server to trusted networks only. In clusters that did not require authentication and authorization on the kubelet API, attackers have used access to these ports to run cryptocurrency miners.

- Minimize administrative access to Kubernetes nodes. Access to cluster nodes should generally be restricted: debugging and other tasks can usually be done without direct access to the node.
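A minimal sketch for the port-related item: a probe to run from a network that should not reach your nodes, plus a kubelet configuration fragment that turns off anonymous access on port 10250 and disables the read-only port 10255. The function names are hypothetical; the probe relies on Bash's `/dev/tcp` and coreutils `timeout`, and the fragment's fields must be merged into your existing kubelet configuration file.

```shell
#!/usr/bin/env bash
# Hypothetical probe: reports whether a TCP port accepts connections, using
# Bash's /dev/tcp pseudo-device with a 3-second timeout.
port_status() {
  local host="$1" port="$2"
  if timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "open"
  else
    echo "closed-or-filtered"
  fi
}

# Hypothetical helper: print a KubeletConfiguration fragment that requires
# authenticated, authorized requests on 10250 and disables read-only port 10255.
kubelet_hardening_config() {
  cat <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
authorization:
  mode: Webhook
readOnlyPort: 0
EOF
}
```

For example, `port_status node-1.example.internal 10250` (a placeholder host name) should report `closed-or-filtered` when run from outside the trusted network. With webhook authentication and authorization, the kubelet asks the API server to validate each request, so RBAC also governs access to the kubelet API.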

9. Enable Audit Logging

Make sure audit logs are enabled and that you monitor them for unusual or unwanted API calls, especially authorization failures: such entries have a status message of “Forbidden”. Authorization failures can mean that an attacker is trying to use stolen credentials.
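A minimal sketch, assuming a self-managed cluster where you control the API server flags: a catch-all audit policy at the `Metadata` level, which records who made each request and its response status, and a filter that counts 403 Forbidden responses per user. The function names are hypothetical, and the filter assumes the default JSON log format with one compact event per line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a minimal audit policy that records request
# metadata (user, verb, resource, response status) for every request.
audit_policy() {
  cat <<'EOF'
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
- level: Metadata
EOF
}

# Hypothetical filter: reads JSON-lines audit events on stdin and counts
# authorization failures (HTTP 403) per user, most frequent first.
forbidden_by_user() {
  grep '"code":403' \
    | grep -o '"user":{"username":"[^"]*"' \
    | sed 's/.*"username":"//; s/"$//' \
    | sort | uniq -c | sort -rn
}
```

Write the policy with `audit_policy > audit-policy.yaml`, pass it to kube-apiserver with `--audit-policy-file`, and choose a log location with `--audit-log-path`; then, for example, `forbidden_by_user < /var/log/kubernetes/audit.log` (an example path) lists the accounts with the most authorization failures.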

Managed Kubernetes providers, including GKE, give access to this data in their consoles and can help you set up alerts for authorization failures.

Looking to the future

Follow these guidelines to make your Kubernetes cluster more secure. Remember that even once the cluster itself is configured securely, you still need to secure other aspects of how containers are configured and operated. To strengthen the security of your technology stack, look at tools that provide a central system for managing deployed containers and that continuously monitor and protect containers and cloud-native applications.

P.S. from the translator

Read also in our blog:

- “11 ways to (not) become a victim of hacking in Kubernetes”;

- “Understanding RBAC in Kubernetes”;

- “OPA and SPIFFE: two new CNCF projects for the security of cloud applications”;

- “Vulnerable Docker VM: a virtual puzzle for Docker and pentesting”;

- “7 best practices for operating containers, according to Google”.

Source: https://habr.com/ru/post/436300/