Machine Learning for Vertica

annotation

In this article I want to share my own experience with machine learning in the data store on Vertica.

Let's be honest, I am not an expert analyst who can describe in detail the whole diversity of research methods and data prediction algorithms. But still, being an expert on Vertica and having a basic experience with ML, I will try to talk about ways to work with predictive analysis in Vertica using the built-in functionality of the server and the language R.

Machine Learning Library Vertica

Starting with version 7, Vertica was supplemented with the Machine Learning library, with which you can:

- prepare sample data for machine learning;

- train machine learning models on prepared data;

- Conduct a predictive analysis of storage data on stored machine learning models.

The library comes immediately with Vertica installation for all versions, including the free Community. Working with it is framed in the form of calling functions from under SQL, which are described in detail in the documentation with examples of use on the prepared demonstration data.

An example of working with ML in Vertica

As a simple example of how ML works, I took the sample data for mtcars cars that are part of the ML data sample for Vertica. This data includes two tables:

- mtcars_train - prepared for training model of machine learning data

- mtcars - data for analysis

Let's look at the data for training:

=>SELECT * FROM mtcars_train;

In the data set on the models of cars painted their characteristics. Let's try to train machine learning so that according to the characteristics of cars it was possible to predict what type of gearbox is involved in a car - a manual gearbox or automatic gearbox. To do this, we need to build a logistic regression model on the prepared data, finding the dependence of the box type type “am” and the weight fields of the car “wt”, the number of cylinders “cyl” and the number of speeds in the box “gear”:

=>SELECT LOGISTIC_REG('logistic_reg_mtcars', 'mtcars_train', 'am', 'cyl, wt, gear'); Finished in 19 iterations The called function analyzed the relationship between am and the cyl, wt, gear fields, revealed the dependency formula, and recorded the dependency modeling result in the Vertica database in the “logistic_reg_mtcars” model. With the help of this saved model, it is now possible to analyze data on cars and predict the presence of an automatic box.

Information on the model can be viewed:

=>SELECT GET_MODEL_SUMMARY(USING PARAMETERS model_name='logistic_reg_mtcars');

We now use the model on the data on cars, saving the result in a new table:

=>CREATE TABLE mtcars_predict_results AS ( SELECT car_model, am, PREDICT_LOGISTIC_REG(cyl, wt, gear USING PARAMETERS model_name='logistic_reg_mtcars') AS prediction FROM mtcars ); And comparing the real values of am with those obtained in the prediction prediction:

=>SELECT * FROM mtcars_predict_results;

In this case, the forecast for 100% coincided with the actual type of box in the presented models. In the case of preparing new data for training, you will need to delete and re-save the model.

ML functionality in Vertica

The ML library in Vertica supports the following types of predictive analysis:

- Forecasting:

- Linear regression

- Random forest for regression

- SVM (Support Vector Machine) for Regression

- Classification:

- Logistic regression

- Naive bayes

- Random Forest for Classification

- SVM (Support Vector Machine) for Classification

- Clustering:

- k-means

To prepare the data for training, the following functionality is presented:

- Data balancing

- Cleaning emissions

- Encoding categorical (textual) column values

- Replacing missing data

- Data normalization

- Principal component analysis

- Data sampling

- Singular value decomposition

Considering the ML functional in Vertica, it can be said that the built-in library allows solving a fairly wide range of tasks, but it does not have a foundation for studying patterns and dependencies in the data. There are functions for preparing data for machine learning, but without visualizing the distribution of data in the form of graphs, such data can be prepared and training models trained on them except analysis gurus with expert knowledge of the data being analyzed.

R Studio with Vertica

For more thorough and interactive predictive data analysis, the ideal language is R, which has a visual environment for working with R Studio data. The tangible benefits of using R with Vertica will be:

- interactivity environment with the ability to save the state for further analysis after the next launch;

- visual viewing of data in the form of tables and graphs;

- power of the R language for working with data sets;

- a variety of predictive analysis algorithms, similar to those presented in Vertica ML.

As disadvantages of R with big data, you can mention the requirements for RAM, the speed of working with large data arrays and the need to import and export Vertica data. These shortcomings are covered by the possibility of embedding written functions R for direct execution on a cluster in Vertica, which will be discussed below.

A little introduction to R

Let's reproduce the forecast on the boxes of the machine on the Vertica data with the help of R. In order not to scare away programmers unfamiliar with this language, I will conduct a short course of the young fighter R.

So, the language R is the same procedural language, having objects, classes and functions.

An object can be a data set (vector, list, data ...), value (text, number, date, time ...) or a function. For values, numeric, string, boolean, and date-time types are supported. For datasets, the numbering of arrays starts with 1, not 0.

Classically, instead of "=" in R, the assignment operator "<-" is used. Although it is not forbidden to use the assignment in the other direction "->" and even the usual "=". The "=" operator itself is used when calling functions to specify named parameters.

Instead of "." "$" is used to access dataset fields. The point is not a keyword and is used in the names of objects to increase their readability. Thus, “my.data $ field” will be decrypted as an array of field “field” records from the “my.data” data set.

For framing texts, you can use both single and double quotes.

Most importantly: R is sharpened to work with data sets. Even if the code says "a <-1", then rest assured, R internally believes that "a" is an array of 1 element. The language design allows working with data sets as with ordinary variables: add and subtract, connect and disconnect, filter by measurements. The easiest way to create an array listing its elements is to call the function “c (comma-separated array elements)”. The name "c" is apparently taken as a short abbreviation of the Collection, but I will not say for sure.

Data loading from DBMS to R

To work with RDBMS via ODBC for R you need to install the RODBC package. It can be installed in R Studio on the packages tab or using the R command:

install.packages('RODBC') library('RODBC') Now we can work with Vertica. We make an ODBC alias to the server and get the test and full data for the car:

# Создаем подключение к Vertica con <- odbcConnect(dsn='VerticaDSN') # получаем данные таблицы mtcars_train mtcars.train <- sqlQuery(con, "SELECT * FROM public.mtcars_train") # получаем данные таблицы mtcars</b> mtcars.data <- sqlQuery(con, "SELECT * FROM public.mtcars") # закрываем соединение odbcClose(con) When loading data from R sources for text type and date-time fields, their belonging to the factors is automatically set. The “am” field has a numeric type and is perceived by R as a numerical indicator, and not a factor, which will not allow a logistic regression. Therefore, we transform this field into a numerical factor:

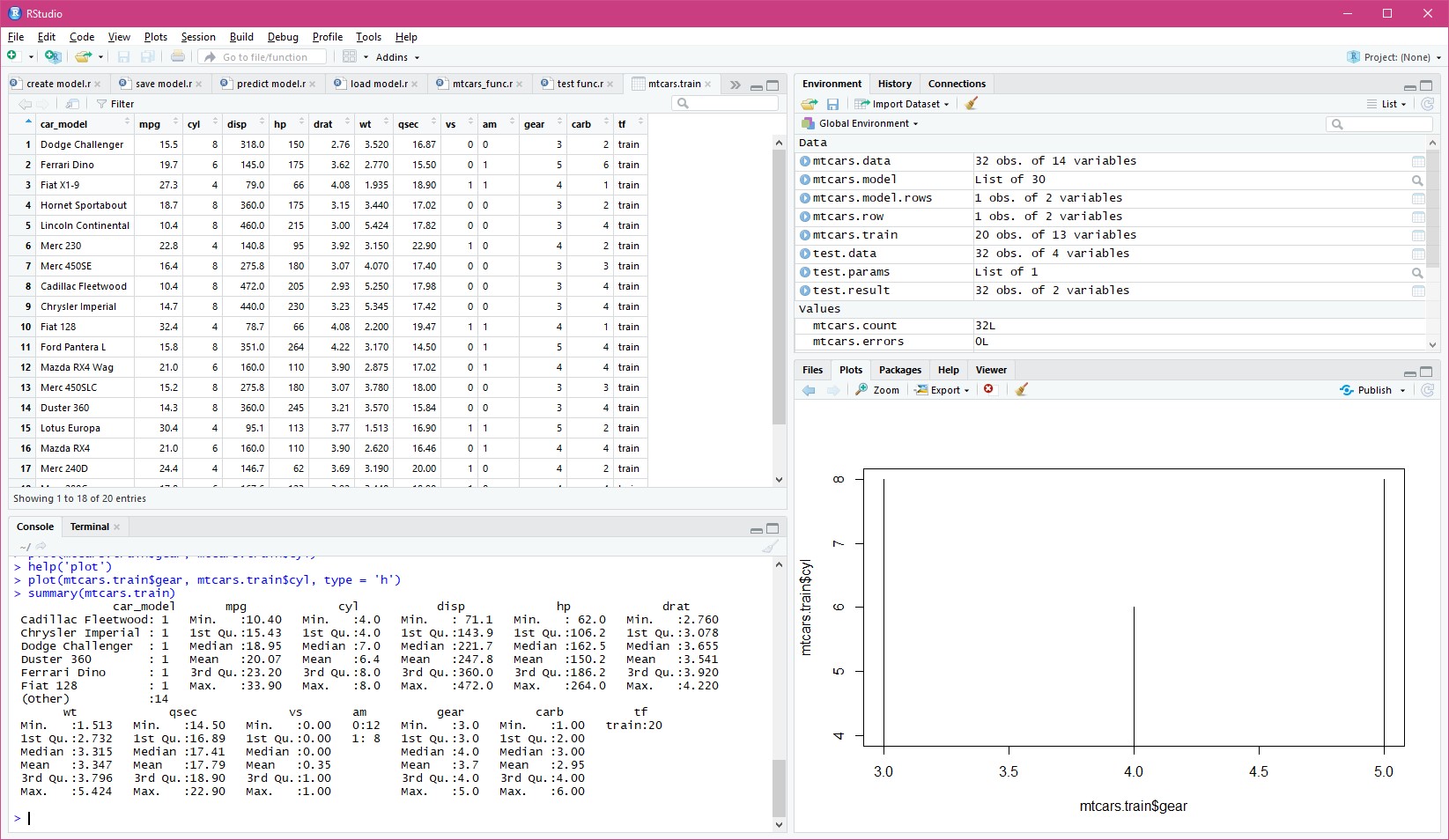

mtcars.data$am = factor(mtcars.data$am) mtcars.train$am = factor(mtcars.train$am) In R Studio, it is convenient to interactively view data, build predictive analysis charts, and write code in R with prompts:

Building a model in R

Let us build a logistic regression model over a prepared data set for the same dimensions as in Vertica:

mtcars.model <- glm(formula = am ~ cyl + wt + gear, family = binomial(), data = mtcars.train) Explanation: in the R language, the formula of the predictive analysis is indicated as:

<поле результата анализа>~<влияющие на анализ поля> Data analysis by model in R

We initialize the resulting data set by taking all the records from the mtcars in the required fields:

mtcars.result <- data.frame(car_model = mtcars.data$car_model, am = mtcars.data$am, predict = 0) Now, using the constructed model, you can perform an analysis on the data itself:

mtcars.result$predict <- predict.glm(mtcars.model, newdata = subset(mtcars.data, select = c('cyl', 'wt', 'gear')), type = 'response' ) The result of the analysis is returned to the predict field as a percentage of the probability of the forecast. Simplify by analogy with Vertica to values of 0 or 1, considering the forecast to be positive with a probability of more than 50%:

mtcars.result$predict <- ifelse(mtcars.result$predict > 0.5, 1, 0) We calculate the total number of records for which the predicted predict field did not coincide with the real value in am:

nrow(mtcars[mtcars.result$am != mtcars.result$predict, ]) R returned zero. Thus, the forecast has converged on all car models, as in ML from Vertica.

Please note: records from mtcars were returned by a filter (the first parameter in square brackets) with all columns (the second missing comma parameter in square brackets).

Local storage and loading of data in R

When exiting from R, the studio offers to save the state of all objects in order to continue working after the restart. If for some reason you need to save and then restore the state of individual objects, for this R provides special functions:

# Сохранить объект модели в файл save(mtcars.model, file = 'mtcars.model') # Восстановить объект модели из файла load('mtcars.model') Saving data from R to Vertica

In case R Studio was used to prepare data for training Vertica ML models or it was used to analyze it directly, which should be further used in the Vertica database, R data sets can be written to the Vertica table.

Since the ODBC library for R is designed for an OLTP RDBMS, it cannot generate table creation requests for Vertica correctly. Therefore, to successfully write data, you will need to manually create the necessary table in Vertica using SQL, the set of fields and types of which coincides with the recordable data set R.

Further, the write process itself looks simple (do not forget to open and then close the con connection):

sqlSave(con, mtcars.result, tablename = 'public.mtcars_result', append = TRUE, rownames = FALSE, colnames = FALSE) Vertica's work with R

Interactive work with data in R Studio is well suited for the mode of research and data preparation. But it is completely unsuitable for analyzing data streams and large arrays in automatic mode. One of the variants of the hybrid scheme of predictive analysis of R with Vertica is the preparation of data for training on R and the identification of dependencies for building models. Next, using the ML functions built into Vertica, the prediction models are trained on the data prepared on R, taking into account the identified dependencies of the variables.

There is also a more flexible option when all the power of the R language is used directly from under Vertica. To do this, under Vertica, an R distribution was developed in the form of a plug-in library, which allows using transformation functions written directly in the R language in SQL queries. The documentation details the installation of R support for Vertica and the additional R packages required for operation, if required.

Saving Model R to Vertica

To use the analysis model previously prepared in R Studio in the R functions working from under Vertica, you need to save them on Vertica servers. It is not convenient and reliable to save files on each cluster server locally, new servers can be added to the cluster, and if you change the model, you need to remember to rewrite all files again.

The most convenient way is to serialize model R into text and save the Vertica function as UDF, which will return this text as a constant (do not forget to open and then close the con connection):

# Сериализуем модель в текст mtcars.model.text <- rawToChar( serialize(mtcars.model, connection = NULL, ascii = TRUE)) # Собираем текст функции для выполнения в Vertica # (в тексте модели одинарные кавычки дублируются) mtcars.func <- paste0( "CREATE OR REPLACE FUNCTION public.MtCarsAnalizeModel() RETURN varchar(65000) AS BEGIN RETURN '", gsub("'", "''", mtcars.model.text), "'; END; GRANT EXECUTE ON FUNCTION public.MtCarsAnalizeModel() TO public;" ) # Создаем функцию на Vertica sqlQuery(con, mtcars.func) The proposed method allows you to bypass the restriction of Vertica on the passed parameters in the transformation function, where you only need to transfer constants or expressions from constants. In Vertica, UDF SQL is compiled not as functions, but as calculated expressions, that is, when a parameter is passed, instead of calling the function, its text (in this case, a constant), which was saved in the code above, is transferred.

If the model is changed, it will be necessary to recreate its function in Vertica. It makes sense to wrap this code in a universal function that generates a function in Vertica with the specified name from the transferred model.

R functions for working in Vertica

In order to connect the R functions to Vertica, you need to write data analysis and registration functions in Vertica.

The function of working with data from under Vertica itself should have two parameters: the resulting data set (as data.frame) and the parameters of work (as list):

MtCarsAnalize <- function(data, parameters) { if ( is.null(parameters[['model']]) ) { stop("NULL value for model! Model cannot be NULL.") } else { model <- unserialize(charToRaw(parameters[['model']])) } names(data) <- c('car_model', 'cyl', 'wt', 'gear') result <- data.frame(car_model = data$car_model, predict = 0) result$predict <- predict.glm(model, newdata = subset(data, select = c('cyl', 'wt', 'gear')), type = 'response' ) result$predict <- ifelse(result$predict > 0.5, TRUE, FALSE) return(result) } In the function body, it is checked that the model parameter is passed, the text of which is translated into a binary form and deserialized into an object of the analysis model. Since Vertica passes its own query field names to the function dataset, the explicit field names are set to the data set. Based on the obtained data, a result set is constructed with the name of the machine model and the zero predict. Next, a forecast is made using only the fields needed for the analysis from the obtained data set. The predict result set is set to boolean values (for variety instead of numeric) and the result is returned from the function.

It now remains to describe the registration of this function in Vertica:

MtCarsAnalizeFactory <- function() { list(name = MtCarsAnalize, udxtype = c("transform"), intype = c("varchar", "int", "float", "int"), outtype = c("varchar", "boolean"), outnames = c("car_model", "predict"), parametertypecallback=MtCarsAnalizeParameters) } MtCarsAnalizeParameters <- function() { parameters <- list(datatype = c("varchar"), length = 65000, scale = c("NA"), name = c("model")) return(parameters) } The MtCarsAnalizeFactory function describes the name of the function used for the operation, the fields for the incoming and outgoing data sets, and the second function describes the parameter “model” being passed. Vertica data types are specified as field types. When transferring and returning data, Vertica automatically converts the values into the required data types for the R language. A table of type compatibility can be found in the Vertica documentation.

You can test the work of the written function for Vertica on the data loaded into R Studio:

test.data = subset(mtcars.data, select = c('car_model', 'cyl', 'wt', 'gear')) test.params = list(model = mtcars.model.text) test.result = MtCarsAnalize(test.data, test.params) Connecting the function library to Vertica

We save all the above functions into a single file "mtcars_func.r" and upload this file to one of the servers from the Vertica cluster in "/ home / dbadmin".

An important point: in R Studio, you need to set the option to save the translation of lines in files to Posix mode (LF). This can be done in the global options, the Code section, the Saving tab. If you are working on Windows, by default the file will be saved with a carriage return and will not be able to be loaded into Vertica.

Connect to the server from the Vertica cluster where you saved the file and load the library:

CREATE LIBRARY MtCarsLibs AS '/home/dbadmin/mtcars_func.r' LANGUAGE 'R'; Now from this library you can register the R function:

CREATE TRANSFORM FUNCTION public.MtCarsAnalize AS LANGUAGE 'R' NAME 'MtCarsAnalizeFactory' LIBRARY MtCarsLibs; GRANT EXECUTE ON TRANSFORM FUNCTION public.MtCarsAnalize(varchar, int, float, int) TO public; Call R functions in Vertica

Call the function R, passing it the text of the model, which was previously saved as a UDF function:

SELECT MtCarsAnalize(car_model, cyl, wt, gear USING PARAMETERS model = public.MtCarsAnalizeModel()) OVER() FROM public.mtcars;

It can be verified that, just as in the previous cases, the prediction that is 100% consistent with the real situation is given:

SELECT c.*, p.predict, p.predict = c.am::int AS valid FROM public.mtcars c INNER JOIN ( SELECT MtCarsAnalize(car_model, cyl, wt, gear USING PARAMETERS model = public.MtCarsAnalizeModel()) OVER() FROM public.mtcars ) p ON c.car_model = p.car_model Note that the transformation functions in Vertica return their own data set from the fields and records defined inside the functions, however, they can be used in queries if they are wrapped in a subquery.

When the R functions are connected, Vertica copies the source code into its installation, which then compiles it into machine code. The source R file uploaded to the server after being connected to the library is not required for further work. The speed of the functions taking into account the binary compilation is high enough to work with large data arrays, however, it is worth remembering that all R operations are carried out in memory and there is a risk to go to a swap if there is a shortage of OS memory to support the needs of Vertica and R .

If the function is called on a partition of data specified in PARTITION BY for OVER, then Vertica parallelizes the execution of each partition on the cluster servers. Thus, if there was a manufacturer still present in the data set, besides the machine model, it would be possible to specify it in PARTITION BY and parallelize the execution of the analysis for each manufacturer.

Vertica's other machine learning capabilities

In addition to R for Vertica, you can develop your own transformation functions in C, Java, and Python. Each of the languages has its own nuances and peculiarities of writing and connecting to Vertica. Together with your own ML, all this gives Vertica a good basis for predictive data analysis.

Thanks and links

I would like to sincerely thank my friend and colleague Vlad Malofeev from Perm, who introduced me to R and helped him figure out one of our joint projects.

Initially, in the project, where the forecast was made on the difficult conditions for the future using the data of the past year, the developers tried to use SQL and Java. This caused great difficulties in view of the quality of these sources and greatly hampered the development of the project. Vlad came to the project with R, we hooked up with R under Vertica, he drove the data to the studio and everything spun and twisted beautifully at once. Literally for weeks, everything that lasted for months was solved, saving the project from complex code.

The following example of data with cars can be downloaded from the GIT repository:

git clone https://github.com/vertica/Machine-Learning-Examples and upload to Vertica:

/opt/vertica/bin/vsql -d <name of your database> -f load_ml_data.sql If you want to go deep into ML and learn how to work with R, I recommend to study the book in Russian “R in action. Analysis and visualization of data in the language of R " . It is written in simple accessible human language and is suitable for beginners who have not previously encountered machine learning.

Here you can see information about the connection of the R library to Vertica.

For those who have already begun to learn and use ML in Python, you should pay attention to IDE Rodeo, this is an analogue of R Studio, because without interactive, qualitative analysis is impossible. I think everything described in this article under R in a similar way can be developed in Python, including saving the model to UDF functions and developing analysis functions for Vertica. If you check, do not forget to unsubscribe about the results in the comments, I would be grateful for the information.

Thank you for your time and I hope that I was able to demonstrate the simplicity and incredible possibilities of the symbiosis of R and Vertica.

Source: https://habr.com/ru/post/436306/