New network automation features in Red Hat Ansible

Given the significant improvements in Ansible Engine 2.6, the release of Ansible Tower 3.3, and the recent release of Ansible Engine 2.7, let's take a closer look at what they bring to network automation.

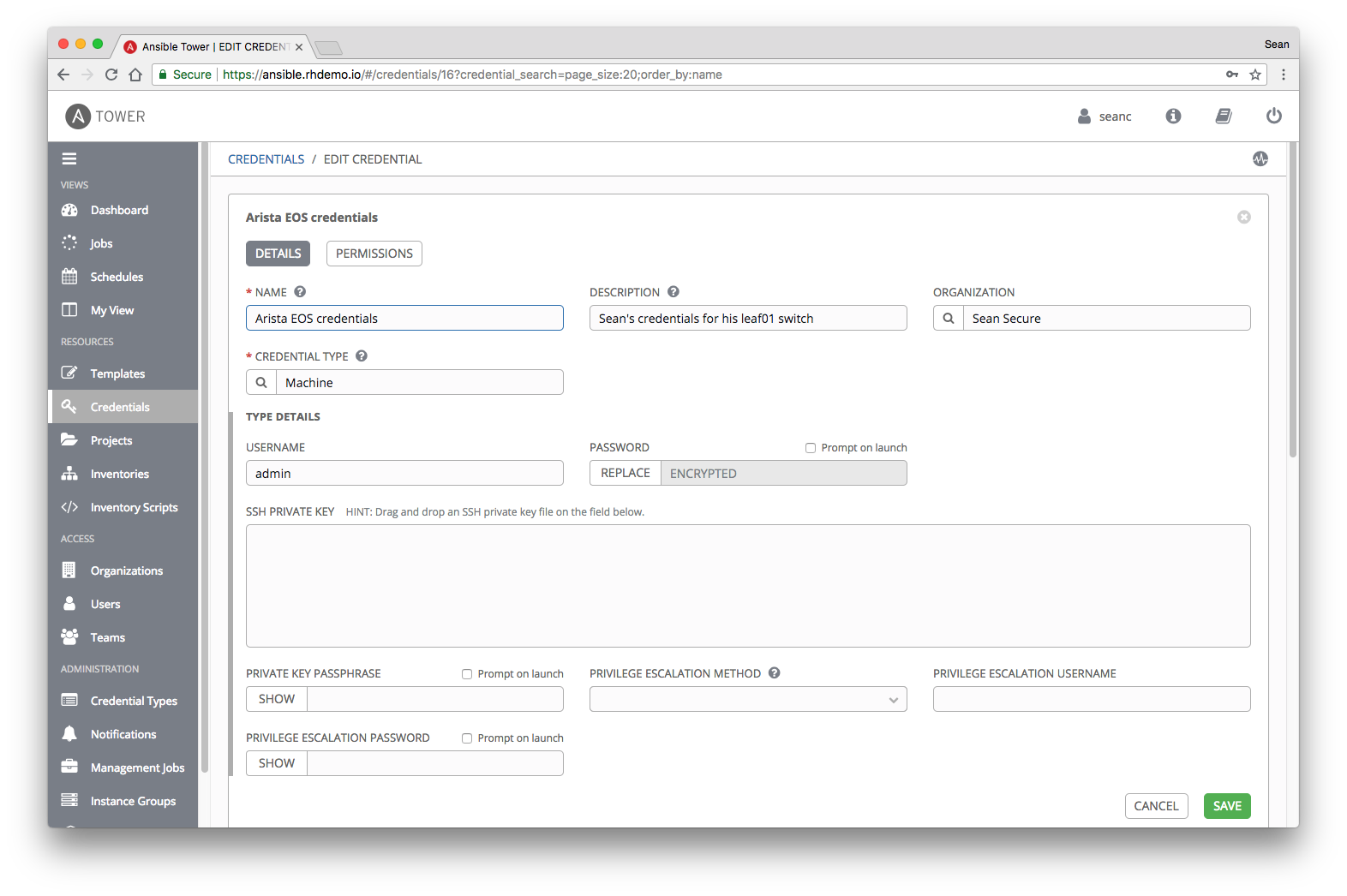

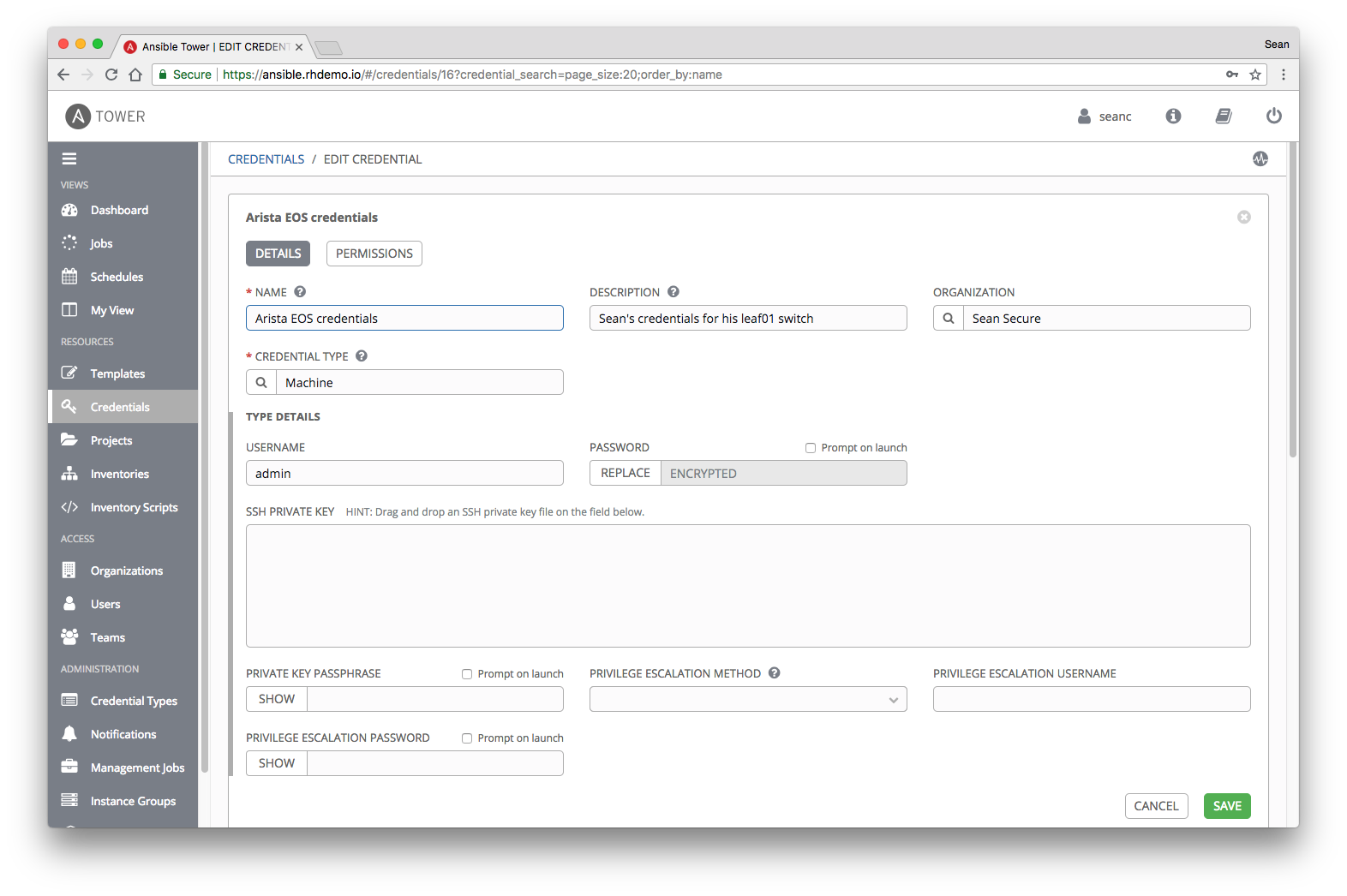

In this post:

- The httpapi connection plugin.

  - Support for Arista eAPI and Cisco NX-API.

- New network automation modules.

  - net_get and net_put.

  - netconf_get, netconf_rpc, and netconf_config.

  - cli_command and cli_config.

- Improved Ansible Tower web interface.

- Credential management for network devices in Ansible Tower.

- Simultaneous use of different versions of Ansible in Tower.

Keep in mind that every new Ansible release comes with an updated porting guide, which makes the transition to the new version much easier.

HTTPAPI Connection Plugin

Connection plugins are what allow Ansible to connect to target hosts in order to run tasks on them. Ansible 2.5 introduced a new connection plugin of this kind, network_cli. It eliminates the need for the provider parameter and standardizes how network modules are executed, so playbooks for network devices are now written, run, and behave exactly like playbooks for Linux hosts. For Ansible Tower, this means the distinction between network devices and hosts disappears: Tower no longer needs to distinguish between credentials for network devices and credentials for machines. This was discussed in more detail here, but in brief, the login credentials for a Linux server and for an Arista EOS switch or a Cisco router can now be used and stored in the same way.
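To make this concrete, here is a minimal sketch of an Ansible dynamic inventory script (the JSON it prints for --list). Host names, addresses, group names, and credentials are hypothetical placeholders. What it illustrates is the point above: the switch and the Linux server carry the same ansible_user/ansible_password variables, and only ansible_connection and ansible_network_os tell Ansible to use network_cli for leaf01. Real devices may need further variables (privilege escalation, for example), and real credentials belong in Ansible Vault or Tower rather than in a script.

```python
#!/usr/bin/env python3
"""Minimal dynamic inventory sketch (hypothetical hosts and addresses).

The Linux server and the switch use the same credential variables; only
ansible_connection and ansible_network_os mark leaf01 as a network device.
"""
import json
import sys


def build_inventory():
    # Placeholder credentials; in practice use Ansible Vault or Tower credentials.
    credentials = {"ansible_user": "admin", "ansible_password": "changeme"}
    return {
        "linux": {"hosts": ["server01"]},
        "switches": {
            "hosts": ["leaf01"],
            "vars": {"ansible_connection": "network_cli", "ansible_network_os": "eos"},
        },
        "_meta": {
            "hostvars": {
                "server01": dict(credentials, ansible_host="192.0.2.10"),
                "leaf01": dict(credentials, ansible_host="192.0.2.20"),
            }
        },
    }


if __name__ == "__main__":
    # Ansible calls the script with --list; because every host's variables are
    # already in _meta, a --host call can simply return an empty object.
    if "--host" in sys.argv:
        print(json.dumps({}))
    else:
        print(json.dumps(build_inventory(), indent=2))
```

Made executable and passed to ansible-playbook with -i, a script like this would let the same playbook run against both groups with one set of credential variables.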

In Ansible 2.5, connecting through eAPI or NX-API was possible only with the old provider method. Ansible 2.6 removes this restriction, so you can freely use the high-level httpapi connection method. Let's see how this works.

First, you must enable eAPI or NX-API on the network device so that the httpapi method can be used. This is easily done with the corresponding Ansible module; for example, here's how to enable eAPI on an Arista EOS switch:

```
[user@rhel7]$ ansible -m eos_eapi -c network_cli leaf01
leaf01 | SUCCESS => {
    "ansible_facts": {
        "eos_eapi_urls": {
            "Ethernet1": [
                "https://192.168.0.14:443"
            ],
            "Management1": [
                "https://10.0.2.15:443"
            ]
        }
    },
    "changed": false,
    "commands": []
}
```

When connected to a real Arista EOS switch, the show management api http-commands command will show that the API is enabled:

```
leaf01# show management api http-commands
Enabled:        Yes
HTTPS server:   running, set to use port 443
<<<rest of output removed for brevity>>>
```

The following playbook simply runs the show version command and then, in the debug task, prints only the version from the task's JSON output.

```yaml
---
- hosts: leaf01
  connection: httpapi
  gather_facts: false
  tasks:
    - name: type a simple arista command
      eos_command:
        commands:
          - show version | json
      register: command_output

    - name: print command output to terminal window
      debug:
        var: command_output.stdout[0]["version"]
```

Running this playbook produces the following result:

```
[user@rhel7]$ ansible-playbook playbook.yml

PLAY [leaf01]********************************************************

TASK [type a simple arista command] *********************************
ok: [leaf01]

TASK [print command output to terminal window] **********************
ok: [leaf01] => {
    "command_output.stdout[0][\"version\"]": "4.20.1F"
}

PLAY RECAP***********************************************************
leaf01                     : ok=2    changed=0    unreachable=0    failed=0
```

NOTE: Arista eAPI does not support abbreviated forms of commands (such as "show ver" instead of "show version"), so you have to spell them out in full. More information about the httpapi plugin can be found in the documentation.
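Under the hood, eAPI is a JSON-RPC interface. The Python sketch below builds a request body for eAPI's runCmds method (which is POSTed to the switch's /command-api endpoint) without contacting any device, and then extracts the version from a sample reply, mirroring what command_output.stdout[0]["version"] selects in the playbook. The sample reply is hypothetical and trimmed to the single field used here; treat the sketch as an approximation of the traffic httpapi generates, not a copy of the plugin's code.

```python
import json


def build_eapi_request(commands, output_format="json", request_id="1"):
    """Return a JSON-RPC body for Arista eAPI's runCmds method.

    The body would be POSTed to https://<switch>/command-api; this function
    only builds it and does not contact a device.
    """
    return json.dumps({
        "jsonrpc": "2.0",
        "method": "runCmds",
        "params": {"version": 1, "cmds": list(commands), "format": output_format},
        "id": request_id,
    })


def extract_version(response_text):
    """Pick the EOS version out of a runCmds reply for 'show version'.

    The reply's "result" list holds one entry per command, in order, so
    index 0 belongs to the first (and here only) command.
    """
    reply = json.loads(response_text)
    return reply["result"][0]["version"]


if __name__ == "__main__":
    print(build_eapi_request(["show version"]))
    # Hypothetical, heavily trimmed reply; a real one has many more fields.
    sample_reply = '{"jsonrpc": "2.0", "id": "1", "result": [{"version": "4.20.1F"}]}'
    print(extract_version(sample_reply))
```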

New network automation modules

Ansible 2.6 and Ansible 2.7 (released in October) introduced seven new network automation modules:

- net_get - copies a file from a network device to the Ansible controller.

- net_put - copies a file from the Ansible controller to a network device.

- netconf_get - retrieves configuration and state data from a NETCONF-enabled network device.

- netconf_rpc - executes RPC operations on a NETCONF-enabled network device.

- netconf_config - configures NETCONF-enabled devices: it lets the user send an XML configuration to the device and reports whether the configuration has changed.

- cli_command - runs a CLI command on network devices that have a command-line interface (CLI).

- cli_config - pushes a text configuration to network devices over network_cli.

net_get and net_put

- net_get - copies a file from a network device to the Ansible controller.

- net_put - copies a file from the Ansible controller to a network device.

The net_get and net_put modules are vendor-agnostic: they simply copy files to and from a network device using the standard SCP or SFTP transfer protocols (selected with the protocol parameter). Both modules require the network_cli connection method, and they work only if the scp Python library is installed on the controller (pip install scp) and SCP or SFTP is enabled on the network device.
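Because net_get and net_put fail when the scp library is missing on the controller, a quick preflight check can save a confusing error. This minimal sketch uses only the standard library to check whether the modules scp (installed by pip install scp) and paramiko (which scp depends on) can be imported. It only tells you about the interpreter that runs it; which interpreter your Ansible installation uses is something to confirm separately, for example with ansible --version.

```python
import importlib.util


def missing_modules(names=("scp", "paramiko")):
    """Return the module names that this Python interpreter cannot import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("missing on controller: %s (try: pip install scp)" % ", ".join(missing))
    else:
        print("scp and paramiko are importable")
```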

For the example playbook, we assume the following command has already been run on the leaf01 device:

```
leaf01#copy running-config flash:running_cfg_eos1.txt
Copy completed successfully.
```

Here's what a playbook with two tasks looks like (the first task copies the file from the leaf01 device, and the second copies a file to the leaf01 device):

```yaml
---
- hosts: leaf01
  connection: network_cli
  gather_facts: false
  tasks:
    - name: COPY FILE FROM THE NETWORK DEVICE TO ANSIBLE CONTROLLER
      net_get:
        src: running_cfg_eos1.txt

    - name: COPY FILE FROM THE ANSIBLE CONTROLLER TO THE NETWORK DEVICE
      net_put:
        src: temp.txt
```

netconf_get, netconf_rpc and netconf_config

- netconf_get - retrieves configuration and state data from a NETCONF-enabled network device.

- netconf_rpc - executes RPC operations on a NETCONF-enabled network device.

- netconf_config - configures NETCONF-enabled devices: it lets the user send an XML configuration to the device and reports whether the configuration has changed.

NETCONF (Network Configuration Protocol) was developed and standardized by the IETF. According to RFC 6241, NETCONF provides mechanisms to install, manipulate, and delete the configuration of network devices. NETCONF is an alternative to the SSH command line (network_cli) and to APIs such as Cisco NX-API or Arista eAPI (httpapi).
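A NETCONF message is plain XML. Per RFC 6241, each operation is wrapped in an rpc element in the urn:ietf:params:xml:ns:netconf:base:1.0 namespace, carrying a message-id that the device echoes in its rpc-reply; with the original NETCONF 1.0 framing over SSH (RFC 6242), each message ends with the ]]>]]> delimiter. This Python sketch, using only xml.etree.ElementTree, frames an operation that way and splits a received stream back into complete messages. The message-id value and the get-config operation are arbitrary choices for illustration; in practice Ansible's netconf connection relies on the ncclient library, which handles framing (including the chunked framing of NETCONF 1.1) for you.

```python
import xml.etree.ElementTree as ET

NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
EOM = "]]>]]>"  # NETCONF 1.0 end-of-message delimiter (RFC 6242)


def frame_rpc(operation, message_id):
    """Wrap an operation element in <rpc> and append the 1.0 delimiter."""
    rpc = ET.Element("rpc", {"xmlns": NETCONF_NS, "message-id": str(message_id)})
    rpc.append(operation)
    return ET.tostring(rpc, encoding="unicode") + EOM


def split_messages(stream):
    """Split NETCONF 1.0 stream text into the messages completed so far."""
    parts = stream.split(EOM)
    # The last part is empty or an incomplete message still being received.
    return [part.strip() for part in parts[:-1]]


if __name__ == "__main__":
    get_config = ET.Element("get-config")
    source = ET.SubElement(get_config, "source")
    ET.SubElement(source, "running")
    print(frame_rpc(get_config, 101))
```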

To demonstrate the new netconf modules, we first enable NETCONF on Juniper routers using the junos_netconf module. Not all network devices support NETCONF, so check the documentation before using it.

```
[user@rhel7 ~]$ ansible -m junos_netconf juniper -c network_cli
rtr4 | CHANGED => {
    "changed": true,
    "commands": [
        "set system services netconf ssh port 830"
    ]
}
rtr3 | CHANGED => {
    "changed": true,
    "commands": [
        "set system services netconf ssh port 830"
    ]
}
```

Juniper Networks offers the Junos XML API Explorer for Operational Tags and for Configuration Tags. Consider an example RPC request that Juniper Networks uses in its documentation to illustrate querying a specific interface.

```xml
<rpc>
  <get-interface-information>
    <interface-name>ge-2/3/0</interface-name>
    <detail/>
  </get-interface-information>
</rpc>
]]>]]>
```

This translates easily into an Ansible playbook. get-interface-information is the RPC call, and additional parameters are specified as key-value pairs. In this example there is only one parameter, interface-name, and on our network device we just want to look at em1.0. We use the task-level register parameter simply to save the results, and then the debug module to print them to the terminal. The netconf_rpc module can also convert the XML output directly into JSON.
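The translation from XML to task arguments can be sketched mechanically. The rpc_to_module_args helper below is hypothetical (it is not part of Ansible): it strips the rpc wrapper and the ]]>]]> delimiter from the Juniper example, takes the operation's tag as the rpc value, and turns each flat child element into a key-value pair under content. Mapping an empty flag element such as detail to None is an assumption of this sketch; check the netconf_rpc documentation for how the module expects such parameters.

```python
import xml.etree.ElementTree as ET


def local_name(tag):
    """Drop a '{namespace}' prefix, if present, from an element tag."""
    return tag.rsplit("}", 1)[-1]


def rpc_to_module_args(rpc_xml):
    """Hypothetical helper: map a one-operation <rpc> to rpc/content arguments.

    Only flat parameters are handled; nested elements are out of scope.
    """
    root = ET.fromstring(rpc_xml.replace("]]>]]>", "").strip())
    operation = root[0] if local_name(root.tag) == "rpc" else root
    content = {}
    for param in operation:
        text = (param.text or "").strip()
        # A flag element such as <detail/> has no text and becomes None.
        content[local_name(param.tag)] = text or None
    return {"rpc": local_name(operation.tag), "content": content}


JUNIPER_EXAMPLE = """
<rpc>
  <get-interface-information>
    <interface-name>ge-2/3/0</interface-name>
    <detail/>
  </get-interface-information>
</rpc>
]]>]]>
"""

if __name__ == "__main__":
    print(rpc_to_module_args(JUNIPER_EXAMPLE))
```

Applied to the em1.0 query instead, the same mapping yields rpc: get-interface-information with content {"interface-name": "em1.0"}, which is what the GET INTERFACE INFO task passes.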

```yaml
---
- name: RUN A NETCONF COMMAND
  hosts: juniper
  gather_facts: no
  connection: netconf

  tasks:
    - name: GET INTERFACE INFO
      netconf_rpc:
        display: json
        rpc: get-interface-information
        content:
          interface-name: "em1.0"
      register: netconf_info

    - name: PRINT OUTPUT TO TERMINAL WINDOW
      debug:
        var: netconf_info
```

After running the playbook, we get this:

```
ok: [rtr4] => {
    "netconf_info": {
        "changed": false,
        "failed": false,
        "output": {
            "rpc-reply": {
                "interface-information": {
                    "logical-interface": {
                        "address-family": [
                            {
                                "address-family-flags": {
                                    "ifff-is-primary": ""
                                },
                                "address-family-name": "inet",
                                "interface-address": [
                                    {
                                        "ifa-broadcast": "10.255.255.255",
                                        "ifa-destination": "10/8",
                                        "ifa-flags": {
                                            "ifaf-current-preferred": ""
                                        },
                                        "ifa-local": "10.0.0.4"
                                    },
<<<rest of output removed for brevity>>>
```

Additional information can be found in the Juniper Platform Guide and in the NETCONF documentation.
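Because display: json returns the reply as nested dictionaries, the registered variable can be walked like any other data structure. This Python sketch collects every ifa-local address from a structure shaped like the output above. The SAMPLE dictionary is a trimmed, hypothetical copy of that output. The as_list helper reflects a general property of XML-to-JSON conversion: an element that occurs once tends to come back as a single object rather than a one-item list, as logical-interface does above.

```python
def as_list(value):
    """Normalize a converted XML node: one element -> dict, several -> list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def local_addresses(netconf_info):
    """Collect ifa-local addresses from a netconf_rpc (display: json) result."""
    info = netconf_info["output"]["rpc-reply"]["interface-information"]
    addresses = []
    for logical in as_list(info.get("logical-interface")):
        for family in as_list(logical.get("address-family")):
            for addr in as_list(family.get("interface-address")):
                if "ifa-local" in addr:
                    addresses.append(addr["ifa-local"])
    return addresses


# Trimmed, hypothetical copy of the registered netconf_info variable.
SAMPLE = {
    "changed": False,
    "failed": False,
    "output": {
        "rpc-reply": {
            "interface-information": {
                "logical-interface": {
                    "address-family": [
                        {
                            "address-family-name": "inet",
                            "interface-address": [
                                {"ifa-destination": "10/8", "ifa-local": "10.0.0.4"}
                            ],
                        }
                    ]
                }
            }
        }
    },
}

if __name__ == "__main__":
    print(local_addresses(SAMPLE))  # prints ['10.0.0.4']
```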

cli_command and cli_config

- cli_command - runs a command on network devices using their command line interface (if there is one).

- cli_config - pushes a text configuration to network devices over network_cli.

The cli_command and cli_config modules, introduced in Ansible Engine 2.7, are also vendor-agnostic and can be used to automate a variety of network platforms. They rely on the value of the ansible_network_os variable (set in the inventory file or in the group_vars folder) to select the appropriate cliconf plugin. A list of all ansible_network_os values is provided in the documentation. In this version of Ansible you can still use the older platform-specific modules, so there is no rush to rewrite existing playbooks. Additional information can be found in the official porting guides.
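As a simplified model of this dispatch (not Ansible's real plugin loader), the sketch below merges group and host variables, with host-level values overriding group-level ones as they do in Ansible, and then picks the cliconf plugin named by ansible_network_os. The set of known values is deliberately short and only illustrative; the demo values are taken from the inventory used in the example that follows.

```python
# Simplified model, not Ansible's real plugin loader: the generic cli_* modules
# hand their work to the cliconf plugin named by ansible_network_os. Only a few
# values are listed here for illustration.
KNOWN_CLICONF = {"ios", "iosxr", "nxos", "eos", "junos", "vyos"}


def effective_vars(group_vars, host_vars):
    """Merge variables so that host-level values override group-level ones."""
    merged = dict(group_vars)
    merged.update(host_vars)
    return merged


def select_cliconf(hostvars):
    """Return the cliconf plugin name for a host, or raise if it is unknown."""
    network_os = hostvars.get("ansible_network_os")
    if network_os is None:
        raise ValueError("ansible_network_os is not set for this host")
    if network_os not in KNOWN_CLICONF:
        raise ValueError("no cliconf plugin known for %r" % network_os)
    return network_os


if __name__ == "__main__":
    cisco_vars = {"ansible_ssh_user": "ec2-user", "ansible_network_os": "ios"}
    rtr1 = effective_vars(cisco_vars, {"ansible_host": "34.203.197.120"})
    print(select_cliconf(rtr1))  # prints ios
```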

Let's see how these modules are used in a playbook. This playbook runs against two Cisco Cloud Services Router (CSR) systems running IOS-XE. The ansible_network_os variable in the inventory file is set to ios:

```ini
<config snippet from inventory>
[cisco]
rtr1 ansible_host=34.203.197.120
rtr2 ansible_host=34.224.60.230

[cisco:vars]
ansible_ssh_user=ec2-user
ansible_network_os=ios
```

Here's how cli_config and cli_command are used:

```yaml
---
- name: AGNOSTIC PLAYBOOK
  hosts: cisco
  gather_facts: no
  connection: network_cli

  tasks:
    - name: CONFIGURE DNS
      cli_config:
        config: ip name-server 8.8.8.8

    - name: CHECK CONFIGURATION
      cli_command:
        command: show run | i ip name-server
      register: cisco_output

    - name: PRINT OUTPUT TO SCREEN
      debug:
        var: cisco_output.stdout
```

And here is the output of this playbook:

```
[user@rhel7 ~]$ ansible-playbook cli.yml

PLAY [AGNOSTIC PLAYBOOK] *********************************************

TASK [CONFIGURE DNS] *************************************************
ok: [rtr1]
ok: [rtr2]

TASK [CHECK CONFIGURATION] *******************************************
ok: [rtr1]
ok: [rtr2]

TASK [PRINT OUTPUT TO SCREEN] ****************************************
ok: [rtr1] => {
    "cisco_output.stdout": "ip name-server 8.8.8.8"
}
ok: [rtr2] => {
    "cisco_output.stdout": "ip name-server 8.8.8.8"
}

PLAY RECAP **********************************************************
rtr1                       : ok=3    changed=0    unreachable=0    failed=0
rtr2                       : ok=3    changed=0    unreachable=0    failed=0
```

Note that these modules are idempotent as long as you use the syntax appropriate for the corresponding network device.
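Why does CONFIGURE DNS report ok rather than changed? One simplified way to picture it: the candidate lines are compared with the running configuration, and only missing lines would be sent. The sketch below is a deliberately naive line-by-line model (it ignores configuration hierarchy, ordering, and platform-specific normalization) and is not cli_config's actual diff logic, which is delegated to each platform's cliconf plugin. The running configuration is a hypothetical example. The model also shows why exact syntax matters: a line written differently from how the device stores it never matches, so here it would count as a change on every run.

```python
def lines_to_push(candidate, running_config):
    """Return candidate lines not already present in the running config.

    Naive model: exact, whitespace-trimmed line matching only.
    """
    present = {line.strip() for line in running_config.splitlines()}
    return [line.strip() for line in candidate.splitlines()
            if line.strip() and line.strip() not in present]


# Hypothetical running configuration of rtr1.
RUNNING = """hostname rtr1
ip name-server 8.8.8.8
interface GigabitEthernet1
 ip address dhcp"""

if __name__ == "__main__":
    todo = lines_to_push("ip name-server 8.8.8.8", RUNNING)
    print({"changed": bool(todo), "commands": todo})
```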

Improved Ansible Tower Interface

The Red Hat Ansible Tower 3.3 web interface is more convenient and capable, so common tasks take fewer clicks.

Credential management, the job scheduler, inventory scripts, role-based access control, notifications, and much more are now collected in the main menu on the left side of the screen and are available with one click. The Jobs View screen immediately displays important additional information: who launched the job and when, which inventory it ran against, and which project the playbook came from.

Manage credentials for network devices in Ansible Tower

Red Hat Ansible Tower 3.3 makes it much easier to manage logins and passwords for network devices. In the new web interface, Credentials is located directly in the main menu, under the Resources group.

Ansible Tower 3.3 still has the special Network credential type, which sets the environment variables ANSIBLE_NET_USERNAME and ANSIBLE_NET_PASSWORD used by the old provider method. This still works, as described in the documentation, so existing playbooks can continue to be used. However, the new high-level connection methods httpapi and network_cli no longer need the Network credential type, because Ansible now handles login credentials for network devices exactly as it does for Linux hosts. So for network devices you now select the Machine credential type: just choose it and enter the username and password, or provide an SSH key.

NOTE: Ansible Tower encrypts the password immediately after you save credentials.

Thanks to encryption, credentials can be safely delegated to other users and groups: they simply won't see or know the password. For more information about how credentials work, what encryption is used, and what other credential types exist (such as Amazon AWS, Microsoft Azure, and Google GCE), see the Ansible Tower documentation.

A more detailed description of Red Hat Ansible Tower 3.3 can be found here.

Simultaneous use of different versions of Ansible in Tower

Suppose we want some playbooks to run with Ansible Engine 2.4.2 and others with Ansible Engine 2.6.4. Tower supports virtualenv, a tool for creating isolated Python environments, to avoid problems with conflicting dependencies and different versions. Ansible Tower 3.3 lets you set the virtualenv at different levels: Organization, Project, or Job Template. Here's what the Job Template we created in Ansible Tower for backing up our network looks like.

Once you have at least two virtual environments, the Ansible Environment drop-down menu appears in the Ansible Tower main menu, where you can easily specify which version of Ansible should be used for the job.

So if you have a mix of network automation playbooks, some using the old provider method (Ansible 2.4 and earlier) and some using the new httpapi or network_cli plugins (Ansible 2.5 and later), you can easily assign each job its own Ansible version. This feature is also useful if development and production use different versions of Ansible. In other words, you can safely upgrade Tower without being forced to standardize on a single version of Ansible Engine, which greatly increases flexibility when automating different types of network equipment, environments, and use cases.

Additional information on using virtualenv can be found in the documentation.
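Preparing such an environment is ordinary Python tooling. The sketch below uses the standard library venv module to create an isolated environment and builds, without running, the pip command that would pin a specific Ansible version into it. Tower 3.3 itself ran on Python 2.7 with virtualenv, and its documentation specifies where custom environments must live and which extra packages they need, so treat the directory names here as placeholders and follow the Tower documentation for the real procedure.

```python
import tempfile
import venv
from pathlib import Path


def create_env(env_dir, with_pip=True):
    """Create an isolated Python environment (with_pip bootstraps pip via ensurepip)."""
    venv.create(env_dir, with_pip=with_pip)
    return Path(env_dir)


def pip_install_command(env_dir, ansible_version):
    """Build, without running, the pip command that pins Ansible in the env (POSIX layout)."""
    pip = Path(env_dir) / "bin" / "pip"
    return [str(pip), "install", "ansible=={}".format(ansible_version)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # with_pip=False keeps the demo independent of ensurepip availability.
        env = create_env(Path(tmp) / "ansible-2.4.2", with_pip=False)
        print((env / "pyvenv.cfg").exists())
        print(pip_install_command(env, "2.4.2"))
```

With pip bootstrapped, you would run the returned command (for example with subprocess.run(cmd, check=True)) once per version, giving one environment for provider-era playbooks and another for network_cli and httpapi playbooks.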

Source: https://habr.com/ru/post/436318/