How I create VKontakte recommendation service

The summer ended, it was a particularly cold August. My 11th grade started and I realized that now is the last chance (spoiler: no) to somehow improve my professional competence. For several years I have been diligently doing various IT projects, some one, some in a team. But all the sons of my mother's friend are already doing something beautiful. Perhaps useless, but beautiful in appearance. Someone does a sticky simulation of particles in the form of gifs, someone plunges into machine learning and does all sorts of style transfers. And I'm worse? I also want!

An example of the simulation is also under the cut

An example of the simulation of particles from my friend

It was with this thought that my study of the topic of machine learning began. And in terms of training for me there was nothing new, as in any other field of IT practice is needed here. But what if I’m not interested in any tonality analyzers? We have to invent something of our own.

Once again, leafing through the VKontakte news feed, I realized that the communities of this social network are a real treasure for data science. If you process the text, memes in the form of pictures or music from collections, you can get a huge slice of relevant information about modern people: popular music genres, lexicon, or the time of day of the most active people. This field is for many discoveries.

But how much will such statistics be needed for ordinary people? As if I miss my music, or I can’t go to the “Popular” section? It means that we need to think something practical, something that may appeal to at least some significant percentage of users.

It is worth noting that a couple of months before that I read a cool article about creating my own home search engine , which really hurt me. As well as the author, I felt a great craving for huge projects, which day and night handle a huge number of equally great thousands of gigabytes of information.

And now, back in August, which was a little warmer than at the beginning of the article. When I realized that I now have a huge source of information, I realized that it was time. It's time for your own monstrous system. But the main question remained a few more days - what should I do with all this? What to offer the user? I will not torment the reader, I will tell only that it is very difficult for me, like some of my friends, to search for new VKontakte groups that I might like. Now every first public name has a random set of words. Admins are trying to make it the most absurd, probably, this is some kind of race that is understandable only for them.

And then I decided to write a service that will help the user with the choice of communities, recommend what you can subscribe to. That was my idea.

The introduction was not accidental, it should convey my emotions and show that the idea did not appear out of the blue. Actually, like any other ideas on Habré.

My service is still in operation, it has been going on for more than four months (if you count from the moment of the first successful experiment). But I already have an experience that I want to share with you. There will be a brief, concise description of the project. Further I will paint some basic points. And if the article is especially hard for habrayusers, then its continuation will also be released, in which there will be more purely technical information and code.

Everything consists of three parts:

The bot functionality includes searching for new groups and “stripping” text and other information into the database. The engine is also engaged in the further processing of this data, as I will write below. And the site simply allows users to use all of this.

There is nothing new here. I just take the profile of a person in VK and get a list of groups and his friends from him. All this happens with the VK API. And if this API manages to get the list of groups of the user and his friends, then he cannot cope with getting the contents of the groups ... I just bump into the restriction and that's it. Then I remembered that some time ago, VKontakte was promoting its awesome system just for such things. And the name of this system is streaming api.

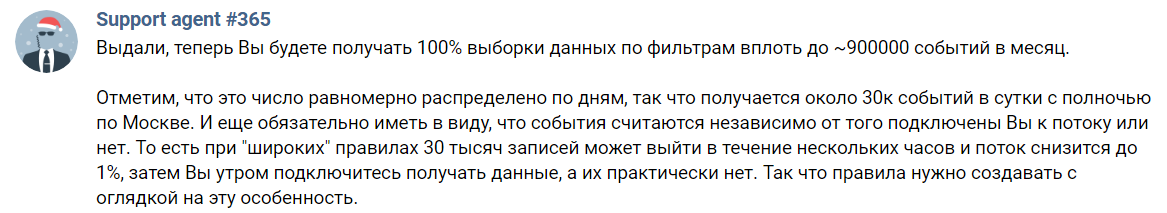

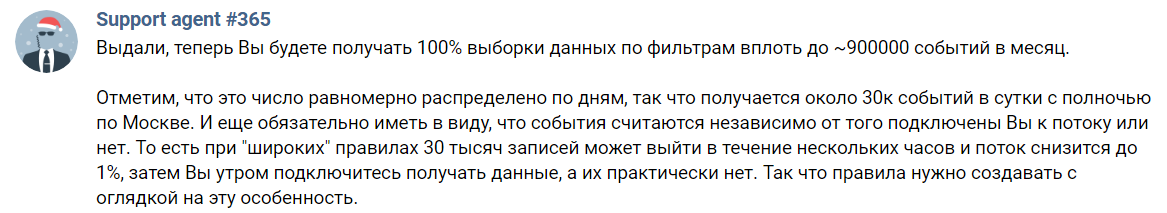

I hope agent # 365 will not hate me all 365 days a year for this screenshot

That is, I can only get 30K events per day. And this number includes comments, and just repost. It is necessary to specify also some words-tags, messages will come only with them. Some of the remaining posts just do not interest me, as it is on the wall with users. It remains quite a bit. For reference, in my current implementation I can receive up to 8.5M records in several days of incomplete uptime (in total - about 10 hours, but there were no exact measurements).

Here I have to say about one rule that I discovered from all this experiment. Never judge a group by one post. Especially if you - artificial intelligence, susceptible to such noise. So, you need at least a few posts to create an objective characteristic of the public. Now let's estimate that some groups with really high-quality content release it every few weeks. And even then I can skip it because of the imperfect Streaming API. And if I get it, how long will it take me to collect the content bit by bit?

I decided that for too long and went the other way. Since I can't get a neat answer from VKontakte in JSON format, then I will parse the walls of the communities. Yes, the task is a little more complicated, and its solution is slowing down, but I have no alternative. That's how I started writing the first block of my system. I wrote it, by the way, in Java using Jsoup, a library that makes it very convenient to extract content from HTML text. I did not forget about the processing of the publication date of the last post, I do not need dead communities, I simply do not index them. Also discarded and posts marked with advertising. Not all administrators make such notes, but this problem is not so easy to solve, I could not create an adequate advertising filter and because of this, for the time being, I refuse such filtering.

This is probably the most interesting part of the project, but I will not describe everything in detail in this publication. If someone will be interested in the details, then ask them to me in all possible ways.

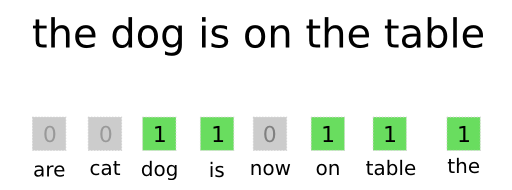

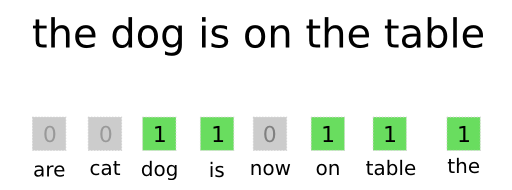

The easiest of all ways to present text in a format understandable for a neural network is bag-of-words.

Another important thing is not to forget about the fact that, for example, the words "Habr" and "(in) Habré" are almost the same to understand. But for the algorithm described above, these are completely different words. In order to fix this, I use the JMorphy2 morphological analyzer. This is the port of the original PyMorphy2 under Java. He can do a lot of cool things, for example, change the shape of a word (case, gender, number, etc.). I need it to get the initial form of the word. As you know, the initial forms of the "identical" words are the same. And this solves the problem above.

Example of a list of words in the dictionary and their numbers (separated by a space)

The list above shows that the word "dragon" has not turned into a "dragon". This is a bit wrong, but even this kind of text preprocessing is enough. And in general, this library has many errors, but most of them do not affect the operation of the system.

The service is focused on the Russian-speaking audience. And for simplicity, only the Russian language is being processed now. All characters (letters among them) from other alphabets are thrown away, as are punctuation marks, numbers, emoji ... Again, a simplification. You also need to remember to filter words from languages that partially use the Russian alphabet, but add their own letters there (Ukrainian, for example).

But I will continue from the moment any post from VKontakte has already turned into a vector (I call it vectorization). Here connects the following link: neural network. I decided to use it as it was interesting to me and managed to find a suitable architecture for my task. This was helped by the first article from the series “Autoencoders in Keras” . And yes, I decided to use the most common autoencoder, as it is beneficial in terms of speed of work and training. But let's get everything in order.

As for all other autoencoders, it is necessary to create two neural networks (encoder and decoder) and combine them into one. I did this as follows:

But why do we need two networks? And in general, the author, you did not describe why this is all !

Easy, now everything will be. To teach, for example, only the encoder is impossible - it will be just not clear how much he made the correct prediction. And for this we are training the second network, which will immediately decode the output of the first (decoder). The same input and output data is still used. A bunch of two networks (just called autoencoder) learns to get the same output from the input data. But all data passes through a narrow "bottleneck" in the form of 64 neurons. This discards the most unnecessary information. Thus, neural networks learn to transmit the most important information about the text as accurately as possible and to emit all noises. Then I just remove the decoder and that's it. You can get a better result, but then you need to either increase the dimension of the output layer of the encoder / input decoder. Then it will be necessary to store more values in the database, it will weigh more + all operations on long vectors will be longer (more on that later). Or you can add layers / neurons, but then the training and vectorization will be longer.

The encoder itself allows "to compress the dimension of the vector." Remember that vector of zeros and ones? So, the encoder allows you to change its size from 30K to 64 without much loss of important information. After this step, you can normally compare two vectors to determine their similarities ...

But we are looking at the work of the service on the recommendation of the communities VK, and not individual records. This means that we need to somehow get the vector of the entire public. This is done very easily, mathematics at the fifth grade level. This is a bit rough method, but it works. I just take and add all the vectors of records from one community (for example, take three small vectors {1, 2, 3}, {2, 3, 4}, {0, 4, 2}, we get the vector {3, 9, 9 }). And I divide each of its elements by the number of vectors (we get the vector {1, 3, 3}). Everything, we have combined all the records of the group into one. In the future, you need to come up with something more cunning, so that you can reject noise in the form of posts with advertising, for example. But now this is enough.

We proceed to the mathematical part itself, but since for some reason everyone is afraid of it, I will sign it as strongly as possible. Let's start with vectors in a mathematical sense. Vector - directed segment. This is such a thing, which has the coordinates of the beginning (it is most convenient to take them with zeros) and the coordinates of the end. It is the latter that are written in curly brackets. For example, the coordinates of the end of the vector {1, 0, 1}YouTube is a point with coordinates (1, 0, 1). But we consider two two-dimensional vectors, veca {5, 2} and vecb {50}. Let's build them in one coordinate system:

Let the vector vecaa pink, vecba - yellow. Then, by the mathematical fact of the ninth class, the cosine of the angle between them is equal to the ratio of their scalar product to the product of their modules.

Dot product < veca , vecb > equal to the sum of the products of the corresponding elements, we have < veca, vecb>=5∗5+2∗0=25 .

The module of the vector is according to the following formula:

Where ax and ay this is the first and second value of vector a, respectively. So:

|a|= sqrt52+22= sqrt29

|b|= sqrt52+02=5

Combining all the formula we get:

cos( veca, vecb)= frac25 sqrt29∗5 approx0.93

The correctness of the calculations can be checked through the trigonometric functions of the resulting right triangle. In the project, all calculations are made using the following formulas, but I only have the coordinates of the end of a vector of two, but sixty-four.

What does this information give? As it turned out, the greater the cosine value (the smaller the angle), the more similar the texts that correspond to the vectors. Thus, the task of finding a group most similar to group A is reduced to finding the cosine of the angle between the vector of this group and all others. Then the engine leaves all groups in which the cosine value paired with A will be greater, say, 0.99. At this stage, you can simply output the result, so it was with me before. But this process is very long already at 100K communities, and what will happen, say, at 1M?

To solve this problem, I use the graph. All groups are represented as its vertices, and two points are connected if the cosine of the angle between the corresponding vectors is greater than 0.99. But if the structure with the name of the graph is incomprehensible to you, then you can simply imagine that I pre-calculate the most similar pairs of communities in the database and save them. And do not forget to update the graph as new groups are added to the database. Yes, it is very long, but still easier for the user than it was before.

I will not describe everything about the site, as this is the simplest and most boring part. I have never written websites from scratch, always used various ready-made engines. But in this project, I realized that it would be easier to make a self-recording. So, the site engine is written in Python 3 using Flask. And the Ninja2 template engine is used, which makes it more convenient to substitute dynamic values into static HTML (and js) code. I did not forget about authorization via VKontakte, since this is the best option. A designer, like a coder, is simply terrible of me; if someone wants to join the project, you are welcome.

The first line of results from the site

I encountered some unpleasant situations that I successfully resolved. The above is a problem with the VK API and its solution was especially unpleasant for the service, as the speed dropped very much. If earlier I received a hundred posts in one request, then now I need to do a few downloads of large HTML code, parse it and only after that process. Now there is a problem with the restriction on getting users, their friends and groups, but this limit does not really hinder at this stage. Then you have to solve it the same way as the first one.

The text in the modern Internet every day becomes less significant. For many years, VKontakte has many groups with videos, pictures and music. And to get good recommendations you need to process them.

But this is not the text and we need truly serious computing power. For example, this is a top-end video card, but now I don’t have it, but I don’t want to take a server for all of this (it’s too early). But in general, I already have the neural network architecture for this task. I'm going to use some kind of neuron to classify images, “cutting off” the upper part from it, which is responsible for the classification of objects itself. All that will map the signs of the image will remain. I, probably, will compress this card with one more encoder and that's it, all subsequent operations are similar to “text”.

There remains one more unresolved question regarding how many requests to the VKontakte site I can do per unit of time. Or for the day. Now I have not rested on this restriction, but it can happen at the most inappropriate moment.

I urgently need a beautiful dashboard and statistics. It is already in the initial state, but it must be finished. From it I want to manage the start / stop of microservices (namely, the engine consists of them), the size of the queues, the processing speed and all that. Well, statistics, who would not want to look at your numbers? Of course, I need to optimize everything and make it suitable for users, in particular, I need to redo the external part of the site, since it does not meet my standards of convenience.

I managed to embark on the path of creating a service with an interesting (at least for me) structure that I am going to use for one of the competitions, allowing me to enter the best university in Russia (I will not say what kind of first non-classical one is). I think that if you still have to work, you can squeeze out of it something more interesting, for example, the analyzer of the quality of publications, make analytics service for the community administration or something else.

I came across many things from the text above for the first time. This means that something I could do wrong. If my readers know what can be improved / corrected, where I may have other problems, and so on - please write about it in the comments. And I ask for criticism about the very quality of the article, so that I can improve it in the next times. Thank.

An example of the simulation is also under the cut

An example of the simulation of particles from my friend

It was with this thought that my study of the topic of machine learning began. And in terms of training for me there was nothing new, as in any other field of IT practice is needed here. But what if I’m not interested in any tonality analyzers? We have to invent something of our own.

Once again, leafing through the VKontakte news feed, I realized that the communities of this social network are a real treasure for data science. If you process the text, memes in the form of pictures or music from collections, you can get a huge slice of relevant information about modern people: popular music genres, lexicon, or the time of day of the most active people. This field is for many discoveries.

But how much will such statistics be needed for ordinary people? As if I miss my music, or I can’t go to the “Popular” section? It means that we need to think something practical, something that may appeal to at least some significant percentage of users.

It is worth noting that a couple of months before that I read a cool article about creating my own home search engine , which really hurt me. As well as the author, I felt a great craving for huge projects, which day and night handle a huge number of equally great thousands of gigabytes of information.

And now, back in August, which was a little warmer than at the beginning of the article. When I realized that I now have a huge source of information, I realized that it was time. It's time for your own monstrous system. But the main question remained a few more days - what should I do with all this? What to offer the user? I will not torment the reader, I will tell only that it is very difficult for me, like some of my friends, to search for new VKontakte groups that I might like. Now every first public name has a random set of words. Admins are trying to make it the most absurd, probably, this is some kind of race that is understandable only for them.

And then I decided to write a service that will help the user with the choice of communities, recommend what you can subscribe to. That was my idea.

The introduction was not accidental, it should convey my emotions and show that the idea did not appear out of the blue. Actually, like any other ideas on Habré.

My service is still in operation, it has been going on for more than four months (if you count from the moment of the first successful experiment). But I already have an experience that I want to share with you. There will be a brief, concise description of the project. Further I will paint some basic points. And if the article is especially hard for habrayusers, then its continuation will also be released, in which there will be more purely technical information and code.

Everything consists of three parts:

- search bot (if you can call it that)

- data processing engine

- website for users (with control panel and monitoring for admins)

The bot functionality includes searching for new groups and “stripping” text and other information into the database. The engine is also engaged in the further processing of this data, as I will write below. And the site simply allows users to use all of this.

Search bot

There is nothing new here. I just take the profile of a person in VK and get a list of groups and his friends from him. All this happens with the VK API. And if this API manages to get the list of groups of the user and his friends, then he cannot cope with getting the contents of the groups ... I just bump into the restriction and that's it. Then I remembered that some time ago, VKontakte was promoting its awesome system just for such things. And the name of this system is streaming api.

Streaming API is a tool for getting a random selection of records from VK. It’s written on the description page that you can simply get up to 1% of all information in order to get up to a hundred, you need to write to Support and explain your intentions to them.It would seem that everything is great. But no. I, like many, probably, missed the most important word in the description above. And this is the preposition "before." Nobody is going to give you all 100% of the data. It's just a beautiful top bar and that's it. In fact, we get this:

I hope agent # 365 will not hate me all 365 days a year for this screenshot

That is, I can only get 30K events per day. And this number includes comments, and just repost. It is necessary to specify also some words-tags, messages will come only with them. Some of the remaining posts just do not interest me, as it is on the wall with users. It remains quite a bit. For reference, in my current implementation I can receive up to 8.5M records in several days of incomplete uptime (in total - about 10 hours, but there were no exact measurements).

Here I have to say about one rule that I discovered from all this experiment. Never judge a group by one post. Especially if you - artificial intelligence, susceptible to such noise. So, you need at least a few posts to create an objective characteristic of the public. Now let's estimate that some groups with really high-quality content release it every few weeks. And even then I can skip it because of the imperfect Streaming API. And if I get it, how long will it take me to collect the content bit by bit?

I decided that for too long and went the other way. Since I can't get a neat answer from VKontakte in JSON format, then I will parse the walls of the communities. Yes, the task is a little more complicated, and its solution is slowing down, but I have no alternative. That's how I started writing the first block of my system. I wrote it, by the way, in Java using Jsoup, a library that makes it very convenient to extract content from HTML text. I did not forget about the processing of the publication date of the last post, I do not need dead communities, I simply do not index them. Also discarded and posts marked with advertising. Not all administrators make such notes, but this problem is not so easy to solve, I could not create an adequate advertising filter and because of this, for the time being, I refuse such filtering.

Engine

This is probably the most interesting part of the project, but I will not describe everything in detail in this publication. If someone will be interested in the details, then ask them to me in all possible ways.

The easiest of all ways to present text in a format understandable for a neural network is bag-of-words.

The process of vectorization and more about BOW

I prepare in advance the dictionary of all frequent words (not forgetting to exclude direct very frequent ones, such as “a”, “what”, “which” and others; they do not distinguish their text from others), in it each word has its number. Then, when I need to process the text with a neural network, I get the number of each word from the dictionary (if it is there) and get a vector (aka array in programming). This is such an ordered set of numbers, in which in place of each number of the word from the text there is a unit (see picture above). It turns out quite understandable for the network data type. I have the length of each vector is 30,000, about as many adequate words I gathered in the early stages of development.

Another important thing is not to forget about the fact that, for example, the words "Habr" and "(in) Habré" are almost the same to understand. But for the algorithm described above, these are completely different words. In order to fix this, I use the JMorphy2 morphological analyzer. This is the port of the original PyMorphy2 under Java. He can do a lot of cool things, for example, change the shape of a word (case, gender, number, etc.). I need it to get the initial form of the word. As you know, the initial forms of the "identical" words are the same. And this solves the problem above.

ноль 6929 маслов 21903 дракончик 25126 цветковый 11441 будда 7374 автобус 1925 супер 1626 плойка 23128 награждение 6241 истеричный 25584 Example of a list of words in the dictionary and their numbers (separated by a space)

The list above shows that the word "dragon" has not turned into a "dragon". This is a bit wrong, but even this kind of text preprocessing is enough. And in general, this library has many errors, but most of them do not affect the operation of the system.

The service is focused on the Russian-speaking audience. And for simplicity, only the Russian language is being processed now. All characters (letters among them) from other alphabets are thrown away, as are punctuation marks, numbers, emoji ... Again, a simplification. You also need to remember to filter words from languages that partially use the Russian alphabet, but add their own letters there (Ukrainian, for example).

But I will continue from the moment any post from VKontakte has already turned into a vector (I call it vectorization). Here connects the following link: neural network. I decided to use it as it was interesting to me and managed to find a suitable architecture for my task. This was helped by the first article from the series “Autoencoders in Keras” . And yes, I decided to use the most common autoencoder, as it is beneficial in terms of speed of work and training. But let's get everything in order.

As for all other autoencoders, it is necessary to create two neural networks (encoder and decoder) and combine them into one. I did this as follows:

from keras.layers import Input, Dense, Flatten, Reshape from keras.models import Model # Размерность кодированного представления encoding_dim = 64 # Энкодер inp = Input(shape=(30000, )) # 30000 - размерность # Кодированное полносвязным слоем представление encoded = Dense(encoding_dim, activation='linear')(inp) # Декодер # Раскодированное другим полносвязным слоем input_encoded = Input(shape=(encoding_dim,)) decoded = Dense(30000, activation='sigmoid')(input_encoded) # Другие модели можно так же использовать как и слои encoder = Model(inp, encoded, name="encoder") decoder = Model(input_encoded, decoded, name="decoder") autoencoder = Model(inp, decoder(encoder(inp)), name="autoencoder") But why do we need two networks? And in general, the author, you did not describe why this is all !

Easy, now everything will be. To teach, for example, only the encoder is impossible - it will be just not clear how much he made the correct prediction. And for this we are training the second network, which will immediately decode the output of the first (decoder). The same input and output data is still used. A bunch of two networks (just called autoencoder) learns to get the same output from the input data. But all data passes through a narrow "bottleneck" in the form of 64 neurons. This discards the most unnecessary information. Thus, neural networks learn to transmit the most important information about the text as accurately as possible and to emit all noises. Then I just remove the decoder and that's it. You can get a better result, but then you need to either increase the dimension of the output layer of the encoder / input decoder. Then it will be necessary to store more values in the database, it will weigh more + all operations on long vectors will be longer (more on that later). Or you can add layers / neurons, but then the training and vectorization will be longer.

The encoder itself allows "to compress the dimension of the vector." Remember that vector of zeros and ones? So, the encoder allows you to change its size from 30K to 64 without much loss of important information. After this step, you can normally compare two vectors to determine their similarities ...

But we are looking at the work of the service on the recommendation of the communities VK, and not individual records. This means that we need to somehow get the vector of the entire public. This is done very easily, mathematics at the fifth grade level. This is a bit rough method, but it works. I just take and add all the vectors of records from one community (for example, take three small vectors {1, 2, 3}, {2, 3, 4}, {0, 4, 2}, we get the vector {3, 9, 9 }). And I divide each of its elements by the number of vectors (we get the vector {1, 3, 3}). Everything, we have combined all the records of the group into one. In the future, you need to come up with something more cunning, so that you can reject noise in the form of posts with advertising, for example. But now this is enough.

We proceed to the mathematical part itself, but since for some reason everyone is afraid of it, I will sign it as strongly as possible. Let's start with vectors in a mathematical sense. Vector - directed segment. This is such a thing, which has the coordinates of the beginning (it is most convenient to take them with zeros) and the coordinates of the end. It is the latter that are written in curly brackets. For example, the coordinates of the end of the vector {1, 0, 1}

Let the vector vecaa pink, vecba - yellow. Then, by the mathematical fact of the ninth class, the cosine of the angle between them is equal to the ratio of their scalar product to the product of their modules.

Dot product < veca , vecb > equal to the sum of the products of the corresponding elements, we have < veca, vecb>=5∗5+2∗0=25 .

The module of the vector is according to the following formula:

| veca|= sqrta2x+a2y

Where ax and ay this is the first and second value of vector a, respectively. So:

|a|= sqrt52+22= sqrt29

|b|= sqrt52+02=5

Combining all the formula we get:

cos( veca, vecb)= frac25 sqrt29∗5 approx0.93

The correctness of the calculations can be checked through the trigonometric functions of the resulting right triangle. In the project, all calculations are made using the following formulas, but I only have the coordinates of the end of a vector of two, but sixty-four.

What does this information give? As it turned out, the greater the cosine value (the smaller the angle), the more similar the texts that correspond to the vectors. Thus, the task of finding a group most similar to group A is reduced to finding the cosine of the angle between the vector of this group and all others. Then the engine leaves all groups in which the cosine value paired with A will be greater, say, 0.99. At this stage, you can simply output the result, so it was with me before. But this process is very long already at 100K communities, and what will happen, say, at 1M?

To solve this problem, I use the graph. All groups are represented as its vertices, and two points are connected if the cosine of the angle between the corresponding vectors is greater than 0.99. But if the structure with the name of the graph is incomprehensible to you, then you can simply imagine that I pre-calculate the most similar pairs of communities in the database and save them. And do not forget to update the graph as new groups are added to the database. Yes, it is very long, but still easier for the user than it was before.

Site

I will not describe everything about the site, as this is the simplest and most boring part. I have never written websites from scratch, always used various ready-made engines. But in this project, I realized that it would be easier to make a self-recording. So, the site engine is written in Python 3 using Flask. And the Ninja2 template engine is used, which makes it more convenient to substitute dynamic values into static HTML (and js) code. I did not forget about authorization via VKontakte, since this is the best option. A designer, like a coder, is simply terrible of me; if someone wants to join the project, you are welcome.

The first line of results from the site

Problems

I encountered some unpleasant situations that I successfully resolved. The above is a problem with the VK API and its solution was especially unpleasant for the service, as the speed dropped very much. If earlier I received a hundred posts in one request, then now I need to do a few downloads of large HTML code, parse it and only after that process. Now there is a problem with the restriction on getting users, their friends and groups, but this limit does not really hinder at this stage. Then you have to solve it the same way as the first one.

The text in the modern Internet every day becomes less significant. For many years, VKontakte has many groups with videos, pictures and music. And to get good recommendations you need to process them.

But this is not the text and we need truly serious computing power. For example, this is a top-end video card, but now I don’t have it, but I don’t want to take a server for all of this (it’s too early). But in general, I already have the neural network architecture for this task. I'm going to use some kind of neuron to classify images, “cutting off” the upper part from it, which is responsible for the classification of objects itself. All that will map the signs of the image will remain. I, probably, will compress this card with one more encoder and that's it, all subsequent operations are similar to “text”.

There remains one more unresolved question regarding how many requests to the VKontakte site I can do per unit of time. Or for the day. Now I have not rested on this restriction, but it can happen at the most inappropriate moment.

Future plans

I urgently need a beautiful dashboard and statistics. It is already in the initial state, but it must be finished. From it I want to manage the start / stop of microservices (namely, the engine consists of them), the size of the queues, the processing speed and all that. Well, statistics, who would not want to look at your numbers? Of course, I need to optimize everything and make it suitable for users, in particular, I need to redo the external part of the site, since it does not meet my standards of convenience.

Conclusion

I managed to embark on the path of creating a service with an interesting (at least for me) structure that I am going to use for one of the competitions, allowing me to enter the best university in Russia (I will not say what kind of first non-classical one is). I think that if you still have to work, you can squeeze out of it something more interesting, for example, the analyzer of the quality of publications, make analytics service for the community administration or something else.

I came across many things from the text above for the first time. This means that something I could do wrong. If my readers know what can be improved / corrected, where I may have other problems, and so on - please write about it in the comments. And I ask for criticism about the very quality of the article, so that I can improve it in the next times. Thank.

Source: https://habr.com/ru/post/436636/