Combining projects in different data centers

In this article, we look at why the traditional approach of interconnecting local networks at L2 becomes inefficient as the amount of equipment grows significantly, and we explain which problems we managed to solve while connecting projects located at different sites.

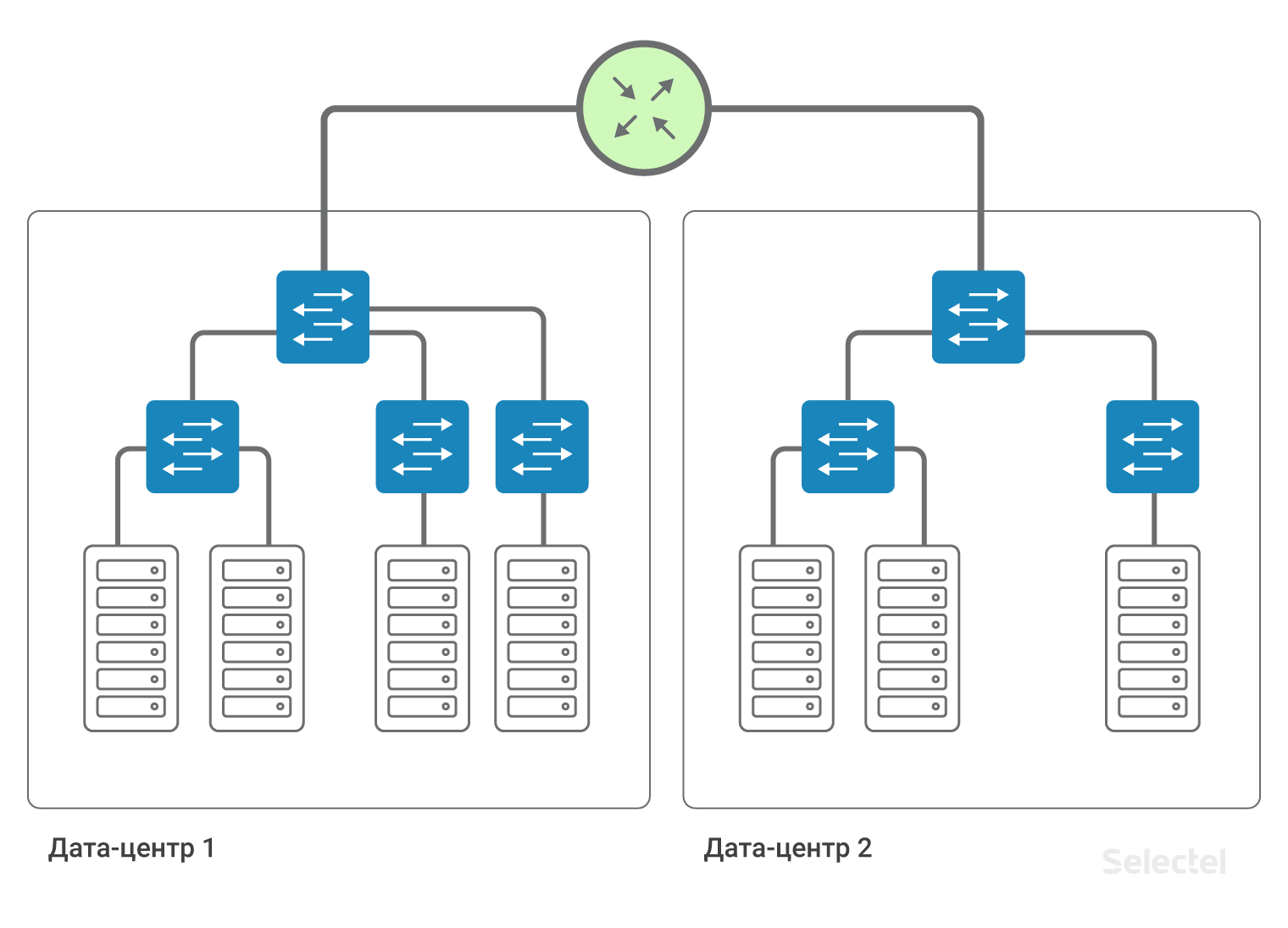

Typical L2 scheme

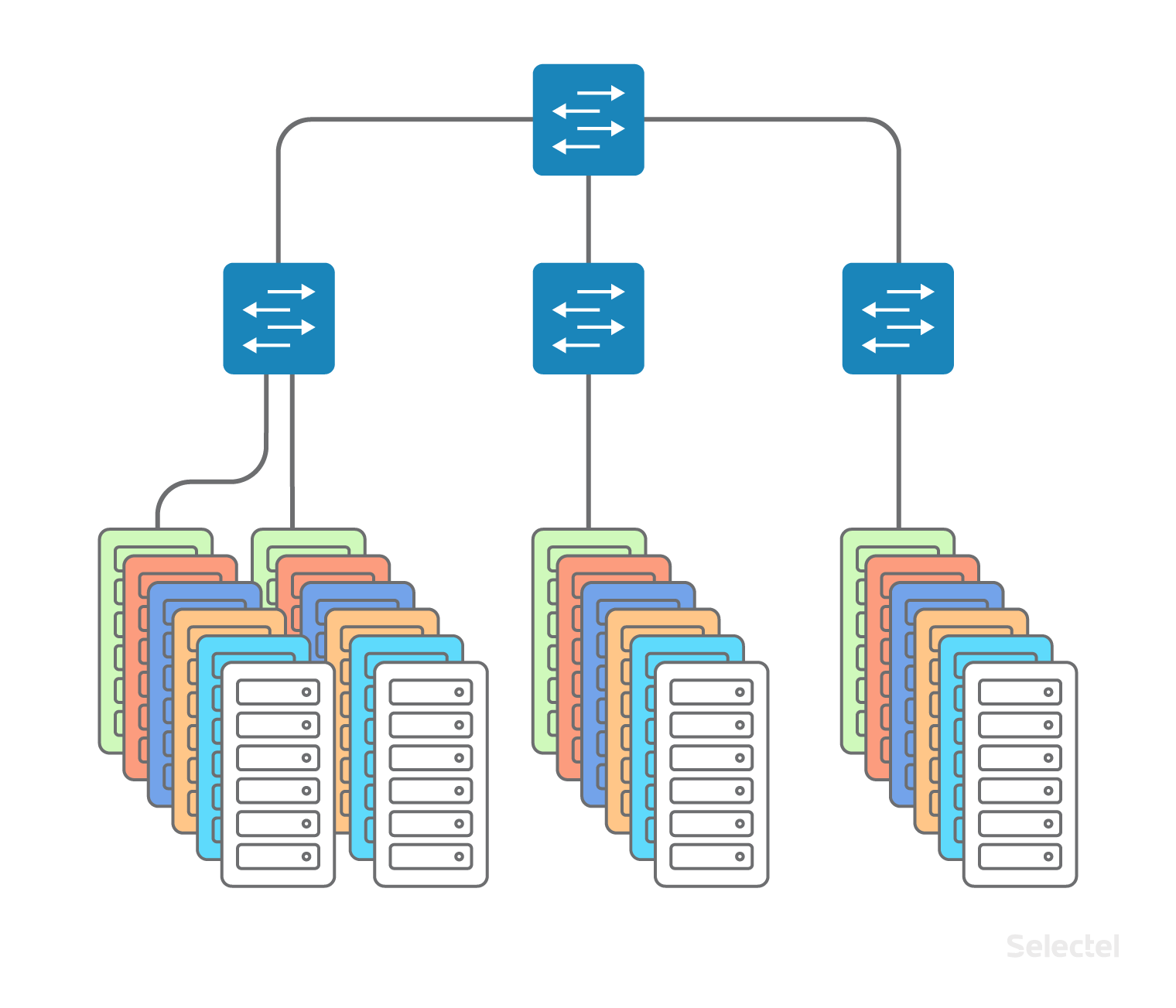

As the IT infrastructure in the data center grows, customers need to combine servers, storage systems, and firewalls into a single network. For this purpose, Selectel initially suggests using a local network.

The local network is designed as a classic “campus” network within a single data center, except that the access switches are located directly in the racks with the servers. The access switches are then connected to a single aggregation-level switch. Each client can order a local network connection for any device that they rent from us or place in our data center.

The local network is built on dedicated access and aggregation switches, so problems in the Internet-facing network do not affect the local network.

It doesn't matter which rack the next server is in: Selectel connects the servers into a single local network, and you don’t need to think about switches or server locations. You can order a server when you need it, and it will be connected to the local network.

L2 works great while the data center is small and not all racks are filled. But as the number of racks, servers per rack, switches, and clients increases, the scheme becomes much more difficult to maintain.

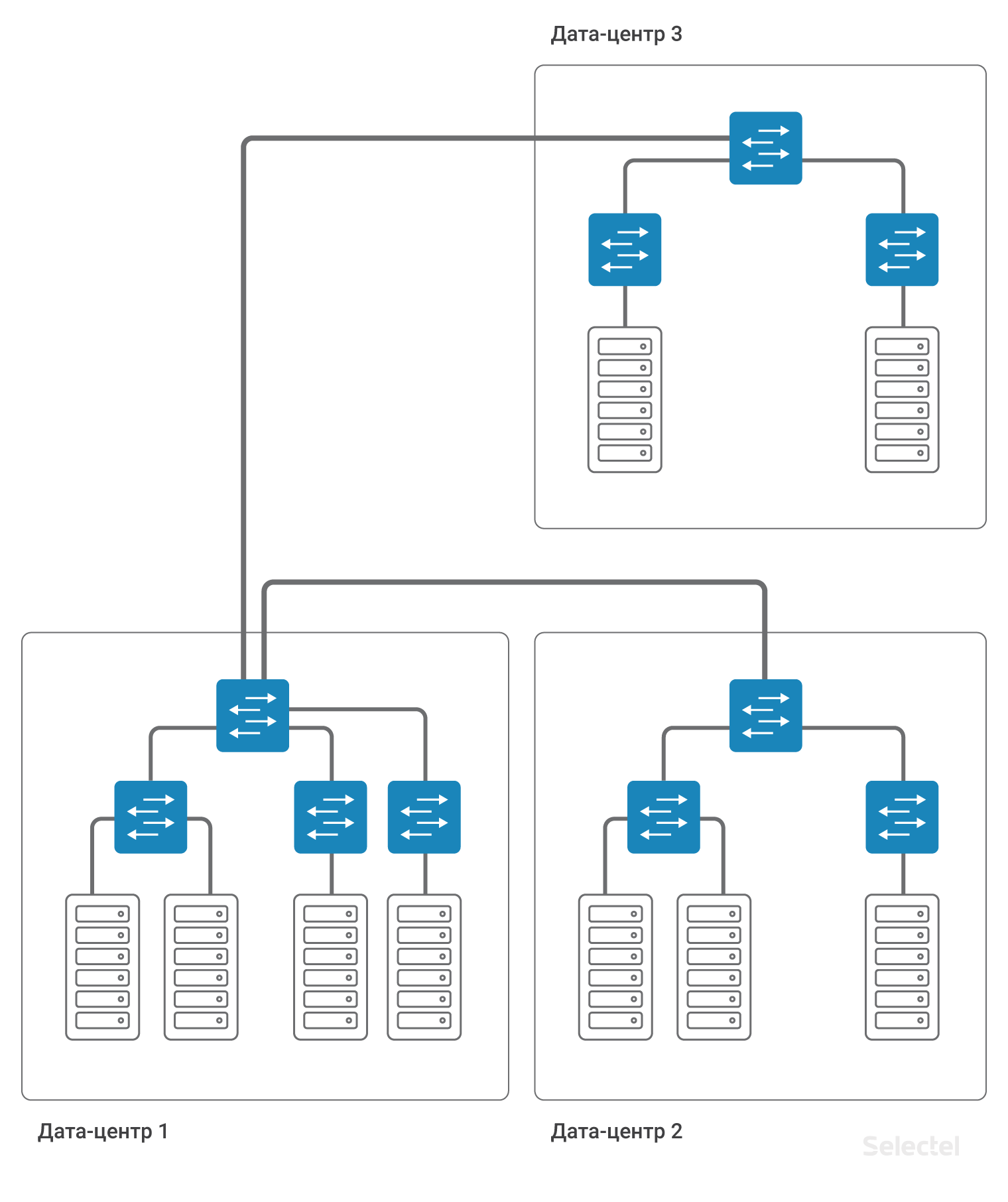

The servers of one client can be located in several data centers, either for disaster recovery or because a server cannot be placed in the existing data center (for example, all racks and all slots are occupied). These servers also need local network connectivity between the data centers.

As the number of data centers, racks, and servers grows, the scheme becomes more and more complicated. At first, connectivity between servers in different data centers was provided simply at the level of the aggregation switches, using VLANs.

But the VLAN ID space is very limited: the 12-bit identifier allows only 4,094 usable VLAN IDs. Each data center therefore has to be given its own set of VLANs, which reduces the number of identifiers available for use between data centers.
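The arithmetic behind this limit can be sketched in a few lines. The 12-bit field width and the two reserved values (0 and 4095) come from IEEE 802.1Q; the even split between data centers is a hypothetical illustration, not a description of how Selectel actually allocates VLANs.

```python
# Sketch: how a shared VLAN ID space shrinks when every data center
# needs its own non-overlapping block (hypothetical even split).

VLAN_ID_BITS = 12            # width of the 802.1Q VLAN ID field
RESERVED_IDS = {0, 4095}     # 0x000 and 0xFFF cannot be assigned


def usable_vlan_ids() -> int:
    """Number of VLAN IDs that can actually be assigned."""
    return 2 ** VLAN_ID_BITS - len(RESERVED_IDS)


def vlans_per_data_center(data_centers: int) -> int:
    """IDs left for each site if the space is split evenly."""
    return usable_vlan_ids() // data_centers


if __name__ == "__main__":
    for sites in (1, 2, 3, 4):
        print(sites, vlans_per_data_center(sites))
```

With three sites sharing one L2 domain, each site would be left with roughly a third of the space, before any VLANs are set aside for links between the sites.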

L2 problems

When an L2 scheme with VLANs is used, incorrect operation of a single server in the data center can interrupt services on other servers. The most frequent problems include:

- Problems with STP (Spanning-Tree Protocol)

- Broadcast storms

- Problems with incorrect multicast processing

- Human factor (moving links, moving VLANs)

- Problems with L2 redundancy

- Problems with unknown-unicast traffic

- Problems with the number of MAC addresses

STP problems are often related to the settings of client servers or client equipment. Unlike popular traffic exchange points, we cannot completely filter STP on access ports or shut a port down when an STP BPDU arrives on it, because a number of network equipment manufacturers build basic data center switch functionality, such as loop detection, on top of STP.

If STP does not work correctly on the client side, the entire STP domain of at least one access switch may be affected. STP extensions such as MSTP are not a solution either, since the number of ports, VLANs, and switches often exceeds the architectural scalability limits of the STP protocol.

Broadcast

The network in a data center can be built on devices from different manufacturers. Sometimes even a difference in software versions is enough for switches to handle STP differently. For example, the Dubrovka 3 data center has 280 racks, which exceeds the maximum possible number of switches in one STP domain.

With STP used widely in such a network, the reaction time to any change, even simply turning a port on or off, would exceed every timeout threshold. You would hardly want to lose network connectivity for a few minutes just because one of the clients turned a port on.

Problems with broadcast traffic often arise both from incorrect actions on the server (for example, creating a single bridge across several server ports) and from incorrect software settings on the servers. We try to mitigate such problems by limiting the amount of broadcast traffic that enters our network. But we can only do this per server port, and if five servers are connected to one switch, each staying below the configured threshold, together they can still generate enough traffic to trigger storm control on the aggregation switch. In our own experience, a broadcast storm from the server side can also be caused by a specific failure of the server's network card.

To protect the entire network, the aggregation switch will shut down the port on which the anomaly occurred. Unfortunately, this takes down not only the five servers that caused the incident but also other servers (up to several racks in the data center).
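The reasoning about per-port limits can be illustrated with a small sketch. Both threshold values and the traffic rates are hypothetical numbers chosen only to show the effect; real storm-control settings depend on the equipment and are not given in this article.

```python
# Sketch: per-port broadcast limits do not bound the aggregate rate.
# All thresholds and rates below are hypothetical.

ACCESS_PORT_LIMIT_PPS = 1_000    # assumed limit on each server port
AGGREGATION_LIMIT_PPS = 4_000    # assumed limit on the aggregation port


def all_ports_within_limit(rates_pps):
    """True if no single server port exceeds its own threshold."""
    return all(rate <= ACCESS_PORT_LIMIT_PPS for rate in rates_pps)


def aggregation_port_tripped(rates_pps):
    """True if the summed traffic exceeds the aggregation threshold."""
    return sum(rates_pps) > AGGREGATION_LIMIT_PPS


# Five servers, each sending 900 broadcast packets per second.
server_rates = [900] * 5

if __name__ == "__main__":
    print(all_ports_within_limit(server_rates))
    print(aggregation_port_tripped(server_rates))
```

Each port looks healthy on its own, yet the uplink sees the sum of all five, which is what the aggregation switch reacts to.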

Multicast

Problems with incorrect multicast handling are very specific: they arise from a combination of misbehaving software on the server and software on the switch. For example, Corosync is configured in multicast mode between several servers. Normally, hello packets are exchanged in small volumes. But in some cases, servers running Corosync can send a lot of packets. This volume requires either special switch configuration or properly working handling mechanisms (IGMP join and others). If these mechanisms misfire or thresholds are triggered, there may be service interruptions that affect other customers. Of course, the fewer clients there are on a switch, the lower the likelihood of problems caused by another client.

The human factor is a rather unpredictable source of problems when working with network equipment. When there is a single network administrator who organizes the work well, documents actions, and considers their consequences, the probability of an error is fairly small. But when the amount of equipment in operation grows, and there are many employees and many tasks, a completely different approach to organizing the work is required.

Some standard operations are automated to avoid human error, but many operations currently cannot be automated, or the cost of automating them is unreasonably high. Examples include physically moving patch cords on patch panels, connecting new links, and replacing existing links: everything that involves physical contact with the structured cabling system (SCS). Yes, there are patch panels that can be switched remotely, but they are very expensive, require a lot of preparatory work, and are very limited in their capabilities.

No automatic patch panel will lay a new cable when one is needed. You can also make a mistake when configuring a switch or router: specify the wrong port number or VLAN number, make a typo when entering a numeric value, or add settings without considering their effect on the existing configuration. As the scheme becomes more complex and the redundancy scheme more complicated (for example, because the current scheme has reached its scaling limit), the probability of human error increases. Anyone can make a mistake, no matter which device is being configured: a server, a switch, a router, or some kind of transit device.

L2 redundancy seems, at first glance, like a simple task for small networks. The Cisco ICND course covers the basics of STP, a protocol originally intended precisely for redundancy at L2. STP has many limitations that are, as they say, “by design”. We must not forget that any STP domain has a very limited “width”: the number of devices in one STP domain is quite small compared to the number of racks in a data center. In its original version, STP divides links into active and backup ones, so the uplinks are not fully utilized during normal operation.

Other L2 redundancy protocols impose their own limitations. ERPS (Ethernet Ring Protection Switching), for example, constrains the physical topology, the number of rings per device, and the utilization of all links. Other protocols usually involve proprietary modifications by individual manufacturers or tie the network to a single chosen technology (for example, a TRILL/SPBm fabric on Avaya equipment).

Unknown-unicast

Problems with unknown-unicast traffic deserve a separate mention. What is it? It is traffic destined for a specific IP address at L3 that is nevertheless flooded at L2, that is, sent to all ports belonging to the VLAN. This can happen for a number of reasons, for example, during a DDoS attack on an unused IP address, or when a typo in the server configuration specifies a non-existent address as a backup while a static ARP entry for that address still exists on the server for historical reasons. Unknown unicast appears when all the ARP table entries are present, but the recipient's MAC address is missing from the switching tables of the transit switches.

This happens, for example, when the port behind which the host with this address sits goes down very often. Transit switches rate-limit this type of traffic and often treat it the same way as broadcast or multicast. But unlike them, unknown-unicast traffic can be initiated “from the Internet”, not just from the client’s network. The risk is particularly high when the filtering rules on the border routers allow IP addresses to be spoofed from outside.
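The flooding behavior described above can be shown with a toy model of a learning switch. This is a deliberately simplified sketch: the class and its method names are hypothetical, MAC addresses are plain strings, and entry aging, VLANs, and rate limiting are left out.

```python
# Sketch: a toy learning switch that floods frames whose destination
# MAC is not in its switching table (unknown unicast).

class LearningSwitch:
    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}          # MAC address -> port it was seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame is sent out of."""
        # Learn (or refresh) where the sender lives.
        self.mac_table[src_mac] = in_port

        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown unicast: flood to every port except the ingress one.
            return sorted(self.ports - {in_port})
        if out_port == in_port:
            return []                # destination is on the same port
        return [out_port]

    def port_down(self, port):
        """Forget every MAC learned on a port that went down."""
        self.mac_table = {m: p for m, p in self.mac_table.items() if p != port}


if __name__ == "__main__":
    sw = LearningSwitch(ports=[1, 2, 3, 4])
    print(sw.receive(1, "aa", "bb"))   # "bb" unknown: flooded
    print(sw.receive(2, "bb", "aa"))   # "aa" known: single port
    sw.port_down(2)
    print(sw.receive(1, "aa", "bb"))   # "bb" forgotten: flooded again
```

In this model, a host whose port keeps going down is forgotten each time, so traffic addressed to it is repeatedly flooded to the whole segment.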

Even the sheer number of MAC addresses can sometimes be a problem. It would seem that in a data center of 200 racks with 40 servers per rack, the number of MAC addresses is unlikely to greatly exceed the number of servers. But this is no longer true: a virtualization system may be running on the servers, and each virtual machine can have its own MAC address, or even several (for example, when several network cards are emulated in a virtual machine). In total, a single rack of 40 servers can yield several thousand legitimate MAC addresses.
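A rough estimate shows how quickly the numbers add up. The rack and server counts come from the text; the number of virtual machines per server and of network cards per virtual machine are hypothetical values picked for illustration.

```python
# Sketch: estimating legitimate MAC addresses per rack and per data center.

RACKS = 200                  # from the text
SERVERS_PER_RACK = 40        # from the text
VMS_PER_SERVER = 50          # hypothetical
NICS_PER_VM = 2              # hypothetical


def macs_per_server(vms=VMS_PER_SERVER, nics_per_vm=NICS_PER_VM):
    """One MAC for the physical server plus one per emulated NIC."""
    return 1 + vms * nics_per_vm


def macs_per_rack():
    return SERVERS_PER_RACK * macs_per_server()


def macs_per_data_center():
    return RACKS * macs_per_rack()


if __name__ == "__main__":
    print(macs_per_rack())
    print(macs_per_data_center())
```

Under these assumptions a single rack already contributes about four thousand addresses, and the whole data center several hundred thousand, far more than the 8,000 physical servers alone.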

Such a number of MAC addresses can fill up the switching table on some switch models. In addition, certain switch models use hashing when populating the switching table, and some MAC addresses may cause hash collisions that lead to unknown-unicast traffic. Randomly cycling through MAC addresses on a rented server at a rate of, say, 4,000 addresses per second can overflow the switching table on the access switch. Naturally, the switches limit the number of MAC addresses that can be learned on each port, but the exact behavior of this limit depends on the specific implementation.
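The hash-collision effect can be demonstrated with a toy table. The bucket count, bucket depth, and the modulo "hash" are all assumptions made for the sketch; real switches use vendor-specific hash functions and table layouts that are not described here.

```python
# Sketch: a hashed MAC table with fixed-depth buckets. A MAC address
# can fail to be learned even though the table is far from full.

class HashedMacTable:
    def __init__(self, buckets=1024, depth=4):
        self.buckets = buckets
        self.depth = depth                      # entries per bucket
        self.slots = [dict() for _ in range(buckets)]

    def _bucket(self, mac: int) -> int:
        return mac % self.buckets               # toy hash, for illustration

    def learn(self, mac: int, port: int) -> bool:
        """Store the entry; return False if its bucket is already full."""
        bucket = self.slots[self._bucket(mac)]
        if mac in bucket or len(bucket) < self.depth:
            bucket[mac] = port
            return True
        return False

    def lookup(self, mac: int):
        """Return the port, or None (the frame would be flooded)."""
        return self.slots[self._bucket(mac)].get(mac)

    def used(self) -> int:
        return sum(len(bucket) for bucket in self.slots)


if __name__ == "__main__":
    table = HashedMacTable(buckets=8, depth=2)  # capacity: 16 entries
    for mac in (1, 9, 17):                      # all map to bucket 1
        print(mac, table.learn(mac, port=1))
    print(table.lookup(17), table.used())
```

The third address is rejected with only 2 of 16 slots occupied, so traffic sent to it would be handled as unknown unicast.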

Again, traffic sent to a MAC address filtered by this mechanism becomes unknown-unicast traffic. The most unpleasant part is that manufacturers rarely test how switches recover on their own after a switching table overflow. A single table overflow, caused by, say, one client's mistake in hping parameters or in a script that monitors their infrastructure, can lead to a switch reboot and a loss of connectivity for all servers in the rack. If such an overflow occurs on an aggregation-level switch, the reboot can cause 15 minutes of downtime for the entire data center's local network.

The point is that using L2 is justified only in limited cases and imposes many restrictions. The segment size and the number of L2 segments are parameters that need to be evaluated every time a new VLAN with L2 connectivity is added. And the smaller the L2 segments, the simpler and, as a result, the more reliable the network is in operation.

Typical L2 use cases

As already mentioned, while infrastructure gradually develops within one data center, an L2 local network is used. Unfortunately, the same approach is also expected when projects expand to another data center or to another technology (for example, virtual machines in the cloud).

Front and back-end communication, backup

As a rule, the use of a local network begins with separating front-end and back-end services and moving the DBMS to a separate server (to improve performance or to use different operating systems on the application server and the DBMS). Initially, using L2 for these purposes seems justified: there are only a few servers in the segment, and often they are even located in the same rack.

The servers are placed in one VLAN on one or several switches. As the amount of equipment increases, more and more new servers are connected to switches in new racks, and the L2 domain begins to grow in width.

New servers appear, including standby database servers, backup servers, and the like. As long as the project lives in a single data center, scaling problems do not usually arise. Application developers simply get used to the fact that the next server on the local network differs only in the last octet of its IP address and that no special routing rules are needed.

Developers ask for a similar scheme when the project grows, when the next servers are rented in another data center, or when part of the project moves to virtual machines in the cloud. In the picture, everything looks very simple and beautiful:

It would seem that you only need to connect the two aggregation switches in DC1 and DC2 with one VLAN. But what lies behind this simple step?

Resource redundancy

First, we increase the width of the L2 domain, so all the potential problems of the DC1 local network may now arise in DC2. Who would want their servers in DC2 to lose local network connectivity because of incorrect actions inside DC1?

Second, this VLAN needs redundancy. The aggregation switch in each data center is a point of failure. The cable between the data centers is another point of failure. Each point of failure should be made redundant: two aggregation switches, two cables from the aggregation switches to the access switches, two cables between the data centers... Each time, the number of components increases, and the scheme becomes more complicated.

The complexity comes from the need to back up every element in the system. For full redundancy of devices and links, almost every element has to be duplicated. On such a large network, STP can no longer be used to provide redundancy. One could make all network elements, access switches in particular, part of an MPLS cloud, in which case redundancy would be provided by the MPLS functionality.

But MPLS devices are usually twice as expensive as non-MPLS devices. It should also be noted that, until recently, there was practically no 1U MPLS switch with good scalability and a full-fledged MPLS implementation in the control plane. As a result, we want to eliminate or minimize the impact of L2 problems on the existing network while still keeping the ability to provide redundancy.

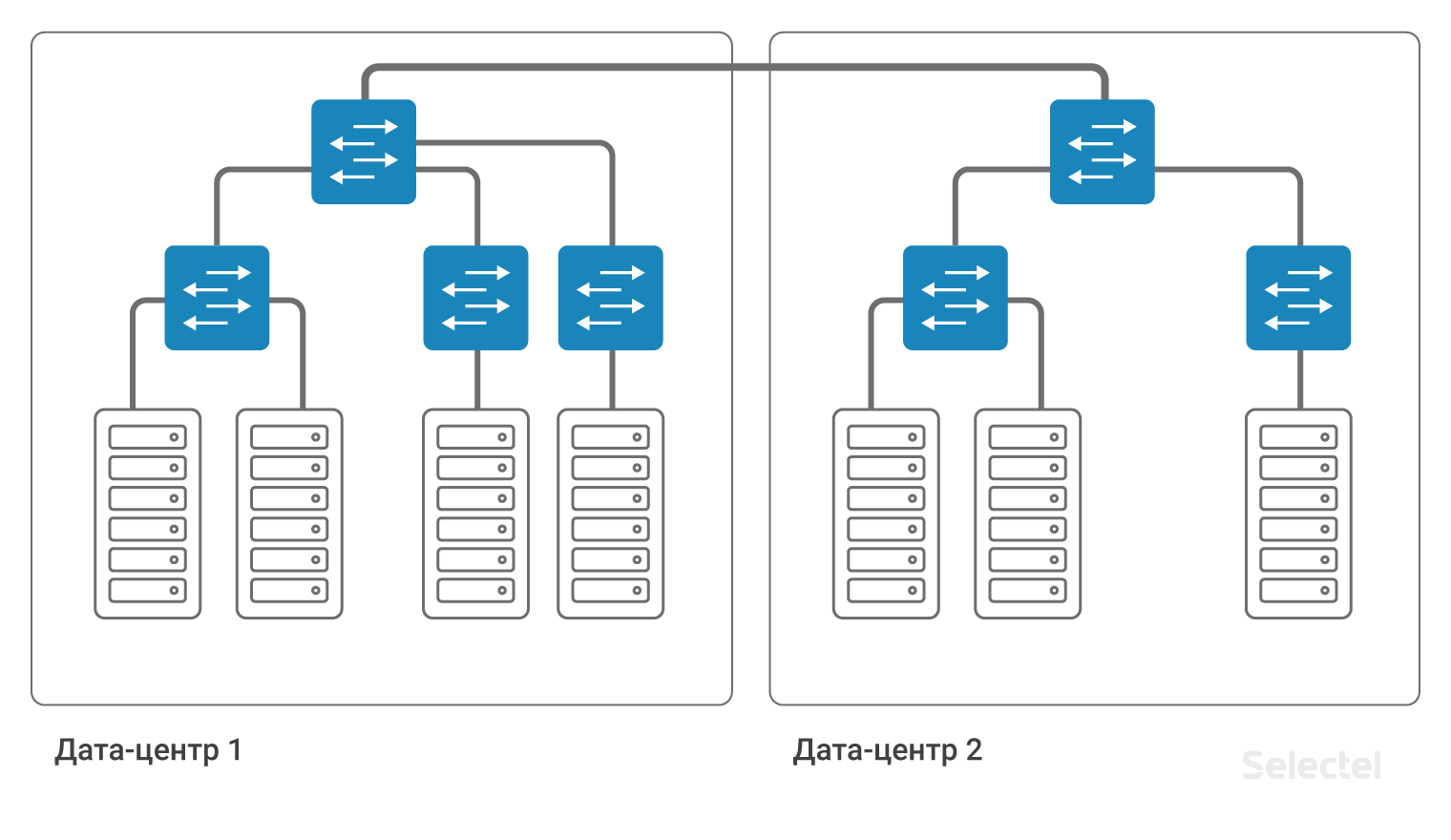

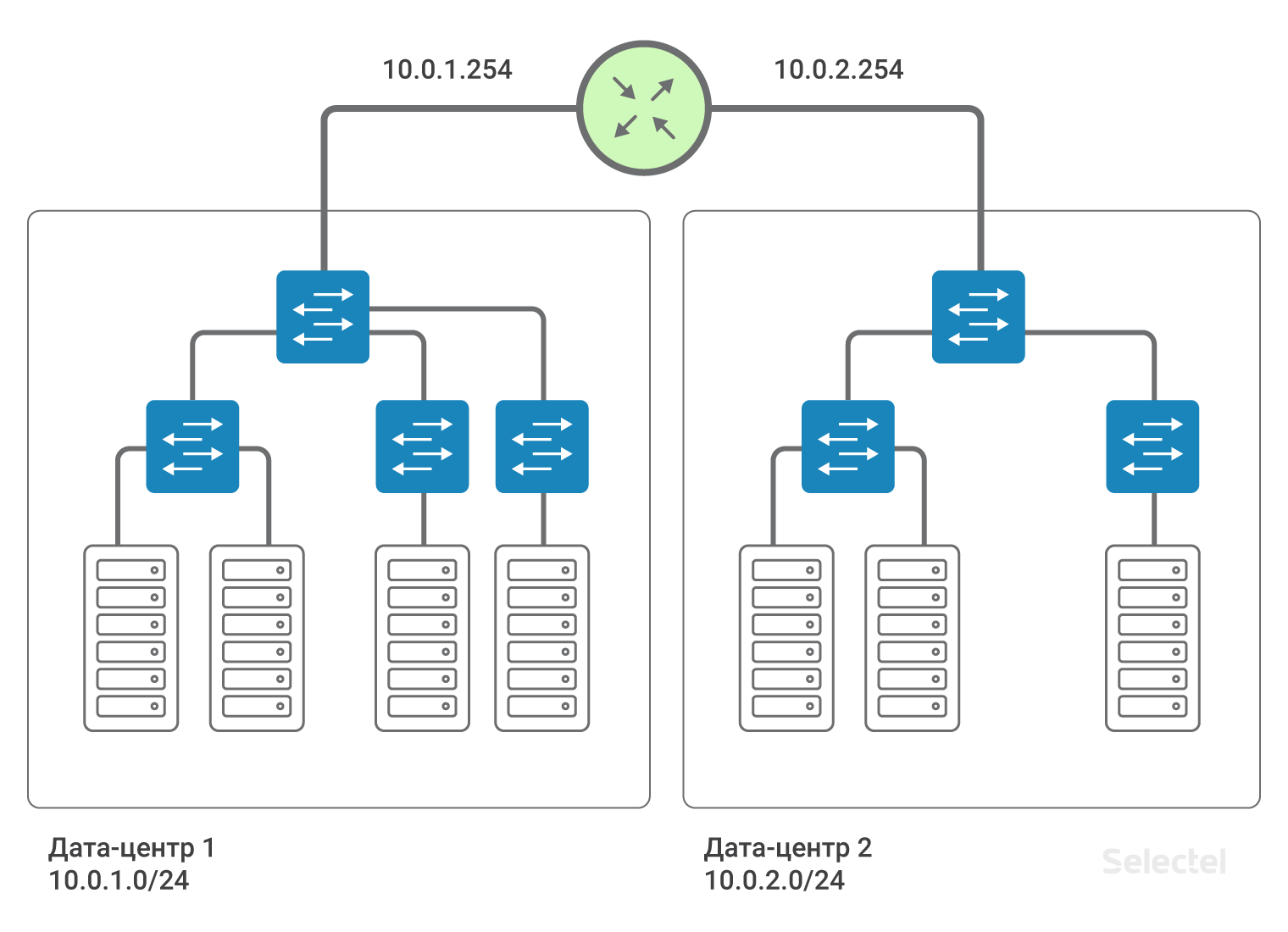

Transition to L3

If each link in the network is a separate IP segment and each device is a separate router, then we do not need redundancy at L2. Redundancy of links and routers is provided by dynamic routing protocols and redundant routes in the network.

Inside a data center, we can keep the existing L2 schemes of server-to-server communication, while servers in another data center are reached via L3.

Thus, the data centers are interconnected at L3. In effect, it is as if a router were installed between the data centers (in fact several, for redundancy). This makes it possible to split the L2 domains between data centers, use a separate VLAN space in each data center, and still communicate between them. Different clients can use overlapping IP address ranges: the networks are completely isolated from each other, and one client's network cannot be reached from another client's network (unless both clients agree to such a connection).

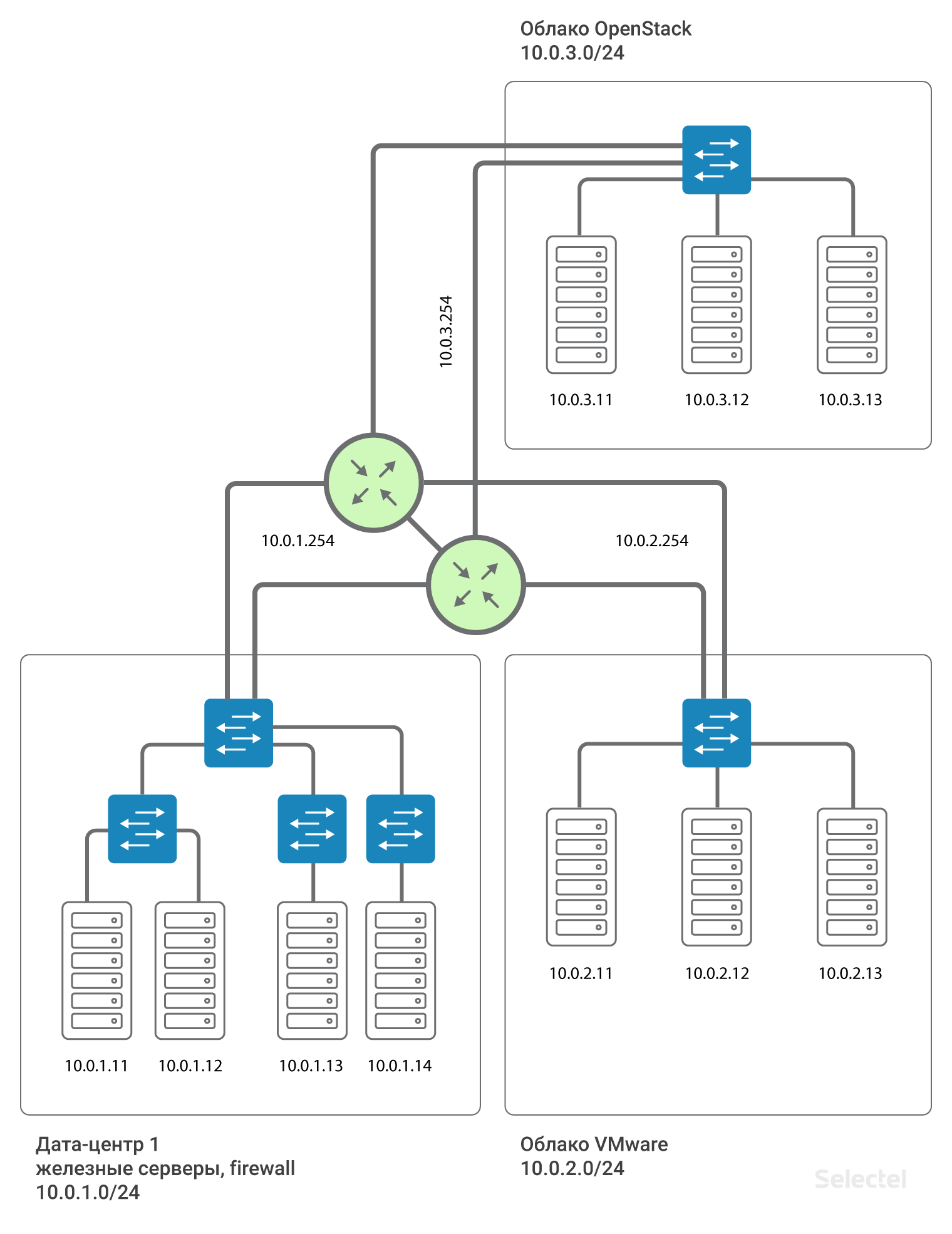

We recommend using IP segments from 10.0.0.0/8 for local networks. For the first data center, the network will be, for example, 10.0.1.0/24, and for the second, 10.0.2.0/24. Selectel assigns an IP address from this subnet on the router. As a rule, addresses .250-.254 are reserved for Selectel network devices, and address .254 serves as the gateway to the other local networks. The following route is configured on all devices in all data centers:

route add 10.0.0.0 mask 255.0.0.0 gw 10.0.x.254

Here x is the number of the data center. Thanks to this route, servers in different data centers “see” each other via routing.

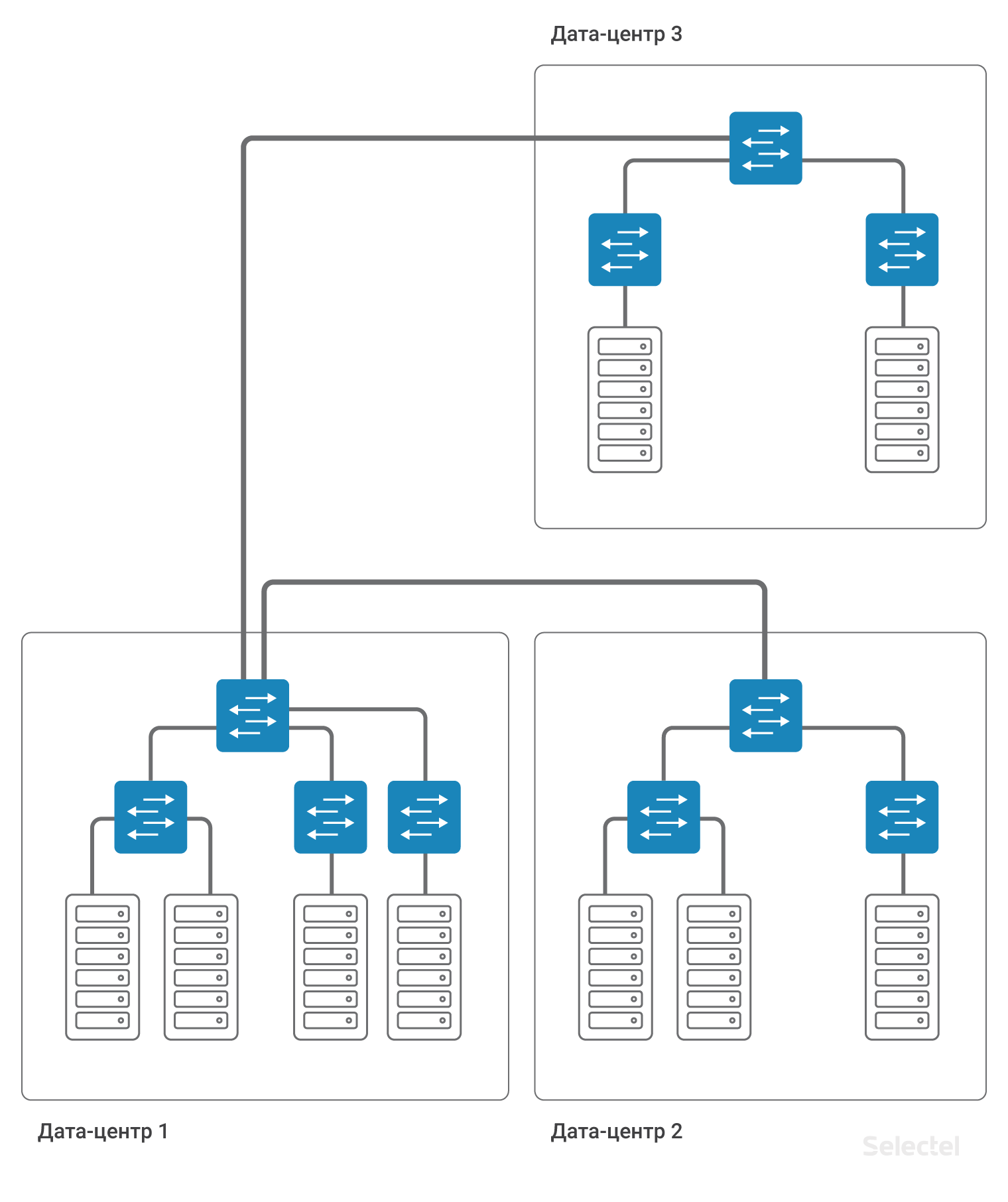

Such a route makes it easy to scale the scheme when, for example, a third data center appears. Servers in the third data center are then assigned IP addresses from the next range, 10.0.3.0/24, and the router gets the address 10.0.3.254.
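The addressing plan can be sketched with Python's standard `ipaddress` module. The helper names (`dc_subnet`, `dc_gateway`, `next_hop`) are hypothetical and exist only for this illustration; the sketch assumes the example plan from the text, with 10.0.x.0/24 per data center and .254 as the gateway.

```python
# Sketch: the per-data-center addressing plan and the effect of the
# single 10.0.0.0/8 route via 10.0.x.254.
import ipaddress

LOCAL_SUPERNET = ipaddress.ip_network("10.0.0.0/8")


def dc_subnet(x: int) -> ipaddress.IPv4Network:
    """Local network of data center number x."""
    return ipaddress.ip_network(f"10.0.{x}.0/24")


def dc_gateway(x: int) -> ipaddress.IPv4Address:
    """The .254 address used as the gateway to other local networks."""
    return dc_subnet(x).network_address + 254


def reserved_addresses(x: int):
    """Addresses .250-.254, set aside for network devices."""
    base = dc_subnet(x).network_address
    return [base + host for host in range(250, 255)]


def next_hop(src_dc: int, dst: str):
    """Where a server in src_dc sends a packet for dst."""
    dst_ip = ipaddress.ip_address(dst)
    if dst_ip in dc_subnet(src_dc):
        return None                  # same segment, delivered directly
    if dst_ip in LOCAL_SUPERNET:
        return dc_gateway(src_dc)    # matched by the 10.0.0.0/8 route
    raise ValueError("destination is outside the local networks")


if __name__ == "__main__":
    print(next_hop(1, "10.0.1.17"))  # same data center
    print(next_hop(1, "10.0.3.5"))   # third data center, via the gateway
```

Because the route covers the whole 10.0.0.0/8 range, adding a third data center changes nothing on the servers in the first two: its subnet is already matched by the existing route.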

A distinctive feature of this scheme is that it does not require additional redundancy in case of a data center failure or a failure of external communication channels. For example, when data center 1 fails, the connection between data center 2 and data center 3 is fully preserved. By contrast, in a scheme where L2 is extended between data centers through one of them, as in the figure:

Communication between data center 2 and data center 3 depends on data center 1 being operational. Otherwise, additional links and complex L2 redundancy schemes are required. And as long as the L2 scheme is kept, the entire network remains very sensitive to incorrect patching, switching loops, various traffic storms, and other troubles.
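The difference between the two designs can be checked with a small reachability sketch. Both topologies are assumptions made for illustration: the L2 variant chains data centers 2 and 3 through data center 1, and the L3 variant assumes the routers have a path between data centers 2 and 3 that does not pass through data center 1.

```python
# Sketch: does DC2 still reach DC3 when DC1 fails?
from collections import deque


def reachable(links, src, dst, failed=frozenset()):
    """Breadth-first search over the links, skipping failed sites."""
    if src in failed or dst in failed:
        return False
    adjacency = {}
    for a, b in links:
        if a in failed or b in failed:
            continue
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)

    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


# Assumed topologies (hypothetical, for illustration only).
L2_VIA_DC1 = [("DC2", "DC1"), ("DC1", "DC3")]
L3_ROUTED = [("DC1", "DC2"), ("DC2", "DC3"), ("DC1", "DC3")]

if __name__ == "__main__":
    print(reachable(L2_VIA_DC1, "DC2", "DC3", failed={"DC1"}))
    print(reachable(L3_ROUTED, "DC2", "DC3", failed={"DC1"}))
```

In the chained L2 variant, the failure of data center 1 cuts the other two off from each other, while in the routed variant the remaining path keeps them connected.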

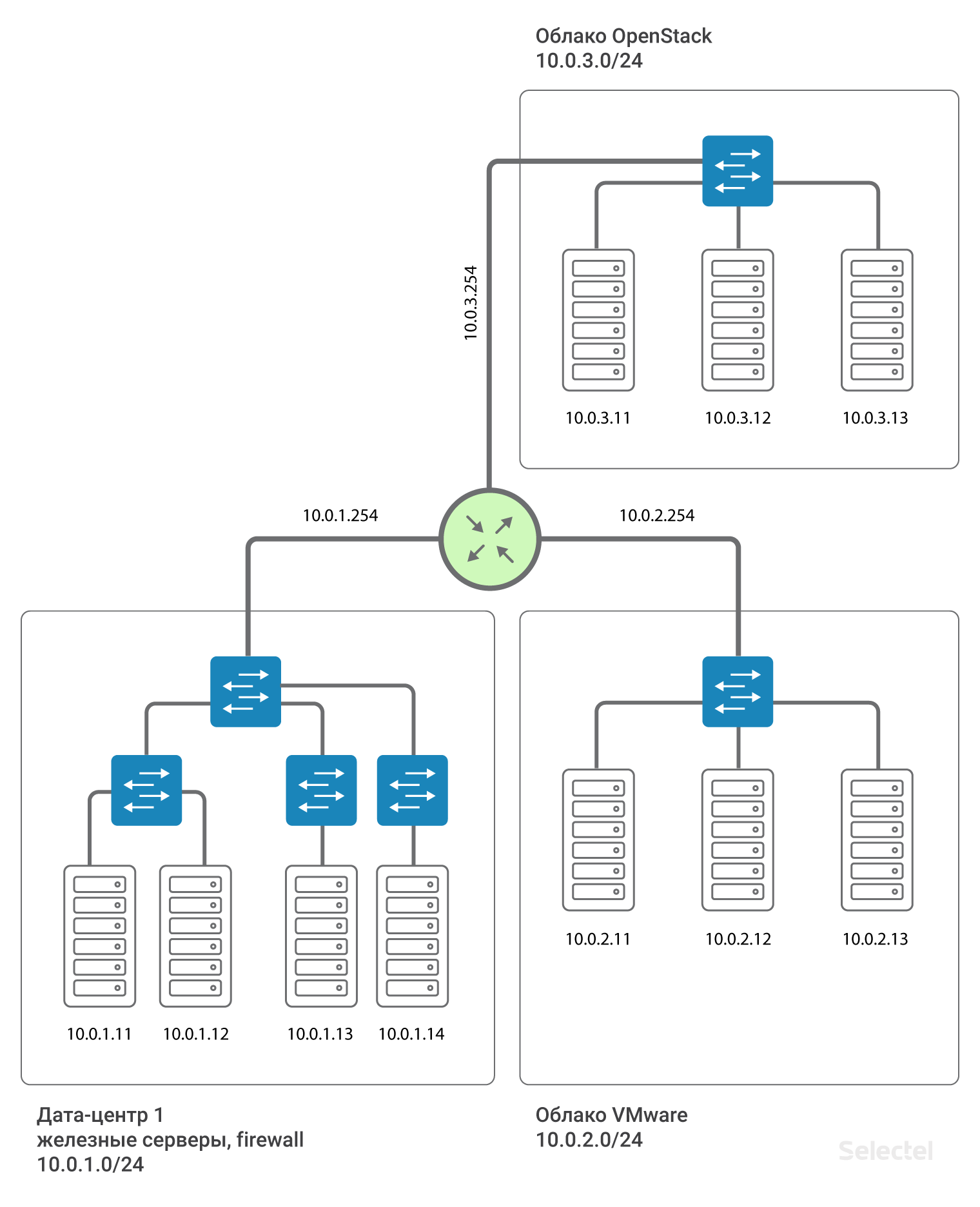

L3 segments within projects

In addition to using different L3 segments in different data centers, it makes sense to allocate a separate L3 network for the servers of different projects, which are often built with different technologies. For example, hardware servers in a data center go in one IP subnet; virtual servers in the same data center, but in the VMware cloud, go in another IP subnet; and staging servers go in a third IP subnet. Then random errors in one segment do not bring down all the servers on the local network.

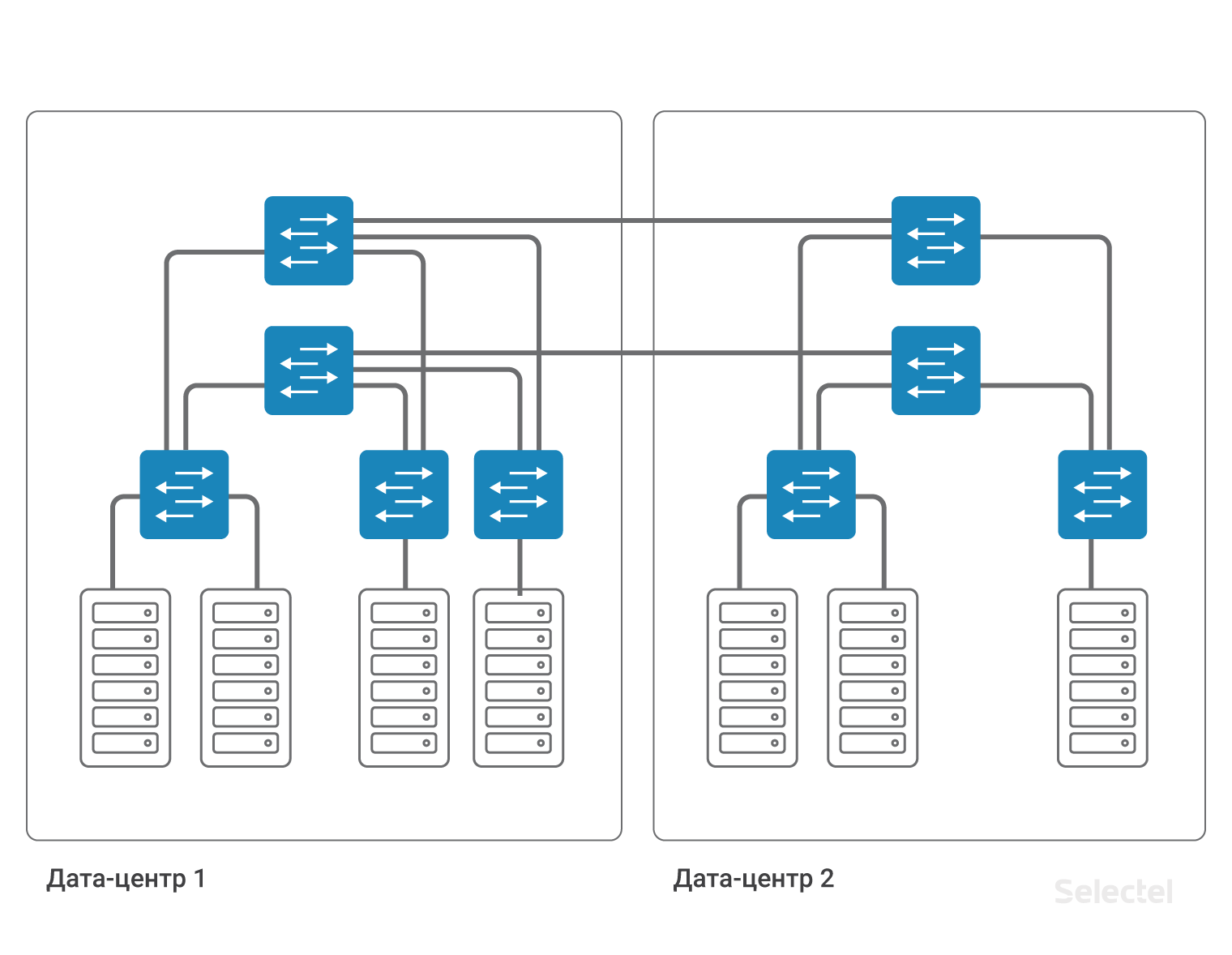

Router redundancy

This all sounds good, but there is a single point of failure between projects: the router. What can be done about it? In fact, there is more than one router. Two routers are allocated for each data center, and for each customer they form the virtual IP .254 using the VRRP protocol.

Using VRRP between two adjacent devices that share an L2 segment is justified. Data centers that are far apart use different routers, with MPLS organized between them. Thus, each client connected to the local network under this scheme is attached to a separate L3VPN created for that client on these MPLS routers. The scheme, closer to reality, looks like this:

The gateway address .254 of each segment is reserved for VRRP between the two routers.
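How two routers share the .254 address can be sketched as a simplified VRRP master election. The rule itself (highest priority wins, a tie goes to the higher interface address, default priority 100) follows the VRRP specification; the router names, their .252 and .253 addresses, and the priorities are hypothetical, and timers, preemption settings, and advertisements are left out.

```python
# Sketch: which of two routers answers for the virtual gateway address.
import ipaddress
from dataclasses import dataclass

VIRTUAL_GATEWAY = "10.0.1.254"       # virtual IP shared by both routers


@dataclass(frozen=True)
class VrrpRouter:
    name: str
    address: str                     # router's own interface address
    priority: int = 100              # VRRP default priority
    alive: bool = True


def elect_master(routers):
    """Highest priority wins; a tie goes to the higher IP address."""
    candidates = [r for r in routers if r.alive]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda r: (r.priority, int(ipaddress.ip_address(r.address))),
    )


if __name__ == "__main__":
    r1 = VrrpRouter("router-1", "10.0.1.252", priority=120)
    r2 = VrrpRouter("router-2", "10.0.1.253")
    print(elect_master([r1, r2]).name)

    # router-1 fails: router-2 takes over the same gateway address.
    r1_down = VrrpRouter("router-1", "10.0.1.252", priority=120, alive=False)
    print(elect_master([r1_down, r2]).name)
```

Servers keep using the same gateway address throughout; only the router answering for it changes.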

Conclusion

To summarize, changing the type of local network from L2 to L3 allowed us to preserve scalability, increased reliability and fault tolerance, and made it possible to implement additional redundancy schemes. Moreover, it let us get around the existing limitations and pitfalls of L2.

As projects and data centers grow, existing typical solutions reach their scalability limit and are no longer suitable for solving problems effectively. Requirements for the reliability and stability of the system as a whole are constantly increasing, which in turn affects the planning process. It is important to take optimistic growth forecasts into account so as not to end up with a system that cannot be scaled in the future.

Tell us: do you already use L3VPN? We look forward to your comments.

Source: https://habr.com/ru/post/436648/