How to roll out updates to production automatically

Rolling a new version out to production is always a nervous event, especially when the process involves a lot of manual steps. The human factor is a terrible thing. “It would be nice to automate this” is an idea as old as the IT world itself, and the term for it is Continuous Deployment. The trouble is that there is no single right way to set up continuous deployment: the process depends heavily on the project's technology stack and its environment.

In this article I want to share practical experience of setting up automatic updates with no downtime for one specific environment: an ASP.NET MVC web application with Azure SQL and Entity Framework in Code First mode, deployed to Azure as an App Service, with build and deployment done through Azure DevOps (formerly Visual Studio Team Services).

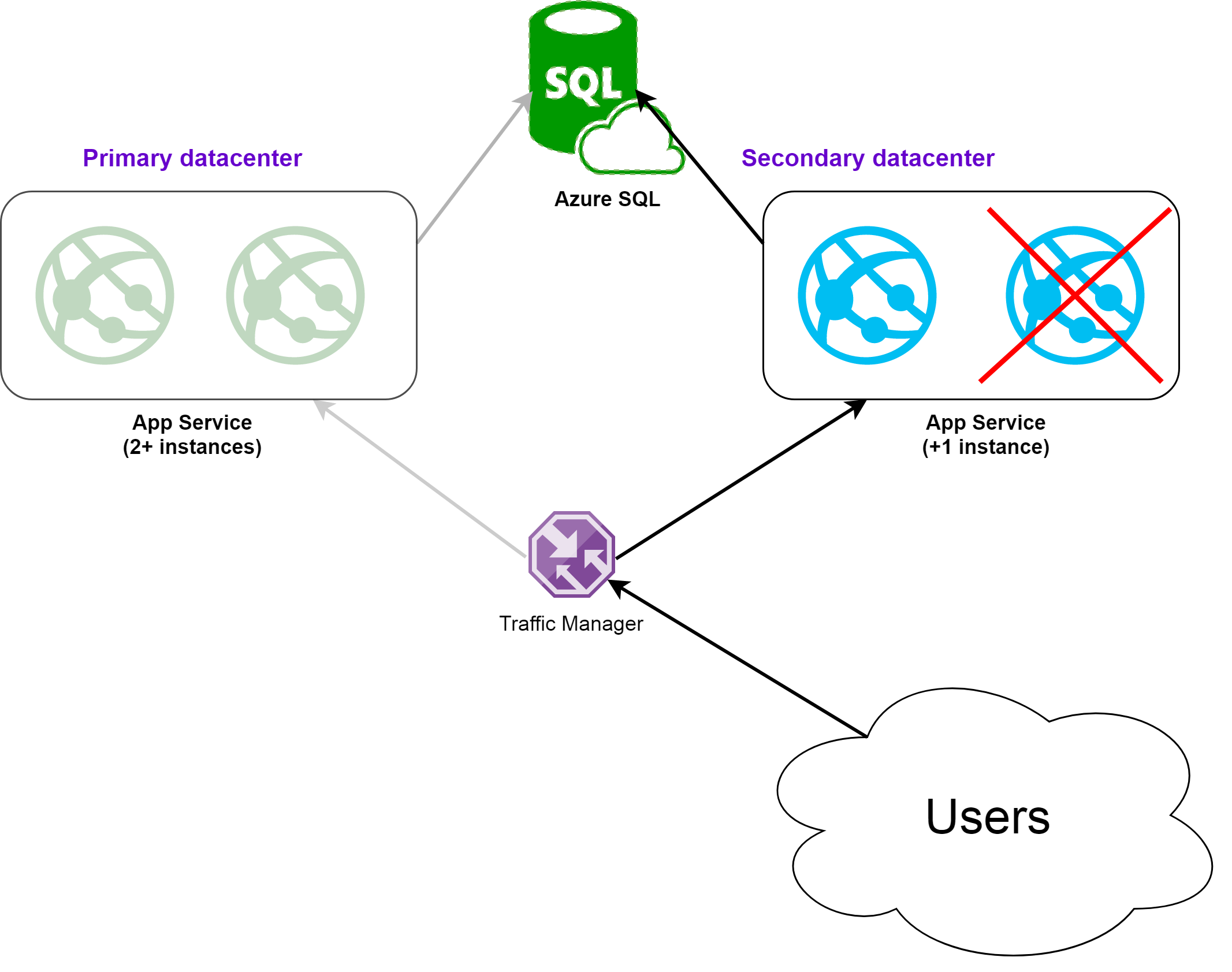

At first glance everything is very simple: Azure App Service has the concept of a deployment slot, so you upload the new version to a slot and switch it on. That really would be simple if the project were based on a non-relational DBMS with no rigid data schema. In that case the new version just picks up the traffic and voila. With a relational DBMS, things are somewhat more complicated.

The main factors that prevent us from implementing continuous deployment for our technology stack are the following:

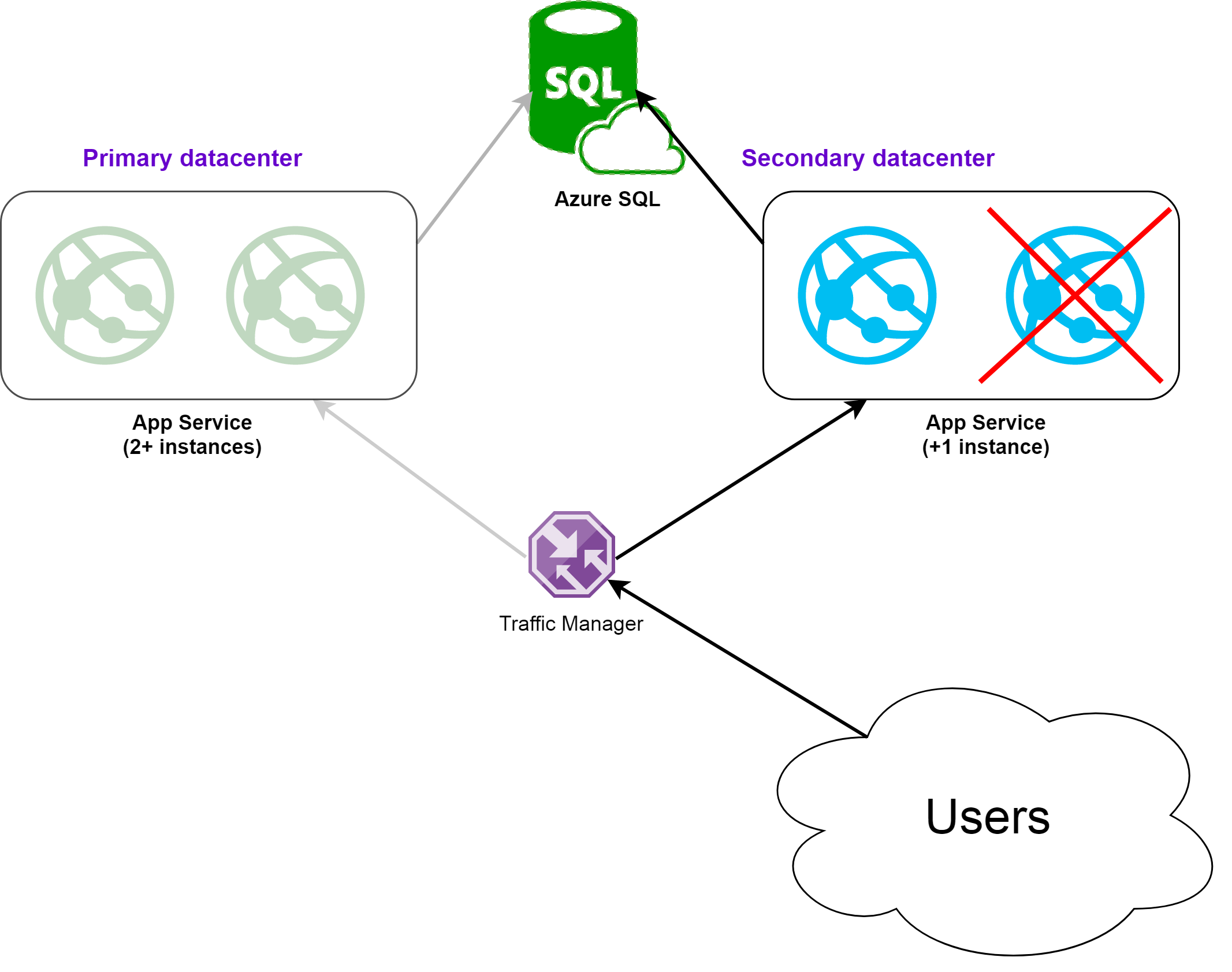

I will explain. Suppose that you deployed a new version in a parallel slot or in a backup data center and started the application of migrations. Assume that we have three migrations and, oh, horror, two rolled, and the third fell. At this point, nothing will happen to the working servers, the Entity Framework does not check the version for each request, but you will most likely not be able to quickly solve the problem. At this time, the load on the application may increase, and the platform will launch an additional instance of the application for you, and it ... naturally will not start, as the database structure has changed. A significant proportion of users will begin to receive errors. Thus, the risk of automatic migration is great.

As for the second item, your migration may contain some commands, the execution time of which exceeds 30 seconds and the standard procedure will fall on timeout. Well, in addition to these points, I personally do not like the fact that with automatic migrations you have to update part of the infrastructure to the new version. And if for the mode with slots in Azure it is not so bad, then for the mode with backup data center you get a part of the infrastructure with a knowingly non-working application. Dangerous is all shot at the most inopportune moment.

Let's start with the most difficult - with the database. So, it would be nice to somehow automatically update the structure of the database so that the old versions of the application would continue to work. In addition, it would be good to take into account the fact that there are such updates when a separate command can be executed for a considerable time, which means we need to update the database without using the built-in mechanisms but by running a separate SQL script. Question: how to prepare it? You can make this process manual. If you have a separate release manager role in your team, you can force it in the Visual Studio environment to execute the command:

It will generate a script, and this person will put this script in a specific project folder. But you must admit, this is still inconvenient, firstly there is the human factor, secondly there are unnecessary difficulties if there was more than one migration between releases. Or for some reason on the target system, one release was missed. We'll have to fence some difficult garden with tracking which migrations already exist and which ones need to be launched. It is difficult and, most importantly, this is the same bike that has already been made in the migration mechanism.

And the process of generating and executing the script will be correctly embedded in the process of laying out the release. In order to generate a migration script, you can use the migrate.exe utility, which is included in the Entity Framework kit. Please note that you need the Entity Framework version 6.2 or higher, since the script generation option appeared in this utility only in April 2017. The utility call looks like this:

It indicates the name of the assembly where your Context is located, the connection string to the target database, the provider and, very importantly, the starting directory, which contains both the assembly with the context and the Entity Framework assembly. Do not experiment with the names of the working directory, be simpler. We stumbled upon the fact that migrate.exe could not read the directory, in the name of which there were spaces and non-letter characters.

Here it is necessary to make an important digression. The fact is that after the above command is executed, a single SQL script will be generated containing all the commands for all migrations that need to be applied to the target database. For Microsoft SQL Server, this is not very good. The fact is that the command without a separator GO server performs as a single package, and some operations can not be performed together in one package.

For example, in some cases, adding a field to a table does not work and immediately creating an index on this table with a new field. But this is not enough, some commands require certain environment settings when executing the script. Such settings are enabled by default when you connect to SQL Server via SQL Server Management Studio, but when the script is executed via the SQLCMD console utility, they must be set manually. To take all this into account, you will have to modify the process of generating the migration script with a file. To do this, create an additional class next to the date context, which does everything you need:

And so that the Entity Framework can use it, register it in the Configuration class, which is usually located in the Migrations folder:

After that, the resulting migration script will contain a GO between each operator, and at the beginning of the file will contain SET QUOTED_IDENTIFIER ON;

Hurray, the preparation is done, it remains to configure the process itself. In general, as part of the release process in Azure DevOps (VSTS / TFS), this is already quite simple. We will need to create a PowerShell script like this:

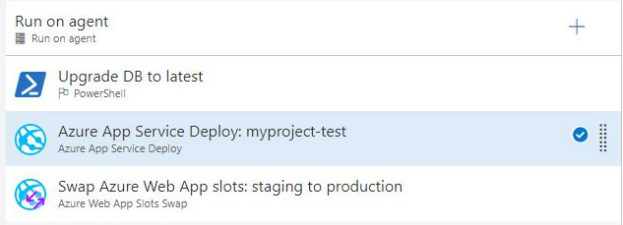

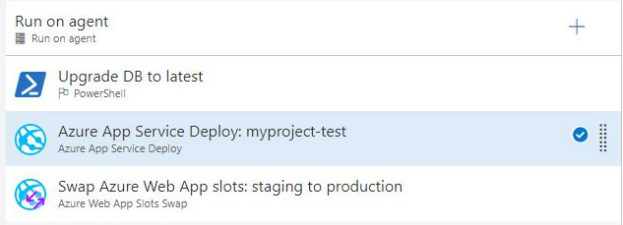

And add a PowerShell script execution block to the release layout process. The block and its settings may look like this:

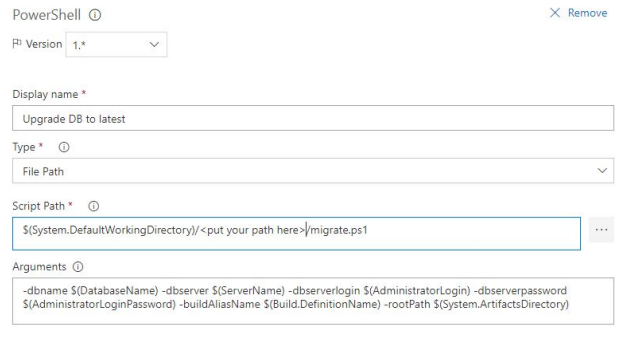

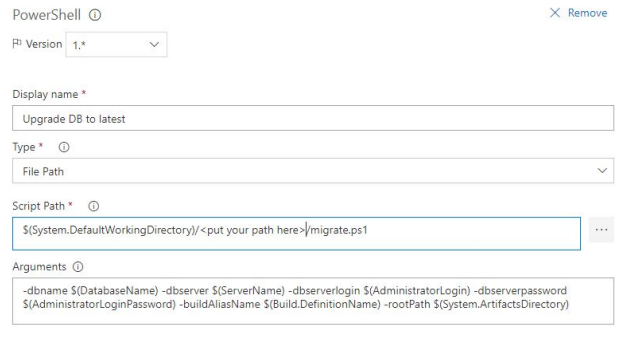

Setting up PowerShell looks like this:

It is important not to forget to add the file migrate.exe from the <YourProject> /packages/EntityFramework.6.2.0/tools/ folder to the project and set the Copy Always property to it so that this utility is copied to the output directory when building the project and you can access it in Azure DevOps release.

Nuance . If your project also uses WebJob when deploying to the Azure App Service, then simply adding Migrate.exe to the project is not safe. We are faced with the fact that in the folder where your WebJob is published, the Azure platform launches stupidly the first exe file it finds. And if your WebJob is alphabetically later than migrate.exe (which we did), then it tries to run migrate.exe instead of your project!

So, we learned how to update the database version by generating a script during the release process, the only thing left is to turn off the migration version check, so that if there are any failures in the script execution process, the old version of our code continues to work. I think there is no need to say that your migrations should be non-destructive. Those. Changes to the database structure should not affect the performance of the previous version, and better than the previous two. To disable the scan, you just need to add the following block to Web.config:

Where the

And finally, it is highly desirable for us to ensure that the application warms up before switching users to the new version. To do this, we add a special block to web.config with links that your application automatically goes through during the initialization process:

You can add several links simply by adding

It's all much simpler and at the same time different. The migration script generation is possible by regular means, but it is not based on the finished assembly, but on the basis of the project file. Thus, the script should be generated as part of the project build process and should be included as an assembly artifact. In this case, the script will contain all the commands of all migrations from the beginning of time. There are no problems in this, since the script is idempotent, i.e. it can be applied to the target database again without any consequences. This has one more useful consequence: we do not need to modify the script generation process in order to divide the commands into packets, everything is already done for this.

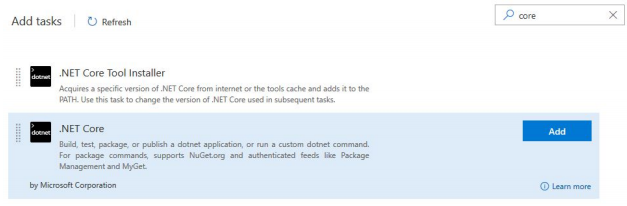

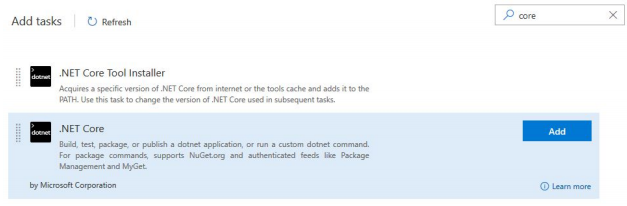

Well, specifically the steps of the process looks like. Add a task to the build process:

Configure it to generate a file with migrations:

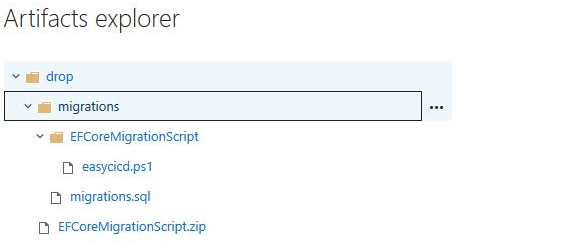

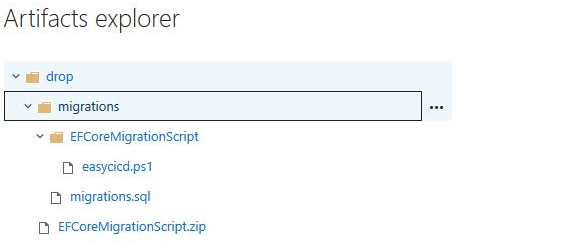

Do not forget to add a script to the PowerShell project that will perform the migration (described above) and the migration file itself. As a result, after assembling the project, the artifacts could look something like this (apart from the actual archive with the assembly, there is an additional PS script and SQL script with migrations):

It remains only to adjust the execution of this PowerShell script in the corresponding Release step by analogy with the procedure described above.

Pavel Kutakov is an expert on cloud technologies, a developer and architect of software systems in various business sectors, from banking IP operating around the world from the USA to Papua New Guinea to a cloud solution for the national lottery operator.

Pavel Kutakov is an expert on cloud technologies, a developer and architect of software systems in various business sectors, from banking IP operating around the world from the USA to Papua New Guinea to a cloud solution for the national lottery operator.

In this article I want to share practical experience of setting up automatic system updates without interrupting its work for a specific technological environment, namely: a web application on ASP.NET MVC + Azure SQL + Entity Framework in Code First mode, the application is deployed in Azure as an App Service , and build and deployment are done through Azure DevOps (formerly Visual Studio Team Services).

At first glance, everything is very simple, in Azure App Service there is the concept of deployment slot - upload a new version there and turn it on. But it would be simple if the project was based on a non-relational DBMS, in which there is no rigid data scheme. In this case, yes - just a new version picks up traffic and voila. But with a relational DBMS, everything is somewhat more complicated.

The main factors that prevent us from implementing a continuous deployment for our technology stack are the following:

- The old version of the application cannot work with the new database structure.

- Updating the database structure can take considerable time and is not always possible from within the application itself, through the automatic migration mechanism.

Let me explain. Suppose you deployed the new version to a parallel slot or to a backup data center and started applying migrations. Say there are three migrations and, horror of horrors, two were applied and the third failed. At that moment nothing happens to the running servers, because Entity Framework does not check the schema version on every request, but you will most likely not be able to fix the problem quickly. Meanwhile the load on the application may grow, the platform will launch an additional instance of the application for you, and that instance naturally will not start, because the database structure has changed. A significant share of users will start getting errors. So the risk of automatic migration is high.

As for the second point, a migration may contain commands that take longer than 30 seconds to execute, and the standard procedure will then fail on a timeout. Beyond these two points, I personally do not like the fact that automatic migrations force you to update part of the infrastructure to the new version first. With Azure slots that is not so bad, but with a backup data center you end up with part of your infrastructure running an application that is known not to work. That is dangerous: it will all backfire at the most inopportune moment.

What to do

Let's start with the hardest part, the database. It would be nice to update the database structure automatically in such a way that old versions of the application keep working. We should also take into account that some updates contain a single command that runs for a considerable time, which means the database has to be updated not through the built-in mechanisms but by running a separate SQL script. The question is how to prepare it. You can make this a manual process: if your team has a dedicated release manager, that person can run the following command in Visual Studio:

```powershell
Update-Database -Script
```

It generates a script, which the release manager then puts into a specific project folder. But you must admit this is still inconvenient. First, there is the human factor. Second, there are needless complications if more than one migration accumulated between releases, or if a release was skipped on the target system for some reason. We would have to build an elaborate contraption to track which migrations have already been applied and which still need to run. That is hard and, most importantly, it reinvents the wheel that the migration mechanism already provides.
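To make that bookkeeping concrete: Entity Framework 6 records applied migrations in the `__MigrationHistory` table and compares them with the migrations compiled into the assembly. A hand-rolled tracker would have to reproduce at least the comparison sketched below. The function name and the migration identifiers are hypothetical and serve only as an illustration.

```powershell
# Hypothetical helper: returns the migrations that exist in the assembly
# but are not yet recorded in the database, in chronological order.
function Get-PendingMigration {
    param (
        [string[]] $InAssembly,
        [string[]] $InDatabase
    )

    # EF6 migration ids start with a timestamp, so sorting the strings sorts by time.
    $InAssembly | Where-Object { $InDatabase -notcontains $_ } | Sort-Object
}

# Made-up migration ids in the EF6 "timestamp_name" style.
$inAssembly = @(
    "201801150900000_Initial",
    "201803021030000_AddOrders",
    "201804101545000_AddOrderRegion"
)
$inDatabase = @("201801150900000_Initial")

$pending = @(Get-PendingMigration -InAssembly $inAssembly -InDatabase $inDatabase)
Write-Host "Pending: $($pending -join ', ')"
```

Even this small sketch leaves out skipped releases and failures halfway through, which is exactly the work the built-in mechanism already does.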

The right approach is to build script generation and execution into the release process itself. To generate the migration script you can use the migrate.exe utility, which ships with Entity Framework. Note that you need Entity Framework 6.2 or higher, since the script generation option appeared in this utility only in April 2017. The utility call looks like this:

```bat
migrate.exe Context.dll /connectionString="Data Source=localhost;Initial Catalog=myDB;User Id=sa;Password=myPassword;" /connectionProviderName="System.Data.SqlClient" /sc /startUpDirectory="c:\projects\MyProject\bin\Release" /verbose
```

The call specifies the name of the assembly that contains your context, the connection string to the target database, the provider and, very importantly, the startup directory, which must contain both the assembly with the context and the Entity Framework assembly. Do not get creative with the name of the working directory; keep it simple. We ran into the fact that migrate.exe could not read a directory whose name contained spaces and non-letter characters.

An important digression is needed here. After the command above is executed, a single SQL script is generated that contains all the commands of all migrations that need to be applied to the target database. For Microsoft SQL Server this is not very good: the server executes commands that are not separated by GO as a single batch, and some operations cannot be performed together in one batch.

For example, in some cases adding a column to a table and then immediately creating an index on that table that includes the new column does not work. But that is not all: some commands require certain session settings while the script runs. These settings are enabled by default when you connect to SQL Server through SQL Server Management Studio, but when the script is executed through the SQLCMD console utility they have to be set explicitly. To take all this into account, you will have to hand-tune the migration script generation process. To do this, create an additional class next to the data context that does everything needed:

```csharp
using System.Collections.Generic;
using System.Data.Entity.Migrations.Model;
using System.Data.Entity.Migrations.Sql;
using System.Data.Entity.SqlServer;

public class MigrationScriptBuilder : SqlServerMigrationSqlGenerator
{
    public override IEnumerable<MigrationStatement> Generate(
        IEnumerable<MigrationOperation> migrationOperations,
        string providerManifestToken)
    {
        var statements = base.Generate(migrationOperations, providerManifestToken);
        var result = new List<MigrationStatement>();

        // Session setting that SSMS enables by default but SQLCMD does not.
        result.Add(new MigrationStatement { Sql = "SET QUOTED_IDENTIFIER ON;" });

        // Terminate every generated statement with GO so that it runs as its own batch.
        foreach (var item in statements)
        {
            item.BatchTerminator = "GO";
            result.Add(item);
        }

        return result;
    }
}
```

For Entity Framework to use it, register it in the Configuration class, which is usually located in the Migrations folder:

```csharp
public Configuration()
{
    SetSqlGenerator("System.Data.SqlClient", new MigrationScriptBuilder());
    // …
}
```

After that, the resulting migration script will contain a GO after each statement and SET QUOTED_IDENTIFIER ON; at the beginning of the file.
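GO is not a T-SQL statement but a batch separator recognized by client tools such as SQLCMD and SSMS. The sketch below imitates that splitting in a simplified way (it ignores forms such as `GO 5`) to show how a script of this shape falls apart into separately executed batches. The function name, the table and the index are made up for the illustration.

```powershell
# Hypothetical helper: splits a T-SQL script into batches on lines that contain only GO,
# roughly the way SQLCMD does before sending each batch to the server.
function Split-SqlBatch {
    param ([Parameter(Mandatory=$true)] [string] $SqlText)

    # (?im): ignore case, and let ^ and $ match at line boundaries; \r? tolerates CRLF files.
    $SqlText -split '(?im)^[ \t]*GO[ \t]*\r?$' |
        ForEach-Object { $_.Trim() } |
        Where-Object { $_ -ne '' }
}

# Illustrative script in the shape produced by the generator above.
$sample = @'
SET QUOTED_IDENTIFIER ON;
ALTER TABLE [dbo].[Orders] ADD [Region] nvarchar(50) NULL
GO
CREATE INDEX [IX_Orders_Region] ON [dbo].[Orders]([Region])
GO
'@

$batches = @(Split-SqlBatch -SqlText $sample)
Write-Host "Batches: $($batches.Count)"
```

With the separators in place, the column is added in the first batch and the index that depends on it is created in the second.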

Hurray, the preparation is done; what remains is to configure the process itself. As part of the release process in Azure DevOps (VSTS / TFS) this is fairly simple. We need to create a PowerShell script like this:

```powershell
param (
    [string] [Parameter(Mandatory=$true)] $dbserver,
    [string] [Parameter(Mandatory=$true)] $dbname,
    [string] [Parameter(Mandatory=$true)] $dbserverlogin,
    [string] [Parameter(Mandatory=$true)] $dbserverpassword,
    [string] [Parameter(Mandatory=$true)] $rootPath,
    [string] [Parameter(Mandatory=$true)] $buildAliasName,
    [string] [Parameter(Mandatory=$true)] $contextFilesLocation
)

Write-Host "Generating migration script..."
$fullpath = "$rootPath\$buildAliasName\$contextFilesLocation"
Write-Host $fullpath

# Generate the SQL script with the migrations to apply to the target database.
& "$fullpath\migrate.exe" Context.dll `
    /connectionProviderName="System.Data.SqlClient" `
    /connectionString="Server=tcp:$dbserver.database.windows.net,1433;Initial Catalog=$dbname;Persist Security Info=False;User ID=$dbserverlogin;Password=$dbserverpassword;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;" `
    /startUpDirectory=$fullpath /verbose /scriptFile=1.SQL

Write-Host "Running migration script..."
# Apply the generated script; the login is quoted so that login@server is passed as one argument.
& "SQLCMD" -S "$dbserver.database.windows.net" -U "$dbserverlogin@$dbserver" -P $dbserverpassword -d $dbname -i 1.SQL

Write-Host "====Finished with migration script===="
```

Then add a PowerShell script execution block to the release process. The block and its settings may look like this:

Setting up PowerShell looks like this:

It is important not to forget to add the migrate.exe file from the `<YourProject>/packages/EntityFramework.6.2.0/tools/` folder to the project and to set its Copy to Output Directory property to Copy always, so that the utility is copied to the output directory when the project is built and is available in the Azure DevOps release.

A nuance. If your project also uses a WebJob when deploying to Azure App Service, simply adding migrate.exe to the project is not safe. We found that in the folder where your WebJob is published, the Azure platform blindly launches the first exe file it finds. And if your WebJob comes alphabetically after migrate.exe (as ours did), the platform tries to run migrate.exe instead of your project!
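One more thing worth considering for the release script: as written, it keeps going even if migrate.exe or SQLCMD fails. A possible hardening, given here only as a sketch, is to check the exit code of each native call and throw, which should make the PowerShell step fail. The helper name is hypothetical and not part of the original script, and the sketch assumes the tools report failure through their exit code. For SQLCMD that requires the `-b` option.

```powershell
# Hypothetical helper: runs a native executable and throws when it returns
# a non-zero exit code, so that the calling script stops instead of continuing.
function Invoke-NativeChecked {
    param (
        [Parameter(Mandatory=$true)] [string] $FilePath,
        [string[]] $ArgumentList = @()
    )

    & $FilePath @ArgumentList
    if ($LASTEXITCODE -ne 0) {
        throw "$FilePath exited with code $LASTEXITCODE"
    }
}

# Possible use inside the release script, with the same variables as there.
# -b makes SQLCMD stop and return a non-zero exit code when a batch fails.
# Invoke-NativeChecked "SQLCMD" @("-b", "-S", "$dbserver.database.windows.net",
#     "-U", "$dbserverlogin@$dbserver", "-P", $dbserverpassword,
#     "-d", $dbname, "-i", "1.SQL")
```

A failed script then stops the release before the new version is switched on, while the old version keeps serving traffic.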

So, we have learned how to update the database by generating a script during the release process. The only thing left is to turn off the migration version check, so that if the script fails partway through, the old version of our code keeps working. It goes without saying that your migrations must be non-destructive, i.e. changes to the database structure must not break the previous version, or better yet the previous two. To disable the check, you just need to add the following block to Web.config:

```xml
<entityFramework>
  <contexts>
    <context type="<full namespace for your DataContext class>, MyAssembly" disableDatabaseInitialization="true"/>
  </contexts>
</entityFramework>
```

Here `<full namespace for your DataContext class>` is the fully qualified name (including the namespace) of your class derived from DbContext, and MyAssembly is the name of the assembly that contains your context.

And finally, it is highly desirable to make sure the application warms up before users are switched to the new version. To do this, add a special block to web.config with the links that your application automatically visits during initialization:

```xml
<system.webServer>
  <applicationInitialization doAppInitAfterRestart="true">
    <add initializationPage="/" hostName="" />
  </applicationInitialization>
</system.webServer>
```

You can add several links simply by adding more `<add ... />` elements. It is said that in Azure, when slots are swapped, the platform waits for the application to finish initializing and only then switches traffic to the new version.

What if the project is on .NET Core?

Here everything is much simpler and at the same time different. The migration script can be generated with standard tools, but it is based on the project file rather than on the built assembly. The script should therefore be generated as part of the build process and published as a build artifact. In this case the script contains all the commands of all migrations since the beginning of time. That is not a problem, because the script is idempotent, i.e. it can be applied to the target database again without any consequences. This has one more useful consequence: we do not need to modify script generation to split the commands into batches, because that is already done for us.
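As a rough sketch of what such a build step could run, assuming the EF Core command-line tools (`dotnet ef`) are available on the build agent: the function name and the paths in the usage comment are hypothetical, while `--idempotent`, `--project` and `--output` are options of `dotnet ef migrations script`.

```powershell
# Hypothetical helper: generates an idempotent SQL script with all migrations of a project.
function Export-IdempotentMigrationScript {
    param (
        [Parameter(Mandatory=$true)] [string] $Project,
        [Parameter(Mandatory=$true)] [string] $OutputFile
    )

    # --idempotent guards each migration with a check against the __EFMigrationsHistory
    # table, so the script can be re-applied to a database at any migration level.
    & dotnet ef migrations script --idempotent --project $Project --output $OutputFile
    if ($LASTEXITCODE -ne 0) {
        throw "dotnet ef exited with code $LASTEXITCODE"
    }
}

# Example call with assumed paths:
# Export-IdempotentMigrationScript -Project "src\MyProject\MyProject.csproj" -OutputFile "artifacts\migrations.sql"
```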

Concretely, the steps of the process look like this. Add a task to the build process:

Configure it to generate a file with migrations:

Do not forget to add to the project the PowerShell script that will perform the migration (described above), along with the migration file itself. As a result, after the project is built the artifacts could look something like this (besides the archive with the build itself, there is an additional PS script and an SQL script with the migrations):

All that remains is to set up the execution of this PowerShell script in the corresponding Release step, by analogy with the procedure described above.

About the author

Pavel Kutakov is an expert on cloud technologies and a developer and architect of software systems in various business sectors, from banking information systems operating around the world, from the USA to Papua New Guinea, to a cloud solution for a national lottery operator.

Source: https://habr.com/ru/post/436670/