We launch our neural network detector on the Raspberry Pi using the Neural Compute Stick and OpenVINO

With the spread and development of neural networks, there is an increasing need to use them on embedded and low-power devices, robots and drones. The Neural Compute Stick device in conjunction with the OpenVINO framework from Intel allows you to solve this problem by taking the heavy calculations of the neural networks. Thanks to this, you can effortlessly launch a neural network classifier or detector on a low-power device like the Raspberry Pi in almost real time, while not greatly increasing power consumption. In this post, I’ll explain how to use the OpenVINO framework (in C ++) and the Neural Compute Stick to run a simple face detection system on the Raspberry Pi.

As usual, all the code is available on GitHub .

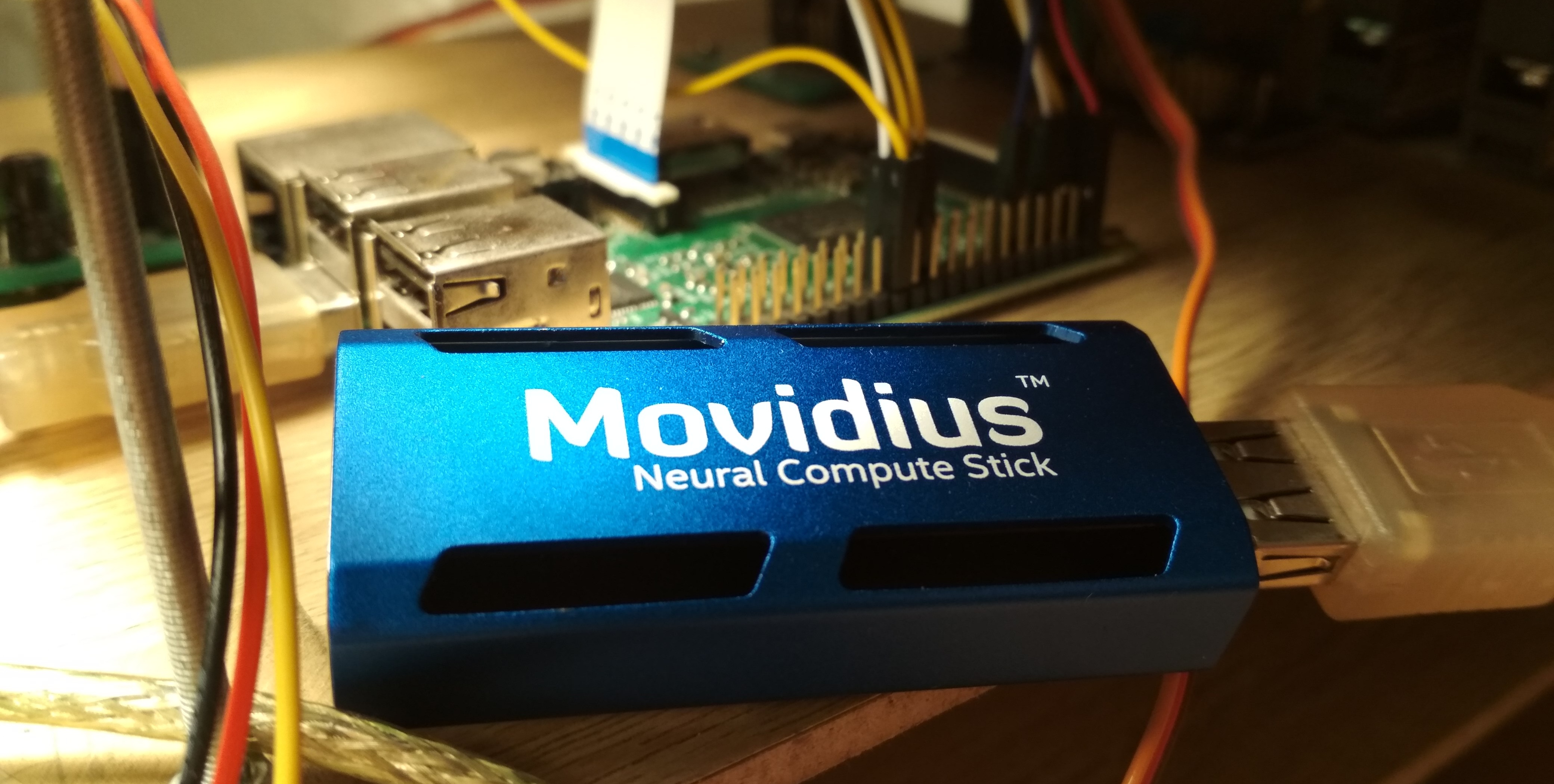

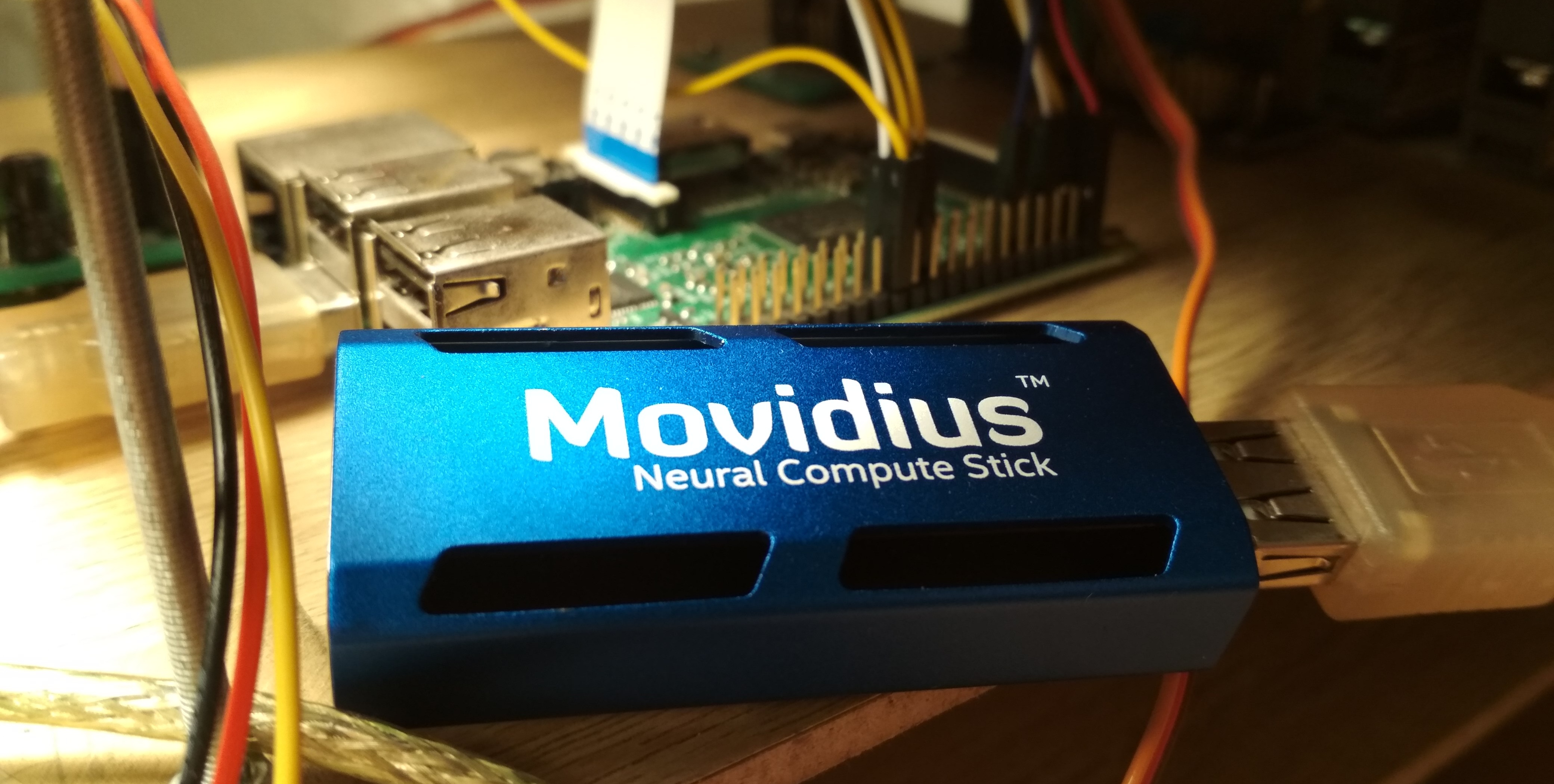

In the summer of 2017, Intel released the Neural Compute Stick (NCS) device designed to run neural networks on low-power devices, and after a couple of months it could be purchased and tested, which I did. NCS is a small computing module with an azure-colored body (also playing the role of a radiator), connected to the main device via USB. Inside, among other things, is the Intel Myriad VPU , which is essentially a 12-core parallel processor, sharpened by operations that often occur in neural networks. NCS is not suitable for teaching neural networks, but the inference in already trained neural networks is comparable in speed to that on the GPU. All calculations in NCS are carried out over 16-bit float numbers, which allows to increase the speed. NCS requires only 1 watt of power to operate, that is, at 5 V on the USB connector, up to 200 mA is consumed - this is even less than the camera for the Raspberry Pi (250 mA).

Neural Compute SDK (NCSDK) was used to work with the first NCS: it includes tools for compiling neural networks in Caffe and TensorFlow formats into NCS format, tools for measuring their performance, as well as Python and C ++ API for interference.

Then a new version of the framework for working with NCS was released: NCSDK2 . The API has changed quite a bit, and although some changes seemed strange to me, there were some useful innovations. In particular, an automatic conversion was added from float 32 bit to float 16 bit to C ++ (previously, for this, you had to insert crutches in the form of code from Numpy). Also appeared the queue of images and the results of their processing.

In May 2018, Intel released OpenVINO (previously called the Intel Computer Vision SDK). This framework is designed to effectively run neural networks on various devices: processors and graphics cards from Intel, FPGA , and the Neural Compute Stick.

In November 2018, a new version of the accelerator was released: the Neural Compute Stick 2 . The computational power of the device was increased: the description on the website promises an acceleration of up to 8x, but I did not have time to test the new version of the device. Acceleration is achieved by increasing the number of cores from 12 to 16, as well as adding new computing devices optimized for neural networks. True, I did not find information about the power consumption.

The second version of NCS is already incompatible with NCSDK or NCSDK2: their credentials passed to OpenVINO, which is capable of working with many other devices besides both versions of NCS. OpenVINO itself has great functionality and includes the following components:

In my previous articles, I talked about how to run the YOLO face detector on NCS (first article) , and also how to train your SSD face detector and run it on Raspberry Pi and NCS (second article) . In these articles, I used NCSDK and NCSDK2. In this article, I will tell you how to do something similar, but using OpenVINO, I will make a small comparison of both different face detectors and the two frameworks for their launch, and point out some of the pitfalls. I am writing in C ++, because I believe that this way you can achieve better performance, which will be important in the case of Raspberry Pi.

Not the most difficult task, although there are subtleties. OpenVINO at the time of this writing only supports Ubuntu 16.04 LTS, CentOS 7.4 and Windows 10. I have Ubuntu 18, and for installation it needs small crutches . I also wanted to compare OpenVINO with NCSDK2, with the installation of which there are also problems: in particular, it tightens its versions of Caffe and TensorFlow and may break the environment settings slightly. In the end, I decided to go the simple way and install both frameworks in a virtual machine with Ubuntu 16 (I use VirtualBox ).

It is worth noting that in order to successfully connect NCS to a virtual machine, you need to install VirtualBox guest add-ons and enable USB 3.0 support. I also added a universal filter for USB devices, as a result of which NCS was connected without problems (although the webcam still has to be connected in the virtual machine settings). To install and compile OpenVINO, you need to create an Intel account, select the framework option (with or without FPGA support) and follow the instructions . It's even easier with NCSDK: it boots from GitHub (don't forget to choose the ncsdk2 branch for the new version of the framework), after which you need to

The only problem I encountered when running NCSDK2 in a virtual machine is the following error:

It occurs at the end of the correct execution of the program and (like) does not affect anything. Apparently, this is a small bug related to the VM (this should not be the case on Raspberry).

Installation on the Raspberry Pi is significantly different. First of all, make sure that you have Raspbian Stretch: both frameworks officially work only on this OS. NCSDK2 needs to be compiled in API-only mode , otherwise it will try to install Caffe and TensorFlow, which is unlikely to please your Raspberry. In the case of OpenVINO, there is an already compiled version for Raspberry , which only needs to be unpacked and set up environment variables. In this version there is only C ++ and Python API, as well as the OpenCV library, all other tools are not available. This means that for both frameworks, models need to be converted in advance on a machine with Ubuntu. My face detection demo works on both Raspberry and desktop, so I just added the converted neural network files to my GitHub repository to make them easier to sync to Raspberry. I have a Raspberry Pi 2 model B, but it should take off with other models.

There is another subtlety regarding the interaction of the Raspberry Pi and the Neural Compute Stick: if in the case of a laptop, simply push NCS to the nearest USB 3.0 port, then you will have to find a USB cable for the Raspberry, otherwise the NSC will block the remaining three USB connectors with its case. It is also worth remembering that on Raspberry all USB versions are 2.0, so the speed of the inference will be lower due to communication delays (a detailed comparison will be later). But if you want to connect to the Raspberry two or more NCS, you will most likely have to find a USB hub with additional power.

Pretty cumbersome. We need to do a lot of different actions, starting with loading the plug-in and ending with the inference itself - so I wrote a wrapper class for the detector. The full code can be viewed on GitHub, and here I just list the main points. Let's start in order:

The definitions of all the functions we need are in the

The following variables will be needed all the time.

Now we will load the necessary plug-in - we need the one that is responsible for NCS and NCS2, it can be obtained by the name "MYRIAD". Let me remind you that in the context of OpenVINO, a plugin is just a dynamic library connecting via an explicit request. The parameter of the

Now we will create an object to load the neural network, read its description and set the size of the batch (the number of simultaneously processed images). A neural network in the OpenVINO format is defined by two files: .xml with a description of the structure and .bin with weights. While we will use ready-made detectors from OpenVINO, we will later create our own. Here

Next, the following happens:

Now the most important point: load the neural network into the plugin (that is, in the NCS). Apparently, the compilation in the desired format occurs on the fly. If the program crashes on this function, the neural network is probably not suitable for this device.

And finally - let's make a trial inference and get the dimensions of the entrance (perhaps this can be done more elegantly). First, we open the query for inferens, then from it we get a link to the input data block, and we already ask it for the size.

Let's try to upload a picture in NCS. In the same way, we create a request for inference, we get a pointer to a data block from it, and from there we get a pointer to the array itself. Next, simply copy the data from our image (here it is already reduced to the desired size). It is worth noting that measurements in

Why asynchronous? This will optimize the allocation of resources. While NCS considers the neural network, it is possible to process the next frame - this will lead to a noticeable acceleration on the Raspberry Pi.

If you are familiar with neural networks, you might have a question about when we scale the values of the input pixels of the neural network (for example, we reduce to [0,1] ). The fact is that in the OpenVINO models this transformation is already included in the description of the neural network, and when using our detector we will do something similar. And since both the conversion to float and input scaling are done by OpenVINO, we just need to resize the image.

Now (after doing some useful work) complete the request for inference. The program is blocked until the execution results come. We get a pointer to the result.

Now it's time to think about the format in which NCS returns the result of the detector. It is worth noting that the format is slightly different from what it was when using NCSDK. Generally speaking, the detector output is four-dimensional and has a dimension (1 x 1 x maximum number of detections x 7), we can assume that this is an array of size (

The

The seven values in the description of one detection are as follows:

Now about how the general scheme of inference in real time looks. First, we initialize the neural network and the camera, set up

In the examples InferenceEngine, I didn’t like the cumbersome CMake files, and I decided to compactly rewrite everything into my Makefile:

This team will work on both Ubuntu and Raspbian, thanks to a pair of tricks. I specified the paths for searching for headers and dynamic libraries for both Raspberry and Ubuntu machines. From libraries, in addition to OpenCV, you also need to connect

Separately, it is worth noting that when compiling on Raspberry you need the

NCS supports only Caffe SSD detectors out of the box, although with the help of a pair of dirty tricks I managed to run YOLO from Darknet on it. Single Shot Detector (SSD) is a popular architecture among lightweight neural networks, and with the help of different encoders (or backbone networks) you can quite flexibly vary the ratio of speed and quality.

I will experiment with different face detectors:

For detectors from OpenVINO, there are no scales in either the Caffe format or the NCSDK format, so I can only run them in OpenVINO.

I have two files in the Caffe format: .prototxt with the description of the network and .caffemodel with weights. I need to get two of them in the format OpenVINO: .xml and .bin with the description and weights, respectively. To do this, you need to use the mo.py script from OpenVINO (aka Model Optimizer):

In this case, the values are brought from the range [0,255] in range [0,1] . In general, this script has a lot of parameters, some of which are specific for individual frameworks, I recommend to look at the manual for the script.

There are no ready-made models in the OpenVINO distribution for Raspberry, but you can simply download them.

I used three comparison options: 1) NCS + Virtual Machine with Ubuntu 16.04, Core i7 processor, USB 3.0 connector; 2) NCS + Same machine, USB 3.0 connector + USB 2.0 cable (there will be more exchange delays with the device); 3) NCS + Raspberry Pi 2 model B, Raspbian Stretch, USB 2.0 connector + USB 2.0 cable.

I ran my detector with both OpenVINO and NCSDK2, detectors from OpenVINO only with their native framework, YOLO only with NCSDK2 (most likely, it can be run on OpenVINO).

The FPS table for different detectors looks like this (approximate numbers):

Note: performance was measured for the entire demo program entirely, including frame processing and visualization.

YOLO was the slowest and most unstable of all. He very often skips the detection and can not work with the illuminated frames.

The detector, which I trained, works twice as fast, is more resistant to distortions in frames and detects even small faces. However, he still sometimes skips the detections, and sometimes he detects false ones. If you cut off the last few layers from it, it will become slightly faster, but it will cease to see large faces. The same detector, launched via OpenVINO, becomes slightly faster when using USB 2.0, the quality does not change visually.

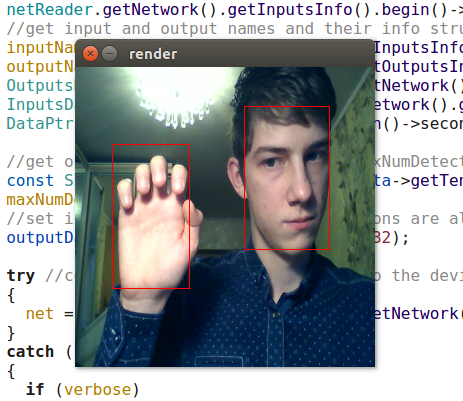

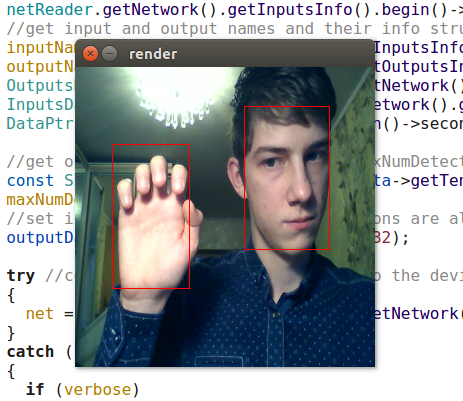

The detectors from OpenVINO, of course, are far superior to both YOLO and my detector. (I would not even train my detector if OpenVINO existed in its current form at that time). The retail-0004 model is significantly faster and at the same time practically does not miss the face, but I managed to slightly deceive her (although the confidence of these detections is low):

Competitive attack of natural intelligence on artificial

The adas-0001 detector is significantly slower, but it works with images of large size and should be more accurate. I did not notice the difference, but I checked it on pretty simple frames.

In general, it is very nice that on a low-power device like the Raspberry Pi you can use neural networks, and even almost in real time. OpenVINO provides very extensive functionality for the inference of neural networks on many different devices - much broader than I described in the article. I think Neural Compute Stick and OpenVINO will be very useful in my robotic research.

As usual, all the code is available on GitHub .

A bit about Neural Compute Stick and OpenVINO

In the summer of 2017, Intel released the Neural Compute Stick (NCS) device designed to run neural networks on low-power devices, and after a couple of months it could be purchased and tested, which I did. NCS is a small computing module with an azure-colored body (also playing the role of a radiator), connected to the main device via USB. Inside, among other things, is the Intel Myriad VPU , which is essentially a 12-core parallel processor, sharpened by operations that often occur in neural networks. NCS is not suitable for teaching neural networks, but the inference in already trained neural networks is comparable in speed to that on the GPU. All calculations in NCS are carried out over 16-bit float numbers, which allows to increase the speed. NCS requires only 1 watt of power to operate, that is, at 5 V on the USB connector, up to 200 mA is consumed - this is even less than the camera for the Raspberry Pi (250 mA).

Neural Compute SDK (NCSDK) was used to work with the first NCS: it includes tools for compiling neural networks in Caffe and TensorFlow formats into NCS format, tools for measuring their performance, as well as Python and C ++ API for interference.

Then a new version of the framework for working with NCS was released: NCSDK2 . The API has changed quite a bit, and although some changes seemed strange to me, there were some useful innovations. In particular, an automatic conversion was added from float 32 bit to float 16 bit to C ++ (previously, for this, you had to insert crutches in the form of code from Numpy). Also appeared the queue of images and the results of their processing.

In May 2018, Intel released OpenVINO (previously called the Intel Computer Vision SDK). This framework is designed to effectively run neural networks on various devices: processors and graphics cards from Intel, FPGA , and the Neural Compute Stick.

In November 2018, a new version of the accelerator was released: the Neural Compute Stick 2 . The computational power of the device was increased: the description on the website promises an acceleration of up to 8x, but I did not have time to test the new version of the device. Acceleration is achieved by increasing the number of cores from 12 to 16, as well as adding new computing devices optimized for neural networks. True, I did not find information about the power consumption.

The second version of NCS is already incompatible with NCSDK or NCSDK2: their credentials passed to OpenVINO, which is capable of working with many other devices besides both versions of NCS. OpenVINO itself has great functionality and includes the following components:

- Model Optimizer: Python script that allows you to convert neural networks from popular frameworks for deep learning into the universal OpenVINO format. The list of supported frameworks: Caffe , TensorFlow , MXNET , Kaldi (speech recognition framework), ONNX (open neural network presentation format).

- Inference Engine: C ++ and Python API for the inference of neural networks, abstracted from a specific device inference. The API code will look almost identical for CPU, GPU, FPGA and NCS.

- A set of plug-ins for different devices. Plugins are dynamic libraries that are loaded explicitly in the code of the main program. We are most interested in the plugin for NCS.

- A set of pre-trained models in the universal format OpenVINO (full list here ). An impressive collection of high-quality neural networks: detectors of persons, pedestrians, objects; recognition of the orientation of faces, particular points of faces, posture of a person; super resolution; other. It is worth noting that not all of them are supported by NCS / FPGA / GPU.

- Model Downloader: another script that simplifies downloading models in the OpenVINO format over the network (although you can easily do without it).

- Library of computer vision OpenCV , optimized for hardware Intel.

- Library of computer vision OpenVX .

- Intel Compute Library for Deep Neural Networks .

- Intel Math Kernel Library for Deep Neural Networks .

- A tool for optimizing neural networks for FPGA (optional).

- Documentation and sample programs.

In my previous articles, I talked about how to run the YOLO face detector on NCS (first article) , and also how to train your SSD face detector and run it on Raspberry Pi and NCS (second article) . In these articles, I used NCSDK and NCSDK2. In this article, I will tell you how to do something similar, but using OpenVINO, I will make a small comparison of both different face detectors and the two frameworks for their launch, and point out some of the pitfalls. I am writing in C ++, because I believe that this way you can achieve better performance, which will be important in the case of Raspberry Pi.

Install OpenVINO

Not the most difficult task, although there are subtleties. OpenVINO at the time of this writing only supports Ubuntu 16.04 LTS, CentOS 7.4 and Windows 10. I have Ubuntu 18, and for installation it needs small crutches . I also wanted to compare OpenVINO with NCSDK2, with the installation of which there are also problems: in particular, it tightens its versions of Caffe and TensorFlow and may break the environment settings slightly. In the end, I decided to go the simple way and install both frameworks in a virtual machine with Ubuntu 16 (I use VirtualBox ).

It is worth noting that in order to successfully connect NCS to a virtual machine, you need to install VirtualBox guest add-ons and enable USB 3.0 support. I also added a universal filter for USB devices, as a result of which NCS was connected without problems (although the webcam still has to be connected in the virtual machine settings). To install and compile OpenVINO, you need to create an Intel account, select the framework option (with or without FPGA support) and follow the instructions . It's even easier with NCSDK: it boots from GitHub (don't forget to choose the ncsdk2 branch for the new version of the framework), after which you need to

make install .The only problem I encountered when running NCSDK2 in a virtual machine is the following error:

E: [ 0] dispatcherEventReceive:236 dispatcherEventReceive() Read failed -1 E: [ 0] eventReader:254 Failed to receive event, the device may have reset It occurs at the end of the correct execution of the program and (like) does not affect anything. Apparently, this is a small bug related to the VM (this should not be the case on Raspberry).

Installation on the Raspberry Pi is significantly different. First of all, make sure that you have Raspbian Stretch: both frameworks officially work only on this OS. NCSDK2 needs to be compiled in API-only mode , otherwise it will try to install Caffe and TensorFlow, which is unlikely to please your Raspberry. In the case of OpenVINO, there is an already compiled version for Raspberry , which only needs to be unpacked and set up environment variables. In this version there is only C ++ and Python API, as well as the OpenCV library, all other tools are not available. This means that for both frameworks, models need to be converted in advance on a machine with Ubuntu. My face detection demo works on both Raspberry and desktop, so I just added the converted neural network files to my GitHub repository to make them easier to sync to Raspberry. I have a Raspberry Pi 2 model B, but it should take off with other models.

There is another subtlety regarding the interaction of the Raspberry Pi and the Neural Compute Stick: if in the case of a laptop, simply push NCS to the nearest USB 3.0 port, then you will have to find a USB cable for the Raspberry, otherwise the NSC will block the remaining three USB connectors with its case. It is also worth remembering that on Raspberry all USB versions are 2.0, so the speed of the inference will be lower due to communication delays (a detailed comparison will be later). But if you want to connect to the Raspberry two or more NCS, you will most likely have to find a USB hub with additional power.

How does the code OpenVINO

Pretty cumbersome. We need to do a lot of different actions, starting with loading the plug-in and ending with the inference itself - so I wrote a wrapper class for the detector. The full code can be viewed on GitHub, and here I just list the main points. Let's start in order:

The definitions of all the functions we need are in the

inference_engine.hpp file in the InferenceEngine namespace. #include <inference_engine.hpp> using namespace InferenceEngine; The following variables will be needed all the time.

inputName and outputName are needed to address the input and output of the neural network. Generally speaking, a neural network can have many inputs and outputs, but in our detectors there will be one at a time. The variable net is the network itself, request is a pointer to the last inverse request, inputBlob is a pointer to the neural network input data array. The remaining variables speak for themselves. string inputName; string outputName; ExecutableNetwork net; InferRequest::Ptr request; Blob::Ptr inputBlob; //input shape int netInputWidth; int netInputHeight; int netInputChannels; //output shape int maxNumDetectedFaces; //return code StatusCode ncsCode; Now we will load the necessary plug-in - we need the one that is responsible for NCS and NCS2, it can be obtained by the name "MYRIAD". Let me remind you that in the context of OpenVINO, a plugin is just a dynamic library connecting via an explicit request. The parameter of the

PluginDispatcher function is the list of directories in which plugins should be searched. If you set up environment variables according to the instructions, an empty string will suffice. For reference, the plugins are in [OpenVINO_install_dir]/deployment_tools/inference_engine/lib/ubuntu_16.04/intel64/ InferencePlugin plugin = PluginDispatcher({""}).getPluginByDevice("MYRIAD"); Now we will create an object to load the neural network, read its description and set the size of the batch (the number of simultaneously processed images). A neural network in the OpenVINO format is defined by two files: .xml with a description of the structure and .bin with weights. While we will use ready-made detectors from OpenVINO, we will later create our own. Here

std::string filename is the file name without the extension. You should also keep in mind that NCS only supports a batch size of 1. CNNNetReader netReader; netReader.ReadNetwork(filename+".xml"); netReader.ReadWeights(filename+".bin"); netReader.getNetwork().setBatchSize(1); Next, the following happens:

- To enter the neural network, we set the unsigned char 8 bit data type. This means that we can feed the image in the format in which it comes from the camera, and InferenceEngine will take care of the conversion (NCS performs calculations in the float 16 bit format). This will speed up a bit on the Raspberry Pi - as I understand it, the conversion is done on the NCS, so the data transfer delay via USB is less.

- We get the names of the input and output, then to refer to them.

- We get the description of the outputs (this is the map from the name of the output to the pointer to the data block). We get a pointer to the data block of the first (single) output.

- We get its size: 1 x 1 x maximum number of detections x length of the description of detection (7). About the format of the description of detections - later.

- Set output format to float 32 bit. Again, the conversion from float 16 bit takes over the InferenceEngine.

//we can set input type to unsigned char: conversion will be performed on device netReader.getNetwork().getInputsInfo().begin()->second->setPrecision(Precision::U8); //get input and output names and their info structures inputName = netReader.getNetwork().getInputsInfo().begin()->first; outputName = netReader.getNetwork().getOutputsInfo().begin()->first; OutputsDataMap outputInfo(netReader.getNetwork().getOutputsInfo()); InputsDataMap inputInfo(netReader.getNetwork().getInputsInfo()); DataPtr &outputData = (outputInfo.begin()->second); //get output shape: (1 x 1 x maxNumDetectedFaces x faceDescriptionLength(7)) const SizeVector outputDims = outputData->getTensorDesc().getDims(); maxNumDetectedFaces = outputDims[2]; //set input type to float32: calculations are all in float16, conversion is performed on device outputData->setPrecision(Precision::FP32); Now the most important point: load the neural network into the plugin (that is, in the NCS). Apparently, the compilation in the desired format occurs on the fly. If the program crashes on this function, the neural network is probably not suitable for this device.

net = plugin.LoadNetwork(netReader.getNetwork(), {}); And finally - let's make a trial inference and get the dimensions of the entrance (perhaps this can be done more elegantly). First, we open the query for inferens, then from it we get a link to the input data block, and we already ask it for the size.

//perform single inference to get input shape (a hack) request = net.CreateInferRequestPtr(); //open inference request //we need the blob size: (batch(1) x channels(3) x H x W) inputBlob = request->GetBlob(inputName); SizeVector blobSize = inputBlob->getTensorDesc().getDims(); netInputWidth = blobSize[3]; netInputHeight = blobSize[2]; netInputChannels = blobSize[1]; request->Infer(); //close request Let's try to upload a picture in NCS. In the same way, we create a request for inference, we get a pointer to a data block from it, and from there we get a pointer to the array itself. Next, simply copy the data from our image (here it is already reduced to the desired size). It is worth noting that measurements in

cv::Mat and inputBlob are stored in a different order (in OpenCV, the channel index changes the fastest, in OpenVINO - the slowest), so memcpy cannot do with one. Then we start asynchronous inference.Why asynchronous? This will optimize the allocation of resources. While NCS considers the neural network, it is possible to process the next frame - this will lead to a noticeable acceleration on the Raspberry Pi.

cv::Mat data; ... //get image somehow //create request, get data blob request = net.CreateInferRequestPtr(); inputBlob = request->GetBlob(inputName); unsigned char* blobData = inputBlob->buffer().as<unsigned char*>(); //copy from resized frame to network input int wh = netInputHeight*netInputWidth; for (int c = 0; c < netInputChannels; c++) for (int h = 0; h < wh; h++) blobData[c * wh + h] = data.data[netInputChannels*h + c]; //start asynchronous inference request->StartAsync(); If you are familiar with neural networks, you might have a question about when we scale the values of the input pixels of the neural network (for example, we reduce to [0,1] ). The fact is that in the OpenVINO models this transformation is already included in the description of the neural network, and when using our detector we will do something similar. And since both the conversion to float and input scaling are done by OpenVINO, we just need to resize the image.

Now (after doing some useful work) complete the request for inference. The program is blocked until the execution results come. We get a pointer to the result.

float * output; ncsCode = request->Wait(IInferRequest::WaitMode::RESULT_READY); output = request->GetBlob(outputName)->buffer().as<float*>(); Now it's time to think about the format in which NCS returns the result of the detector. It is worth noting that the format is slightly different from what it was when using NCSDK. Generally speaking, the detector output is four-dimensional and has a dimension (1 x 1 x maximum number of detections x 7), we can assume that this is an array of size (

maxNumDetectedFaces x 7).The

maxNumDetectedFaces parameter is specified in the description of the neural network, and it is easy to change it, for example, in the .prototxt description of the network in the Caffe format. Earlier we got it from the object representing the detector. This parameter is related to the specifics of the class of detectors SSD (Single Shot Detector) , which includes all supported NCS detectors. SSD always considers the same (and very large) number of bounding boxes for each image, and after screening out detections with a low confidence rating and removing overlapping frames with Non-maximum Suppression, usually 100-200 are usually left. This is what the parameter is responsible for.The seven values in the description of one detection are as follows:

- the number of the image in the batch where the object was detected (in our case it should be zero);

- object class (0 - background, starting from 1 - other classes, only detections with a positive class are returned);

- confidence in the presence of detection (in the range [0,1] );

- the normalized x-coordinate of the upper left corner of the bounding box (in the range [0,1] );

- similarly, the y-coordinate;

- the normalized width of the bounding box (in the range [0,1] );

- similarly - height;

The code to extract the bounding box from the detector output

void get_detection_boxes(const float* predictions, int numPred, int w, int h, float thresh, std::vector<float>& probs, std::vector<cv::Rect>& boxes) { float score = 0; float cls = 0; float id = 0; //predictions holds numPred*7 values //data format: image_id, detection_class, detection_confidence, //box_normed_x, box_normed_y, box_normed_w, box_normed_h for (int i=0; i<numPred; i++) { score = predictions[i*7+2]; cls = predictions[i*7+1]; id = predictions[i*7 ]; if (id>=0 && score>thresh && cls<=1) { probs.push_back(score); boxes.push_back(Rect(predictions[i*7+3]*w, predictions[i*7+4]*h, (predictions[i*7+5]-predictions[i*7+3])*w, (predictions[i*7+6]-predictions[i*7+4])*h)); } } } numPred we learn from the detector itself, and w,h - the size of the image for visualization.Now about how the general scheme of inference in real time looks. First, we initialize the neural network and the camera, set up

cv::Mat for raw frames, and another one for frames reduced to the required size. We fill our frames with zeros - this will add confidence that at the idle launch the neural network will not find anything. Then we start the inference cycle:- We load the current frame into the neural network using an asynchronous request - the NCS has already started working, and at this time we have the opportunity to do useful work as the main processor.

- We display all previous detections in the previous frame, draw a frame (if necessary).

- We get a new frame from the camera, compress it to the desired size. For Raspberry, I recommend using the simplest resizing algorithm — in OpenCV, this is the Nearest Neighbors interpolation. The quality of the detector is not much affected, but can throw a little speed. I also mirror the frame for easy visualization (optional).

- Now it's time to get the result from the NCS, completing the inference request. The program will be blocked until the result.

- We process new detections, select frames.

- Else: working out keystrokes, frame counting, etc.

How to compile it

In the examples InferenceEngine, I didn’t like the cumbersome CMake files, and I decided to compactly rewrite everything into my Makefile:

g++ $(RPI_ARCH) \ -I/usr/include -I. \ -I$(OPENVINO_PATH)/deployment_tools/inference_engine/include \ -I$(OPENVINO_PATH_RPI)/deployment_tools/inference_engine/include \ -L/usr/lib/x86_64-linux-gnu \ -L/usr/local/lib \ -L$(OPENVINO_PATH)/deployment_tools/inference_engine/lib/ubuntu_16.04/intel64 \ -L$(OPENVINO_PATH_RPI)/deployment_tools/inference_engine/lib/raspbian_9/armv7l \ vino.cpp wrapper/vino_wrapper.cpp \ -o demo -std=c++11 \ `pkg-config opencv --cflags --libs` \ -ldl -linference_engine $(RPI_LIBS) This team will work on both Ubuntu and Raspbian, thanks to a pair of tricks. I specified the paths for searching for headers and dynamic libraries for both Raspberry and Ubuntu machines. From libraries, in addition to OpenCV, you also need to connect

libinference_engine and libdl - a library for dynamically linking other libraries, it is needed for the plug-in to load. In this case, the libmyriadPlugin itself is not necessary. Among other things, for Raspberry, I also connect the Raspicam library to work with the camera (this is $(RPI_LIBS) ). Also had to use the standard C ++ 11.Separately, it is worth noting that when compiling on Raspberry you need the

-march=armv7-a flag (this is $(RPI_ARCH) ). If you do not specify it, the program will compile, but will fall with a quiet segment. You can also add optimization with -O3 , this will add speed.What are the detectors

NCS supports only Caffe SSD detectors out of the box, although with the help of a pair of dirty tricks I managed to run YOLO from Darknet on it. Single Shot Detector (SSD) is a popular architecture among lightweight neural networks, and with the help of different encoders (or backbone networks) you can quite flexibly vary the ratio of speed and quality.

I will experiment with different face detectors:

- YOLO, taken from here , converted first to Caffe format, then to NCS format (only with NCSDK). The image is 448 x 448.

- Own Mobilenet + SSD detector, the training of which I told in a previous publication . I still have a clipped version of this detector, which sees only small faces, and at the same time a little faster. I will check the full version of my detector on both NCSDK and OpenVINO. The image is 300 x 300.

- Detector face-detection-adas-0001 from OpenVINO: MobileNet + SSD. The image is 384 x 672.

- OpenVINO face-detection-retail-0004 detector: lightweight SqueezeNet + SSD. The image is 300 x 300.

For detectors from OpenVINO, there are no scales in either the Caffe format or the NCSDK format, so I can only run them in OpenVINO.

Transform your detector in the format OpenVINO

I have two files in the Caffe format: .prototxt with the description of the network and .caffemodel with weights. I need to get two of them in the format OpenVINO: .xml and .bin with the description and weights, respectively. To do this, you need to use the mo.py script from OpenVINO (aka Model Optimizer):

mo.py \ --framework caffe \ --input_proto models/face/ssd-face.prototxt \ --input_model models/face/ssd-face.caffemodel \ --output_dir models/face \ --model_name ssd-vino-custom \ --mean_values [127.5,127.5,127.5] \ --scale_values [127.5,127.5,127.5] \ --data_type FP16 output_dir sets the directory in which new files will be created, model_name is the name for new files without extension, data_type (FP16/FP32) is the type of weights in the neural network (NCS supports only FP16). The parameters mean_values, scale_values set the average and scale for preprocessing images before launching them into the neural network. The specific conversion looks like this:(pixel values−mean values)/scale values

In this case, the values are brought from the range [0,255] in range [0,1] . In general, this script has a lot of parameters, some of which are specific for individual frameworks, I recommend to look at the manual for the script.

There are no ready-made models in the OpenVINO distribution for Raspberry, but you can simply download them.

For example, like this.

wget --no-check-certificate \ https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-retail-0004/FP16/face-detection-retail-0004.xml \ -O ./models/face/vino.xml; \ wget --no-check-certificate \ https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-retail-0004/FP16/face-detection-retail-0004.bin \ -O ./models/face/vino.bin Comparison of detectors and frameworks

I used three comparison options: 1) NCS + Virtual Machine with Ubuntu 16.04, Core i7 processor, USB 3.0 connector; 2) NCS + Same machine, USB 3.0 connector + USB 2.0 cable (there will be more exchange delays with the device); 3) NCS + Raspberry Pi 2 model B, Raspbian Stretch, USB 2.0 connector + USB 2.0 cable.

I ran my detector with both OpenVINO and NCSDK2, detectors from OpenVINO only with their native framework, YOLO only with NCSDK2 (most likely, it can be run on OpenVINO).

The FPS table for different detectors looks like this (approximate numbers):

| Model | USB 3.0 | USB 2.0 | Raspberry pi |

|---|---|---|---|

| Custom SSD with NCSDK2 | 10.8 | 9.3 | 7.2 |

| Custom longrange SSD with NCSDK2 | 11.8 | 10.0 | 7.3 |

| YOLO v2 with NCSDK2 | 5.3 | 4.6 | 3.6 |

| Custom SSD with OpenVINO | 10.6 | 9.9 | 7.9 |

| OpenVINO face-detection-retail-0004 | 15.6 | 14.2 | 9.3 |

| OpenVINO face-detection-adas-0001 | 5.8 | 5.5 | 3.9 |

Note: performance was measured for the entire demo program entirely, including frame processing and visualization.

YOLO was the slowest and most unstable of all. He very often skips the detection and can not work with the illuminated frames.

The detector, which I trained, works twice as fast, is more resistant to distortions in frames and detects even small faces. However, he still sometimes skips the detections, and sometimes he detects false ones. If you cut off the last few layers from it, it will become slightly faster, but it will cease to see large faces. The same detector, launched via OpenVINO, becomes slightly faster when using USB 2.0, the quality does not change visually.

The detectors from OpenVINO, of course, are far superior to both YOLO and my detector. (I would not even train my detector if OpenVINO existed in its current form at that time). The retail-0004 model is significantly faster and at the same time practically does not miss the face, but I managed to slightly deceive her (although the confidence of these detections is low):

Competitive attack of natural intelligence on artificial

The adas-0001 detector is significantly slower, but it works with images of large size and should be more accurate. I did not notice the difference, but I checked it on pretty simple frames.

Conclusion

In general, it is very nice that on a low-power device like the Raspberry Pi you can use neural networks, and even almost in real time. OpenVINO provides very extensive functionality for the inference of neural networks on many different devices - much broader than I described in the article. I think Neural Compute Stick and OpenVINO will be very useful in my robotic research.

Source: https://habr.com/ru/post/436744/