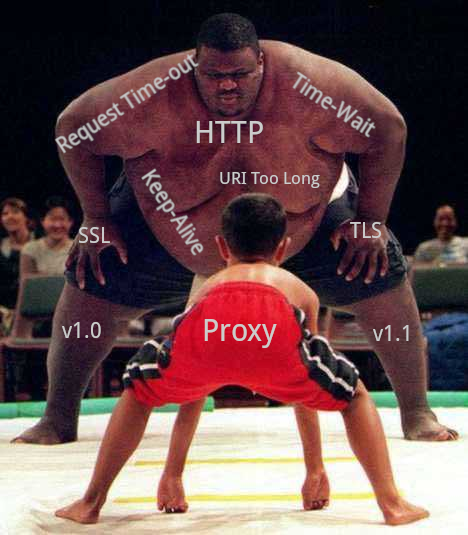

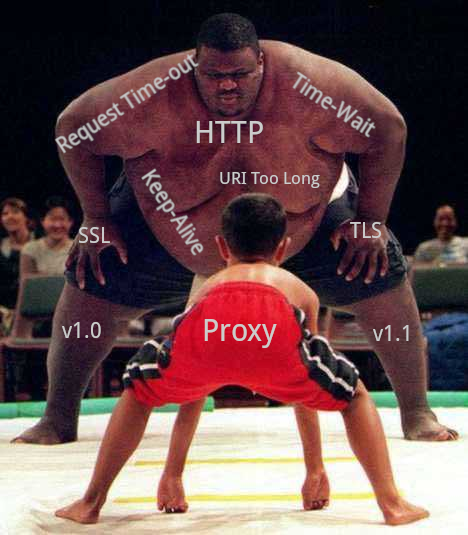

Trial and error in choosing an HTTP reverse proxy

Hello!

Today we want to talk about how the team at the hotel booking service Ostrovok.ru dealt with the growth of a microservice whose job is to exchange information with our suppliers. Undying, DevOps Team Lead at Ostrovok.ru, shares his experience.

At first, the microservice was small and performed the following functions:

- accept a request from a local service;

- make a request to a partner;

- normalize the response;

- return the result to the requesting service.

However, as time went on, the service grew along with the number of partners and the number of requests to them.

As the service grew, various problems began to emerge. Different suppliers impose their own rules: some limit the maximum number of connections, others restrict clients to whitelists.

As a result, we had to solve the following tasks:

- preferably, have several fixed external IP addresses that can be given to partners for their whitelists;

- have a single pool of connections to all suppliers, so that the number of connections stays minimal when our microservice scales;

- terminate SSL and keep `keepalive` in one place, thereby reducing the load on the partners themselves.

We did not deliberate for long and went straight to the question of what to choose: Nginx or Haproxy.

At first, the pendulum swung toward Nginx, since I had solved most HTTP/HTTPS-related problems with its help and had always been pleased with the result.

The scheme was simple: a request was made to our new proxy server on Nginx with a domain like `<partner_tag>.domain.local`. In Nginx there was a `map` from `<partner_tag>` to the partner's address. The address was taken from the `map`, and `proxy_pass` was made to that address.

Here is an example of the `map` with which we parse the domain and select the upstream from the list:

```nginx
### take the prefix from the domain name: <tag>.domain.local
map $http_host $upstream_prefix {
    default 0;
    "~^([^\.]+)\." $1;
}

### select the right address by prefix
map $upstream_prefix $upstream_address {
    include snippet.d/upstreams_map;
    default http://127.0.0.1:8080;
}

### set the upstream_host variable based on the upstream_address variable
map $upstream_address $upstream_host {
    default 0;
    "~^https?://([^:]+)" $1;
}
```

And this is what `snippet.d/upstreams_map` looks like:

```nginx
"one" "http://one.domain.net";
"two" "https://two.domain.org";
```

Here we have the `server{}`:

```nginx
server {
    listen 80;

    location / {
        proxy_http_version 1.1;
        proxy_pass $upstream_address$request_uri;
        proxy_set_header Host $upstream_host;
        proxy_set_header X-Forwarded-For "";
        proxy_set_header X-Forwarded-Port "";
        proxy_set_header X-Forwarded-Proto "";
    }
}

# service for error handling and logging
server {
    listen 127.0.0.1:8080;

    location / {
        return 400;
    }

    location /ngx_status/ {
        stub_status;
    }
}
```

Everything is cool, everything works. We could have ended the article here, if not for one nuance.
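Before getting to that nuance, it may help to trace the lookup chain by hand. The following is only an illustrative emulation of the three `map` blocks in shell, not part of the actual setup: the function names (`upstream_prefix`, `upstream_address`, `upstream_host`, `route`) are hypothetical, and the tag table is hard-coded from the `upstreams_map` example.

```bash
#!/usr/bin/env bash
# Illustrative emulation of the three Nginx maps; all function names are hypothetical.

# First map: everything before the first dot of the Host header.
# Like the regex "^([^\.]+)\.", it falls back to 0 when there is no such prefix.
upstream_prefix() {
    case "$1" in
        ""|.*) echo 0 ;;              # empty value or leading dot: no match
        *.*)   echo "${1%%.*}" ;;     # keep the part before the first dot
        *)     echo 0 ;;              # no dot at all: no match
    esac
}

# Second map: look the prefix up in the tag table (contents of snippet.d/upstreams_map).
upstream_address() {
    case "$1" in
        one) echo "http://one.domain.net" ;;
        two) echo "https://two.domain.org" ;;
        *)   echo "http://127.0.0.1:8080" ;;   # default: the local error-handling server
    esac
}

# Third map: drop the scheme and everything from the first colon onwards.
upstream_host() {
    case "$1" in
        http://*)  rest="${1#http://}" ;;
        https://*) rest="${1#https://}" ;;
        *)         echo 0; return ;;
    esac
    host="${rest%%:*}"
    if [ -n "$host" ]; then echo "$host"; else echo 0; fi
}

# Chain the three lookups the way Nginx evaluates the variables.
route() {
    prefix="$(upstream_prefix "$1")"
    address="$(upstream_address "$prefix")"
    echo "$prefix $address $(upstream_host "$address")"
}

route "one.domain.local"   # prints: one http://one.domain.net one.domain.net
```

A request with an unknown or missing tag ends up at `http://127.0.0.1:8080`, which is exactly the local server that answers with 400.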

When using `proxy_pass` straight to the target address, the request goes, as a rule, via HTTP/1.0 without `keepalive`, and the connection closes immediately after the response is completed. Even if we set `proxy_http_version 1.1`, nothing will change without an upstream (see `proxy_http_version`).

What to do? The first thought is to put all the suppliers into upstreams, where the server is the supplier's address we need, and to keep `"tag" "upstream_name"` pairs in the `map`.

Add another `map` for parsing the scheme:

```nginx
### take the prefix from the domain name: <tag>.domain.local
map $http_host $upstream_prefix {
    default 0;
    "~^([^\.]+)\." $1;
}

### select the right address by prefix
map $upstream_prefix $upstream_address {
    include snippet.d/upstreams_map;
    default http://127.0.0.1:8080;
}

### set the upstream_host variable based on the upstream_address variable
map $upstream_address $upstream_host {
    default 0;
    "~^https?://([^:]+)" $1;
}

### add scheme parsing: https for those who need it, http for those who need it, but not that much
map $upstream_address $upstream_scheme {
    default "http://";
    "~(https?://)" $1;
}
```

And create upstreams with the tag names:

```nginx
upstream one {
    keepalive 64;
    server one.domain.com;
}

upstream two {
    keepalive 64;
    server two.domain.net;
}
```

The server itself is slightly modified to take the scheme into account and to use the upstream name instead of the address:

```nginx
server {
    listen 80;

    location / {
        proxy_http_version 1.1;
        proxy_pass $upstream_scheme$upstream_prefix$request_uri;
        proxy_set_header Host $upstream_host;
        proxy_set_header X-Forwarded-For "";
        proxy_set_header X-Forwarded-Port "";
        proxy_set_header X-Forwarded-Proto "";
    }
}

# service for error handling and logging
server {
    listen 127.0.0.1:8080;

    location / {
        return 400;
    }

    location /ngx_status/ {
        stub_status;
    }
}
```

Fine. The solution works: we add the `keepalive` directive to every upstream, set `proxy_http_version 1.1`, and now we have a pool of connections and everything works as it should.

At this point we could just finish the article and go drink tea. Or not?

After all, while we are drinking tea, one of the suppliers may change the IP address or a group of addresses (hi, Amazon) behind the same domain, so a supplier may drop off in the middle of our tea party.

Well, what to do? Nginx has an interesting nuance: during a reload it can re-resolve the servers inside an `upstream` to new addresses and send traffic to them. In general, that is also a solution: put an Nginx reload into cron every 5 minutes and keep drinking tea.

But it still seemed like a so-so solution to me, so I began to eye Haproxy.
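For completeness, the cron-based workaround could look roughly like the sketch below. It is a variation on the idea rather than what was actually deployed: instead of reloading unconditionally, it reloads only when a supplier's DNS answer has changed. The names `STATE_DIR`, `resolve_host`, `reload_proxy`, `addresses_changed` and `check_and_reload` are hypothetical, and the sketch assumes a glibc system with `getent` and an `nginx` binary on the `PATH`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reload Nginx only when a supplier's DNS answer changed.
# STATE_DIR and all function names are illustrative assumptions.

STATE_DIR="${STATE_DIR:-/tmp/upstream-dns-state}"

# Print the sorted, de-duplicated list of addresses for a host name.
resolve_host() {
    getent ahosts "$1" | awk '{print $1}' | sort -u
}

# Validate the configuration first, then ask the master process to reload it.
reload_proxy() {
    nginx -t -q && nginx -s reload
}

# Return 0 (and remember the new answer) if the addresses differ from the last run.
addresses_changed() {
    host="$1"
    mkdir -p "$STATE_DIR"
    current="$(resolve_host "$host")"
    previous="$(cat "$STATE_DIR/$host" 2>/dev/null)"
    [ -z "$current" ] && return 1              # resolution failed: keep the old state
    [ "$current" = "$previous" ] && return 1   # nothing changed
    printf '%s\n' "$current" > "$STATE_DIR/$host"
    return 0
}

# Check every host given as an argument and reload at most once.
check_and_reload() {
    changed=0
    for h in "$@"; do
        if addresses_changed "$h"; then
            changed=1
        fi
    done
    [ "$changed" -eq 1 ] && reload_proxy
    return 0
}

# Example call, e.g. from a script that cron runs every 5 minutes:
# check_and_reload one.domain.com two.domain.net
```

Note that the very first run always counts as a change, because no previous answer has been stored yet.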

Haproxy lets you specify `dns resolvers` and configure a `dns cache`. Haproxy will then refresh the DNS cache when its entries expire and replace the upstream addresses if they have changed.

Fine! Now it is down to the settings.

Here is a quick configuration example for Haproxy:

```haproxy
frontend http
    bind *:80
    http-request del-header X-Forwarded-For
    http-request del-header X-Forwarded-Port
    http-request del-header X-Forwarded-Proto

    capture request header Host len 32
    capture request header Referer len 128
    capture request header User-Agent len 128

    acl host_present hdr(host) -m len gt 0
    use_backend %[req.hdr(host),lower,field(1,'.')] if host_present
    default_backend default

resolvers dns
    hold valid 1s
    timeout retry 100ms
    nameserver dns1 1.1.1.1:53

backend one
    http-request set-header Host one.domain.com
    server one--one.domain.com one.domain.com:80 resolvers dns check

backend two
    http-request set-header Host two.domain.net
    server two--two.domain.net two.domain.net:443 resolvers dns check ssl verify none check-sni two.domain.net sni str(two.domain.net)
```

It seems that this time everything works as it should. The one thing I dislike about Haproxy is how verbose its configuration is: you need to write quite a lot of text to add one working upstream. But laziness is the engine of progress: if you don't want to write the same thing over and over, write a generator.
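What such a generator has to do for each entry is small: split a `"tag" "scheme://host[:port]";` line and emit one `backend` section. The following is a simplified sketch of that single step, not the script used in production. `render_backend` is a hypothetical name, and the line format is assumed from the `upstreams_map` example shown earlier.

```bash
#!/usr/bin/env bash
# Simplified, illustrative per-line step; render_backend is a hypothetical name.

render_backend() {
    # Drop quotes and the trailing semicolon, then split into tag and address.
    set -- $(printf '%s' "$1" | tr -d '";')
    tag=$1
    addr=$2

    scheme=${addr%%://*}      # "http" or "https"
    hostport=${addr#*://}     # "host" or "host:port"
    domain=${hostport%%:*}
    port=""
    case "$hostport" in
        *:*) port=${hostport##*:} ;;
    esac

    options="resolvers dns check"
    case "$scheme" in
        http)
            port=${port:-80}
            ;;
        https)
            port=${port:-443}
            options="$options ssl verify none check-sni $domain sni str($domain)"
            ;;
        *)
            return 1          # empty line or unknown scheme: emit nothing
            ;;
    esac

    printf 'backend %s\n' "$tag"
    printf '    http-request set-header Host %s\n' "$domain"
    printf '    server %s--%s %s:%s %s\n' "$tag" "$domain" "$domain" "$port" "$options"
}

render_backend '"two" "https://two.domain.org";'
```

For the sample line, this prints a `backend two` section whose `server` line targets `two.domain.org:443` with the TLS options appended, in the same shape as the hand-written backends above.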

I already had a map from Nginx in the format `"tag" "upstream"`, so I decided to take it as the basis, parse it and generate a Haproxy backend from these values.

```bash
#!/usr/bin/env bash

haproxy_backend_map_file=./root/etc/haproxy/snippet.d/name_domain_map
haproxy_backends_file=./root/etc/haproxy/99_backends.cfg
nginx_map_file=./nginx_map

while getopts 'n:b:m:' OPT; do
    case ${OPT} in
        n) nginx_map_file=${OPTARG} ;;
        b) haproxy_backends_file=${OPTARG} ;;
        m) haproxy_backend_map_file=${OPTARG} ;;
        *)
            # double quotes, so that ${0} is actually expanded
            echo "Usage: ${0} -n [nginx_map_file] -b [haproxy_backends_file] -m [haproxy_backend_map_file]"
            exit 1
    esac
done

# Append one backend section to the backends file.
# Arguments: tag, domain, port and an optional non-empty flag that enables TLS.
function write_backend() {
    local tag=$1
    local domain=$2
    local port=$3
    local server_options="resolvers dns check"

    [ -n "${4}" ] && local ssl_options="ssl verify none check-sni ${domain} sni str(${domain})"
    [ -n "${4}" ] && server_options+=" ${ssl_options}"

    cat >> "${haproxy_backends_file}" <<EOF
backend ${tag}
    http-request set-header Host ${domain}
    server ${tag}--${domain} ${domain}:${port} ${server_options}
EOF
}

# Start from empty output files.
:> "${haproxy_backends_file}"
:> "${haproxy_backend_map_file}"

while read tag addr; do
    tag=${tag//\"/}
    addr=${addr//\"/}   # also strip quotes around the address, if present
    [ -z "${tag:0}" ] && continue
    [ "${tag:0:1}" == "#" ] && continue

    # split scheme://domain:port on colons, dropping the trailing semicolon
    IFS=":" read scheme domain port <<<${addr//;}
    unset IFS

    domain=${domain//\/}
    case ${scheme} in
        http)
            port=${port:-80}
            write_backend ${tag} ${domain} ${port}
            ;;
        https)
            port=${port:-443}
            write_backend ${tag} ${domain} ${port} 1
    esac
done < <(sort -V ${nginx_map_file})
```

Now all we need to do is add a new host to nginx_map, run the generator and get a ready Haproxy config.

That is probably all for today. This article is more of an introduction, devoted to the problem of choosing a solution and integrating it into the existing environment.

In the next article I will tell you more about the pitfalls we encountered when using Haproxy, which metrics turned out to be useful to monitor, and what exactly should be tuned in the system to get maximum performance from the servers.

Thank you all for your attention, see you soon!

Source: https://habr.com/ru/post/436992/