How to defeat the dragon: rewrite your program in Golang

It so happened that your program was written in a scripting language - for example, in Ruby - and there was a need to rewrite it in Golang.

A reasonable question: why would you need to rewrite a program that is already written and works fine?

First, suppose the program is tied to a specific ecosystem; in our case, Docker and Kubernetes. The entire infrastructure of these projects is written in Golang, which gives access to the libraries used by Docker, Kubernetes and others. From the point of view of maintaining, developing and improving your program, it pays to use the same infrastructure as the main products. Then all new features are available immediately, and you do not have to re-implement them in another language. In our particular situation, this condition alone was enough to decide that we needed to change languages at all, and which language it should be. There are, however, other advantages...

Secondly, Golang applications are easy to install. You do not need to install RVM, Ruby, a set of gems, etc. on the system. You just download a single static binary and run it.

Thirdly, Golang programs run faster. This is not the significant, systemic speedup that comes from the right architecture and algorithms in any language. It is the kind of speedup you notice when you start your program from the console. For example, --help in Ruby can take 0.8 seconds, and in Golang 0.02 seconds. This simply makes the program more pleasant to use.

NB: As regular readers of our blog may have guessed, this article is based on our experience of rewriting our dapp product, which is now known, though not yet officially (!), as werf. See the end of the article for a few details about it.

Well: you could simply sit down and write new code, completely isolated from the old script code. But some complications and constraints on the resources and time allocated for development immediately emerge:

- The current Ruby version of the program constantly needs improvements and fixes:

  - bugs surface in everyday use and must be fixed promptly;

  - adding new features cannot be frozen for six months, since clients/users often need them.

- Maintaining 2 codebases at the same time is difficult and expensive:

  - a team of 2-3 people is small, considering that it has other projects besides this Ruby program.

- Rolling out the new version:

  - there should be no significant loss of functionality;

  - ideally, the switch should be seamless.

We need to organize a continuous porting process. But how can this be done if the Golang version is developed as a separate program?

We write in two languages at once

What if we move components to Golang from the bottom up? We start with low-level things, then work our way up through the abstractions.

Imagine that your program consists of the following components:

```
lib/
  config.rb
  build/
    image.rb
  git_repo/
    base.rb
    local.rb
    remote.rb
  docker_registry.rb
  builder/
    base.rb
    shell.rb
    ansible.rb
  stage/
    base.rb
    from.rb
    before_install.rb
    git.rb
    install.rb
    before_setup.rb
    setup.rb
  deploy/
    kubernetes/
      client.rb
      manager/
        base.rb
        job.rb
        deployment.rb
        pod.rb
```

Porting a function component

A simple case. We take an existing component that is fairly isolated from the rest, for example config (lib/config.rb). This component defines only the Config::parse function, which takes the path to the config, reads it and returns a filled-in structure. Its implementation will be handled by a separate Golang binary, config, and the corresponding config package:

```
cmd/
  config/
    main.go
pkg/
  config/
    config.go
```

The Golang binary reads its arguments from a JSON file and writes the result to another JSON file:

```
config -args-from-file args.json -res-to-file res.json
```

It is assumed that config may print messages to stdout/stderr (in our Ruby program, output always goes to stdout/stderr, so this is not parameterized).

Calling the config binary is equivalent to calling a function of the config component. The arguments in the args.json file specify the function name and its parameters. The function's result comes back in the res.json file. If the function must return an object of some class, the data of that object is returned serialized to JSON.

For example, to call the Config::parse function, we pass the following args.json:

```json
{
  "command": "Parse",
  "configPath": "path-to-config.yaml"
}
```

We get the result in res.json:

```json
{
  "config": {
    "Images": [{"Name": "nginx"}, {"Name": "rails"}],
    "From": "ubuntu:16.04"
  }
}
```

The config field contains the state of the Config::Config object serialized to JSON. From this state, the Ruby caller has to construct a Config::Config object.

In the event of an error, the binary can return the following JSON:

```json
{
  "error": "no such file path-to-config.yaml"
}
```

The caller must handle the error field.

Calling Golang from Ruby

On the Ruby side, we turn Config::parse(config_path) into a wrapper that calls our config binary, reads the result and handles all possible errors. Here is a simplified Ruby example:

```ruby
require "json"
require "tmpdir"

module Config
  class ParseError < StandardError; end

  def self.parse(config_path)
    # A fresh temporary directory per call keeps concurrent calls apart
    # and is removed automatically when the block exits.
    Dir.mktmpdir do |tmp_dir|
      args_file = File.join(tmp_dir, "args.json")
      res_file = File.join(tmp_dir, "res.json")

      File.write(args_file, JSON.dump({
        "command" => "Parse",
        "configPath" => config_path,
      }))

      # The Go binary writes its own messages straight to stdout/stderr.
      ok = system("config", "-args-from-file", args_file, "-res-to-file", res_file)
      raise "config failed with unknown error" unless ok

      res = JSON.parse(File.read(res_file))
      raise ParseError, res["error"] if res["error"]

      # Inside module Config, `Config` resolves to the nested Config::Config class.
      Config.new_from_state(res["config"])
    end
  end
end
```

The binary may crash with an unexpected non-zero exit code; this is an exceptional situation. Otherwise it exits normally, and we check res.json for the error and config fields, finally returning a Config::Config object built from the serialized config field.

From the user's point of view, nothing about Config::parse has changed.

Porting a class component

For example, take the lib/git_repo class hierarchy. It has 2 classes: GitRepo::Local and GitRepo::Remote. It makes sense to combine their implementations in a single git_repo binary and, accordingly, a single git_repo package in Golang.

```
cmd/
  git_repo/
    main.go
pkg/
  git_repo/
    base.go
    local.go
    remote.go
```

Calling the git_repo binary corresponds to calling any method of a GitRepo::Local or GitRepo::Remote object. An object has state, and that state can change after a method call. Therefore, we pass the current state, serialized to JSON, in the arguments. And in the output we always get the new state of the object, also in JSON.

For example, to call the local_repo.commit_exists?(commit) method, we pass the following args.json:

```json
{
  "localGitRepo": {
    "name": "my_local_git_repo",
    "path": "path/to/git"
  },
  "method": "IsCommitExists",
  "commit": "e43b1336d37478282693419e2c3f2d03a482c578"
}
```

In the output we get res.json:

```json
{
  "localGitRepo": {
    "name": "my_local_git_repo",
    "path": "path/to/git"
  },
  "result": true
}
```

The localGitRepo field contains the new state of the object (which may be unchanged). We must write this state into the current Ruby object local_git_repo in any case.

Calling Golang from Ruby

On the Ruby side, we turn each method of the GitRepo::Base, GitRepo::Local and GitRepo::Remote classes into a wrapper that calls our git_repo binary, reads the result and sets the new state of the GitRepo::Local or GitRepo::Remote object.

Otherwise, everything is the same as calling a simple function.

How to deal with polymorphism and base classes

The easiest approach is not to support polymorphism in Golang at all. That is, calls to the git_repo binary always explicitly address a specific implementation (if localGitRepo is given in the arguments, the call came from a GitRepo::Local object; if remoteGitRepo is given, from a GitRepo::Remote object), and we get by with copying a small amount of boilerplate code in cmd. After all, this code will be thrown away as soon as the move to Golang is complete.
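Here is a sketch of that explicit dispatch. The `RemoteGitRepo` state (with a `url` field) and the `Describe` method are hypothetical; they only show that each concrete type gets its own branch and its own copy of the method switch, with no shared Go interface.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Hypothetical serialized states of GitRepo::Local and GitRepo::Remote.
type LocalGitRepo struct {
	Name string `json:"name"`
	Path string `json:"path"`
}

type RemoteGitRepo struct {
	Name string `json:"name"`
	URL  string `json:"url"`
}

func (r *LocalGitRepo) Describe() string  { return "local " + r.Path }
func (r *RemoteGitRepo) Describe() string { return "remote " + r.URL }

type request struct {
	LocalGitRepo  *LocalGitRepo  `json:"localGitRepo,omitempty"`
	RemoteGitRepo *RemoteGitRepo `json:"remoteGitRepo,omitempty"`
	Method        string         `json:"method"`
}

type response struct {
	LocalGitRepo  *LocalGitRepo  `json:"localGitRepo,omitempty"`
	RemoteGitRepo *RemoteGitRepo `json:"remoteGitRepo,omitempty"`
	Result        any            `json:"result,omitempty"`
	Error         string         `json:"error,omitempty"`
}

// handle picks the implementation from whichever state field is present.
// Each branch repeats the method switch: throwaway boilerplate that is
// deleted once the port is finished.
func handle(raw []byte) response {
	var req request
	if err := json.Unmarshal(raw, &req); err != nil {
		return response{Error: err.Error()}
	}
	switch {
	case req.LocalGitRepo != nil && req.RemoteGitRepo == nil:
		resp := response{LocalGitRepo: req.LocalGitRepo}
		switch req.Method {
		case "Describe":
			resp.Result = req.LocalGitRepo.Describe()
		default:
			resp.Error = "unknown method " + req.Method
		}
		return resp
	case req.RemoteGitRepo != nil && req.LocalGitRepo == nil:
		resp := response{RemoteGitRepo: req.RemoteGitRepo}
		switch req.Method {
		case "Describe":
			resp.Result = req.RemoteGitRepo.Describe()
		default:
			resp.Error = "unknown method " + req.Method
		}
		return resp
	default:
		return response{Error: "exactly one of localGitRepo or remoteGitRepo must be set"}
	}
}

func main() {
	// The same method name is routed to two different implementations.
	for _, raw := range []string{
		`{"localGitRepo":{"name":"a","path":"path/to/git"},"method":"Describe"}`,
		`{"remoteGitRepo":{"name":"b","url":"https://example.com/repo.git"},"method":"Describe"}`,
	} {
		out, _ := json.Marshal(handle([]byte(raw)))
		fmt.Println(string(out))
	}
}
```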

How to change the state of another object

Sometimes an object receives another object as a parameter and calls a method on it that implicitly changes the state of that second object.

In this case:

- When calling the binary, pass the serialized state of every parameter object in addition to the serialized state of the object whose method is called.

- After the call, restore the new state of the object whose method was called, as well as the new state of every object passed as a parameter.

Otherwise, everything is the same.

What is the result?

We take a component, port it to Golang and release a new version.

Once the underlying components have been ported and we move on to a higher-level component that uses them, that component can absorb the underlying ones. The corresponding extra binaries can then be deleted as unnecessary.

And so on, until we reach the very top layer, which glues all the underlying abstractions together. This completes the first stage of porting. The top layer is the CLI. It can stay in Ruby for a while before the full switch to Golang.

How to distribute this monster?

Good: we now have an approach for gradually porting all components. The question is: how do you distribute a program written in 2 languages?

On the Ruby side, the program is still installed as a Gem. As soon as it needs to call a binary, it can download that dependency from a specific (hardcoded) URL and cache it locally on the system (somewhere among its service files).

When we release a new version of our 2-language program, we must:

- build all binary dependencies and upload them to the hosting;

- create a new version of the Ruby Gem.

The binaries are built separately for each new version, even if a component has not changed. We could have versioned all the dependent binaries independently; then we would not need to build new binaries for every new version of the program. But we did not have time for anything super-complex or for optimizing temporary code, so for simplicity we built a separate set of binaries for each program version, at the cost of disk space and download time.

Disadvantages of the approach

Obviously, there is overhead from constantly calling external programs via system/exec.

It is difficult to cache any global data at the Golang level: all data in Golang (for example, package variables) is created when a method is called and dies when it completes, because every call is a separate process. This must always be kept in mind. However, caching is still possible at the level of class instances or by explicitly passing parameters to an external component.
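To illustrate instance-level caching, here is a sketch that keeps memoized answers inside the serialized object state (a hypothetical `cache` field), so they survive between separate runs of the binary, whereas the package variable `lookups` does not. `expensiveCheck` is a placeholder for a real Git lookup; `invoke` simulates one run of the binary.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Repo state as it travels through args.json/res.json.
type Repo struct {
	Path  string          `json:"path"`
	Cache map[string]bool `json:"cache,omitempty"`
}

// lookups counts expensive calls in this process only; like any package
// variable, it starts from zero on every invocation of the binary.
var lookups int

// expensiveCheck is a placeholder for a slow Git lookup.
func expensiveCheck(path, commit string) bool {
	lookups++
	return len(commit) == 40
}

func (r *Repo) IsCommitExists(commit string) bool {
	if v, ok := r.Cache[commit]; ok {
		return v // answered from state carried over from a previous run
	}
	v := expensiveCheck(r.Path, commit)
	if r.Cache == nil {
		r.Cache = map[string]bool{}
	}
	r.Cache[commit] = v
	return v
}

// invoke simulates one binary run: decode state, call, encode new state.
func invoke(state []byte, commit string) ([]byte, bool, error) {
	var r Repo
	if err := json.Unmarshal(state, &r); err != nil {
		return nil, false, err
	}
	res := r.IsCommitExists(commit)
	out, err := json.Marshal(r)
	return out, res, err
}

func main() {
	state := []byte(`{"path":"path/to/git"}`)
	commit := "e43b1336d37478282693419e2c3f2d03a482c578"
	for run := 1; run <= 2; run++ {
		lookups = 0 // a real run would be a fresh process anyway
		var ok bool
		var err error
		state, ok, err = invoke(state, commit)
		if err != nil {
			panic(err)
		}
		fmt.Printf("run %d: exists=%v, expensive lookups=%d\n", run, ok, lookups)
	}
}
```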

We must not forget to pass object state to Golang and to restore it correctly after the call.

Golang binary dependencies take up a lot of space. A single 30 MB binary, i.e. one Golang program, is one thing. It is another matter when you have ported ~10 components, each weighing 30 MB: that is 300 MB of files for each version. Because of this, space quickly runs out on the binary hosting and on the host machine where your program runs and is constantly updated. However, the problem is not significant if you periodically delete old versions.

Also note that every program update takes some time to download the binary dependencies.

Benefits of the approach

Despite all these drawbacks, this approach lets you organize a continuous process of porting to another language and get by with a single development team.

The most important advantage is the ability to get quick feedback on the new code, test and stabilize it.

Meanwhile, you can keep adding new features to your program and fixing bugs in the current version.

How to complete the coup in favor of Golang

Once all the main components have been ported to Golang and tested in production, all that remains is to rewrite the top-level interface of your program (the CLI) in Golang and throw out all the old Ruby code.

At this stage, the only remaining task is to make your new CLI compatible with the old one.

Cheers, comrades! The revolution has been accomplished.

How we rewrote dapp in Golang

Dapp is a utility developed by Flant for organizing the CI/CD process. It was written in Ruby for historical reasons:

- Extensive experience in developing programs in Ruby.

- Chef was in use (its recipes are written in Ruby).

- Inertia: reluctance to use a language new to us for something serious.

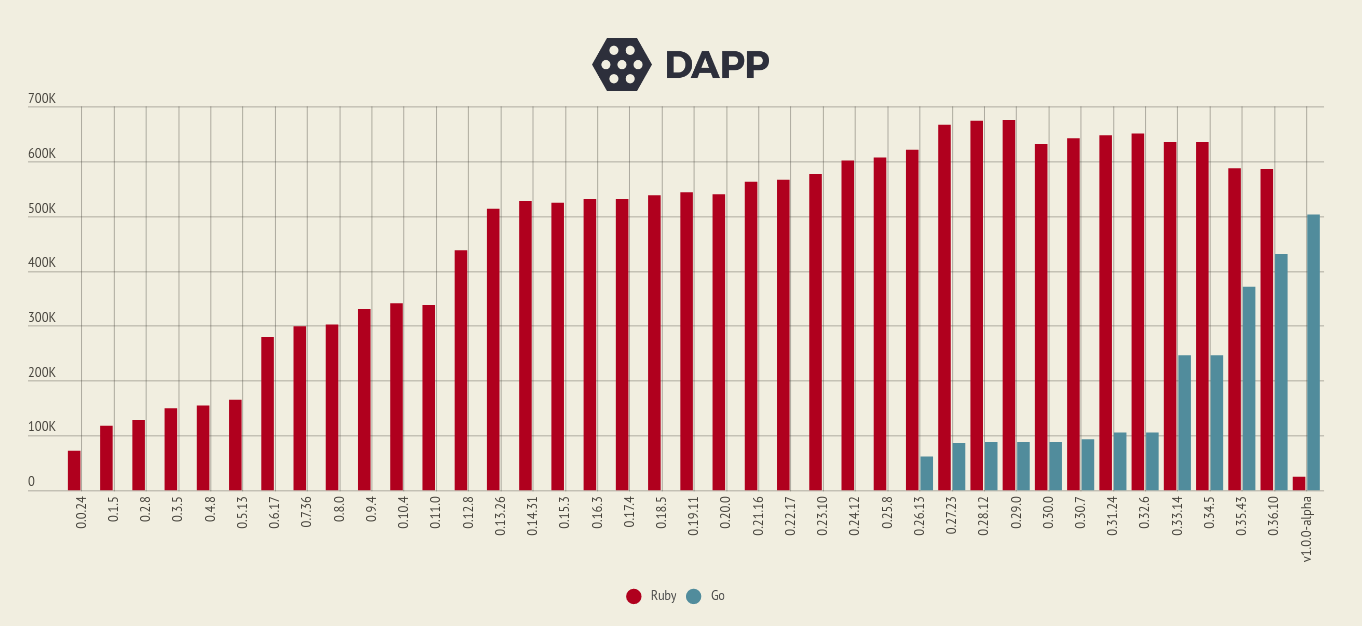

The approach described in the article was used to rewrite dapp to Golang. On the above chart you can see the chronology of the struggle between good (Golang, blue) and evil (Ruby, red):

The amount of code in the dapp / werf project in Ruby vs. Golang over releases

You can already download the alpha version of 1.0, which contains no Ruby at all. We have also renamed dapp to werf, but that is another story... Look forward to the full release of werf 1.0 soon!

As an additional benefit of this migration, and an illustration of integration with the much-discussed Kubernetes ecosystem, rewriting dapp in Golang let us create another project, kubedog. We were able to move the code for tracking K8s resources into a separate project that can be useful not only in werf but in other projects as well. There are other solutions for the same task (for more details, see our recent announcement), but it would hardly have been possible to "compete" with them (in terms of popularity) without a Go codebase.


Source: https://habr.com/ru/post/437044/