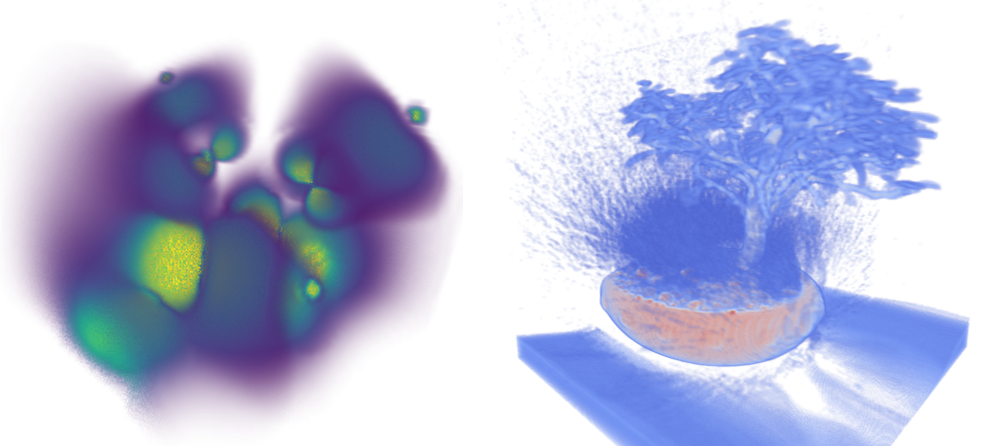

Volume rendering in WebGL

Figure 1. An example of volume renders performed by the WebGL renderer. Left: simulation of the spatial probability distribution of electrons in a high-potential protein molecule. Right: Bonsai tree tomogram. Both datasets are from the Open SciVis Datasets repository .

In scientific visualization, volumetric rendering is widely used for visualizing three-dimensional scalar fields. These scalar fields are often homogeneous grids of values, representing, for example, charge density around a molecule, an MRI scan or a CT scan, a stream of air enveloping an airplane, etc. Volumetric rendering is a conceptually simple method of turning such data into images: sampling the data along the rays from the eye, and assigning color and transparency to each sample, we can create useful and beautiful images of such scalar fields (see Figure 1). In the GPU renderer, such three-dimensional scalar fields are stored as 3D textures; However, 3D textures are not supported in WebGL1, so additional hacks are required to emulate them in volumetric rendering. Recently, WebGL2 has added support for 3D textures, allowing the browser to implement an elegant and fast volumetric renderer. In this post, we will discuss the mathematical foundations of volumetric rendering and discuss how to implement it on WebGL2, to create an interactive volumetric renderer, fully working in the browser! Before you begin, you can test the bulk renderer described in this post online .

1. Introduction

Figure 2: physical volume rendering, taking into account the absorption and emission of light by volume, as well as the effects of scattering.

To create a physically realistic image from volumetric data, we need to model how light rays are absorbed, emitted and scattered by the medium (Figure 2). Although modeling the propagation of light through the environment at this level creates beautiful and physically correct results, it is too expensive for interactive rendering, which is the purpose of the visualization software. In scientific visualizations, the ultimate goal is to allow scientists to interactively explore their data, as well as ask questions about their research task, and answer them. Since a completely physical scattering model will be too expensive for interactive rendering, visualization applications use a simplified emission-absorption model, either ignoring the costly effects of scattering, or in some way approximating them. In this article we will consider only the emission-absorption model.

In the emission-absorption model, we calculate the illumination effects that appear in Figure 2 only along the black beam, and ignore those that arise from the dotted gray rays. The rays passing through the volume and reaching the eyes accumulate the color emitted by the volume and gradually fade out until they are completely absorbed by the volume. If we trace the rays from the eye through the volume, we can calculate the light entering the eye by integrating the ray over the volume in order to accumulate the emission and absorption along the ray. Take a ray that hits the volume at a point s=0 and exiting the volume at the point s=L . We can calculate the light entering the eye using the following integral:

C(r)= intL0C(s) mu(s)e− ints0 mu(t)dtds

During the passage of the beam through the volume, we integrate the emitted color C(s) and absorption mu(s) at every point s along the beam. The emitted light at each point fades and returns to the eye the absorption volume up to this point, which is calculated by the member e− ints0 mu(t)dt .

In general, this integral cannot be calculated analytically, so you need to use numerical approximation. We perform the approximation of the integral, taking a lot of samples N along the beam on the interval s=[0,L] each of which is located at a distance Deltas from each other (Figure 3), and summing up all these samples. The attenuation term at each sampling point becomes the product of the rows accumulating absorption in the previous samples.

C(r)= sumNi=0C(i Deltas) mu(i Deltas) Deltas prodi−1j=0e− mu(j Deltas) Deltas

To further simplify this amount, we approximate the attenuation term ( e− mu(j deltas) deltas ) its next to taylor. Also for convenience, we introduce alpha alpha(i Deltas)= mu(i Deltas) Deltas . This gives us the front-to-back alpha compositing equation:

C(r)= sumNi=0C(i Deltas) alpha(i Deltas) prodi−1j=0(1− alpha(j Deltas))

Figure 3: Calculation of the emission-absorption rendering integral in volume.

The above equation reduces to a for loop, in which we step through the ray through volume and iteratively accumulate color and opacity. This cycle continues until either the beam leaves the volume or the accumulated color becomes opaque ( alpha=1 ). An iterative calculation of the above sum is performed using the familiar compositing equations from front to back:

hatCi= hatCi−1+(1− alphai−1) hatC(i Deltas)

alphai= alphai−1+(1− alphai−1) alpha(i Deltas)

These final equations contain the pre-multiplied opacity for proper mixing, hatC(i Deltas)=C(i Deltas) alpha(i Deltas) .

To render a volume image, simply trace the ray from the eye to each pixel, and then perform the above iteration for each ray that intersects the volume. Each processed beam (or pixel) is dependent, so if we want to render an image quickly, we need a way to parallelly process a large number of pixels. This is where the GPU comes in handy. By implementing the raymarching process in the fragment shader, we will be able to use the power of parallel computing of the GPU to implement a very fast 3D renderer!

Figure 4: Raymarching on a volume grid.

2. GPU implementation on WebGL2

In order for raymarching to run in a fragment shader, you need to force the GPU to execute a fragment shader for the pixels along which we want to trace the ray. However, the OpenGL pipeline works with geometric primitives (Figure 5), and does not have direct methods for executing a fragment shader in a specific area of the screen. To get around this problem, we can render some intermediate geometry to execute the fragment shader on the pixels that we need to render. Our approach to rendering the volume will be similar to the approach of the Shader Toy and the demoscene renderers , which render two full-screen triangles to perform a fragmentary shader, and then it performs the actual rendering work.

Figure 5: The OpenGL pipeline in WebGL consists of two stages of programmable shaders: a vertex shader, which is responsible for transforming input vertices into the space of truncated coordinates (clip space), and a fragmentary shader, which is responsible for shading triangle-covered pixels.

Although rendering two full-screen triangles in the manner of ShaderToy works fine, it will perform unnecessary fragment processing in the case where the volume does not cover the entire screen. This case is quite common: users move the camera away from the volume in order to look at a lot of data in general or to study the large characteristic parts. To limit the fragment processing only to the pixels affected by the volume, we can rasterize the bounding parallelogram of the volume grid, and then execute the raymarching stage in the fragment shader. In addition, we do not need to render the front and reverse faces of the parallelogram, because with a certain order of rendering triangles, the fragmentary shader can then be performed twice. Moreover, if we render only the front faces, we can run into problems when the user approaches the volume, because the front faces will be projected behind the camera, which means they will be cut off, that is, these pixels will not be rendered. To allow users to bring the camera closer to the volume, we will only render the reverse faces of the parallelogram. The resulting rendering pipeline is shown in Figure 6.

Figure 6: WebGL conveyor for raymarching volume. We rasterize the reverse faces of the bounding parallelogram of the volume so that the fragment shader is executed for the pixels related to this volume. Inside the fragment shader, for rendering, we step through the rays through the volume.

In this pipeline, the main part of the actual rendering is performed in a fragment shader; however, we can still use the vertex shader and equipment for fixed interpolation of functions to perform useful calculations. The vertex shader will convert the volume based on the user's camera position, calculate the direction of the beam and the position of the eye in the volume space, and then transfer them to the fragment shader. The direction of the ray, calculated at each vertex, is then interpolated by a triangle by the equipment of fixed interpolation of functions in the GPU, allowing us to calculate the direction of the rays for each fragment a little less expensive. However, when passing to the fragment shader, these directions may not be normalized, so we still have to normalize them.

We will render the limiting parallelogram as a unit cube [0, 1] and scale it to the size of the volume axes in order to support the volumes of the unequal volume. The position of the eye is converted into a single cube, and in this space the direction of the beam is calculated. Raymarching in a single cube space will allow us to simplify texture sampling operations during raymarching in a fragment shader. because they will already be in the space of texture coordinates [0, 1] of the three-dimensional volume.

The vertex shader used by us is shown above; rasterized reverse faces colored in the direction of the ray of sight are shown in Figure 7.

#version 300 es layout(location=0) in vec3 pos; uniform mat4 proj_view; uniform vec3 eye_pos; uniform vec3 volume_scale; out vec3 vray_dir; flat out vec3 transformed_eye; void main(void) { // Translate the cube to center it at the origin. vec3 volume_translation = vec3(0.5) - volume_scale * 0.5; gl_Position = proj_view * vec4(pos * volume_scale + volume_translation, 1); // Compute eye position and ray directions in the unit cube space transformed_eye = (eye_pos - volume_translation) / volume_scale; vray_dir = pos - transformed_eye; };

Figure 7: The reverse faces of the bounding parallelogram of the volume, colored in the direction of the beam.

Now that the fragment shader processes the pixels for which we need to render the volume, we can perform raymarching volume and calculate the color for each pixel. In addition to the direction of the beam and the position of the eye, computed in the vertex shader, to render the volume we need to transfer to the fragment shader and other input data. Of course, we first need a 3D texture sampler to sample the volume. However, volume is just a block of scalar values, and if we used them directly as color values ( C(s) ) and opacity ( alpha(s) ), then a rendered image in grayscale would not be very useful for the user. For example, it would be impossible to highlight interesting areas with different colors, add noise and make background areas transparent to hide them.

To give the user control over the color and opacity assigned to each value of the sample, the renderers of scientific visualizations use an additional color map, called the transfer function . The transfer function sets the color and opacity, which must be assigned to a specific value sampled from the volume. Although there are more complex transfer functions, usually simple color lookup tables are used as functions that can be represented as a one-dimensional texture of color and opacity (in RGBA format). To use the transfer function when performing raymarching volume, we can sample the transfer function texture based on a scalar value sampled from the volume texture. The returned color and opacity values are then used as C(s) and alpha(s) sample

The latest input data for the fragment shader is the size of the volume, which we use to calculate the value of the beam pitch ( Deltas ) to sample at least once each voxel along the beam. Since the traditional beam equation has the form r(t)= veco+t vecd , to match, we will change the terminology in the code, and we denote Deltas as textttdt . Similarly, the interval s=[0,L] along the beam, covered by volume, we denote as [ texttttmin, texttttmax] .

To perform raymarching volume in a fragment shader, we will do the following:

- Normalize the direction of the ray of visibility, obtained as input from the vertex shader;

- Intersect the line of sight with the volume boundaries to determine the interval [ texttttmin, texttttmax] to perform raymarching for the purpose of volume rendering;

- Calculate this step length textttdt so that each voxel is sampled at least once;

- Starting from the entry point to r( texttttmin) let's go through the volume through the ray until we reach the end point in r( texttttmax)

- At each point we sample the volume and use the transfer function to assign color and opacity;

- We will accumulate color and opacity along the beam using the compositing equation from front to back.

As an additional optimization, you can add a condition to prematurely exit the raymarching cycle to complete it when the accumulated color becomes almost opaque. When the color becomes almost opaque, any samples after it will have almost no effect on the pixel, because their color will be completely absorbed by the medium and will not reach the eye.

The full fragment shader for our volumetric renderer is shown below. Added comments that mark every step of the process.

Figure 8: Ready rendered bonsai visualization result from the same viewpoint as in Figure 7.

That's all!

The renderer described in this article will be able to create images similar to the one shown in Figure 8 and Figure 1. You can also test it online . For the sake of brevity, I omitted the Javascript code needed to prepare the WebGL context, load the textures of the volume and the transfer functions, configure the shaders and render the cube for rendering the volume; The full code for the renderer is available on Github for reference.

Source: https://habr.com/ru/post/437526/