opencv4arts: Draw my city, Vincent

OpenCV is a library with 20 years of continuous development behind it. That is an age when you start to look inward and search for a purpose. Are there projects built on it that have made someone's life better or someone happier? Can you build one yourself? Looking for answers, and wanting to discover OpenCV modules I had not used before, I decided to build applications that "make things beautiful": ones where the first reaction is "wow" and only afterwards "oh right, this is computer vision."

The honor of the first article goes to an experiment with transferring the styles of world-famous artists to photographs. You will learn what lies at the heart of the procedure and meet the relatively new OpenCV.js, the JavaScript version of the OpenCV library.

Style transfer

Forgive me, opponents of machine learning, but the main component of today's article is a deep convolutional network. Because it works. OpenCV cannot train neural networks, but it can run existing models. We will use pre-trained CycleGAN networks. The authors, to whom we are very grateful, offer free downloads of networks that convert images of apples into oranges, horses into zebras, satellite images into maps, winter photos into summer photos and much more. Moreover, the training procedure produces two generator models at once, one for each direction. That is, by training the winter-to-summer conversion you also get a model that paints winter landscapes from summer photos. An offer you can't refuse.

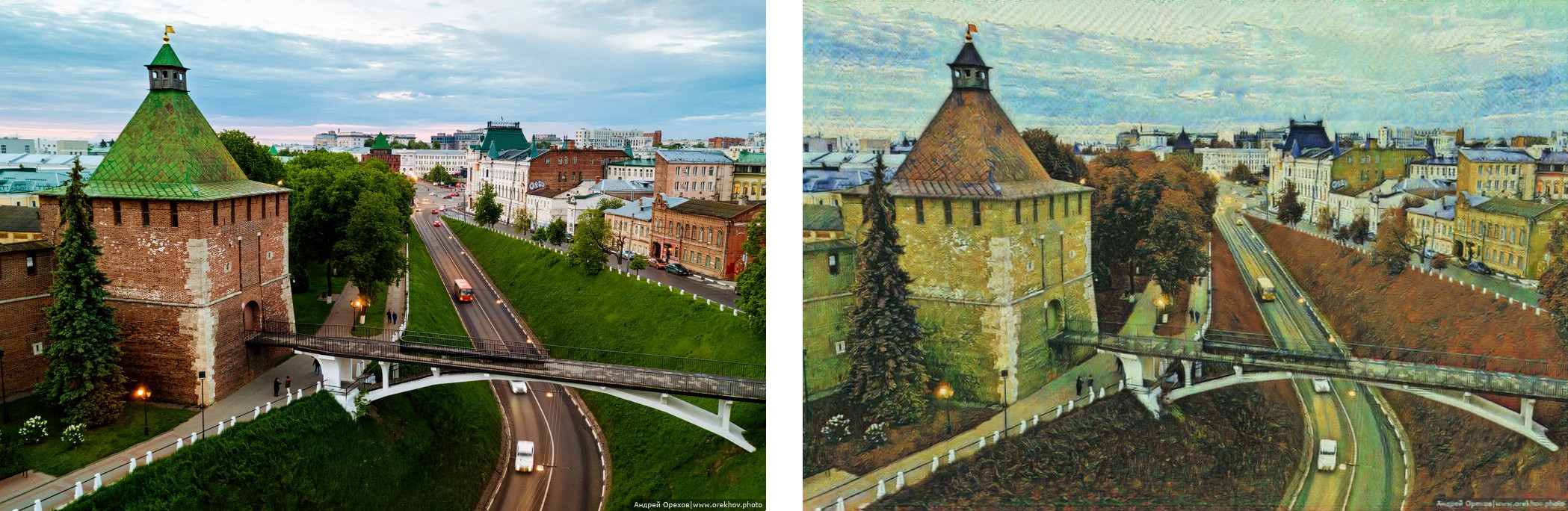

In our example we take the models that turn photos into paintings in the style of Vincent van Gogh, Claude Monet, Paul Cézanne, or the whole genre of Japanese Ukiyo-e prints. That gives us four separate networks. Note that each one was trained not on a single painting but on a whole collection, so the authors aimed to teach the network not to copy the style of one work but, as it were, to adopt the artist's manner of painting.

OpenCV.js

OpenCV is developed in C++, and for most of its functionality wrappers that call the native methods can be generated automatically. Python and Java wrappers are officially supported. There are also community solutions for Go and PHP. If you have used OpenCV from other languages, I would love to hear which ones, and thanks to whose efforts.

OpenCV.js is a project that came to life through the Google Summer of Code program in 2017. Incidentally, OpenCV's deep learning module itself was once created and significantly improved under the same program. Unlike the bindings for other languages, OpenCV.js is currently not a JavaScript wrapper around native methods but a full compilation of the library with Emscripten, which uses LLVM and Clang. Emscripten turns a C or C++ application or library into a .js file that can run in, say, a browser.

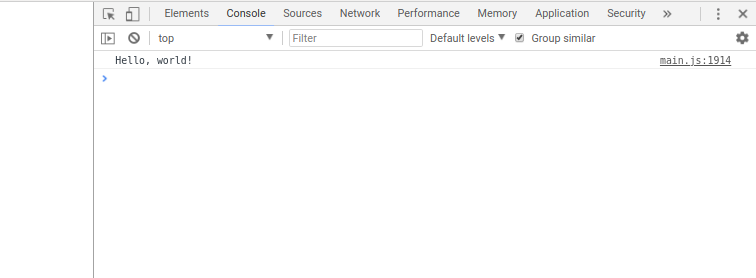

For example,

```cpp
#include <iostream>

int main(int argc, char** argv) {
    std::cout << "Hello, world!" << std::endl;
    return 0;
}
```

Compile to asm.js:

```shell
emcc main.cpp -s WASM=0 -o main.js
```

And load it in a page:

```html
<!DOCTYPE html>
<html>
  <head>
    <script src="main.js" type="text/javascript"></script>
  </head>
</html>
```

You can connect OpenCV.js to your project as follows (nightly build):

```html
<script src="https://docs.opencv.org/master/opencv.js" type="text/javascript"></script>
```

A helper library, written by hand in JavaScript, for reading images, working with the camera and so on may also be useful:

```html
<script src="https://docs.opencv.org/master/utils.js" type="text/javascript"></script>
```

Loading images

Images in OpenCV.js can be read from canvas or img elements. This means that loading the image files into those elements remains the user's job. For convenience, the helper function addFileInputHandler automatically loads an image into the given canvas element when the user picks a file from disk.

```javascript
var utils = new Utils('');
utils.addFileInputHandler('fileInput', 'canvasInput');
var img = cv.imread('canvasInput');
```

where

```html
<input type="file" id="fileInput" name="file" accept="image/*" />
<canvas id="canvasInput"></canvas>
```

The important point is that img will be a 4-channel RGBA image, unlike the usual behavior of cv::imread, which creates a BGR image. Keep this in mind, for example, when porting algorithms from other languages.

Drawing is simple: a single imshow call with the target canvas (it expects RGB or RGBA).

```javascript
cv.imshow("canvasOutput", img);
```

Algorithm

The whole image processing algorithm is a single run of a neural network. Let what happens inside remain magic; we only need to prepare the correct input and correctly interpret the prediction (the network's output).

The network in this example takes as input a four-dimensional tensor of float values in the interval [-1, 1]. Its dimensions, from the slowest to the fastest varying, are the image index, channels, height and width. This layout is called NCHW, and the tensor itself is called a blob (binary large object). The preprocessing task is to convert an OpenCV image, whose intensity values are interleaved and stored as unsigned char in the interval [0, 255], into an NCHW blob with values in the interval [-1, 1].

a piece of the Nizhny Novgorod Kremlin (as a person sees it)

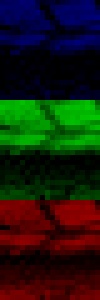

interleaved view (as stored by OpenCV)

planar view (what the network needs)
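To make the difference between the two layouts concrete, here is a minimal sketch of the preprocessing in plain JavaScript. It works on typed arrays rather than cv.Mat, and the function name rgbaToPlanarBlob is hypothetical, not part of OpenCV.js. It is only an illustration of the repacking and normalization described above, not how blobFromImage is implemented.

```javascript
// Hypothetical helper (not part of OpenCV.js): converts interleaved RGBA bytes
// into a planar BGR float blob in NCHW order (N = 1) with values in [-1, 1].
function rgbaToPlanarBlob(rgba, width, height) {
  const planeSize = width * height;
  const blob = new Float32Array(3 * planeSize); // three planes: B, G, R
  for (let i = 0; i < planeSize; i++) {
    const r = rgba[4 * i];
    const g = rgba[4 * i + 1];
    const b = rgba[4 * i + 2];
    // The alpha value at rgba[4 * i + 3] is dropped.
    blob[i] = (b - 127.5) / 127.5;                 // plane 0: blue
    blob[planeSize + i] = (g - 127.5) / 127.5;     // plane 1: green
    blob[2 * planeSize + i] = (r - 127.5) / 127.5; // plane 2: red
  }
  return blob;
}

// A 2x1 image: one pure red pixel followed by one white pixel.
const pixels = new Uint8Array([255, 0, 0, 255, 255, 255, 255, 255]);
const blob = rgbaToPlanarBlob(pixels, 2, 1);
console.log(Array.from(blob)); // [-1, 1, -1, 1, 1, 1]
```

In the output, the first two values are the blue plane, the next two the green plane and the last two the red plane, so neighbouring values now belong to neighbouring pixels of one channel rather than to the channels of one pixel.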

As post-processing, the inverse transformations are needed: the network returns an NCHW blob with values in the interval [-1, 1], which must be repacked into an image, rescaled to [0, 255] and converted to unsigned char.

Thus, taking into account how OpenCV.js reads and writes images, we get the following steps:
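The inverse step can be sketched the same way in plain JavaScript. Again this is an illustration on typed arrays under my own assumptions: the name planarBlobToRgba is hypothetical, the blob is assumed to hold a single image with B, G, R planes, and alpha is simply set to opaque.

```javascript
// Hypothetical helper (not part of OpenCV.js): converts a planar BGR float blob
// (NCHW, N = 1, values in [-1, 1]) into interleaved RGBA bytes in [0, 255].
function planarBlobToRgba(blob, width, height) {
  const planeSize = width * height;
  // Uint8ClampedArray rounds and clamps to [0, 255] on assignment.
  const rgba = new Uint8ClampedArray(4 * planeSize);
  const toByte = (v) => v * 127.5 + 127.5; // maps [-1, 1] to [0, 255]
  for (let i = 0; i < planeSize; i++) {
    rgba[4 * i] = toByte(blob[2 * planeSize + i]); // R from plane 2
    rgba[4 * i + 1] = toByte(blob[planeSize + i]); // G from plane 1
    rgba[4 * i + 2] = toByte(blob[i]);             // B from plane 0
    rgba[4 * i + 3] = 255;                         // opaque alpha
  }
  return rgba;
}

// Planes for a 2x1 image; the last value is slightly out of range on purpose.
const netOutput = new Float32Array([-1, 1, -1, 1, 1, 1.2]);
const image = planarBlobToRgba(netOutput, 2, 1);
console.log(Array.from(image)); // [255, 0, 0, 255, 255, 255, 255, 255]
```

A network's output is not guaranteed to stay strictly inside [-1, 1], which is why the sketch clamps: the out-of-range 1.2 ends up as 255 instead of wrapping around.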

```
imread -> RGBA -> BGR [0, 255] -> NCHW [-1, 1] -> [network]
[network] -> NCHW [-1, 1] -> RGB [0, 255] -> imshow
```

Looking at this pipeline, one may ask why the network cannot work directly on interleaved RGBA and return interleaved RGB. Why do we need the extra pixel permutation and normalization? The answer is that a neural network is a mathematical object that transforms input data of a specific distribution. Ours was trained on data in this form, so to get the expected results we must reproduce the preprocessing the authors used during training.

Implementation

The neural network we will run is stored as a binary file, which must first be loaded into the local file system.

```javascript
var net;
var url = 'style_vangogh.t7';
utils.createFileFromUrl('style_vangogh.t7', url, () => {
    net = cv.readNet('style_vangogh.t7');
});
```

By the way, url is the full link to the file. Here we simply load a file that sits next to the current HTML page, but you can replace it with the original source (the download may then take longer).

Reading the image from the canvas and converting it from RGBA to BGR:

```javascript
var imgRGBA = cv.imread('canvasInput');
var imgBGR = new cv.Mat(imgRGBA.rows, imgRGBA.cols, cv.CV_8UC3);
cv.cvtColor(imgRGBA, imgBGR, cv.COLOR_RGBA2BGR);
```

Creating a 4D blob: the blobFromImage function converts the data to float and applies the normalization constants. Then we run the network.

```javascript
var blob = cv.blobFromImage(imgBGR, 1.0 / 127.5,                        // scale factor
                            {width: imgBGR.cols, height: imgBGR.rows},  // size
                            [127.5, 127.5, 127.5, 0]);                  // mean subtraction
net.setInput(blob);
var out = net.forward();
```

The result is converted back into an image of the required type with values in the interval [0, 255]:

```javascript
// Rescale values from the interval [-1, 1] to [0, 255]
var outNorm = new cv.Mat();
out.convertTo(outNorm, cv.CV_8U, 127.5, 127.5);

// Build an interleaved image from the planar blob
var outHeight = out.matSize[2];
var outWidth = out.matSize[3];
var planeSize = outHeight * outWidth;

var data = outNorm.data;
var b = cv.matFromArray(outHeight, outWidth, cv.CV_8UC1, data.slice(0, planeSize));
var g = cv.matFromArray(outHeight, outWidth, cv.CV_8UC1, data.slice(planeSize, 2 * planeSize));
var r = cv.matFromArray(outHeight, outWidth, cv.CV_8UC1, data.slice(2 * planeSize, 3 * planeSize));

var vec = new cv.MatVector();
vec.push_back(r);
vec.push_back(g);
vec.push_back(b);
var rgb = new cv.Mat();
cv.merge(vec, rgb);

// Draw the result
cv.imshow("canvasOutput", rgb);
```

At the moment, OpenCV.js is built in a semi-automatic mode, in the sense that not all modules and their methods get corresponding JavaScript signatures. For example, for the dnn module the list of exported functions is defined as:

```python
dnn = {'dnn_Net': ['setInput', 'forward'],
       '': ['readNetFromCaffe', 'readNetFromTensorflow', 'readNetFromTorch', 'readNetFromDarknet',
            'readNetFromONNX', 'readNet', 'blobFromImage']}
```

The last transformation, which splits the blob into three channels and then merges them into an image, can in fact be done with a single method, imagesFromBlob, which has not yet been added to the list above. Perhaps this will be your first contribution to OpenCV? ;)

Conclusion

As a demonstration, I prepared a GitHub page where you can try the resulting code: https://dkurtaev.imtqy.com/opencv4arts (Caution! The page downloads a network of about 22 MB, so mind your traffic. It is also recommended to reload the page for each new image; otherwise the quality of subsequent runs is, for some reason, badly distorted). Be prepared for processing to take a long time, or try changing the size of the output image with the slider.

While working on the article and choosing the image that would become its face, I happened upon a friend's photo showing the Kremlin of our city, and everything came together: I came up with the title, and only then did I feel that this was how it should be. I suggest you try the application on photos of your favorite place and perhaps share something interesting about it in the comments or in a private message.

From me, a fun fact. Most residents of Nizhny Novgorod and the Nizhny Novgorod region use a word that elsewhere means "to tidy up" in the sense of "to fit" (to find room for oneself). For example, a question that others would hear as "Can we tidy up your car?" actually means "Is there enough room for us in your car?". When students from other regions come to us for summer internships, we like to tell them about this, and many are genuinely surprised.

Useful links

- OpenCV.js documentation

- CycleGAN Models

- Other style transfer models (different normalization)

Source: https://habr.com/ru/post/437600/