How we solved the memory problem in PostgreSQL without adding a byte

A short story about the "heavy" request and elegant solution to the problem

Recently, we were alerted at night by alerts: there is not enough disk space. We quickly figured out what the problem is in ETL problems.

The ETL task was performed in a table where binary records and dumps are stored. Every night this task was supposed to remove duplicate dumps and free up space.

To search for duplicate dumps we used this query:

id, MIN(id) OVER (PARTITION BY blob ORDER BY id) FROM dumps The request combines the same dumps by BLOB-field. Using the window function, we get the ID of the first occurrence of each dump. Then this request deletes all duplicate dumps.

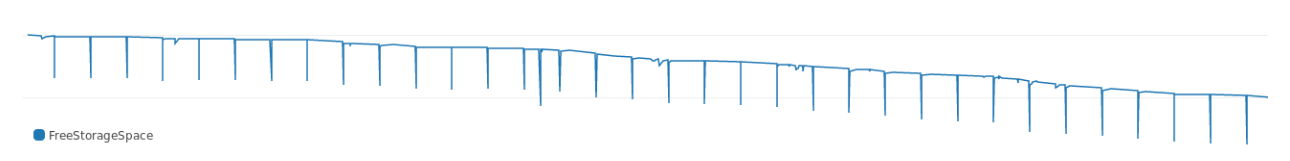

The request was executed for some time, and, as can be seen from the logs, I ate a lot of memory. The graph shows how he scored free disk space every night:

Over time, the request required more and more memory, the dips deepened. And, looking at the execution plan, we immediately saw where everything goes:

Buffers: shared hit=3916, temp read=3807 written=3816 -> Sort (cost=69547.50..70790.83 rows=497332 width=36) (actual time=107.607..127.485 rows=39160) Sort Key: blob, id Sort Method: external merge Disk: 30456kB Buffers: shared hit=3916, temp read=3807 written=3816 -> Seq Scan on dumps (cost=0..8889.32 rows=497332 width=36) (actual time=0.022..8.747 rows=39160) Buffers: shared hit=3916 Execution time: 159.960 ms Sorting takes a lot of memory. In terms of execution from the test data set, sorting requires approximately 30 MB of memory.

Why is that?

PostgreSQL allocates memory for hashing and sorting. The amount of memory is controlled by the work_mem parameter. The default work_mem size is 4 MB. If more than 4 MB is needed for hashing or sorting, PostgreSQL temporarily uses disk space.

Our request obviously consumes more than 4 MB, so the database uses so much memory. We decided: we will not rush - and did not increase the parameter and expand the storage. It is better to look for another way to trim the memory for sorting .

Economical sorting

"How much sorting will eat - depends on the size of the data set and the sort key. You cannot reduce the data set, but the key size is possible .

For the starting point we take the average size of the sort key:

avg ---------- 780 Each key weighs 780. To reduce the binary key, it can be hashed. In PostgreSQL, there is md5 for this (yes, not security, but for our purpose it will do). Let's see how much a BLOB hashed with md5 weighs:

avg ----------- 36 The size of the key hashed through md5 is 36 bytes. The hashed key weighs only 4% of the original version .

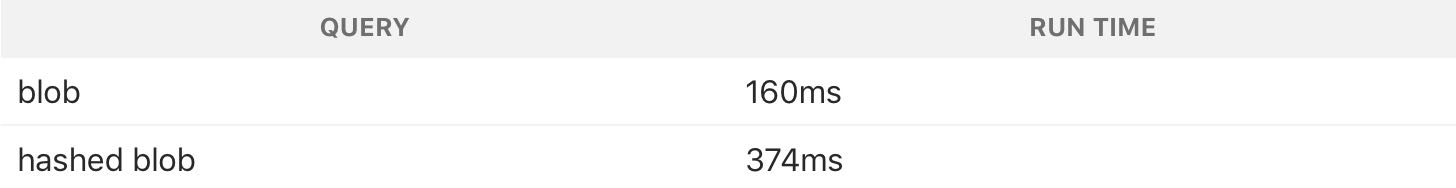

Next, we run the original query with the hashed key:

id, MIN(id) OVER ( PARTITION BY md5(array_to_string(blob, '') ) ORDER BY id) FROM dumps; And the execution plan:

Buffers: shared hit=3916 -> Sort (cost=7490.74..7588.64 rows=39160 width=36) (actual time=349.383..353.045 rows=39160) Sort Key: (md5(array_to_string(blob, ''::text))), id Sort Method: quicksort Memory: 4005kB Buffers: shared hit=3916 -> Seq Scan on dumps (cost=0..4503.40 rows=39160 width=36) (actual time=0.055..292.070 rows=39160) Buffers: shared hit=3916 Execution time: 374.125 ms With a hashed key, the request consumes only 4 extra megabytes, that is, a little more than 10% of the previous 30 MB. So the size of the sort key greatly influences how much memory the sort eats up .

Further more

In this example, we have hashed the BLOB using md5 . Hashes created with MD5 should weigh 16 bytes. And we got more:

md5_size ------------- 32 Our hash was exactly twice as large, because md5 gives the hash in the form of hexadecimal text.

In PostgreSQL, you can use MD5 for hashing with the pgcrypto extension. pgcrypto creates MD5 of type bytea (in binary) :

select pg_column_size( digest('foo', 'md5') ) as crypto_md5_size crypto_md5_size --------------- 20 The hash is still 4 bytes more than it should be. Just the type bytea uses these 4 bytes to store the length of the value, but we will not leave it that way.

It turns out that PostgreSQL's uuid type weighs exactly 16 bytes and supports any arbitrary value, so we get rid of the remaining four bytes:

uuid_size --------------- 16 That's all. 32 bytes with md5 converted to 16 with uuid .

I checked the effects of the change by taking a larger set of data. The data itself can not be shown, but I will share the results:

As the table shows, the original problem query weighed 300 MB (and woke us in the middle of the night). With the key uuid sorting it took just 7 MB.

Considerations after

A query with a hashed sort key to memory consumes less, but it works much slower:

Hashing uses more CPU, so a query with hash is slower. But we tried to solve the problem with disk space, besides the task is performed at night, so time is not a problem. We compromised to save memory.

This is a great example of the fact that it is not always necessary to try to speed up database queries . It is better to use what is balanced and to squeeze the maximum out of a minimum of resources.

Source: https://habr.com/ru/post/437730/