Record video with automatic free-pause free software with bicycles

Russian-speaking developers always have something to tell: to share some of their unique experiences and opinions. But in the format of a video blog, due to the high complexity of the recording, only a few now do it.

Under the cut talked about his difficult way to record and edit video using free software, Ruby scripting and improvised tools.

Theory

I began by studying the theory of recording video blogs on English-language YouTube videos. And from the Russian-language materials - this course turned out to be quite useful (in particular, the module about the video blog and the first video about building a frame from the module about the report). I also briefly familiarized myself with the popular features of proprietary video editors in order to more consciously approach the choice of a free editor.

He did not risk investing in the light: there is not enough time to study it and search for the best option, and a superficial study of cheap options indicates a potential rake such as flickering and poor color rendering. With daylight, I did not have great difficulties, it suffices just for short videos.

Video editor

Existing free video editing tools contain a number of known problems: from bad UI solutions and freezes that turn editing to infinity, to memory leaks, crashes and unexpected artifacts that only appear after the final rendering.

There are a lot of problems and it took time to select a video editor and study its bugs, just to learn how to cope with basic things. Eventually he stopped at Pitivi , simply because he spent so much time on searching and experimenting.

Sound from Flatpak

A supported installation method for Pitivi requires Flatpak. For a while I avoided it because I do not have systemd and PulseAudio in the system.

It turns out systemd is not required for a long time. But PulseAudio - it was necessary to install and configure it was easier to modify Flatpak . But it would be better to put PulseAudio, it's just a bit tedious and unclear whether to expect from him problems with recording sound on existing hardware or not.

Install Pitivi, remove PulseAudio configs, run:

$ sudo flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo $ sudo flatpak install flathub org.pitivi.Pitivi $ sudo find {/var/lib,~/.local/share}/flatpak/runtime -type f -name '*pulseaudio*.conf' -delete $ flatpak run --device=alsa --branch=stable --arch=x86_64 --command=pitivi org.pitivi.Pitivi No sound. Let's try to run something simpler, for example aplay :

$ sudo find /var/lib/flatpak/app/org.pitivi.Pitivi/x86_64 -type d -path '*/files/bin' -exec cp `which aplay` {} \; $ flatpak run --device=alsa --branch=stable --arch=x86_64 --command=aplay org.pitivi.Pitivi /dev/urandom ALSA lib dlmisc.c:162:(snd_dlsym_verify) unable to verify version for symbol _snd_pcm_empty_open ALSA lib dlmisc.c:283:(snd1_dlobj_cache_get) symbol _snd_pcm_empty_open is not defined inside [builtin] aplay: main:828: audio open error: No such device or address Probably the alsa-lib included in Flatpak was compiled with --with-versioned . A quick fix is to replace libasound.so system one:

$ sudo find /var/lib/flatpak -type f -name libasound.so.2.0.0 -exec cp /usr/lib64/libasound.so.2.0.0 {} \; $ find ~/.local/share/flatpak -type f -name libasound.so.2.0.0 -exec cp /usr/lib64/libasound.so.2.0.0 {} \; # если устанавливали от прав пользователя что-то For me this was not enough:

$ flatpak run --device=alsa --branch=stable --arch=x86_64 --command=aplay org.pitivi.Pitivi /dev/urandom ALSA lib /var/tmp/portage/media-libs/alsa-lib-1.1.6-r1/work/alsa-lib-1.1.6/src/pcm/pcm_direct.c:1943:(snd1_pcm_direct_parse_open_conf) The field ipc_gid must be a valid group (create group audio) aplay: main:828: audio open error: Invalid argument We also need the ALSA config:

$ sudo find /var/lib/flatpak -type d -name etc -exec cp /etc/asound.conf {} \; $ find ~/.local/share/flatpak -type d -name etc -exec cp /etc/asound.conf {} \; # если устанавливали от прав пользователя что-то $ flatpak run --device=alsa --branch=stable --arch=x86_64 --command=aplay org.pitivi.Pitivi /dev/urandom Finally, you can use Pitivi.

- container format: MP4

- video

- codec x264enc

- advanced

- encoding pass / type: constant quantizer

- constant quantizer: 18

- bitrate: 16384 kbit / s

- speed quality preset: ultrafast

- psychovisual tuning preset: film

- audio

- libav ALAC

- at my own risk and fear I use “Never render from proxy files”

- everything else is default

Other effects

I do some animation effects for text using a screencast of full-screen pages that are laid out using reveal.js and animate.css. In reveal.js for some slides I add a transition sound:

<section style="font-size: 5em"> <audio data-autoplay src="/path/to/sound.wav"></audio> #1 </section> It turned out to be important to record a screencast with 60 FPS, if the text is very large. I do the screencast like this:

#!/bin/sh SOUND_INPUT=shared_input_loopback CHANNELS=2 SOUND_RATE=48000 FRAMERATE=60 DRAW_MOUSE=0 VIDEO_SIZE=$(xdpyinfo | awk '/dimensions:/ { print $2; exit }') OUTPUT="${HOME}/video/screen/$(date --rfc-3339=seconds).mp4" ffmpeg \ -thread_queue_size 512 \ -video_size "${VIDEO_SIZE}" \ -framerate "${FRAMERATE}" \ -f x11grab \ -draw_mouse "${DRAW_MOUSE}" \ -i :0.0+0,0 \ -thread_queue_size 512 \ -f alsa \ -ac "${CHANNELS}" \ -i "${SOUND_INPUT}" \ -ar "${SOUND_RATE}" \ -vcodec libx264 -preset ultrafast -crf 18 \ -acodec alac \ -f ipod \ "${OUTPUT}" In my case, shared_input_loopback is a device from the asound.conf config .

Also, this add- on ffmpeg for transitions between clips has proven to be useful.

Video recording

The handset had a Meizu MX4 phone, on which I decided to use the front camera and record using Open Camera. It took some time to train yourself to look at the camera and control its position in space, without making typical mistakes, like cutting off the head. At the same time, speak quite clearly, loudly, gesticulating and generating at least some kind of facial expression. But that was only the beginning.

What prompted me to do automatic video cutting, and even at the recording stage?

- Pitivi brakes and bugs when editing, especially when using the Ripple Move / Edit tool, leading to the need to periodically restart Pitivi.

- For me, the process of manual video cutting is one of the most boring things. It is clear that it is not possible to fully automate this (at least without a scenario in which the pauses necessary for the realization of what was said are not indicated), but at least this process can be optimized.

Here are the requirements for the future bike I set myself:

- Record video using an Android phone, and sound using a laptop.

- Focus control camera.

- Ability to stop recording to save or delete the last recorded fragment.

- Downloading video from a phone via USB, with retries and resume , without blocking the ability to record the next piece.

- Sync audio.

- Determining the presence of voice and throwing pauses.

- The ability to quickly play the last recorded video fragments, with already discarded pauses.

Why so much control over the devices during the recording phase? Why not just start recording for several hours in a row, and then edit it? There are many reasons:

- Banal lack of disk space.

- The tendency of the phone to overheat and quickly discharge during a long recording.

- Malfunction of the touch screen due to the fact that the phone has been in the water. And somehow you need to control the focus. And the next press would create an unnecessary vibration of the device.

- Problems with transferring large files due to poor USB port power on my laptop. In theory, this can be solved using a USB hub with additional power. Using a network is too slow.

- The desire to quickly review the last recorded fragments to make sure that there are no errors and to rewrite them promptly until the planet has turned in the wrong place in front of the sun.

- The desire to throw out obviously bad doubles as early as possible in order not to waste time and disk space on them in the future.

- The need to synchronize long audio recorded by phone and laptop. There may be out of sync with the video due to the fact that frames of audio streams are thrown away both when recording from a laptop and when recording from a phone (which can certainly be solved somehow, but you don’t want to risk and waste time on experiments). It is easier to synchronize small fragments separately, then the possible out of sync will not be noticeable.

- The need to handle the situation when Open Camera restarts recording due to the 4 GiB video size reaching. Probably would have to modify the Open Camera. If this limitation on 4 GiB cannot be removed or increased, it would be necessary to throw an event at the laptop so that he would note that the recording was restarted at this point.

It is easier to record in small fragments and make a primitive automation of all that is possible. As the main language for the development of the bike chose Ruby. Actually now I would probably choose Python, but at that time I was just learning Ruby, and I’m running languages that are new to me in such weird experiments.

Automatic video slicing

Information on the network on this topic is not very much. I remembered late about Stanford and Adobe research (which is not scary, I still need a less sophisticated solution).

The cutting takes place in 2 stages: at the recording stage - rough, at the rendering stage - more accurate, with the ability to manually correct too much trimmed fragments. Rough implemented using VAD from WebRTC. More accurate - using Google Speech (if more specifically - using a modification of the autosub project, to generate subtitles for video). I am sure that there will be more successful solutions, it just turned out to be the best of what we managed to do quickly.

If you want to develop something similar using ffmpeg - stick to the principle of not trying to do too much in one ffmpeg call. Take intermediate files and monitor each step, so you don’t have to search for strange unguided bugs, such as improper cuts or unused effects.

I run the resulting disgrace something like this:

$ bin/vlog-recorder \ --project /path/to/project \ --debug true \ --sound-settings ' --device=usb_card --format=dat' # аргументы к arecord r - (RE)START recording s - STOP and SAVE current clip S - STOP and SAVE current clip, don't use auto trimming d - STOP and DELETE current clip p - PLAY last saved clip f - FOCUS camera on center h - show HELP q / Ctrl+C - QUIT [ stopped ] [ battery: 100% / 36°C ] The arguments to the arecord I need to explicitly specify the device in order to avoid periodic glitches, which are most likely due to the ALSA-vskogo dsnoop plugin. You can also open the log to control the process of downloading files from your phone: tail -f /path/to/project/log.txt .

Quickly srenerit in one video for preview, you can like this:

$ bin/vlog-render \ --project /path/to/project \ --language ru \ --video-filters 'hqdn3d,hflip,curves=psfile=/path/to/curves.acv,vignette' \ --speed 1.3 \ --fps 60 \ --preview true The argument --video-filters are filters passed to ffmpeg . The video will automatically open in the mpv player.

You can also swap or throw out the remaining unnecessary duplicates by editing the appeared file / /path/to/project/render.conf , which can be detected through the recognized voice. The idea, by the way, is not new . You can also speed up individual fragments and edit unsuccessful video cuts, if there are any. Next time, vlog-render re-read render.conf and apply the changes.

To prepare fragments for a video editor, you need to specify --preview false . In addition to the fragments that will lie in the output , it still output.mp4 them into one file output.mp4 , because initially I was not sure:

- will i use small clips in Pitivi

- or upload one long video for further cutting (so that you can apply a number of effects to the “group” of clips).

I mainly use the first option. The second was useful in one video with a bad light: there I used only a piece of output.mp4 . For the second option, the vlog-play-segments script can also be useful: with it, you can quickly see all the pauses between clips in descending order of duration. This will help more accurately render.conf and save time later editing this long piece of video in Pitivi.

The resulting small clips can be downloaded at once to the timeline in Pitivi: select all the imported clips and drag them using drag-n-drop.

Phone mount

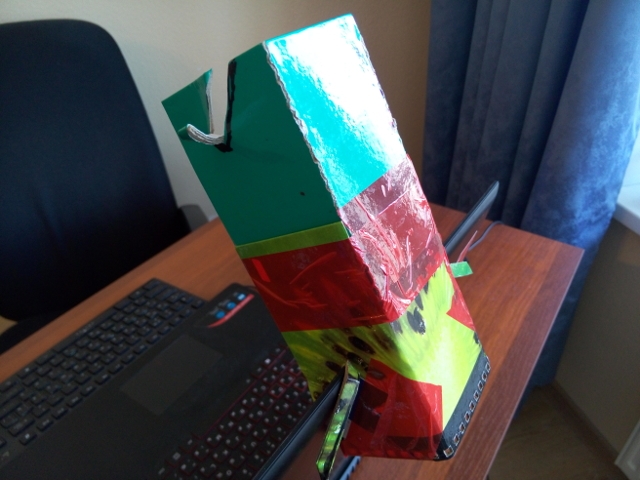

I didn’t want to search for a suitable phone stand, and my hands were already itching to write down anything. We take the piece of cardboard that came to hand and cut out the phone mount to fit our needs:

The stand is mounted on the laptop display to minimize the distance between the script and the camera.

Sound recording

Acceptable sound is very critical . At hand was the microphone Boya BY-M1. Although it is advertised as an omnidirectional microphone, a good sound in practice is obtained only when you use it as unidirectional.

The microphone stand is even simpler to do: take the bottle from the pomegranate juice that came to hand, roll an adhesive tape and assemble this designer together:

You can also put a towel under this design to suppress part of the vibrations from the table and at the same time adjust the height.

Sound card

In my case, this is ASUS Xonar U3. It turned out, however, that it is not compatible with such a microphone: the microphone has a CTIA plug designed for telephones. The problem was solved by an adapter to TRS plugs for a microphone and headphones. And it was not easy to find it: manufacturers of such adapters rarely write details. In my case, some Cablexpert CCA-418W helped.

Another problem with this card is in DC offset in the right channel when recording. That does not interfere, because I'm still in mono. And for software that does not allow to set up mono, a redirection of a good channel to a bad one was done using ALSA.

Also this card is afraid of overheating. You need to keep it away from the cooler, otherwise it will slow down and record sound in jerks.

Sound processing

I edit the sound in the headphones (in my case it is the Pioneer SE-M390), at a volume higher than the one on which I usually listen to music. Algorithm like this:

- With the help of Pitivi, I render a separate sound (using the same ALAC and MP4). I often make several separate tracks, selecting specific layers in Pitivi and temporarily removing unnecessary ones.

- If the resulting files are immediately loaded into Audacity, we will lose stretching / compression of the audio stream, which then may lead to out-of-sync video and audio. What is not obvious, this does not happen with all videos. To prevent this from happening, simply apply these stretch / compressions:

ffmpeg -async 1 -i input.mp4 output.flac - Load all tracks into Audacity. Add background music if needed.

- For all tracks, set the desired volume using Gain.

- We apply the effects of Noise Reduction (double in my case), Compressor and Equalization according to the tips from this video .

- Align and increase the volume at the track with voice. One of the classic methods is Normalize, Amplify, Limiter and again Normalize, but I have not yet managed to get the desired sound quality with this approach.

I temporarily do this: first, I do Gain for the whole track so that the loudest part sounds without overloads, and then manually apply Amplify for individual fragments.Update : another powerful way is RMS Normalize, Limiter and Normal Normalize. RMS Normalize and Limiter settings can be taken from here . Still, this method was not useful to me, because I still decided to switch to another microphone (Zoom H1n) with a built-in Limiter, which suits me (so, with the new microphone, I most likely have to do only normal Normalize, instead of all these things). - A microphone sometimes records sound with some defects that look like clicks. They can be removed using the Spectral edit multi tool effect. Most often, it has to be applied several times in succession for a selected area, using Ctrl + R. Update : thanks to a new microphone, I found out that these defects are related to something external, most likely it is a combination of noise in the mouth and other extraneous sounds.

- We export from Audacity to FLAC and merge everything into one file:

ffmpeg -i sound.flac -an -i video.mp4 -c copy output.mkv - At least I checked the first video at different volumes and different devices.

Result

Taking the opportunity to relax the rules, I invite you to visit the resulting YouTube channel , where I share insights on effective learning programming and related disciplines.

Good luck in developing programs and creating video blogs!

Update : translated this article for your English-language blog.

Source: https://habr.com/ru/post/437918/