Transition to 3D: how chip architecture and write algorithms affect SSD lifespan

Although 14 years have passed since the first SATA SSD appeared, many consumers still regard solid-state drives with a fair amount of skepticism. The main reason for the mistrust is the limited service life of flash memory: the semiconductor structure of the chips gradually degrades, so sooner or later the device loses its ability to record and store information. Detailed technical specifications often only make matters worse: it is hard for a customer to tell whether the 500 TB TBW rating listed for the 2 TB Western Digital Blue 3D NAND SATA SSD is a lot or a little. How long will such a drive last under real working conditions, and can it be trusted with your most valuable files? Let's sort this out together and talk about how reliable modern flash memory is.
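To get a feel for what a TBW rating means, it helps to convert it into years of use. The sketch below is a rough estimate only: the 500 TB rating and the 2 TB capacity come from the text, while the daily write volume (50 GB) and the five-year period used for the drive-writes-per-day figure are assumptions chosen purely for illustration.

```python
def years_to_reach_tbw(tbw_tb, host_writes_gb_per_day):
    """Years of use before the rated TBW is reached at a constant daily write volume."""
    days = tbw_tb * 1000 / host_writes_gb_per_day  # decimal units: 1 TB = 1000 GB
    return days / 365


def drive_writes_per_day(tbw_tb, capacity_tb, period_years):
    """How many times per day the full capacity could be rewritten over the period."""
    return tbw_tb / (capacity_tb * period_years * 365)


# 500 TB TBW from the text; 50 GB/day is an assumed, fairly heavy desktop workload.
print(round(years_to_reach_tbw(500, 50), 1))        # 27.4
# 2 TB capacity from the text; the 5-year period is an assumption.
print(round(drive_writes_per_day(500, 2, 5), 3))    # 0.137
```

Under these assumptions the rating would be reached only after several decades, which already hints at the answer to the question above.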

Strictly speaking, the answer to the “Ultimate Question of Life, the Universe, and Everything” was received in December 2014, when the team at the online publication TechReport completed its tests of consumer SSDs, which had lasted a year in total. Using products from HyperX, Corsair, Kingston and Samsung as examples, they convincingly showed that the real endurance of an SSD exceeds 1 petabyte of writes. Such volumes are practically unreachable not only for an ordinary user but even for professional content creators: the drive becomes obsolete long before its endurance is exhausted.

However, there is one significant nuance: four years ago, drives used MLC NAND chips, which store 2 bits of information per cell and were manufactured on a 25-nanometer process. At the time, this was a good compromise between ultra-reliable SLC (single-level cell) and the denser, cheaper TLC (triple-level cell): chips with two-bit cells offered acceptable storage density while withstanding up to 5,000 write/erase cycles (about 3,000 on average). The same cannot be said of their closest relatives: for all its advantages, namely high capacity and low cost, TLC proved far less durable, barely reaching 1,500 program/erase cycles at best, while most planar chips hardly withstand a thousand.

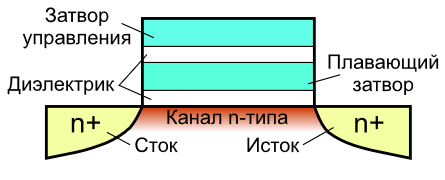

The main culprit was the transition to the 15-nanometer process used to manufacture the chips. To understand why, it is enough to recall how NAND memory works. Bits are encoded by changing the charge on a floating gate: under a strong electric field, electrons tunnel through a dielectric layer.

Floating gate transistor circuit

From the point of view of physics, we are dealing with nothing more than reversible avalanche breakdown. Electrons accelerated by the electric field gain enough kinetic energy for impact ionization of the dielectric's molecules. Each ionization produces a pair of oppositely charged carriers, which are accelerated by the field in turn, and the process repeats, with the number of charge carriers growing exponentially (hence the name). It is easy to guess that such processes gradually wear out the dielectric layers. As a result, the probability of charge leaking into neighboring cells increases, which in turn leads to data corruption or even complete data loss. The transition to a finer process only makes things worse: a thinner dielectric means the cells fail much sooner.

However, if this were the only problem, ordinary consumers, and even corporate users, would simply not notice the difference between MLC and TLC, and SSD specifications would show far more impressive figures. In reality the picture is quite different, and the reason lies in the architecture of flash chips and the way they operate: individual cells are grouped into pages, and pages into blocks, while data can be written only to blank pages and erased only a whole block at a time.

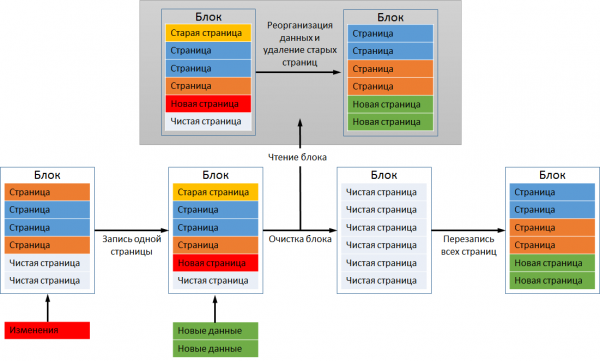

What does this mean in practice? Suppose we have a partially filled block and want to write new data to it. If the data is smaller than the remaining free space, it is written immediately, without any additional manipulation. If there is not enough space, complex multi-stage algorithms come into play. Consider the situation in the diagram below.

This is how data is written to flash memory.

The new data we want to write occupies two pages, but only one page in the block is actually free: although the user deleted the old page (highlighted in yellow) earlier, the information recorded in it has not physically disappeared. To make room for the new data, the controller starts a procedure known as “garbage collection”, which removes the unneeded data and redistributes what remains. All pages except the unneeded one are copied to a second, free block, and the first block is erased completely. The valid pages are then moved back to the first block and erased from the second, and only after that does the new data take its rightful place.
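The procedure just described can be sketched as a small counting model. This is a deliberately simplified illustration, not how any real controller is implemented: the page states, the function name `write_with_gc` and the exact split of a six-page block into four live pages, one deleted page and one free page are assumptions made for the example.

```python
FREE, VALID, STALE = "free", "valid", "stale"


def write_with_gc(block, new_pages):
    """Write new_pages pages into block.

    Returns (resulting_block, page_programs, block_erases).
    """
    size = len(block)
    valid = block.count(VALID)
    free = block.count(FREE)

    if new_pages <= free:
        # Enough blank pages: program them directly, nothing is erased.
        result = list(block)
        remaining = new_pages
        for i, state in enumerate(result):
            if state == FREE and remaining:
                result[i] = VALID
                remaining -= 1
        return result, new_pages, 0

    if valid + new_pages > size:
        raise ValueError("data does not fit even after garbage collection")

    programs = valid        # copy the live pages to a spare block
    erases = 1              # erase the original block
    programs += valid       # copy the live pages back
    erases += 1             # erase the spare block
    programs += new_pages   # finally program the new data
    result = [VALID] * (valid + new_pages) + [FREE] * (size - valid - new_pages)
    return result, programs, erases


# Assumed layout: six pages, four live, one deleted by the user, one free.
block = [VALID] * 4 + [STALE, FREE]
result, programs, erases = write_with_gc(block, new_pages=2)
print(programs, erases)   # 10 2
print(programs / 2)       # 5.0 physical page writes per page of new data
```

In this model, two pages of user data cost ten page programs and two block erases, which is exactly the kind of overhead that wears the cells out faster than the user's own writes would.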

In the example above, writing two pages required completely rewriting two blocks of six pages each. In reality, garbage collection is far more complicated, and the number of rewrite cycles is correspondingly higher. The true picture can be assessed only by knowing the write amplification factor, which shows how many times the actual load on the flash memory exceeds the nominal one. This factor can equal one only when data is written to a completely clean, freshly formatted drive; in all other cases it ranges from 2 to 25. Moreover, it can differ significantly even between drives that look identical at first glance, since it depends on the controller model and the firmware. Thus, the reliability of an SSD is determined not only by the type of flash memory, but also by how well the developers have optimized the FTL (Flash Translation Layer).
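To see how strongly write amplification affects endurance, one can use the common back-of-the-envelope estimate: host data that can be written ≈ capacity × program/erase cycles ÷ write amplification. The sketch below assumes ideal wear leveling and ignores over-provisioning; the 2 TB capacity and the 1,000-cycle figure are illustrative values taken from numbers mentioned in this article, not a specification of any particular drive.

```python
def host_writes_before_wearout_tb(capacity_tb, pe_cycles, write_amplification):
    """Rough amount of host data (TB) that can be written before the cells wear out.

    Assumes every cell is worn perfectly evenly (ideal wear leveling).
    """
    if write_amplification < 1:
        raise ValueError("write amplification cannot be lower than 1")
    return capacity_tb * pe_cycles / write_amplification


# Illustrative values: a 2 TB drive whose cells withstand 1,000 P/E cycles.
for waf in (1, 2, 25):
    print(waf, host_writes_before_wearout_tb(2, 1000, waf))
# 1 2000.0
# 2 1000.0
# 25 80.0
```

The same flash chips give a 25-fold difference in usable endurance between the best and the worst case, which is why firmware quality matters as much as the memory type.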

Why did the dramatic increase in storage density not affect the reliability of 3D NAND memory?

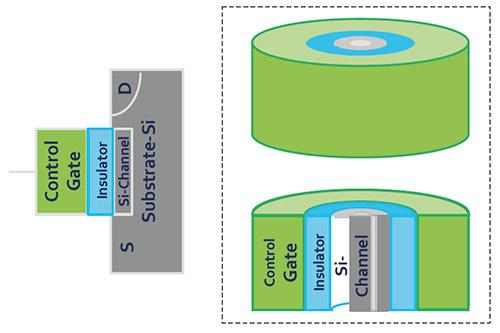

So, now we know how flash memory works and which factors determine the reliability of an SSD. It is time to find out what advantages the transition from “flat” chips to three-dimensional ones has brought. First of all, 3D NAND differs from its predecessors in using charge trap flash (CTF) cells instead of the usual floating gates. Whereas a floating gate stores charge in doped polysilicon, CTF stores it in an isolated region of non-conductive material, most often silicon nitride (SiN). This approach minimizes the probability of charge leakage and, as a result, makes the cells more stable.

The architecture of three-dimensional memory chips has also changed significantly compared with planar ones. Each cell now has a cylindrical structure: the outer layer is the control gate, and the inner layer is an insulator. The cells are stacked one above the other, and a polycrystalline silicon channel runs through the stack. It follows that the number of layers in a chip determines the number of cells in a stack.

Structure of a 3D NAND cell

This structure reduced the interference between cells and thereby simplified the write algorithm: since the charge state no longer had to be checked, a cell could be written in a single step. Another important point: 3D NAND is produced on mature, well-established process nodes, even though the packing density of the cells has increased several times over. For example, even 48-layer chips (the third generation of three-dimensional flash memory) were manufactured on a 40-nanometer process. This not only improved their reliability but also reduced production costs, since existing production lines needed only minimal modernization and the need for deep-ultraviolet lithography disappeared entirely.

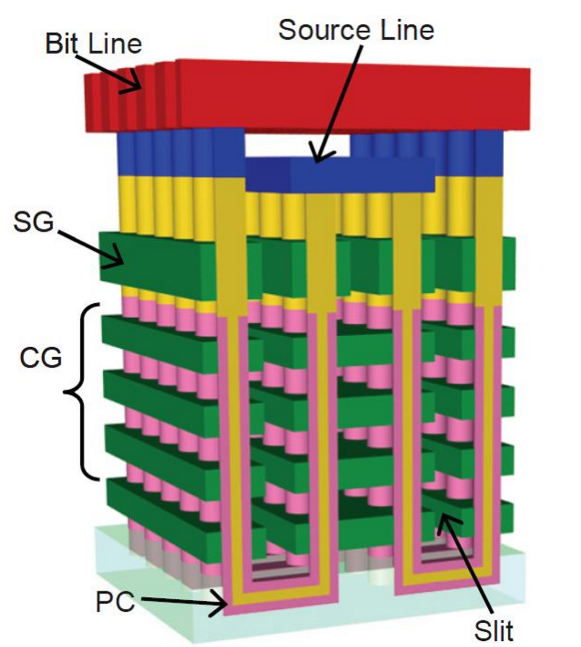

As for Western Digital products specifically, the current WD Black SN750 NVMe SSD, which went on sale on January 18, 2019, uses 64-layer TLC 3D NAND BiCS (Bit Cost Scalable) memory manufactured on a 28-nanometer process. In addition to a further 1.4-fold increase in packing density (the top model now has a capacity of 2 TB, twice that of the previous generation's flagship), an important feature of this type of chip is the use of U-shaped strings.

3D NAND BiCS Architecture

Since the switching transistor and the source line are now located in the upper part of the die, they are barely exposed to high temperatures, which can themselves cause errors during read/write operations. This made it possible to further increase the reliability of solid-state drives.

How do write algorithms affect SSD lifespan?

As noted above, no matter how durable and well protected the flash memory itself may be, its endurance will be wasted if the SSD developers have not bothered to create efficient write algorithms. Two very effective techniques are used to optimize this procedure: SLC caching and wear leveling.

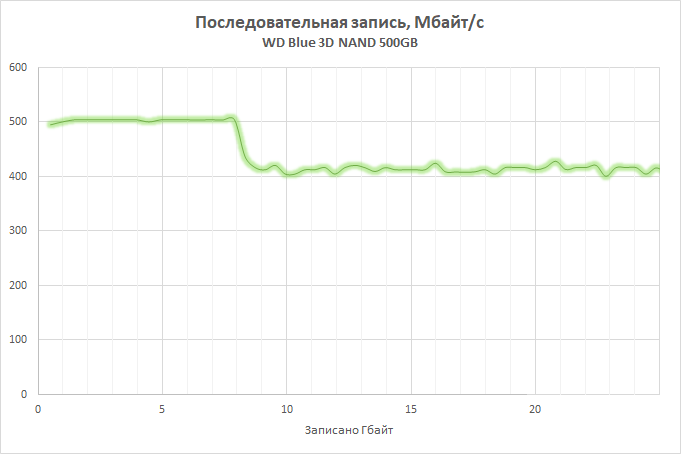

The essence of the first is that part of the available memory array is switched to SLC mode, in which only one bit of information is written to each cell. The size of this part depends on the total capacity of the drive (for example, when developing the WD Blue 3D NAND SSD we allotted 4 GB of cache for every 250 GB of capacity). The SLC area takes part in writing and consolidating the data stored on the SSD, which both speeds up operations considerably and reduces the rate of cell wear. Current Western Digital solid-state drives use nCache 3.0 technology, the latest version of which gained a direct-to-TLC function that strikes a balance between caching and speed: writes bypass the cache when it is full or when using the SLC buffer would be impractical. This relieves the cache and at the same time avoids a dramatic drop in performance when it fills up.
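The routing idea behind an SLC buffer with a direct-to-TLC fallback can be illustrated with a toy model. It is only a sketch: the 4 GB per 250 GB ratio comes from the text, but the class `WritePath`, its methods and the simple "overflow goes straight to TLC" rule are assumptions, and the real nCache 3.0 policy is certainly more elaborate.

```python
def slc_cache_size_gb(capacity_gb, gb_per_250=4):
    """Cache size using the 4 GB per 250 GB ratio mentioned in the text."""
    return capacity_gb / 250 * gb_per_250


class WritePath:
    """Hypothetical write router: SLC buffer first, overflow directly to TLC."""

    def __init__(self, capacity_gb):
        self.cache_size = slc_cache_size_gb(capacity_gb)
        self.cache_used = 0.0
        self.direct_to_tlc = 0.0

    def write(self, size_gb):
        """Return (GB absorbed by the SLC buffer, GB written directly to TLC)."""
        to_cache = min(size_gb, self.cache_size - self.cache_used)
        to_tlc = size_gb - to_cache
        self.cache_used += to_cache
        self.direct_to_tlc += to_tlc
        return to_cache, to_tlc

    def flush(self):
        """Idle time: move cached data into TLC and free the buffer."""
        flushed = self.cache_used
        self.cache_used = 0.0
        return flushed


path = WritePath(capacity_gb=1000)
print(path.cache_size)   # 16.0
print(path.write(10))    # (10, 0): fits in the buffer
print(path.write(10))    # (6.0, 4.0): buffer fills up, the rest bypasses it
```

The point of the fallback is visible in the last call: once the buffer is full, the remaining data still gets written immediately instead of waiting for the cache to be flushed.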

Dynamics of write speed on SSD when filling SLC-buffer

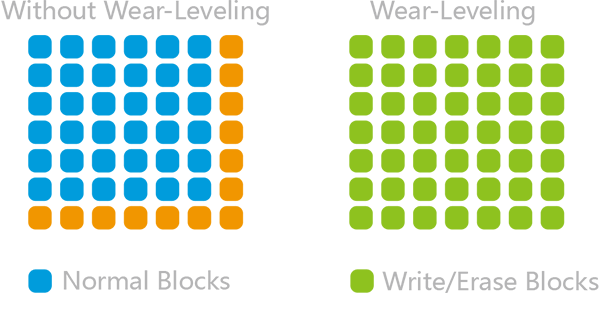

As for wear leveling, it ensures that all available blocks are used as evenly as possible. As you know, any operating system addresses data through logical block addresses (LBA), while the controller itself works with physical block addresses (PBA) and maps them to the logical ones. As a result, it does not matter where the fragments of a file are physically located, which makes it possible to write firmware that distributes the load evenly across the cells.

Wear leveling distributes the load evenly across cells

In general terms, the algorithm works as follows. You have bought a brand new SSD, and as long as there is free space on it, data is written to free blocks. As you use the drive, you start deleting unneeded files, and the garbage collection mechanism cleans up the freed blocks in the background. However, those blocks will be reused for writing only once the drive has no blocks left that have never been written to. Of course, in reality everything is much more complicated, but the principle is the same.
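The interplay between logical and physical addresses can be shown with a toy translation layer. This is a minimal sketch under strong assumptions: one logical address maps to one whole physical block, a stale block is erased immediately instead of in the background, and the class name `TinyFTL` is invented for the example.

```python
class TinyFTL:
    """Toy flash translation layer with least-worn-first block allocation."""

    def __init__(self, physical_blocks):
        self.erase_counts = [0] * physical_blocks
        self.l2p = {}                            # logical address -> physical block
        self.free = set(range(physical_blocks))

    def write(self, lba):
        # Pick the free block with the fewest erases, so blocks that have
        # never been written are always used before recycled ones.
        # (The toy model assumes a free block always exists.)
        pba = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(pba)

        old = self.l2p.get(lba)
        if old is not None:
            # The previous copy is stale: erase it and return it to the pool.
            self.erase_counts[old] += 1
            self.free.add(old)

        self.l2p[lba] = pba
        return pba


ftl = TinyFTL(physical_blocks=4)
for _ in range(8):
    ftl.write(0)             # the host keeps rewriting the same logical address
print(ftl.erase_counts)      # [2, 2, 2, 1]
```

Although the host hammers a single logical address, the erases end up spread almost evenly over all four physical blocks, which is the whole purpose of the mapping.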

Here we should make another important note about Western Digital products. When we decided to enter the SSD market, we could go one of two ways: buy memory and controllers from third-party manufacturers and focus only on developing and optimizing firmware, or set up full-cycle production. Launching such a project from scratch would have been impractical, and even buying SanDisk cost us a pretty penny. But the investment paid off in full: because we control chip production, we were able to tailor the firmware to the characteristics of the chips.

It should be understood that writing bits to flash memory cells is a much more complicated process than it may seem at first glance: it takes hundreds of parameters into account, the most important of which are the voltage required to transfer the charge and the write time. As the chips wear out, their physical characteristics change too: less voltage is needed to write data successfully, and the required write time also decreases. In most solid-state drives these parameters are constant, but in Western Digital SSDs they change dynamically as the cells wear, which maximizes the service life of each cell by minimizing the negative impact on the semiconductor structures.

QLC 3D NAND - latest generation flash memory

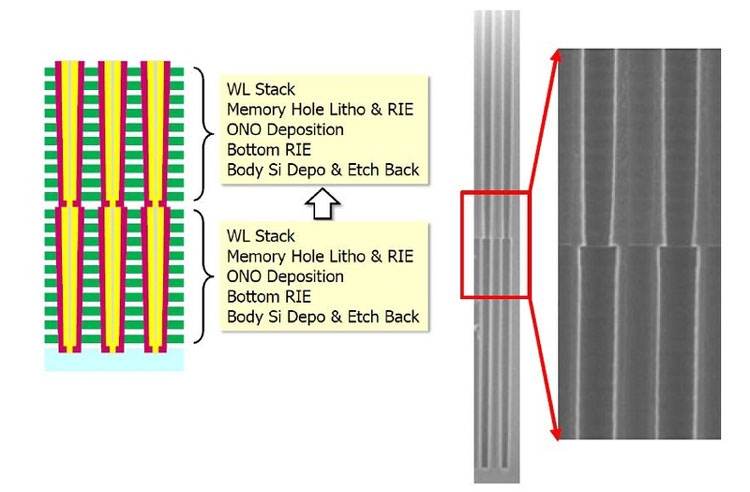

If you follow high-tech news, you probably know that Western Digital is actively developing next-generation three-dimensional memory, QLC 3D NAND (first announced in June 2018). QLC stands for quad-level cell. In other words, a single cell can hold one of 16 charge levels, encoding four bits of information instead of three. Compared with TLC 3D NAND, the recording density of QLC has increased by 33%, so the capacity of one 64-layer chip has grown to 768 Gbit. But this is not the limit: in August 2018 we began production of 96-layer chips. By increasing the number of layers we obtained a 50% gain in capacity and broke the 1 Tbit barrier: the new chips, called 3D NAND BiCS4, can hold 1.33 Tbit of information, or about 166 GB. Such a high storage density was achieved by combining two 48-layer dies (today this is the most economically viable approach).
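The figures in this paragraph follow from simple arithmetic, which the short sketch below reproduces. The helper names are invented for the example; the only inputs are the bits-per-cell counts and the chip capacities of 768 Gbit and 1.33 Tbit quoted in the text.

```python
def charge_levels(bits_per_cell):
    """A cell storing n bits must distinguish 2**n charge levels."""
    return 2 ** bits_per_cell


def gbit_to_gbyte(gbit):
    """Convert gigabits to gigabytes (8 bits per byte)."""
    return gbit / 8


for name, bits in (("SLC", 1), ("MLC", 2), ("TLC", 3), ("QLC", 4)):
    print(name, charge_levels(bits))     # 2, 4, 8 and 16 levels respectively

print(round((4 / 3 - 1) * 100))          # 33 (% more bits per cell than TLC)
print(gbit_to_gbyte(768))                # 96.0 GB per 64-layer QLC chip
print(gbit_to_gbyte(1330))               # 166.25 GB per 1.33 Tbit chip
```

Each extra bit doubles the number of charge levels the controller has to tell apart, which is why every step from SLC to QLC makes reliable writing harder.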

Combining two 48-layer dies into one 96-layer chip

Increased capacity can potentially reduce SSD performance, but there is no need to worry: the new 3D NAND BiCS4 flash memory uses four physical arrays instead of two, which makes it possible to optimize reads and writes by parallelizing operations. This, in turn, helps use the cache more effectively and control cell wear, keeping the service life of QLC chips at a level comparable to modern TLC solutions. However, a description of the technologies underlying QLC is beyond the scope of this article and certainly deserves one of its own.

Source: https://habr.com/ru/post/438334/