Using graphs to solve sparse systems of linear equations

Prelude

Numerically solving systems of linear equations is an indispensable step in many areas of applied mathematics, engineering, and the IT industry, whether you are working with graphics, computing the aerodynamics of an aircraft, or optimizing logistics. The now-fashionable "machine learning" would not have gotten far without it either. Moreover, solving linear systems usually consumes the largest share of the total computational cost. Clearly, this critical component calls for maximum speed optimization.

One often works with so-called sparse matrices: those in which zeros outnumber the other values by orders of magnitude. This is inevitable, for example, when dealing with partial differential equations or any other process in which the elements appearing in the defining linear relations are coupled only to their "neighbors". Here is a possible example of a sparse matrix for the one-dimensional Poisson equation $-\varphi'' = f$, well known from classical physics, on a uniform grid (yes, there are not that many zeros in it yet, but they multiply quickly as the grid is refined!):

$$\begin{pmatrix} 2 & -1 & 0 & 0 & 0 \\ -1 & 2 & -1 & 0 & 0 \\ 0 & -1 & 2 & -1 & 0 \\ 0 & 0 & -1 & 2 & -1 \\ 0 & 0 & 0 & -1 & 2 \end{pmatrix}$$

Their opposites, matrices in which the number of zeros is ignored and every entry is stored without exception, are called dense.

Sparse matrices are often, for various reasons, stored in the Compressed Sparse Column (CSC) format. This description uses two integer arrays and one floating-point array. Let the matrix have $nnz_A$ nonzeros and $N$ columns. The matrix entries are listed column by column from left to right, without exception. The first array, $i_A$, of length $nnz_A$, holds the row indices of the nonzero entries. The second array, $j_A$, of length $N+1$, holds... no, not the column indices, because then the matrix would be stored in coordinate (triplet) format. Instead, the second array holds the positions at which each column begins in the other two arrays, plus one extra entry for a dummy column at the end. Finally, the third array, $v_A$, of length $nnz_A$, holds the entries themselves, in the order matching the first array. Here, for example, assuming that rows and columns are numbered from zero, for the specific matrix

$$A = \begin{pmatrix} 0 & 1 & 0 & 4 & -1 \\ 7 & 0 & 2.1 & 0 & 3 \\ 0 & 0 & 0 & 10 & 0 \end{pmatrix}$$

the arrays are $i_A = \{1, 0, 1, 0, 2, 0, 1\}$, $j_A = \{0, 1, 2, 3, 5, 7\}$, $v_A = \{7, 1, 2.1, 4, 10, -1, 3\}$.
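To make the layout concrete, here is a minimal sketch that builds the three CSC arrays from a dense matrix by scanning its columns from left to right. The `Csc` struct and the `dense_to_csc` function are hypothetical names introduced only for this illustration; they are not part of the article's code.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical container for the three CSC arrays described above.
struct Csc {
    std::vector<std::size_t> iA;  // row indices of the nonzeros, length nnzA
    std::vector<std::size_t> jA;  // start position of every column, length N + 1
    std::vector<double> vA;       // nonzero values, length nnzA
};

// Convert a dense matrix (a vector of rows) to CSC, column by column.
Csc dense_to_csc(const std::vector<std::vector<double>>& dense) {
    Csc m;
    const std::size_t rows = dense.size();
    const std::size_t cols = rows ? dense[0].size() : 0;
    m.jA.push_back(0);  // the first column starts at position 0
    for (std::size_t j = 0; j < cols; ++j) {
        for (std::size_t i = 0; i < rows; ++i) {
            if (dense[i][j] != 0.0) {
                m.iA.push_back(i);
                m.vA.push_back(dense[i][j]);
            }
        }
        m.jA.push_back(m.iA.size());  // start of the next (or the dummy) column
    }
    return m;
}
```

Applied to the matrix $A$ above, this should reproduce exactly the arrays $i_A$, $j_A$ and $v_A$ listed in the text.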

Methods for solving linear systems fall into two broad classes: direct and iterative. Direct methods rely on representing the matrix as a product of two simpler matrices, which splits the solution into two easy steps. Iterative methods exploit the properties of linear spaces and rely on the age-old idea of successively approximating the unknowns until they converge to the desired solution; during the iteration the matrices are typically used only to multiply vectors. Iterative methods are much cheaper than direct ones but struggle with ill-conditioned matrices. When rock-solid reliability matters, direct methods are the way to go. These are the ones I want to touch on here.

Suppose that for a square matrix $A$ we have a decomposition of the form $A = LU$, where $L$ and $U$ are lower triangular and upper triangular matrices, respectively. The former has only zeros above the diagonal, the latter only zeros below it. How exactly this decomposition was obtained does not concern us here. Here is a simple example of such a decomposition:

$$\begin{pmatrix} 1 & -1 & -1 \\ 2 & -1 & -0.5 \\ 4 & -2 & -1.5 \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 \\ 2 & 1 & 0 \\ 4 & 2 & 1 \end{pmatrix} \begin{pmatrix} 1 & -1 & -1 \\ 0 & 1 & 1.5 \\ 0 & 0 & -0.5 \end{pmatrix}$$

How do we then solve the system $Ax = f$, for example with the right-hand side $f = \begin{pmatrix} 4 \\ 2 \\ 3 \end{pmatrix}$? The first stage is the forward solve (forward substitution). We denote $y := Ux$ and work with the system $Ly = f$. Since $L$ is lower triangular, a single loop finds all the components of $y$ one after another, from top to bottom:

$$\begin{pmatrix} 1 & 0 & 0 \\ 2 & 1 & 0 \\ 4 & 2 & 1 \end{pmatrix} \begin{pmatrix} y_1 \\ y_2 \\ y_3 \end{pmatrix} = \begin{pmatrix} 4 \\ 2 \\ 3 \end{pmatrix} \implies y = \begin{pmatrix} 4 \\ -6 \\ -1 \end{pmatrix}$$

The central idea is that, once the $i$-th component of $y$ has been found, it is multiplied by the column of $L$ with the same index, and the result is subtracted from the right-hand side. The matrix itself seems to collapse from left to right, shrinking as more and more components of $y$ are found. This process is called "column elimination" (the columns are, so to speak, destroyed one by one).

The second stage is the backward solve (backward substitution). Having found the vector $y$, we solve $Ux = y$. This time we walk through the components from the bottom up, but the idea stays the same: the $i$-th column is multiplied by the component $x_i$ just found and moved to the right-hand side, and the matrix collapses from right to left. The whole process for the matrix from the example is illustrated below:

$$\small \begin{pmatrix} 1 & 0 & 0 \\ 2 & 1 & 0 \\ 4 & 2 & 1 \end{pmatrix} \begin{pmatrix} y_1 \\ y_2 \\ y_3 \end{pmatrix} = \begin{pmatrix} 4 \\ 2 \\ 3 \end{pmatrix} \implies \begin{pmatrix} 1 & 0 \\ 2 & 1 \end{pmatrix} \begin{pmatrix} y_2 \\ y_3 \end{pmatrix} = \begin{pmatrix} -6 \\ -13 \end{pmatrix} \implies \begin{pmatrix} 1 \end{pmatrix} \begin{pmatrix} y_3 \end{pmatrix} = \begin{pmatrix} -1 \end{pmatrix}$$

$$\small \begin{pmatrix} 1 & -1 & -1 \\ 0 & 1 & 1.5 \\ 0 & 0 & -0.5 \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = \begin{pmatrix} 4 \\ -6 \\ -1 \end{pmatrix} \implies \begin{pmatrix} 1 & -1 \\ 0 & 1 \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} 6 \\ -9 \end{pmatrix} \implies \begin{pmatrix} 1 \end{pmatrix} \begin{pmatrix} x_1 \end{pmatrix} = \begin{pmatrix} -3 \end{pmatrix}$$

and our solution is $x = \begin{pmatrix} -3 \\ -9 \\ 2 \end{pmatrix}$.
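For readers who want to check the numbers above, here is a minimal dense sketch of both stages in their column-oriented form. The `Matrix` alias and the function names `dense_forward` and `dense_backward` are assumptions made for this illustration only, and no pivoting or zero-diagonal checks are attempted.

```cpp
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // dense matrix as a vector of rows

// Solve L y = f in place: x holds f on entry and y on exit (L is lower triangular).
void dense_forward(const Matrix& L, std::vector<double>& x) {
    const std::size_t n = x.size();
    for (std::size_t j = 0; j < n; ++j) {
        x[j] /= L[j][j];
        for (std::size_t i = j + 1; i < n; ++i)
            x[i] -= L[i][j] * x[j];  // eliminate column j
    }
}

// Solve U x = y in place: x holds y on entry and the solution on exit (U is upper triangular).
void dense_backward(const Matrix& U, std::vector<double>& x) {
    for (std::size_t j = x.size(); j-- > 0;) {
        x[j] /= U[j][j];
        for (std::size_t i = 0; i < j; ++i)
            x[i] -= U[i][j] * x[j];  // eliminate column j, moving right to left
    }
}
```

Calling `dense_forward` with the factor $L$ and $f = (4, 2, 3)^T$ and then `dense_backward` with the factor $U$ should walk through the same intermediate right-hand sides as the two chains shown above.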

If the matrix is dense, that is, stored in full as a one- or two-dimensional array with $O(1)$ access to any element, then this solution procedure, given an existing decomposition, is straightforward and easy to code, so we will not dwell on it. What if the matrix is sparse? In principle that is not hard either. Here is C++ code for the forward solve, in which the solution $x$ overwrites the right-hand side and the input is not validated (the CSC arrays describe a lower triangular matrix):

Algorithm 1:

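    #include <cstddef>
    #include <vector>

    // Forward solve of L x = f for a lower triangular matrix in CSC format.
    // x holds f on entry and the solution on exit. The diagonal entry is
    // assumed to be stored first in every column.
    void forward_solve(const std::vector<size_t>& iA, const std::vector<size_t>& jA,
                       const std::vector<double>& vA, std::vector<double>& x)
    {
        size_t j, p, n = x.size();
        for (j = 0; j < n; j++) // loop over the columns
        {
            x[j] /= vA[jA[j]];
            for (p = jA[j] + 1; p < jA[j + 1]; p++)
                x[iA[p]] -= vA[p] * x[j];
        }
    }

All further discussion concerns only the forward solve of the lower triangular system $Lx = f$.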
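As a quick check of Algorithm 1, the sketch below applies the same loop to the factor $L$ from the prelude, written out by hand in CSC form. The function `forward_solve_csc` repeats the loop only so that the snippet compiles on its own, and `solve_prelude_example` is a hypothetical helper introduced for this illustration.

```cpp
#include <cstddef>
#include <vector>

// Same loop as Algorithm 1, repeated here so that the snippet is self-contained.
// Assumes the diagonal entry is stored first in every column.
void forward_solve_csc(const std::vector<std::size_t>& iA,
                       const std::vector<std::size_t>& jA,
                       const std::vector<double>& vA,
                       std::vector<double>& x) {
    for (std::size_t j = 0; j + 1 < jA.size(); ++j) {
        x[j] /= vA[jA[j]];
        for (std::size_t p = jA[j] + 1; p < jA[j + 1]; ++p)
            x[iA[p]] -= vA[p] * x[j];
    }
}

// Hypothetical helper: solves L y = f for the 3 x 3 factor L of the prelude,
//     L = [1 0 0; 2 1 0; 4 2 1],  f = (4, 2, 3)^T.
std::vector<double> solve_prelude_example() {
    const std::vector<std::size_t> iA = {0, 1, 2, 1, 2, 2};  // row indices, column by column
    const std::vector<std::size_t> jA = {0, 3, 5, 6};        // column start positions
    const std::vector<double> vA = {1.0, 2.0, 4.0, 1.0, 2.0, 1.0};
    std::vector<double> x = {4.0, 2.0, 3.0};                 // right-hand side, overwritten
    forward_solve_csc(iA, jA, vA, x);
    return x;
}
```

The returned vector should coincide with $y = (4, -6, -1)^T$ from the worked example.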

Outset

And what if the right-hand side, i.e. the vector to the right of the equals sign in $Lx = f$, itself contains many zeros? Then it makes sense to skip the computations tied to the zero positions. The changes to the code are minimal:

Algorithm 2:

    void fake_sparse_forward_solve(const std::vector<size_t>& iA, const std::vector<size_t>& jA,
                                   const std::vector<double>& vA, std::vector<double>& x)
    {
        size_t j, p, n = x.size();
        for (j = 0; j < n; j++) // loop over the columns
        {
            if (x[j])
            {
                x[j] /= vA[jA[j]];
                for (p = jA[j] + 1; p < jA[j + 1]; p++)
                    x[iA[p]] -= vA[p] * x[j];
            }
        }
    }

The only thing we added is the `if` statement, whose purpose is to cut the number of arithmetic operations down to those actually needed. If, for example, the entire right-hand side consists of zeros, there is nothing to compute: the solution is the right-hand side itself.

Everything looks great, and of course it works, but here, after a long prelude, the problem finally comes into view: the asymptotically poor performance of this solver for large systems. And all because of the mere presence of the `for` loop. What is the problem? Even if the condition inside the `if` is true only very rarely, there is no escaping the loop itself, and it gives the algorithm a complexity of $O(N)$, where $N$ is the size of the matrix. However well modern compilers optimize loops, this complexity will make itself felt for large $N$. It is especially sad when the whole vector $f$ consists of zeros, because, as we said, there is then nothing to do at all! Not a single arithmetic operation! So what on earth are those $O(N)$ steps for?

Well, fine. Even so, why not just put up with the `for` loop running idle, given that real computations with real numbers, i.e. those that pass the `if`, will still be few? The catch is that this forward solve with a sparse right-hand side is itself often called inside outer loops and underlies the Cholesky decomposition $A = LL^T$ and the left-looking LU decomposition. Yes, the very decompositions without which all these forward and backward solves of linear systems would lose all practical meaning.

Theorem. If a matrix $A$ is symmetric positive definite (SPD), then it can be represented as $A = LL^T$ in exactly one way, where $L$ is a lower triangular matrix with positive entries on the diagonal.

For highly sparse SPD matrices, the up-looking Cholesky decomposition is used. If we write the decomposition schematically in block-matrix form,

$$\begin{pmatrix} \mathbf{L}_{11} & \mathbf{0} \\ \mathbf{l}_{12}^T & l_{22} \end{pmatrix} \begin{pmatrix} \mathbf{L}_{11}^T & \mathbf{l}_{12} \\ \mathbf{0} & l_{22} \end{pmatrix} = \begin{pmatrix} \mathbf{A}_{11} & \mathbf{a}_{12} \\ \mathbf{a}_{12}^T & a_{22} \end{pmatrix},$$

the whole factorization process can be logically divided into just three steps.

Algorithm 3:

- the up-looking Cholesky decomposition of the smaller block, $\mathbf{L}_{11} \mathbf{L}_{11}^T = \mathbf{A}_{11}$ (recursion!)

- a lower triangular system with a sparse right-hand side, $\mathbf{L}_{11} \mathbf{l}_{12} = \mathbf{a}_{12}$ (here it is!)

- the computation $l_{22} = \sqrt{a_{22} - \mathbf{l}_{12}^T \mathbf{l}_{12}}$ (a trivial operation)

In practice, this is implemented so that steps 2 and 3 are carried out inside one big loop, in that order, since step 3 needs the vector $\mathbf{l}_{12}$ produced by step 2. The matrix is thus traversed along the diagonal from top to bottom, with $L$ growing row by row on each iteration of the loop.

If in such an algorithm the complexity of the forward solve in step 2 is $O(N)$, where $N$ is the size of the lower triangular matrix $\mathbf{L}_{11}$ at a given iteration of the big loop, then the complexity of the whole decomposition will be at least $O(N^2)$! Oh, how we would hate that!
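The three steps of Algorithm 3 can be sketched for a dense matrix as follows. This is only an illustration of the row-by-row structure, not the sparse implementation the article is working towards: the name `cholesky_up_looking` and the `Matrix` alias are assumptions, the input is taken to be SPD without any checks, and step 1 (the recursion) shows up simply as the rows of $L$ computed on earlier iterations.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // dense matrix as a vector of rows

// Dense up-looking Cholesky sketch: returns lower triangular L with A = L * L^T.
// Assumes A is symmetric positive definite; nothing is validated.
Matrix cholesky_up_looking(const Matrix& A) {
    const std::size_t n = A.size();
    Matrix L(n, std::vector<double>(n, 0.0));
    for (std::size_t k = 0; k < n; ++k) {
        // Step 2: solve L11 * l12 = a12, where L11 is the leading k x k block
        // of L computed so far and a12 is the top part of column k of A.
        std::vector<double> x(k);
        for (std::size_t i = 0; i < k; ++i) x[i] = A[i][k];
        for (std::size_t j = 0; j < k; ++j) {
            x[j] /= L[j][j];
            for (std::size_t i = j + 1; i < k; ++i)
                x[i] -= L[i][j] * x[j];
        }
        // Step 3: l22 = sqrt(a22 - l12^T l12); l12^T becomes row k of L.
        double d = A[k][k];
        for (std::size_t j = 0; j < k; ++j) {
            L[k][j] = x[j];
            d -= x[j] * x[j];
        }
        L[k][k] = std::sqrt(d);
    }
    return L;
}
```

In this dense form the triangular solve in step 2 costs $O(k^2)$ per row. The sparse machinery discussed next aims to make that step cost only as much as the nonzeros it actually touches.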

State of the art

Many algorithms in one way or another mimic what a human would do to solve the problem. If we hand a person a lower triangular linear system whose right-hand side has only one or two nonzeros, they will of course first scan the right-hand side vector from top to bottom (that damned $O(N)$ loop!) to find those nonzeros. Then they will use only those, wasting no time on the zero components, because these do not affect the solution: there is no point in dividing zeros by the diagonal entries of the matrix, or in moving a column multiplied by zero to the right-hand side. This is exactly Algorithm 2 shown above. No miracles here. But what if the person is handed the indices of the nonzero components right away from some other source? For example, if the right-hand side is a column of some other matrix, as is the case in the Cholesky decomposition, then we get instant access to its nonzero components as long as we request them sequentially:

    // for the column with index j, visit only its nonzero entries:
    for (size_t p = jA[j]; p < jA[j+1]; p++)
    {
        // vA[p] is the nonzero entry of the matrix at row iA[p], column j
    }

The cost of such access is $O(nnz_j)$, where $nnz_j$ is the number of nonzero entries in column $j$. Thank God for the CSC format! As you can see, it is good for more than just saving memory.
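As a small self-contained illustration of this access pattern, the sketch below collects the row indices of the nonzeros in one column. The function name `column_pattern` is an assumption made for this example.

```cpp
#include <cstddef>
#include <vector>

// Return the row indices of the nonzeros stored in column j of a CSC matrix.
// Only the nnz_j stored entries of that column are visited.
std::vector<std::size_t> column_pattern(const std::vector<std::size_t>& iA,
                                        const std::vector<std::size_t>& jA,
                                        std::size_t j) {
    std::vector<std::size_t> rows;
    for (std::size_t p = jA[j]; p < jA[j + 1]; ++p)
        rows.push_back(iA[p]);  // nonzero at row iA[p], column j
    return rows;
}
```

For the $3 \times 5$ matrix $A$ from the prelude, column 3 should yield the rows 0 and 2, which is precisely the index set a sparse right-hand side taken from that column would have.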

With this in hand, we can capture the very essence of what happens when $Lx = f$ is solved by forward substitution for a sparse matrix $L$ and a sparse right-hand side $f$. Hold your breath: we take a nonzero component $f_i$ of the right-hand side, find the corresponding unknown $x_i$ by dividing by $L_{ii}$, and then, multiplying the whole $i$-th column by this newly found value, we introduce additional nonzeros into the right-hand side by subtracting that column from it! This process is described perfectly in the language of... graphs. Directed and acyclic ones, at that.

For a lower triangular matrix with no zeros on the diagonal, we define a connectivity graph. We assume that row and column numbering starts from zero.

Definition. The connectivity graph of a lower triangular matrix $L$ of size $N$ with no zeros on the diagonal is the set of nodes $V = \{0, \dots, N-1\}$ together with the directed edges $E = \{(j, i) \mid L_{ij} \ne 0\}$.

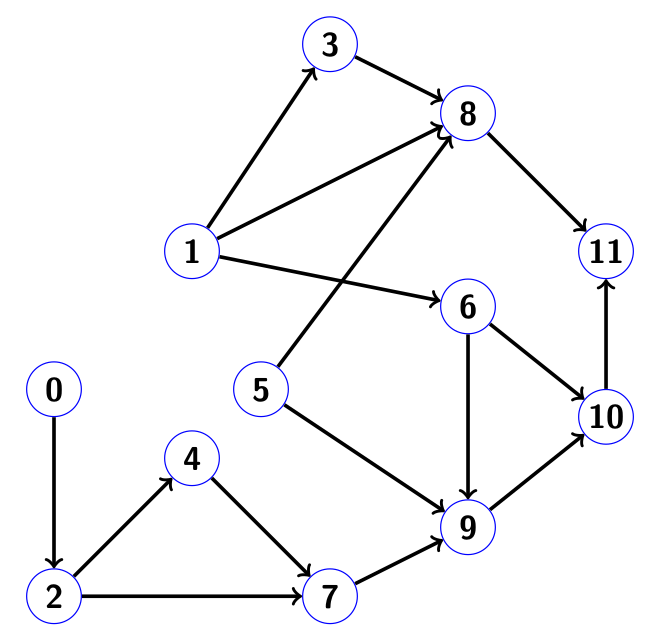

Here, for example, is what the connectivity graph looks like for a lower triangular matrix in which the numbers simply denote the index of the diagonal element and the dots denote nonzero entries below the diagonal (see the image above).

Definition. The reachability of a directed graph $G$ from a set of indices $W \subset V$ is the set of indices $R_G(W) \subset V$ such that every index $z \in R_G(W)$ can be reached by walking along the graph from some index $w(z) \in W$.

Example: for the graph in the image, $R_G(\{0, 4\}) = \{0, 4, 5, 6\}$. Clearly, $W \subset R_G(W)$ always holds.

If we think of each node of the graph as the index of a column of the matrix that generated it, then the neighboring nodes its edges point to correspond to the row indices of the nonzero entries in that column.

Let $nnz(x) = \{j \mid x_j \ne 0\}$ denote the set of indices of the nonzero positions in the vector $x$.

Gilbert's theorem (no, not the Hilbert the spaces are named after; in Russian the two surnames are spelled the same). The set $nnz(x)$, where $x$ is the solution of a sparse lower triangular system $Lx = f$ with a sparse right-hand side $f$, coincides with $R_G(nnz(f))$.

A remark of our own: the theorem ignores the unlikely possibility that nonzero numbers in the right-hand side cancel to zero while columns are being eliminated, e.g. $3 - 3 = 0$. This effect is called numerical cancellation. Tracking such spontaneously appearing zeros is a waste of time, so they are treated like any other number in a nonzero position.
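The theorem is easy to try out on a toy matrix. The sketch below computes $R_G(W)$ with a plain recursive traversal and, separately, the nonzero pattern of the solution obtained by the ordinary forward solve, so that the two sets can be compared. The function names `reach_from`, `reach` and `nnz_of_solution` are assumptions for this illustration, and the diagonal entry is again assumed to be stored first in every column.

```cpp
#include <cstddef>
#include <set>
#include <vector>

// Add node j and everything reachable from it to `seen`.
void reach_from(std::size_t j, const std::vector<std::size_t>& iA,
                const std::vector<std::size_t>& jA, std::set<std::size_t>& seen) {
    if (!seen.insert(j).second) return;        // j was visited before
    for (std::size_t p = jA[j]; p < jA[j + 1]; ++p)
        reach_from(iA[p], iA, jA, seen);       // follow the edge j -> iA[p]
}

// R_G(W) for the connectivity graph of a lower triangular CSC matrix.
std::set<std::size_t> reach(const std::vector<std::size_t>& iA,
                            const std::vector<std::size_t>& jA,
                            const std::vector<std::size_t>& W) {
    std::set<std::size_t> seen;
    for (std::size_t w : W) reach_from(w, iA, jA, seen);
    return seen;
}

// nnz(x), found numerically: run the forward solve on a copy of f and
// collect the positions that ended up nonzero.
std::set<std::size_t> nnz_of_solution(const std::vector<std::size_t>& iA,
                                      const std::vector<std::size_t>& jA,
                                      const std::vector<double>& vA,
                                      std::vector<double> x) {
    for (std::size_t j = 0; j < x.size(); ++j) {
        if (x[j] == 0.0) continue;
        x[j] /= vA[jA[j]];
        for (std::size_t p = jA[j] + 1; p < jA[j + 1]; ++p)
            x[iA[p]] -= vA[p] * x[j];
    }
    std::set<std::size_t> pattern;
    for (std::size_t j = 0; j < x.size(); ++j)
        if (x[j] != 0.0) pattern.insert(j);
    return pattern;
}
```

Barring numerical cancellation, `nnz_of_solution` and `reach` applied to the same right-hand side pattern should return the same set, which is all the theorem claims.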

An efficient way to carry out the forward solve with a given sparse right-hand side, assuming we have direct access to its nonzero components by index, is as follows.

- We traverse the graph by depth-first search, starting in turn from the index $i \in nnz(f)$ of each nonzero component of the right-hand side. We write the nodes we find into the array $R_G(nnz(f))$, and we do so in the order in which we back out of the graph! Think of an invading army: we occupy the country without cruelty, but once we are driven back, we get angry and destroy everything in our path.

  It is worth noting that the list of indices $nnz(f)$ does not have to be sorted in ascending order when it is fed to the depth-first search. We can start from the elements of $nnz(f)$ in any order. Different orderings of the indices in $nnz(f)$ do not affect the final solution, as we will see in the example.

  This step needs no knowledge of the real-valued array $v_A$ at all! Welcome to the world of symbolic analysis in direct sparse solvers!

- We proceed to the solution itself, having the array $R_G(nnz(f))$ from the previous step at our disposal. Columns are eliminated in the reverse order of how this array was written. Voilà!

Example

Consider an example in which both steps are shown in detail. Suppose we have a matrix of size 12 x 12 of the following form:

The corresponding connectivity graph has the form:

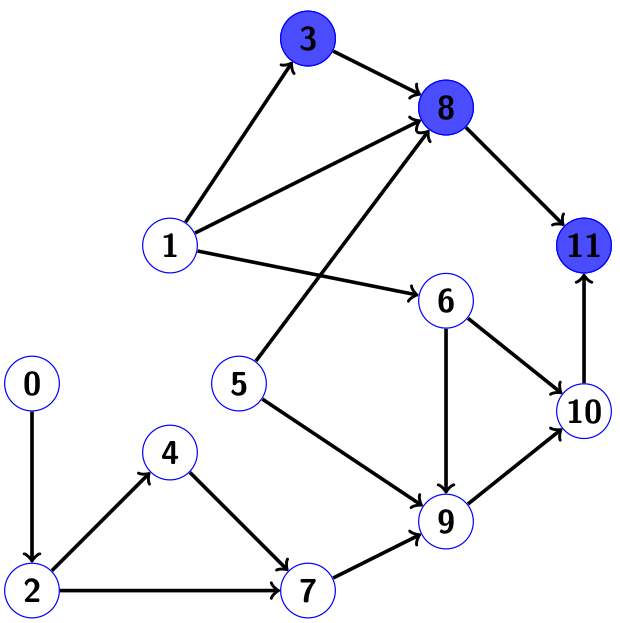

Suppose the right-hand side has nonzeros only at positions 3 and 5, that is, $nnz(f) = \{3, 5\}$. Let us traverse the graph starting from these indices in the order written. The depth-first search then proceeds as follows. First we visit the indices in the order $3 \to 8 \to 11$, not forgetting to mark them as visited. In the image below they are colored blue:

On the way back we put the indices into our array of indices of the nonzero components of the solution, $nnz(x) := \{\color{blue}{11}, \color{blue}{8}, \color{blue}{3}\}$. Next we try to follow $5 \to 8 \to \dots$, but we run into the already marked node 8, so we leave that route alone and move on to the branch $5 \to 9 \to \dots$. The result of this run is $5 \to 9 \to 10$. Node 11 cannot be visited, since it is already marked. So we go back and extend the array $nnz(x)$ with the new catch, marked in green: $nnz(x) := \{\color{blue}{11}, \color{blue}{8}, \color{blue}{3}, \color{green}{10}, \color{green}{9}, \color{green}{5}\}$. And here is the picture:

The pale green nodes 8 and 11 are the ones we wanted to visit during the second run but could not, because they had already been visited during the first.

So the array $nnz(x) = R_G(nnz(f))$ is built. On to the second step. It is simple: we run through the array $nnz(x)$ in reverse order (right to left), finding the corresponding components of the solution vector $x$ by dividing by the diagonal entries of $L$ and moving the columns to the right-hand side. The remaining components of $x$ were zeros and stay zeros. Schematically this is shown below, where the bottom arrow indicates the order in which columns are eliminated:

Note that in this order column 3 is eliminated later than columns 5, 9 and 10! The columns are not eliminated in ascending order, which would be a mistake for dense, i.e. non-sparse, matrices. For sparse matrices, however, this is perfectly normal. As the nonzero structure of the matrix in this example shows, eliminating columns 5, 9 and 10 before column 3 does not corrupt the components $x_5$, $x_9$, $x_{10}$ in the answer at all: they simply have "no intersection" with $x_3$. Our method took that into account! Likewise, eliminating column 8 after column 9 does not spoil the component $x_9$. A fine algorithm, isn't it?

If we traverse the graph from index 5 first and then from index 3, our array becomes $nnz(x) := \{\color{blue}{11}, \color{blue}{8}, \color{blue}{10}, \color{blue}{9}, \color{blue}{5}, \color{green}{3}\}$. Eliminating the columns in the reverse order of this array gives exactly the same solution as in the first case!
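Both traversal orders can be replayed with a few lines of code. The sketch below uses an adjacency-list graph that contains only the edges named in the walkthrough ($3 \to 8$, $5 \to 8$, $5 \to 9$, $8 \to 11$, $9 \to 10$, $10 \to 11$). This is an assumption: the full $12 \times 12$ pattern of the example may contain more entries. The names `Graph`, `dfs_post`, `reach_postorder` and `example_graph` are hypothetical.

```cpp
#include <cstddef>
#include <vector>

using Graph = std::vector<std::vector<std::size_t>>;  // adj[j] lists the targets of edges j -> i

// Depth-first search that records a node when the search backs out of it.
void dfs_post(std::size_t j, const Graph& adj, std::vector<bool>& marked,
              std::vector<std::size_t>& out) {
    marked[j] = true;
    for (std::size_t i : adj[j])
        if (!marked[i]) dfs_post(i, adj, marked, out);
    out.push_back(j);  // written on the way back
}

// Run the search from every start index in the given order.
std::vector<std::size_t> reach_postorder(const Graph& adj,
                                         const std::vector<std::size_t>& starts) {
    std::vector<bool> marked(adj.size(), false);
    std::vector<std::size_t> out;
    for (std::size_t s : starts)
        if (!marked[s]) dfs_post(s, adj, marked, out);
    return out;
}

// Only the edges mentioned in the walkthrough (an assumption, see above).
Graph example_graph() {
    Graph adj(12);
    adj[3] = {8};
    adj[5] = {8, 9};
    adj[8] = {11};
    adj[9] = {10};
    adj[10] = {11};
    return adj;
}
```

Starting from $\{3, 5\}$ and from $\{5, 3\}$ produces two different arrays, but in both of them every node appears after all the nodes it points to, so reading either array from right to left gives a valid elimination order.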

The cost of all our operations scales with the number of actual arithmetic operations and the number of nonzero components in the right-hand side, but not with the size of the matrix! We have achieved our goal.

Criticism

BUT! A critical reader will notice that the very assumption made at the start, that we have "direct access to the nonzero components of the right-hand side by index", already means that at some point we scanned the right-hand side from top to bottom to find these indices and gather them into the array $nnz(f)$, i.e. we have already spent $O(N)$ operations! What is more, the graph traversal itself requires preallocating memory of the maximum possible length (the indices found by the depth-first search have to be written somewhere!), so as not to suffer from reallocation as the array $nnz(x)$ grows, and that takes $O(N)$ operations too! So why all the fuss, one might ask?

Indeed, for a one-off solution of a sparse lower triangular system with a sparse right-hand side that is initially given as a dense vector, there is no point in spending developer time on all the algorithms above. They may even turn out slower than the brute-force method of Algorithm 2. But, as mentioned earlier, this machinery is indispensable for Cholesky factorization, so please do not throw tomatoes at me. Before Algorithm 3 starts, all the required memory of maximum length is allocated once, which takes $O(N)$ time. In the subsequent loop over the columns of $A$, the data is merely overwritten in an array of fixed length $N$, and only at those positions where overwriting is actually needed, thanks to direct access to the nonzero entries. That is exactly where the efficiency comes from!

C++ implementation

There is no need to build the graph itself as a data structure in code. It is enough to use it implicitly while working directly with the matrix. The algorithm takes all the required connectivity into account. Depth-first search is, of course, most conveniently implemented with plain recursion. Here, for example, is a recursive depth-first search starting from a single index:

    /*
     j      - starting index
     iA, jA - integer arrays of the lower triangular matrix stored in CSC format
     top    - current fill level of the array nnz(x); pass 0 in the very first call
     result - array of length N; on output holds nnz(x) at indices 0 through top-1 inclusive
     marked - array of marks of length N; pass it filled with zeros
    */
    void DepthFirstSearch(size_t j, const std::vector<size_t>& iA, const std::vector<size_t>& jA,
                          size_t& top, std::vector<size_t>& result, std::vector<int>& marked)
    {
        size_t p;
        marked[j] = 1; // mark node j as visited
        for (p = jA[j]; p < jA[j+1]; p++) // for every nonzero entry in column j
        {
            if (!marked[iA[p]]) // if iA[p] is not marked
            {
                DepthFirstSearch(iA[p], iA, jA, top, result, marked); // depth-first search from index iA[p]
            }
        }
        result[top++] = j; // write j into the array nnz(x)
    }

If `top` equals zero in the very first call to `DepthFirstSearch`, then once the whole recursive procedure finishes, `top` equals the number of indices found in the array `result`. Homework: write one more function that takes the array of indices of the nonzero components of the right-hand side and passes them one by one to `DepthFirstSearch`. Without it the algorithm is incomplete. Note that the real-valued array $v_A$ is not passed to the function at all, since symbolic analysis does not need it.

Simple as it is, this implementation has an obvious flaw: for large systems a stack overflow is just around the corner. Well then, here is a variant based on a loop instead of recursion. It is harder to follow, but it suits all occasions:

    /*
     j          - starting index
     N          - matrix size
     iA, jA     - integer arrays of the lower triangular matrix stored in CSC format
     top        - current fill level of the array nnz(x)
     result     - array of length N; on output holds a part of nnz(x) at indices
                  top through ret-1 inclusive, where ret is the return value
     marked     - array of marks of length N
     work_stack - auxiliary work array of length N
    */
    size_t DepthFirstSearch(size_t j, size_t N, const std::vector<size_t>& iA, const std::vector<size_t>& jA,
                            size_t top, std::vector<size_t>& result, std::vector<int>& marked,
                            std::vector<size_t>& work_stack)
    {
        size_t p, head = N - 1;
        int done;
        result[N - 1] = j; // initialize the recursion stack (it grows down from the end of result)
        while (head < N)
        {
            j = result[head]; // take j from the top of the recursion stack
            if (!marked[j])
            {
                marked[j] = 1; // mark node j as visited
                work_stack[head] = jA[j];
            }
            done = 1; // node j is finished unless an unvisited neighbor turns up
            // the +1 skips either the diagonal entry (stored first in the column)
            // or the neighbor whose search has just finished
            for (p = work_stack[head] + 1; p < jA[j+1]; p++) // examine all neighbors of j
            {
                // consider the neighbor with index iA[p]
                if (marked[iA[p]]) continue; // node iA[p] was visited earlier, skip it
                work_stack[head] = p;   // pause the search at node j
                result[--head] = iA[p]; // start the depth-first search at node iA[p]
                done = 0;               // node j is not finished yet
                break;
            }
            if (done) // the depth-first search at node j is complete
            {
                head++;            // remove j from the recursion stack
                result[top++] = j; // put j into the output array
            }
        }
        return (top);
    }

And this is what the generator of the nonzero structure $nnz(x)$ of the solution vector looks like:

    /*
     iA, jA     - integer arrays of the matrix stored in CSC format
     iF         - array of indices of the nonzero components of the right-hand side f
     nnzf       - number of nonzeros in the right-hand side f
     result     - array of length N; on output holds nnz(x) at indices 0 through ret-1
                  inclusive, where ret is the return value
     marked     - array of marks of length N; pass it filled with zeros
     work_stack - auxiliary work array of length N
    */
    size_t NnzX(const std::vector<size_t>& iA, const std::vector<size_t>& jA,
                const std::vector<size_t>& iF, size_t nnzf, std::vector<size_t>& result,
                std::vector<int>& marked, std::vector<size_t>& work_stack)
    {
        size_t p, N, top;
        N = jA.size() - 1;
        top = 0;
        for (p = 0; p < nnzf; p++)
            if (!marked[iF[p]]) // start a depth-first search from an unmarked node
                top = DepthFirstSearch(iF[p], N, iA, jA, top, result, marked, work_stack);
        for (p = 0; p < top; p++)
            marked[result[p]] = 0; // clear the marks
        return (top);
    }

Finally, putting everything together, we write the lower triangular solver itself for the case of a sparse right-hand side:

    /*
     iA, jA, vA - arrays of the matrix stored in CSC format
     iF         - array of indices of the nonzero components of the right-hand side f
     nnzf       - number of nonzeros in the right-hand side f
     vF         - array of the nonzero components of the right-hand side f
     result     - array of length N; on output holds nnz(x) at indices 0 through ret-1
                  inclusive, where ret is the return value
     marked     - array of marks of length N; pass it filled with zeros
     work_stack - auxiliary work array of length N
     x          - solution vector of length N, obtained on output; filled with zeros on input
    */
    size_t lower_triangular_solve(const std::vector<size_t>& iA, const std::vector<size_t>& jA,
                                  const std::vector<double>& vA, const std::vector<size_t>& iF,
                                  size_t nnzf, const std::vector<double>& vF,
                                  std::vector<size_t>& result, std::vector<int>& marked,
                                  std::vector<size_t>& work_stack, std::vector<double>& x)
    {
        size_t top, p, j;
        ptrdiff_t px;
        top = NnzX(iA, jA, iF, nnzf, result, marked, work_stack);
        for (p = 0; p < nnzf; p++)
            x[iF[p]] = vF[p]; // scatter the right-hand side into the dense vector
        for (px = (ptrdiff_t)top - 1; px >= 0; px--) // run through result[0..top-1] in reverse order
        {
            j = result[px];    // x(j) will be a nonzero
            x[j] /= vA[jA[j]]; // x(j) is found immediately
            for (p = jA[j] + 1; p < jA[j+1]; p++)
            {
                x[iA[p]] -= vA[p] * x[j]; // eliminate the j-th column
            }
        }
        return (top);
    }

We see that our loop runs only over the indices in the array $nnz(x)$ rather than over the whole set $0, 1, \dots, N-1$. Done!
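For experimenting on small inputs, here is a compact end-to-end sketch of the same two-step idea. It is not the implementation above: for brevity it uses a recursive search, allocates its work arrays on every call (which is exactly the $O(N)$ setup a real solver would hoist out of the loop), and returns a dense vector. The alias `Index` and the names `dfs` and `sparse_rhs_solve` are assumptions made for this example.

```cpp
#include <cstddef>
#include <vector>

using Index = std::vector<std::size_t>;

// Recursive depth-first search that records nodes on the way back (small N only).
void dfs(std::size_t j, const Index& iA, const Index& jA,
         std::vector<char>& marked, Index& order) {
    marked[j] = 1;
    for (std::size_t p = jA[j]; p < jA[j + 1]; ++p)
        if (!marked[iA[p]]) dfs(iA[p], iA, jA, marked, order);
    order.push_back(j);
}

// Solve L x = f, where f is given by its nonzero indices iF and values vF.
// Assumes the diagonal entry is stored first in every column of L.
std::vector<double> sparse_rhs_solve(const Index& iA, const Index& jA,
                                     const std::vector<double>& vA,
                                     const Index& iF, const std::vector<double>& vF) {
    const std::size_t N = jA.size() - 1;
    std::vector<char> marked(N, 0);  // O(N) setup, done once per call in this sketch
    Index order;                     // nnz(x) in the order the search backs out
    for (std::size_t s : iF)
        if (!marked[s]) dfs(s, iA, jA, marked, order);

    std::vector<double> x(N, 0.0);
    for (std::size_t k = 0; k < iF.size(); ++k) x[iF[k]] = vF[k];  // scatter f

    for (std::size_t k = order.size(); k-- > 0;) {  // reverse order of the array
        const std::size_t j = order[k];
        x[j] /= vA[jA[j]];
        for (std::size_t p = jA[j] + 1; p < jA[j + 1]; ++p)
            x[iA[p]] -= vA[p] * x[j];  // eliminate column j
    }
    return x;
}
```

Only the columns listed in `order` are ever touched by the numerical loop; all other components of `x` keep their initial zeros.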

There is an implementation that does not use the `marked` array, in order to save memory. Instead, it uses an additional index set $V_1$ that does not intersect the set $V = \{0, \dots, N-1\}$; marking a node amounts to a one-to-one mapping into $V_1$ by a simple algebraic operation. However, in our era of cheap memory, saving it on a single array of length $N$ seems completely unnecessary.

In conclusion

The process of solving a sparse system of linear equations by a direct method is, as a rule, divided into three stages:

- Symbolic analysis

- Numerical factorization based on the data from the symbolic analysis

- Solution of the resulting triangular systems with a dense right-hand side

The second step, numerical factorization, is the most resource-intensive part and devours most (> 90%) of the computation time. The purpose of the first step is to bring down the high cost of the second. An example of symbolic analysis was presented in this post. However, it is the first step that demands the longest development time and the greatest mental effort from the developer. Good symbolic analysis requires knowledge of graph and tree theory and a certain "algorithmic flair". The second step is incomparably easier to implement.

As for the third step, it is in most cases the least demanding of all, both in implementation and in computation time.

A good introduction to direct methods for sparse systems is the book "Direct Methods for Sparse Linear Systems" by Tim Davis, a professor in the computer science department at Texas A&M University. The algorithm shown above is described there. I do not know Davis personally, although I once worked at the same university (albeit in a different department). If I am not mistaken, Davis himself took part in developing the solvers used in Matlab (!). Moreover, he even had a hand in the image generation for Google Street View (least squares method). By the way, none other than Bjarne Stroustrup himself, the creator of the C++ language, is listed in the same department.

Maybe someday I will write something else about sparse matrices or numerical methods in general. All the best!

Source: https://habr.com/ru/post/438716/