A sober look at Helm 2: “It is what it is...”

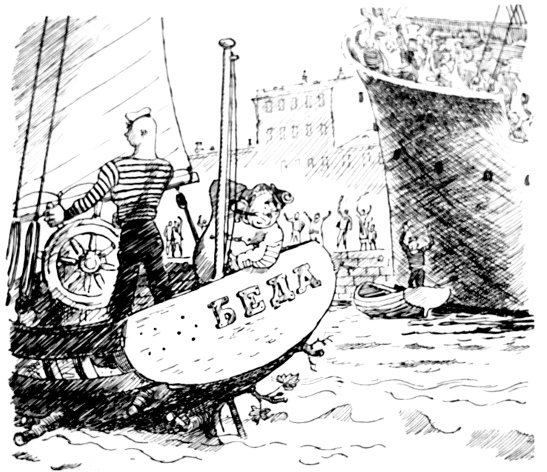

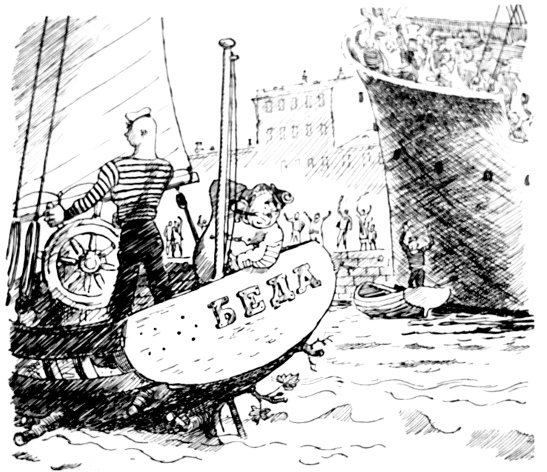

Like any other solution, Helm, the package manager for Kubernetes, has its pros, cons, and scope of application, so you should set your expectations correctly when using it...

Helm is part of our continuous deployment toolkit. At the time of writing, our clusters run more than a thousand applications, with about 4,000 installations of them in various configurations. We run into problems from time to time, but overall we are satisfied with the choice: we have had no downtime and no data loss.

The main purpose of this article is to give readers an objective assessment of the main problems of Helm 2 without categorical conclusions, and to share our experience and solutions.

[BUG] After a rollout, the state of release resources in the cluster does not match the Helm chart

Helm does not take the actual state of release resources in the cluster into account. On an upgrade, the result is determined only by the new configuration and the configuration saved for the previous release. As a result, the state of a resource in the cluster can diverge from the one recorded in the Helm registry, and Helm does not notice.

Consider how this problem manifests itself:

- The resource template in the chart corresponds to state X.

- The user installs the chart (Tiller saves resource state X).

- The user then changes the resource manually in the cluster (the state changes from X to Y).

- Without making any changes, the user runs `helm upgrade`... and the resource is still in state Y, although the user expects X.

That is not all. Suppose the user then changes the resource template in the chart (new state W). Running `helm upgrade` leads to one of two scenarios:

- Applying the X-to-W patch fails.

- After the patch is applied, the resource ends up in state Z, which does not match the desired state.

To avoid this problem, we suggest the following workflow: nobody changes resources manually, and Helm is the only tool used to manage release resources. Ideally, chart changes are versioned in a Git repository and applied only through CD.

If that is not an option, you can keep the state of release resources in sync. Manual synchronization might look like this:

- Find out the state of the release resources via `helm get`.

- Find out the state of the resources in Kubernetes via `kubectl get`.

- If the resources differ, synchronize Helm with Kubernetes:

  - Create a separate branch.

  - Update the chart manifests. The templates must match the resource states in Kubernetes.

  - Run `helm upgrade` to synchronize the state in the Helm registry with the cluster.

  - After that, the branch can be deleted and normal work can continue.
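The first two steps can be partly automated. A minimal sketch, assuming kubectl and an API server recent enough to support `kubectl diff` (it relies on server-side dry run) and kubectl's documented exit codes (0: no differences, 1: differences found, more than 1: error): the manifest Helm stored for the release is fed to `kubectl diff`, which compares it with the live objects. The function name `check_release_drift` is hypothetical.

```bash
#!/usr/bin/env bash

# check_release_drift RELEASE [NAMESPACE]
# Hypothetical helper: compares the manifest Helm stored for RELEASE with the
# live objects via "kubectl diff". Returns 0 if they match, 1 if the cluster
# has drifted, and 2 or more on errors (relies on kubectl diff's exit codes).
check_release_drift() {
  local release=$1 ns=${2:-} manifest rc=0
  local -a ns_args=()
  if [ -n "$ns" ]; then ns_args=(--namespace "$ns"); fi

  # what Helm believes the release consists of
  if ! manifest=$(helm get manifest "$release"); then
    echo "release $release: cannot read the stored manifest" >&2
    return 2
  fi

  # kubectl diff shows how the live objects differ from that manifest
  kubectl diff "${ns_args[@]}" -f - <<< "$manifest" || rc=$?

  case $rc in
    0) echo "release $release: cluster matches the stored manifest" ;;
    1) echo "release $release: drift detected, update the chart to match the cluster" ;;
    *) echo "release $release: kubectl diff failed (exit code $rc)" >&2 ;;
  esac
  return "$rc"
}
```

For example, `check_release_drift myrelease production` prints the differences for a release in the `production` namespace; a return code of 1 is the signal to start the branch-and-upgrade procedure.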

When patches are applied with `kubectl apply`, a so-called 3-way merge is performed, i.e. the actual state of the updated resource is taken into account. You can see the code of the algorithm here, and read about it here.

At the time of writing, the Helm developers are looking for ways to implement a 3-way merge in Helm 3. With Helm 2, things are not so rosy: a 3-way merge is not planned, but there is a PR that fixes the way resources are created; you can learn more or even participate in the relevant issue.
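To make the difference concrete, here is a toy model of the two merge strategies (not Helm's or kubectl's actual code; all names are hypothetical). A resource is treated as a flat set of `key=value` fields. `two_way_merge` computes the patch only from the saved and the new configuration, as described above for Helm 2, and then applies it to the live object; `three_way_merge` also takes the live object into account, roughly like `kubectl apply`. Real strategic merge patches handle nested fields, lists, and defaults, which this sketch ignores. It assumes bash 4.3+ for associative arrays and namerefs.

```bash
#!/usr/bin/env bash
# Toy model of merge semantics: a resource is a flat set of "key=value" lines.
# Not Helm's or kubectl's real code; function names are hypothetical.
# Requires bash 4.3+ (associative arrays and namerefs).

# load_fields ARRAY_NAME TEXT: fill associative array ARRAY_NAME from TEXT
load_fields() {
  local -n _fields=$1
  local key value
  while IFS='=' read -r key value; do
    if [ -n "$key" ]; then _fields[$key]=$value; fi
  done <<< "$2"
}

# print_fields ARRAY_NAME: print the array as "key=value" lines sorted by key
print_fields() {
  local -n _fields=$1
  local key
  for key in "${!_fields[@]}"; do
    printf '%s=%s\n' "$key" "${_fields[$key]}"
  done | sort
}

# two_way_merge SAVED NEW LIVE: the patch is the difference between the saved
# and the new config; the live object is only the target of the patch.
two_way_merge() {
  local -A saved=() new=() live=()
  load_fields saved "$1"; load_fields new "$2"; load_fields live "$3"
  local key
  for key in "${!new[@]}"; do
    # a field goes into the patch only if it changed between saved and new
    if [ "${saved[$key]-}" != "${new[$key]}" ]; then live[$key]=${new[$key]}; fi
  done
  for key in "${!saved[@]}"; do
    # fields removed from the chart are removed from the object
    if [ -z "${new[$key]+set}" ]; then unset 'live[$key]'; fi
  done
  print_fields live
}

# three_way_merge SAVED NEW LIVE: every field declared in the new config is
# enforced on the live object, fields dropped from the config are deleted,
# and fields that were never in the config are left alone.
three_way_merge() {
  local -A saved=() new=() live=()
  load_fields saved "$1"; load_fields new "$2"; load_fields live "$3"
  local key
  for key in "${!new[@]}"; do live[$key]=${new[$key]}; done
  for key in "${!saved[@]}"; do
    if [ -z "${new[$key]+set}" ]; then unset 'live[$key]'; fi
  done
  print_fields live
}
```

With `X=$'replicas=1\nimage=app:1'` (chart state X), `Y=$'replicas=3\nimage=app:1'` (after a manual scale) and `W=$'replicas=1\nimage=app:2'` (new chart state W), `two_way_merge "$X" "$X" "$Y"` prints `image=app:1` and `replicas=3`: the manual change survives the upgrade, while `three_way_merge` restores `replicas=1`. With the new chart, `two_way_merge "$X" "$W" "$Y"` yields `image=app:2` and `replicas=3`, a state Z that matches neither the chart nor the user's intent.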

[BUG] Error: no RESOURCE with the name NAME found

The problem occurs when a repeated rollout successfully creates new resources but the rollout as a whole fails. "New resources" here means those that were not part of the last installation of the chart.

After a failed rollout, the release is saved in the registry with the FAILED status, and during the next installation Helm relies on the state of the last DEPLOYED release, which knows nothing about the new resources. As a result, Helm tries to create these resources again and fails with the error "no RESOURCE with the name NAME found" (the message says the opposite of what actually happens, but this is exactly the problem). Part of the problem stems from the fact that Helm ignores the state of release resources in the cluster when creating the patch, as described in the previous section.

At the moment, the only solution is to delete the new resources manually.
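To find out which objects have to be deleted, you can compare the manifests Helm stored for the last DEPLOYED revision and for the FAILED one. A rough sketch, assuming you already know both revision numbers (for example, from `helm history RELEASE`) and that every object in the manifest has a top-level `kind:` line and a two-space-indented `name:` under `metadata:`; the YAML is parsed with a crude awk script rather than a real YAML parser, and the helper names are hypothetical. It only prints candidates, so review the list before running `kubectl delete`.

```bash
#!/usr/bin/env bash

# manifest_objects: read a multi-document manifest on stdin and print a sorted
# list of "Kind/name" lines. Crude line-based parsing, not a YAML parser: it
# expects top-level "kind:" and a two-space-indented "name:" under "metadata:".
manifest_objects() {
  awk '
    /^---/       { kind = ""; name = ""; in_meta = 0; next }
    /^kind:/     { kind = $2 }
    /^metadata:/ { in_meta = 1; next }
    /^[^ #]/     { in_meta = 0 }
    in_meta && /^  name:/ && name == "" { n = $2; gsub(/"/, "", n); name = n }
    kind != "" && name != "" && !seen[kind "/" name]++ { print kind "/" name }
  ' | sort -u
}

# new_resources_of_failed_revision RELEASE DEPLOYED_REV FAILED_REV
# Hypothetical helper: prints the objects that exist in the manifest of the
# FAILED revision but not in the last DEPLOYED one, i.e. the resources created
# by the failed rollout that have to be deleted by hand.
new_resources_of_failed_revision() {
  local release=$1 deployed_rev=$2 failed_rev=$3 old_manifest new_manifest
  old_manifest=$(helm get manifest --revision "$deployed_rev" "$release") || return 1
  new_manifest=$(helm get manifest --revision "$failed_rev" "$release") || return 1
  # comm -13 keeps only the lines unique to the second sorted list
  comm -13 <(manifest_objects <<< "$old_manifest") \
           <(manifest_objects <<< "$new_manifest")
}
```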

To avoid this situation, new resources created by the current upgrade/rollback can be deleted automatically if the command fails. After a long discussion with the Helm developers, we added a `--cleanup-on-fail` option to the upgrade/rollback commands in a PR; it enables automatic cleanup when a rollout fails. The PR is still under discussion while the best solution is being worked out.

Starting with Helm 2.13, `helm install` and `helm upgrade` have an `--atomic` option, which enables cleanup and rollback after a failed installation (for more details, see the PR).

[BUG] Error: watch closed before Until timeout

The problem may occur when a Helm hook runs for a long time (for example, during migrations), even though neither the timeout specified for `helm install/upgrade` nor `spec.activeDeadlineSeconds` of the corresponding Job has been exceeded.

This error is generated by the Kubernetes API server while Helm is waiting for the hook Job. Helm does not handle this error and fails immediately instead of retrying the request.

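As a workaround, you can increase the API server timeout by setting `--min-request-timeout=xxx` in the `/etc/kubernetes/manifests/kube-apiserver.yaml` file. This flag sets the minimum number of seconds the API server keeps a watch request open before timing it out.

[BUG] Error: UPGRADE FAILED: "foo" has no deployed releases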

If the first release via `helm install` failed, every subsequent `helm upgrade` fails with this error.

The solution seems simple: run `helm delete --purge` manually after a failed first installation. However, this manual step breaks CI/CD automation. To avoid manual commands, you can use werf for the rollout: with werf, the problematic release is automatically recreated on the next installation.

In addition, starting with Helm 2.13, it is enough to pass the `--atomic` option to `helm install` and `helm upgrade --install`: after a failed installation, the release is removed automatically (see the PR for details).

Autorollback

Helm lacks an `--autorollback` option that would remember the current successful revision at startup (and fail if the last revision is not successful) and, after an unsuccessful deploy, roll back to the saved revision.

Since it is critical for production that everything works without interruptions, solutions have to be found: the rollout must be predictable. To minimize the likelihood of production downtime, a multi-environment approach is often used (for example, staging, qa and production), in which the environments are rolled out one after another. With this approach, most problems are caught before they reach production, and in combination with autorollback it can achieve good results.

To set up autorollback, you can use the helm-monitor plugin, which lets you tie rollbacks to Prometheus metrics. A good article describing this approach is available here.

For some of our projects, we use a fairly simple approach:

- Before deploying, we remember the current revision (we assume that normally, if a release exists, it is necessarily in the DEPLOYED state):

```bash
export _RELEASE_NAME=myrelease
# revision of the release currently in the DEPLOYED state (empty if there is
# none); the anchored grep keeps e.g. "myrelease-2" from matching
export _LAST_DEPLOYED_RELEASE=$(helm list -adr | \
  grep "^${_RELEASE_NAME}[[:space:]]" | grep DEPLOYED | head -n1 | awk '{print $2}')
```

- Run install or upgrade:

```bash
# "..." stands for your chart and the rest of your install/upgrade arguments
helm upgrade --install "$_RELEASE_NAME" ... || export _DEPLOY_FAILED=1
```

- Check the deployment status and roll back to the saved revision:

```bash
if [ "$_DEPLOY_FAILED" = "1" ] && [ -n "$_LAST_DEPLOYED_RELEASE" ]; then
  helm rollback "$_RELEASE_NAME" "$_LAST_DEPLOYED_RELEASE"
fi
```

- Finish the pipeline with an error if the deployment failed:

```bash
if [ "$_DEPLOY_FAILED" = "1" ]; then exit 1; fi
```

Again, starting with Helm 2.13, it is enough to pass the `--atomic` option to `helm upgrade`: after a failed upgrade, a rollback is performed automatically (see the PR for details).

Waiting for release resources to become ready, and feedback during the rollout

By design, Helm should track the results of the corresponding liveness and readiness probes when the `--wait` option is used:

"--wait: if set, will wait until all Pods, PVCs, Services, and minimum number of Pods of a Deployment are in a ready state before marking the release as successful. It will wait for as long as --timeout."

This feature does not work properly yet: not all resources and not all API versions are supported. Moreover, the waiting process as designed does not meet our needs.

As with `kubectl wait`, there is no quick feedback and no way to tune this behavior. If the rollout fails, we only find out after the timeout expires. With a problematic installation, you want to end the rollout as soon as possible, finish the CI/CD pipeline, roll the release back to the working version, and start debugging.

If the problematic release has been rolled back and Helm returned no information during the rollout, what is there to debug?! With `kubectl wait`, you can set up a separate process to display logs, but it needs the names of the release resources. It is not immediately clear how to build a simple, working solution. Besides pod logs, useful information can also be found in the rollout progress and in resource events...

Our CI/CD utility werf can deploy a Helm chart, monitor resource readiness, and display related information. All data is combined into a single stream and shown in the log.

This logic is implemented in a separate project, kubedog. With this utility, you can subscribe to a resource, receive its events and logs, and learn about a failed rollout in time. That is, as a solution, after calling `helm install/upgrade` without the `--wait` option, you can call kubedog for each release resource.

We set out to build a tool that provides all the information needed for debugging in the output of the CI/CD pipeline. You can read more about the utility in our recent article.
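kubedog itself is not shown here, but the underlying idea can be approximated with plain kubectl. A minimal sketch, assuming the release's Deployments can be selected by a label (many Helm 2 charts set `release=<release name>`, newer scaffolds use `app.kubernetes.io/instance`; adjust the selector to your chart) and kubectl 1.11+ for `rollout status --timeout`. `kubectl rollout status` also exits early with an error when a Deployment exceeds its `progressDeadlineSeconds`. Unlike kubedog, this only follows Deployments and does not stream pod logs; `track_release_deployments` is a hypothetical name.

```bash
#!/usr/bin/env bash

# track_release_deployments SELECTOR [TIMEOUT]
# Hypothetical helper: waits for every Deployment matching the label SELECTOR
# (e.g. "release=myrelease") one by one and stops at the first failure,
# printing the object's description and events for debugging.
track_release_deployments() {
  local selector=$1 timeout=${2:-300s} deployments deploy
  deployments=$(kubectl get deployments -l "$selector" -o name) || return 2
  for deploy in $deployments; do
    echo "waiting for $deploy"
    if ! kubectl rollout status "$deploy" --timeout="$timeout"; then
      echo "rollout of $deploy failed or timed out" >&2
      # state and recent events of the failed Deployment
      kubectl describe "$deploy" >&2
      return 1
    fi
  done
  echo "all deployments matching $selector are ready"
}
```

It is meant to run right after `helm install/upgrade` without `--wait`, so that a failure is reported as soon as one Deployment is known to be broken rather than after a single global timeout.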

Perhaps a similar solution will appear in Helm 3 at some point, but for now our issue remains in limbo.

Security when using helm init by default

By default, `helm init` installs the server component into the cluster with superuser-like privileges, which can have undesirable consequences if third parties gain access to it.

To secure the cluster, you need to limit Tiller's capabilities and also secure the connection, i.e. the network channel over which the Helm components communicate.

The first is achieved with the standard Kubernetes RBAC mechanism, which restricts what Tiller can do, and the second by configuring SSL/TLS. Read more in the Helm documentation: Securing your Helm Installation.
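A minimal sketch of both measures for Helm 2: Tiller gets its own namespace and service account, is bound to the built-in `admin` ClusterRole through a RoleBinding (so its rights apply only inside that namespace), and is started with TLS and client certificate verification. It assumes the CA certificate and Tiller's certificate and key were generated beforehand (not covered here); the namespace, file names, and the `install_restricted_tiller` function are hypothetical. Note that a Tiller restricted this way cannot install cluster-scoped objects such as ClusterRoles or CRDs.

```bash
#!/usr/bin/env bash

# install_restricted_tiller NAMESPACE CA_CERT TILLER_CERT TILLER_KEY
# Hypothetical helper (Helm 2): installs Tiller into NAMESPACE with a dedicated
# service account whose rights are limited to that namespace, and enables TLS
# with verification of client certificates.
install_restricted_tiller() {
  local ns=$1 ca_cert=$2 tiller_cert=$3 tiller_key=$4
  kubectl create namespace "$ns" || return 1
  kubectl create serviceaccount tiller --namespace "$ns" || return 1
  # a RoleBinding (not a ClusterRoleBinding) grants the "admin" ClusterRole
  # only inside NAMESPACE
  kubectl create rolebinding tiller-admin --namespace "$ns" \
    --clusterrole=admin --serviceaccount="${ns}:tiller" || return 1
  helm init \
    --service-account tiller \
    --tiller-namespace "$ns" \
    --tiller-tls \
    --tiller-tls-verify \
    --tiller-tls-cert "$tiller_cert" \
    --tiller-tls-key "$tiller_key" \
    --tls-ca-cert "$ca_cert"
}
```

Clients then have to talk to this Tiller over TLS with their own certificates, e.g. `helm ls --tls --tiller-namespace <namespace>` together with `--tls-ca-cert`, `--tls-cert`, and `--tls-key`.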

Some believe that the server component, Tiller, is a serious architectural mistake: literally a foreign resource with root privileges in the Kubernetes ecosystem. We partly agree: the implementation is not perfect, but let's look at it from the other side. If you interrupt the deployment and kill the Helm client, the system will not be left in an undefined state: Tiller will bring the release to a valid status. It is also worth keeping in mind that although Helm 3 drops Tiller, these functions will still have to be performed in one way or another, by a CRD controller.

Martian Go templates

Go templates have a high entry threshold, but the technology has no limits in terms of capabilities and no problems with DRY. The basic principles, syntax, functions, and operators are covered in our previous article in the Helm series.

No secrets out of the box

It is convenient to store and maintain the application code, the infrastructure, and the rollout templates in one place, and secrets are no exception.

Helm does not support secrets out of the box, but there is the helm-secrets plugin, which is essentially a layer between sops, Mozilla's secrets manager, and Helm.
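In essence, such a layer decrypts the values file with sops, hands the plaintext to Helm, and removes it afterwards. A minimal hand-rolled sketch of that idea (not the plugin's code), assuming `sops` is installed and has access to the decryption key and that the encrypted file is a YAML values file; `upgrade_with_sops_values` is a hypothetical name.

```bash
#!/usr/bin/env bash

# upgrade_with_sops_values RELEASE CHART ENCRYPTED_VALUES [extra helm args...]
# Hypothetical helper: decrypts a sops-encrypted values file into a private
# temporary file, passes it to "helm upgrade --install", and always deletes it.
upgrade_with_sops_values() {
  local release=$1 chart=$2 encrypted=$3 plain rc=0
  shift 3
  plain=$(mktemp) || return 1   # mktemp creates the file readable only by us
  if ! sops --decrypt "$encrypted" > "$plain"; then
    rm -f "$plain"
    return 1
  fi
  helm upgrade --install "$release" "$chart" -f "$plain" "$@" || rc=$?
  rm -f "$plain"                # never leave plaintext secrets on disk
  return "$rc"
}
```

The design choice here is to keep the decrypted values only for the duration of the Helm call and to propagate Helm's exit code, so a CI job still fails when the deployment fails. If the process is killed mid-run, the temporary file can remain, which a production-grade tool would also have to handle.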

For secrets, we use our own solution implemented in werf (see the secrets documentation). Its features include:

- Ease of implementation.

- Storing a secret in a separate file, not just in YAML. This is convenient for certificates and keys.

- Regenerating secrets with a new key.

- Rolling out without the secret key (when using werf). This can be useful when a developer does not have the secret key but needs to run the deployment in a test or local environment.

Conclusion

Helm 2 is positioned as a stable product, yet many bugs remain in limbo (some of them are several years old!). Instead of fixes, or at least patches, all efforts go into developing Helm 3.

Although MRs and issues may hang for months (here is an example of how it took us several months to add the `before-hook-creation` policy for hooks), it is still possible to take part in the project's development. Every Thursday there is a half-hour Helm developer meeting where you can learn about the team's priorities and current directions, ask questions, and push your own work forward. The meeting and other communication channels are described in detail here.

Whether to use Helm is, of course, up to you. Our current position is that, despite its shortcomings, Helm is an acceptable deployment solution, and taking part in its development benefits the whole community.

PS

Read also in our blog:

- “Creating packages for Kubernetes with Helm: chart structure and templating”;

- “Practical acquaintance with the package manager for Kubernetes - Helm”;

- “Package Manager for Kubernetes - Helm: Past, Present, Future”;

- “Practice with dapp. Part 2. Deploying Docker images in Kubernetes using Helm”.

Source: https://habr.com/ru/post/438814/