Kubernetes tutorial, part 1: applications, microservices and containers

At our request, Habr has created a Kubernetes hub, and we are pleased to publish the first article in it. Subscribe!

Kubernetes is easy. Why, then, do banks pay me a lot of money to work in this field, when anyone can master this technology in just a few hours?

If you doubt that Kubernetes can be learned so quickly, I suggest you try it yourself. Having worked through this material, you will be able to run a microservice-based application in a Kubernetes cluster. I can guarantee this, because this is exactly the method I use to teach our clients to work with Kubernetes. What makes this guide different from others? Quite a lot, actually. Most such materials begin by explaining the simple things: Kubernetes concepts and how to use the kubectl command. Their authors assume that the reader is already familiar with application development, microservices and Docker containers. We will go the other way. First, we will talk about how to run a microservice-based application on a local computer. Then we will look at building container images for each microservice. After that, we will get to know Kubernetes and walk through deploying the microservice-based application to a cluster managed by Kubernetes.

This gradual approach to Kubernetes gives the depth of understanding an ordinary person needs in order to see how simple everything in Kubernetes really is. Kubernetes is, of course, a simple technology, provided that those who want to master it know where and how it is used.

Now, without further ado, let's get to work and look at the application we will be working with.

Experimental application

Our application performs only one function. It takes a single sentence as input and, using text analysis tools, performs sentiment analysis on it, producing an estimate of the author's emotional attitude toward the subject.

Here is the main window of this application.

Web application for sentiment analysis of texts

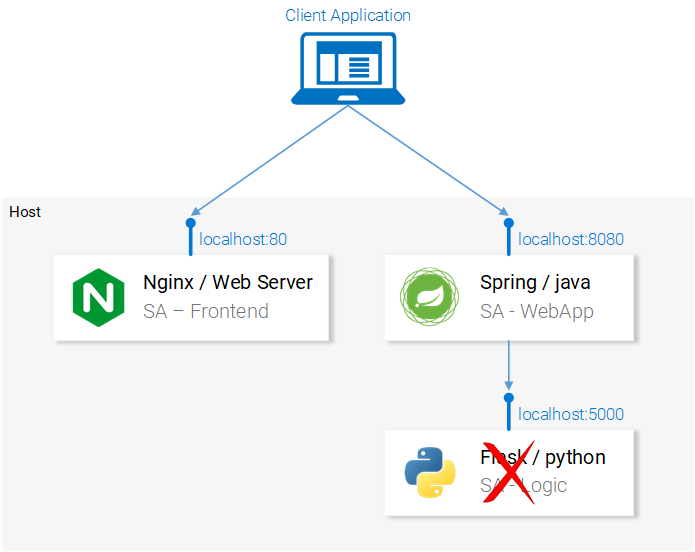

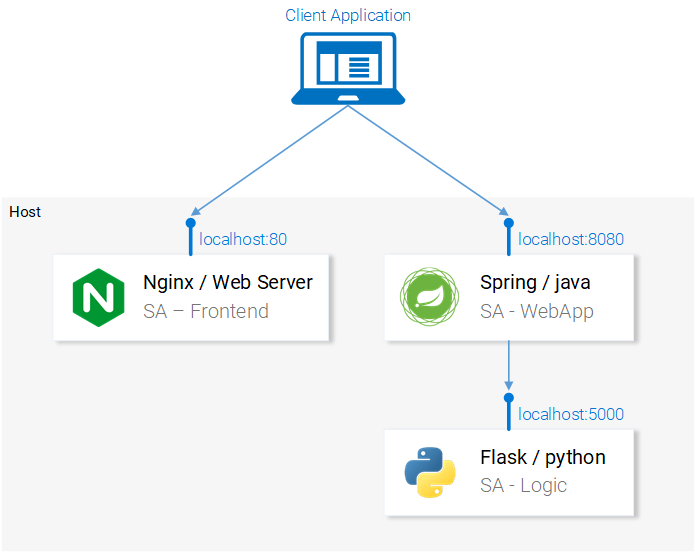

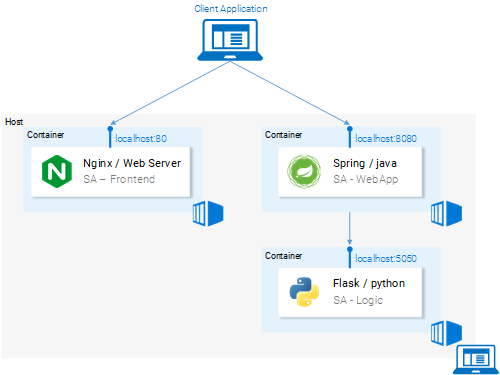

From a technical point of view, the application consists of three microservices, each of which solves a specific set of tasks:

- SA-Frontend is an Nginx web server that serves static React files.

- SA-WebApp is a web application written in Java that processes requests from the frontend.

- SA-Logic is a Python application that performs sentiment analysis of the text.

It is important to note that microservices do not exist in isolation. They implement the idea of separation of concerns, but at the same time they need to interact with each other.

Data flows in the application

The diagram above shows the numbered stages of the data flow through the application. Let's go through them:

- The browser requests the index.html file from the server (which, in turn, loads the React application bundle).

- The user interacts with the application, which triggers a call to the Spring-based web application.

- The web application forwards the text analysis request to the Python application.

- The Python application calculates the sentiment of the text and returns the result as the response to the request.

- The Spring application sends the response to the React application (which, in turn, shows the result of the text analysis to the user).
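To make these stages concrete, here is a minimal in-process sketch of the request chain in Python. It assumes the JSON shapes described later in this article ({"sentence": ...} going in, {"sentence": ..., "polarity": ...} coming back). The function names and the word-list scorer are hypothetical stand-ins: the real services are separate processes that talk over HTTP, and the real SA-Logic scores text with TextBlob, not with a word list.

```python
import json

# Hypothetical toy word lists standing in for TextBlob inside SA-Logic.
POSITIVE = {"like", "love", "good", "great"}
NEGATIVE = {"hate", "bad", "awful", "terrible"}


def sa_logic(request_body: str) -> str:
    """Stage 4: score the sentence and echo it back with a polarity in [-1, 1]."""
    sentence = json.loads(request_body)["sentence"]
    words = [w.strip("!?.,").lower() for w in sentence.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    polarity = max(-1.0, min(1.0, score / max(len(words), 1)))
    return json.dumps({"sentence": sentence, "polarity": polarity})


def sa_webapp(request_body: str) -> str:
    """Stage 3: forward the body unchanged to SA-Logic (over HTTP in reality)."""
    return sa_logic(request_body)


def browser_send(sentence: str) -> dict:
    """Stages 2 and 5: the frontend posts JSON and reads the JSON reply."""
    body = json.dumps({"sentence": sentence})
    return json.loads(sa_webapp(body))


print(browser_send("I like yogobella!"))
```

The point of the sketch is the shape of the chain: the frontend never talks to SA-Logic directly, and SA-WebApp acts as a pass-through for the same JSON contract.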

The code for all of these applications can be found here. I recommend that you clone this repository right now, as there are many interesting experiments with it ahead of us.

Running a microservice-based application on a local computer

For the application to work, we need to run all three microservices. Let's start with the nicest of them: the frontend application.

▍Configuring React for Local Development

To run the React application, you need to install the Node.js platform and NPM on your computer. Once they are installed, go to the sa-frontend project folder and run the following command:

```bash
npm install
```

This command downloads the dependencies listed in the package.json file into the node_modules folder. Once the dependencies are downloaded, run the following command in the same folder:

```bash
npm start
```

That's all. The React application is now running, and you can access it by opening localhost:3000 in your browser. You can change something in its code and immediately see the effect of the change in the browser. This is possible thanks to so-called hot module replacement, which makes front-end development a simple and enjoyable experience.

▍Preparing the React application for production

To actually use the React application, we need to convert it into a set of static files and serve them to clients with a web server.

To build the React application, again go to the sa-frontend folder in the terminal and run the following command:

```bash
npm run build
```

This creates a build directory in the project folder. It contains all the static files the React application needs in order to work.

▍Serving static files with Nginx

First, you need to install and run the Nginx web server. Here you can download it and find instructions for installing and running it. Then copy the contents of the sa-frontend/build folder to the [your_nginx_installation_dir]/html folder.

With this approach, the index.html file generated while building the React application will be available at [your_nginx_installation_dir]/html/index.html. This is the file that the Nginx server returns by default when it is accessed. The server is configured to listen on port 80, but you can change this as needed by editing the [your_nginx_installation_dir]/conf/nginx.conf file.

Now open your browser and go to localhost:80. You will see the React application page.

React application served by the Nginx server
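If you only want to check the contents of the build folder before installing Nginx, Python's standard library can serve a directory of static files as well. The sketch below is a stand-in for a quick preview, not a replacement for the Nginx setup described above; the function name, directory and port are assumptions to adjust.

```python
import functools
import http.server
import threading


def serve_directory(directory: str, port: int = 8000) -> http.server.ThreadingHTTPServer:
    """Serve static files from `directory` on `port` in a background thread.

    Like Nginx with its default config, SimpleHTTPRequestHandler returns
    index.html when the root path '/' is requested. Port 0 picks a free port.
    """
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=directory)
    server = http.server.ThreadingHTTPServer(("127.0.0.1", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, calling serve_directory("build") from the sa-frontend folder after npm run build would make the page available at localhost:8000 until server.shutdown() is called.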

If you now type something in the Type your sentence field and click the Send button, nothing will happen. But if you look at the console, you will see error messages there. To understand exactly where these errors come from, let's analyze the application code.

▍Analysis of the front-end application code

Looking at the code of the App.js file, we can see that clicking the Send button calls the analyzeSentence() method. The code for this method is shown below. Note that each line with a comment of the form #N is explained below the code. We will analyze other code fragments in the same way.

```javascript
analyzeSentence() {
    fetch('http://localhost:8080/sentiment', {  // #1
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify({
            sentence: this.textField.getValue()
        })  // #2
    })
        .then(response => response.json())
        .then(data => this.setState(data));  // #3
}
```

1. The URL to which the POST request is sent. It is assumed that an application listening for such requests is available at this address.

2. The request body sent to the application. Here is an example of the request body:

```json
{
    "sentence": "I like yogobella!"
}
```

3. When the response arrives, the component's state is updated, which causes the component to re-render. If we receive data (that is, a JSON object containing the entered sentence and its calculated polarity), the Polarity component is rendered, since the corresponding condition is met. Here is how we describe the component:

```javascript
const polarityComponent = this.state.polarity !== undefined ?
    <Polarity sentence={this.state.sentence}
              polarity={this.state.polarity}/> :
    null;
```

The code looks quite workable. So what is wrong? If you guessed that nothing is yet listening at the address to which the application sends its POST request, you are absolutely right. To handle requests sent to http://localhost:8080/sentiment, we need to run the Spring-based web application.

We need a Spring application that can accept a POST request.
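To see exactly what the frontend sends, here is a sketch of the same request built with Python's standard library; it can be handy for poking at the backend without a browser once SA-WebApp is running. The function names are hypothetical; only the URL, method, header and body come from App.js.

```python
import json
import urllib.request

# Address that analyzeSentence() in App.js posts to.
SENTIMENT_URL = "http://localhost:8080/sentiment"


def build_sentiment_request(sentence: str, url: str = SENTIMENT_URL) -> urllib.request.Request:
    """Build the same POST request that analyzeSentence() sends with fetch()."""
    body = json.dumps({"sentence": sentence}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def analyze_sentence(sentence: str, url: str = SENTIMENT_URL) -> dict:
    """Send the request and decode the JSON reply (requires SA-WebApp to be running)."""
    with urllib.request.urlopen(build_sentiment_request(sentence, url)) as response:
        return json.loads(response.read().decode("utf-8"))
```

Right now analyze_sentence("I like yogobella!") would fail with a connection error, for the same reason the browser shows errors: nothing listens on port 8080 yet.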

▍Setting up the Spring-based web application

To build and run the Spring application, you need JDK 8 and Maven, with properly configured environment variables. Once these are installed, you can continue working on the project.

▍Packaging the application into a jar file

Navigate to the sa-webapp folder in the terminal and enter the following command:

```bash
mvn install
```

After this command runs, a target directory will be created in the sa-webapp folder. It will contain the Java application packaged as the jar file sentiment-analysis-web-0.0.1-SNAPSHOT.jar.

▍Starting the Java application

Go to the target folder and start the application with the following command:

```bash
java -jar sentiment-analysis-web-0.0.1-SNAPSHOT.jar
```

This command fails with an error. To start fixing it, let's look at the exception information in the stack trace:

```
Error creating bean with name 'sentimentController': Injection of autowired dependencies failed; nested exception is java.lang.IllegalArgumentException: Could not resolve placeholder 'sa.logic.api.url' in value "${sa.logic.api.url}"
```

The most important part for us is the message saying that the placeholder sa.logic.api.url could not be resolved, that is, the application cannot find a value for this property. Let's analyze the code in which the error occurs.

▍Java application code analysis

Here is the code snippet where the error occurs.

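```java
import org.springframework.beans.factory.annotation.Value;
import org.springframework.web.bind.annotation.CrossOrigin;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.client.RestTemplate;

// SentenceDto and SentimentDto are the project's own DTO classes (not shown here).
@CrossOrigin(origins = "*")
@RestController
public class SentimentController {

    @Value("${sa.logic.api.url}")    // #1
    private String saLogicApiUrl;

    @PostMapping("/sentiment")
    public SentimentDto sentimentAnalysis(
            @RequestBody SentenceDto sentenceDto) {
        RestTemplate restTemplate = new RestTemplate();

        return restTemplate.postForEntity(
                saLogicApiUrl + "/analyse/sentiment",    // #2
                sentenceDto, SentimentDto.class)
                .getBody();
    }
}
```

- #1: SentimentController has a saLogicApiUrl field. Its value is injected from the sa.logic.api.url property.

- #2: The saLogicApiUrl string is concatenated with the /analyse/sentiment path. Together they form the address used to send requests to the microservice that performs the text analysis.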
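To see why a missing property stops the application from starting, here is a simplified Python model of what happens: the ${...} placeholder is looked up among the available properties, an error is raised if it is absent, and the resolved value is concatenated with /analyse/sentiment. This is an illustration under assumptions, not Spring's code; the function names are hypothetical, and Spring's real resolver supports more syntax (for example ${key:default} defaults) than this sketch.

```python
import re

PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def resolve(value: str, properties: dict) -> str:
    """Replace each ${key} in `value` with properties[key]; fail if a key is absent."""
    def lookup(match):
        key = match.group(1)
        if key not in properties:
            raise ValueError(f"Could not resolve placeholder '{key}' in value \"{value}\"")
        return properties[key]

    return PLACEHOLDER.sub(lookup, value)


def logic_endpoint(properties: dict) -> str:
    """Mirror saLogicApiUrl + "/analyse/sentiment" from SentimentController."""
    sa_logic_api_url = resolve("${sa.logic.api.url}", properties)
    return sa_logic_api_url + "/analyse/sentiment"
```

Note that the concatenation is literal: a value with a trailing slash, such as http://localhost:5000/, would produce a double slash in the resulting path.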

▍Setting the property value

In Spring, the standard source of property values is the application.properties file, which can be found in sa-webapp/src/main/resources. But it is not the only way to set property values. This can also be done with a command like the following:

```bash
java -jar sentiment-analysis-web-0.0.1-SNAPSHOT.jar --sa.logic.api.url=WHAT.IS.THE.SA.LOGIC.API.URL
```

The value of this property should point to the address of our Python application. By setting it, we tell the Spring web application where to send text analysis requests.

To keep things simple, we will decide that the Python application will be available at localhost:5000, and try not to forget it. As a result, the command to start the Spring application looks like this:

```bash
java -jar sentiment-analysis-web-0.0.1-SNAPSHOT.jar --sa.logic.api.url=http://localhost:5000
```
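Spring Boot gives command-line arguments of the form --name=value a higher priority than application.properties, which is why the command above works without editing any files. The sketch below models only that precedence rule; it is a simplification (Spring Boot consults many more property sources), and the function names are hypothetical.

```python
def parse_command_line(args: list) -> dict:
    """Collect --name=value arguments, which Spring Boot treats as properties."""
    overrides = {}
    for arg in args:
        if arg.startswith("--") and "=" in arg:
            name, value = arg[2:].split("=", 1)
            overrides[name] = value
    return overrides


def effective_properties(file_properties: dict, args: list) -> dict:
    """Command-line values win over values read from application.properties."""
    merged = dict(file_properties)
    merged.update(parse_command_line(args))
    return merged


# Hypothetical: assume application.properties does not define the property yet.
from_file = {}
print(effective_properties(from_file, ["--sa.logic.api.url=http://localhost:5000"]))
```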

Our system is missing a Python application.

Now we only need to start the Python application, and the system will work as expected.

▍Python application setup

To run the Python application, you need Python 3 and pip installed, with the appropriate environment variables set correctly.

▍Install dependencies

Navigate to the sa-logic/sa project folder and run the following commands:

```bash
python -m pip install -r requirements.txt
python -m textblob.download_corpora
```

▍Starting the application

After installing the dependencies, we are ready to run the application:

```bash
python sentiment_analysis.py
```

After this command runs, we will see the following:

```
* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)
```

This means that the application is running and waiting for requests at localhost:5000/.

▍Examining the code

Let's look at the Python application code to understand how it responds to requests:

```python
from textblob import TextBlob
from flask import Flask, request, jsonify

app = Flask(__name__)                                    #1

@app.route("/analyse/sentiment", methods=['POST'])       #2
def analyse_sentiment():
    sentence = request.get_json()['sentence']            #3
    polarity = TextBlob(sentence).sentences[0].polarity  #4
    return jsonify(                                      #5
        sentence=sentence,
        polarity=polarity
    )

if __name__ == '__main__':
    app.run(host='0.0.0.0', port=5000)                   #6
```

1. Initializing the Flask object.

2. Setting the address that accepts POST requests.

3. Extracting the sentence property from the request body.

4. Creating an anonymous TextBlob object and getting the polarity value for the first sentence in the request body (in our case, this is the only sentence submitted for analysis).

5. Returning the response, whose body contains the text of the sentence and the polarity calculated for it.

6. Launching the Flask application, which will be available at 0.0.0.0:5000 (you can also reach it as localhost:5000).
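Because TextBlob and its corpora take a while to install, it can be convenient to test SA-WebApp against a stand-in that honours the same contract: POST /analyse/sentiment with a JSON body containing sentence, answered with JSON containing sentence and polarity. The sketch below uses only Python's standard library. The polarity it returns comes from a hypothetical fixed scorer, not from TextBlob, so treat it as a test double rather than a replacement for sentiment_analysis.py.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def fake_polarity(sentence: str) -> float:
    """Hypothetical stand-in for TextBlob(sentence).sentences[0].polarity."""
    return 0.5 if "like" in sentence.lower() else 0.0


class SentimentHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/analyse/sentiment":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        sentence = json.loads(self.rfile.read(length))["sentence"]
        body = json.dumps({"sentence": sentence,
                           "polarity": fake_polarity(sentence)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def start_fake_logic(port: int = 5000) -> ThreadingHTTPServer:
    """Listen on all interfaces, like app.run(host='0.0.0.0', port=5000)."""
    server = ThreadingHTTPServer(("0.0.0.0", port), SentimentHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

With start_fake_logic() running, the Spring application started with --sa.logic.api.url=http://localhost:5000 would receive the fake polarity instead of a real analysis.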

Now all the microservices that make up the application are running and configured to interact with each other. Here is a diagram of the application at this stage.

All the microservices that make up the application are up and running.

Now, before continuing, open the React application in the browser and try analyzing any sentence. If everything is done correctly, after clicking the Send button you will see the analysis results below the text field.

In the next section, we will talk about how to run our microservices in Docker containers. This is necessary in order to prepare the application to run in a Kubernetes cluster.

Docker Containers

Kubernetes is a system for automating the deployment, scaling and management of containerized applications. It is also called a container orchestrator. Since Kubernetes works with containers, we first need to obtain these containers before we can use it. But first, let's talk about what containers are. Perhaps the best answer to this question can be found in the Docker documentation:

A container image is a lightweight, standalone, executable package of software that includes everything needed to run it: application code, runtime, system tools, system libraries and settings. Containerized software runs in both Linux and Windows environments and will always work the same way, regardless of the infrastructure.

This means that containers can be run on any computer, including production servers, and the applications packaged in them will work the same way in any environment.

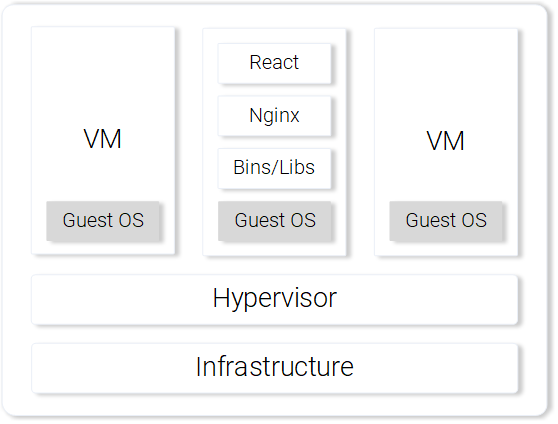

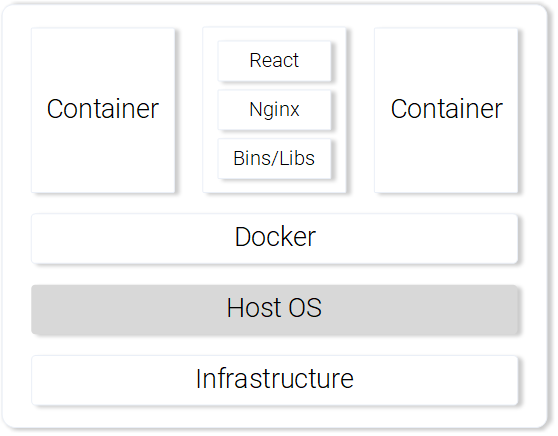

To explore the features of containers and compare them with other ways of running applications, let's look at an example of serving a React application with a virtual machine and with a container.

▍Serving the React application's static files with a virtual machine

If we serve static files using virtual machines, we will run into the following disadvantages:

- Inefficient use of resources, since each virtual machine runs a full-fledged operating system.

- Platform dependency. What works on a local computer may well not work on a production server.

- Slow and resource-intensive scaling of VM-based solutions.

Nginx web server serving static files in a virtual machine

If we use containers to solve the same problem instead, we gain the following strengths compared with virtual machines:

- Efficient use of resources: containers run on top of the host operating system through Docker instead of each carrying their own.

- Platform independence. A container that a developer runs on their own computer will work anywhere.

- Lightweight deployment thanks to image layers.

Nginx web server serving static files running in a container

We compared virtual machines and containers on only a few points, but even this is enough to get a feel for the strengths of containers. You can find out more about Docker containers here.

▍Building a container image for the React application

The main building block of a Docker container is the Dockerfile. At the beginning of this file, you specify the base image of the container, followed by a sequence of instructions describing how to create a container that meets the needs of a particular application.

Before we work on the Dockerfile, let's recall what we did to prepare the React application files for serving with Nginx:

- Building the React application package (npm run build).

- Starting the Nginx server.

- Copying the contents of the build directory from the sa-frontend project folder to the nginx/html server folder.

Below you can see how creating the container parallels the steps above that we performed on the local computer.

▍Preparing the Dockerfile for the SA-Frontend application

The Dockerfile for the SA-Frontend application consists of just two instructions. This is because the Nginx team has prepared a base image for Nginx, which we will use to build our image. Here are the two steps we need to describe:

- Use the Nginx image as the base of our image.

- Copy the contents of the sa-frontend/build folder to the nginx/html folder of the image.

Translated into a Dockerfile, this description looks like this:

```dockerfile
FROM nginx
COPY build /usr/share/nginx/html
```

As you can see, everything is very simple, and the contents of the file are quite readable and understandable. This file tells the system to take the nginx image with everything it already contains and copy the contents of the build directory to the nginx/html directory.

You may wonder how I know exactly where to copy the files from the build folder, that is, where the /usr/share/nginx/html path came from. There is nothing complicated here either: this information can be found in the image description.

▍Building the image and pushing it to the repository

Before we can work with the finished image, we need to push it to an image repository. For this, we will use Docker Hub, a free cloud platform for hosting Docker images. At this stage you need to do the following:

- Install Docker.

- Register on the Docker Hub website.

- Log in to your account by running the following command in the terminal:

```bash
docker login -u="$DOCKER_USERNAME" -p="$DOCKER_PASSWORD"
```

Now go to the sa-frontend directory in the terminal and run a command of the following form there:

```bash
docker build -f Dockerfile -t $DOCKER_USER_ID/sentiment-analysis-frontend .
```

Here and in similar commands below, replace $DOCKER_USER_ID with your Docker Hub username. For example, this part of the command might look like this: rinormaloku/sentiment-analysis-frontend.

This command can also be shortened by removing -f Dockerfile from it, since the Dockerfile is already in the folder where we run the command.

To push the finished image to the repository, we need the following command:

```bash
docker push $DOCKER_USER_ID/sentiment-analysis-frontend
```

After it runs, check the list of your repositories on Docker Hub to make sure the image was successfully pushed to the cloud storage.
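Since the same build-and-push pair will be repeated for every microservice, it can help to script it. Here is a small sketch that assembles the two commands shown above as argument lists for subprocess; the helper name is hypothetical. By default it only prints and returns the commands; with run=True it also executes them, which requires Docker to be installed and you to be logged in to Docker Hub.

```python
import shlex
import subprocess


def build_and_push(user: str, image: str, context: str = ".", run: bool = False) -> list:
    """Return (and optionally execute) the docker build and push commands for one image."""
    tag = f"{user}/{image}"
    commands = [
        ["docker", "build", "-f", "Dockerfile", "-t", tag, context],
        ["docker", "push", tag],
    ]
    for cmd in commands:
        print(shlex.join(cmd))
        if run:
            subprocess.run(cmd, check=True)  # stop at the first failing command
    return commands
```

For example, build_and_push("rinormaloku", "sentiment-analysis-frontend") prints the same two commands as in the text, run from the sa-frontend folder.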

▍Running the container

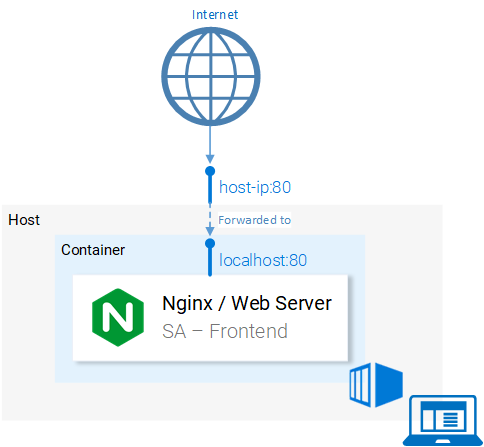

Now anyone can pull and run the image known as $DOCKER_USER_ID/sentiment-analysis-frontend. To do this, run the following sequence of commands:

```bash
docker pull $DOCKER_USER_ID/sentiment-analysis-frontend
docker run -d -p 80:80 $DOCKER_USER_ID/sentiment-analysis-frontend
```

Now the container is running, and we can continue by creating the other images we need. But before continuing, let's deal with the 80:80 construction, which appears in the run command and may seem confusing.

- The first 80 is the port number of the host (that is, the local computer).

- The second 80 is the port of the container to which requests should be forwarded.

Consider the following illustration.

Port Forwarding

The system forwards requests from port <hostPort> to port <containerPort>. That is, a request to port 80 on the computer is forwarded to port 80 in the container.

Since port 80 is open on the local computer, you can access the application from this computer at localhost:80. If your system does not support Docker natively, you can run the application in a Docker virtual machine, whose address will look like <docker-machine ip>:80. To find out the IP address of the Docker virtual machine, use the docker-machine ip command.

At this stage, after successfully starting the frontend application container, you should be able to open its page in the browser.
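The host:container order is easy to mix up, so here is a tiny sketch that parses a -p value the way it is read above. The helpers are hypothetical and handle only the two-number form used in this article; Docker also accepts other forms (for example with a leading IP address or a /udp suffix) that this sketch ignores.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_port: int       # port opened on the local machine
    container_port: int  # port the application listens on inside the container


def parse_publish(spec: str) -> PortMapping:
    """Parse "<hostPort>:<containerPort>", e.g. the 80:80 from docker run -p 80:80."""
    host, container = spec.split(":")
    return PortMapping(int(host), int(container))


def forward(mapping: PortMapping, port: int) -> Optional[int]:
    """Return the container port that a request to host `port` reaches, or None."""
    return mapping.container_port if port == mapping.host_port else None
```

For example, the -p 5050:5000 used later for sa-logic means that the host's port 5050 reaches the Flask application listening on port 5000 inside the container, while port 5000 on the host is not mapped at all.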

▍The .dockerignore file

While building the SA-Frontend image, you may have noticed that the process is extremely slow. This is because the image build context must be sent to the Docker daemon. The directory that forms the build context is specified by the last argument of the docker build command. In our case, this is the dot at the end of the command. As a result, the following structure is included in the build context:

```
sa-frontend:
|   .dockerignore
|   Dockerfile
|   package.json
|   README.md
+---build
+---node_modules
+---public
\---src
```

But of all the folders here, we only need the build folder. Uploading anything else is a waste of time. You can speed up the build by telling Docker which directories it can ignore. This is what the .dockerignore file is for. If you are familiar with the .gitignore file, its structure will surely look familiar. It lists the directories that the image build system can ignore. In our case, the contents of this file look like this:

```
node_modules
src
public
```

The .dockerignore file must be in the same folder as the Dockerfile. Now the image will be built in seconds.
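To make the effect concrete, here is a simplified model of what the .dockerignore above does to the sa-frontend context: top-level entries that match a pattern are left out of what is sent to the daemon. It uses fnmatch, which only approximates Docker's own matching rules (Docker also supports ** and ! exceptions), and the helper name is hypothetical.

```python
from fnmatch import fnmatch


def build_context(entries: list, ignore_patterns: list) -> list:
    """Keep the top-level entries that match none of the .dockerignore patterns."""
    return [e for e in entries
            if not any(fnmatch(e, pattern) for pattern in ignore_patterns)]


sa_frontend = [".dockerignore", "Dockerfile", "package.json", "README.md",
               "build", "node_modules", "public", "src"]
dockerignore = ["node_modules", "src", "public"]
print(build_context(sa_frontend, dockerignore))
```

The large node_modules folder is the main thing dropped from the upload, which is where most of the speed-up comes from.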

▍Building the container image for the Java application

You know what? You have already learned everything you need to create container images. That is why this section will be very short.

Open the Dockerfile in the sa-webapp project folder. If you read this file, you will find only two new constructs, starting with the ENV and EXPOSE keywords:

```dockerfile
ENV SA_LOGIC_API_URL http://localhost:5000
…
EXPOSE 8080
```

The ENV keyword lets you declare environment variables inside Docker containers. In our case, it lets us set the URL for accessing the API of the application that performs text analysis.

The EXPOSE keyword tells Docker that the container listens on the specified port. We are going to use this port while working with the application. You may notice that the Dockerfile for the SA-Frontend application has no such instruction. EXPOSE does not actually publish the port; it serves documentation purposes only, in other words, it is intended for whoever reads the Dockerfile.

Building the image and pushing it to the repository works exactly as in the previous example. If you are not yet very confident, the corresponding commands can be found in the README.md file in the sa-webapp folder.

▍Building the container image for the Python application

If you look at the contents of the Dockerfile in the sa-logic folder, you will find nothing new there. The commands for building the image and pushing it to the repository should also be familiar to you by now, but, as with our other applications, they can be found in the README.md file in the sa-logic folder.

▍Testing the containerized applications

Can you trust something you haven't tested? Neither can I. Let's test our containers.

- Start the sa-logic application container and map port 5050 on the host to the container's port 5000:

```bash
docker run -d -p 5050:5000 $DOCKER_USER_ID/sentiment-analysis-logic
```

- Start the sa-webapp application container and make it listen on port 8080. In addition, we need to tell the Java application where the Python application is waiting for its requests, by overriding the SA_LOGIC_API_URL environment variable:

```bash
docker run -d -p 8080:8080 -e SA_LOGIC_API_URL='http://<container_ip or docker machine ip>:5000' $DOCKER_USER_ID/sentiment-analysis-web-app
```

To learn how to find the IP address of a container or of the Docker virtual machine, see the README file.
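You may wonder how an environment variable named SA_LOGIC_API_URL ends up in the sa.logic.api.url property read by SentimentController. Spring Boot's relaxed binding maps a canonical property name to an environment variable name by replacing dots with underscores, removing dashes and converting the result to uppercase. The helpers below are a hypothetical sketch of just that naming rule; they ignore other cases Spring Boot handles, such as list indices.

```python
import os


def env_var_name(property_name: str) -> str:
    """Canonical Spring Boot property name -> environment variable name."""
    return property_name.replace(".", "_").replace("-", "").upper()


def lookup(property_name: str, environ: dict):
    """Find a property's value in an environment mapping such as os.environ."""
    return environ.get(env_var_name(property_name))


# Prints the value of SA_LOGIC_API_URL in the current environment, or None.
print(lookup("sa.logic.api.url", dict(os.environ)))
```

This is why the ENV line in the sa-webapp Dockerfile and the -e flag in the docker run command both set SA_LOGIC_API_URL rather than sa.logic.api.url.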

Start the sa-frontend application container:

```bash
docker run -d -p 80:80 $DOCKER_USER_ID/sentiment-analysis-frontend
```

Now everything is ready: open localhost:80 in your browser and try the application.

Note that if you changed the port for sa-webapp, or if you are working with a Docker virtual machine, you will need to edit the App.js file in the sa-frontend folder, replacing the outdated IP address or port number in the analyzeSentence() method with the current values. After that, rebuild the image and use the new one.

Here is what our application diagram looks like now.

Microservices running in containers

Summary: why do we need a Kubernetes cluster?

We have just studied Dockerfiles and talked about how to build images and push them to a Docker repository. In addition, we learned how to speed up image builds with the .dockerignore file. As a result, our microservices now run in Docker containers. At this point you may reasonably ask why we need Kubernetes at all. The second part of this material is devoted to answering that question. In the meantime, think about the following:

Suppose our text analysis web application has become popular worldwide. Millions of requests arrive every minute. This means that the sa-webapp and sa-logic microservices will be under enormous load. How do we scale the containers in which the microservices run?

Source: https://habr.com/ru/post/438982/