Buildbot: a tour, with examples, of yet another continuous integration system

(picture from the official site)

Buildbot, as the name suggests, is a continuous integration (CI) tool. Several articles about it have already appeared on Habr but, in my view, they do not make the tool's advantages very clear. They also contain almost no examples, which makes it hard to see the full power of the program. In this article I will try to fill these gaps, describe how Buildbot works internally, and give examples of several non-standard scenarios.

General remarks

There are a huge number of continuous integration systems today, and whenever one of them comes up, the logical question is: "Why is it needed if <program_name> already exists and everyone uses it?" I will try to answer that question for Buildbot. Some of the information repeats existing articles and some is covered in the official documentation, but it is needed to keep the narrative coherent.

The main difference from other continuous integration systems is that Buildbot is a Python framework for writing CI, not an out-of-the-box solution. To connect a project to Buildbot, you first write a separate Python program, using the Buildbot framework, that implements the integration functionality your project needs. This approach gives tremendous flexibility: you can implement tricky test scenarios that out-of-the-box solutions cannot support because of their architectural constraints.

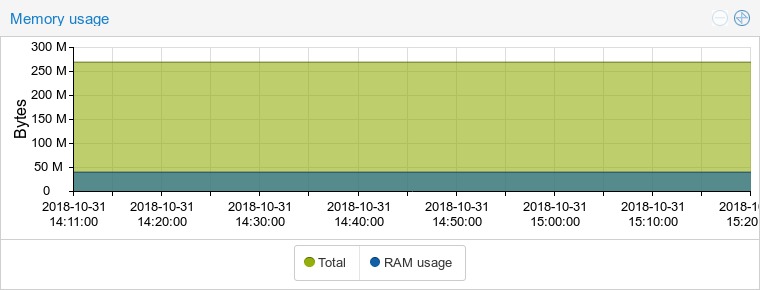

Next, Buildbot is not a hosted service, so you have to deploy it on your own infrastructure. I will note here that the framework is very frugal with system resources. It is certainly not C or C++, but Python comes out ahead of its Java competitors. For example, compare its memory consumption with that of GoCD (and yes, despite the name, that is a Java system):

Buildbot:

GoCD:

Having to deploy the system yourself and write a separate program for testing may make the initial setup sound daunting. However, writing the scripts is greatly simplified by the large number of built-in classes. These classes cover a wide range of standard operations, from fetching changes from a Git repository to building a project with CMake. As a result, standard scripts for small projects are about as simple as the YAML files for something like travis-ci. I will not cover deployment: it is described in detail in existing articles, and there is nothing complicated about it.

The next feature of Buildbot is that, by default, the testing logic lives on the CI server side. This runs against the now popular "pipeline as code" approach, in which the testing logic is described in a file (such as .travis.yml) that sits in the repository alongside the project source code, and the CI server merely reads this file and executes what it says. Again, this is only the default behavior. The Buildbot framework lets you implement the approach of storing the test script in the repository. There is even a ready-made solution, bb-travis, which tries to take the best from Buildbot and travis-ci. In addition, later in this article I will describe how to implement something similar yourself.

By default, Buildbot builds every commit in a push. This may look like a minor, unnecessary feature, but for me it has become one of the main advantages. Many popular out-of-the-box solutions (travis-ci, gitlab-ci) do not offer this at all and work only with the latest commit on the branch. Imagine that you often cherry-pick commits during development. It would be unpleasant to pick a broken commit that the build system never checked because it was pushed together with a batch of later commits. Of course, Buildbot can also be told to build only the latest commit, and that takes a single parameter.

The framework has fairly good documentation, which describes everything in detail, from the general architecture to guidelines for extending the built-in classes. Even so, you may have to look some things up in the source code. It is fully open under the GPL v2 license and is easy to read. On the downside, the documentation is available only in English, and there is very little information online in Russian. The tool is not new: Python itself, Wireshark, LLVM and many other well-known projects are built with it. Updates keep coming and the project is maintained by many developers, so it is fair to call it reliable and stable.

(main page of Python's Buildbot)

Theoretical minimum

This part is essentially a loose translation of the chapter of the official documentation on the framework's architecture. It shows the complete chain of actions, from the CI system receiving changes to users being notified of the result. So, you have made changes to the project source code and pushed them to a remote repository. What happens next is shown schematically in the picture:

(picture from the official documentation)

First of all, Buildbot has to find out about changes in the repository somehow. There are two main ways to do this, webhooks and polling, although nothing stops you from coming up with something more sophisticated. In the first case, subclasses of BaseHookHandler are responsible. There are many ready-made ones, such as GitHubHandler or GitoriousHandler. The key method in these classes is getChanges(). Its logic is extremely simple: it must convert an HTTP request into a list of change objects.
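To make the contract of getChanges() more concrete, here is a minimal, framework-free sketch of the kind of conversion it performs. This is not Buildbot's GitHubHandler: the function name `payload_to_changes` and the keys of the resulting dictionaries are assumptions chosen for illustration, and the payload is a trimmed-down imitation of a GitHub push event.

```python
import json


def payload_to_changes(body):
    """Turn the JSON body of a push webhook into a list of change dicts.

    Hypothetical helper: the output keys only imitate the kind of data
    a CI system keeps about a change.
    """
    payload = json.loads(body)

    # "refs/heads/master" -> "master"
    prefix = "refs/heads/"
    ref = payload["ref"]
    branch = ref[len(prefix):] if ref.startswith(prefix) else ref

    repository = payload["repository"]["html_url"]

    changes = []
    for commit in payload["commits"]:
        changes.append({
            "revision": commit["id"],
            "author": commit["author"]["name"],
            "comments": commit["message"],
            "branch": branch,
            "repository": repository,
        })
    return changes


# A made-up request body with two commits pushed to master.
example_body = json.dumps({
    "ref": "refs/heads/master",
    "repository": {"html_url": "https://git.example.com/pet-project"},
    "commits": [
        {"id": "a1", "message": "fix build", "author": {"name": "alice"}},
        {"id": "b2", "message": "add test", "author": {"name": "bob"}},
    ],
})
example_changes = payload_to_changes(example_body)
```

One push with two commits yields two change objects, which is exactly what later allows every commit to be built separately.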

For the second case, we need subclasses of PollingChangeSource. Again, there are ready-made ones, such as GitPoller or HgPoller. The key method is poll(). It is called at a fixed interval and must somehow produce a list of changes in the repository. For Git, this can be a call to git fetch followed by a comparison with the previously saved state. If the built-in classes are not enough, you can create your own subclass and override the method. An example of polling:

    c['change_source'] = [changes.GitPoller(
        repourl = 'git@git.example.com:project',
        project = 'My Project',
        branches = True,  # fetch changes from all branches
        pollInterval = 60
    )]

Using a webhook is even simpler; the main thing is not to forget to configure it on the Git server side. In the configuration file, it is just one line:

    c['www']['change_hook_dialects'] = { 'github': {} }

Next, the change objects are fed to the schedulers. Examples of built-in schedulers are AnyBranchScheduler, Nightly, ForceScheduler, and so on. Each scheduler receives all change objects as input but selects only those that pass its filter. The filter is passed to the scheduler's constructor through the change_filter argument. As output, schedulers create build requests. The scheduler chooses the builders according to the builderNames argument.
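The fan-out just described can be modeled in a few lines of plain Python. This is only a toy model under stated assumptions: `SketchScheduler` and `dispatch` are hypothetical names, the filter is an ordinary callable rather than a ChangeFilter object, and a build request is reduced to a (builder name, revision) pair.

```python
class SketchScheduler:
    """Toy model of a scheduler; not Buildbot's own class."""

    def __init__(self, name, change_filter, builder_names):
        self.name = name
        self.change_filter = change_filter  # callable: change -> bool
        self.builder_names = builder_names

    def handle(self, change):
        """Return the build requests this scheduler creates for one change."""
        if not self.change_filter(change):
            return []
        return [(builder, change["revision"]) for builder in self.builder_names]


def dispatch(schedulers, changes):
    """Show every change to every scheduler and collect the build requests."""
    requests = []
    for change in changes:
        for scheduler in schedulers:
            requests.extend(scheduler.handle(change))
    return requests


pet = SketchScheduler(
    "pet", lambda ch: ch["project"] == "Pet Project", ["Pet Project Builder"])
docs = SketchScheduler(
    "docs", lambda ch: ch["project"] == "Docs", ["Docs Builder"])

incoming = [
    {"project": "Pet Project", "revision": "a1"},
    {"project": "Docs", "revision": "d7"},
    {"project": "Pet Project", "revision": "b2"},
]
build_requests = dispatch([pet, docs], incoming)
```

Both schedulers see all three changes, but each one turns only its own project's changes into build requests.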

Some schedulers have a tricky argument called treeStableTimer. It works as follows: when a change arrives, the scheduler does not create a build request immediately but starts a timer. If new changes arrive before the timer has expired, the old change is replaced with the new one and the timer is restarted. When the timer expires, the scheduler creates just one build request from the last saved change.
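The timer logic is easy to check with a small simulation. The sketch below follows the description in this article rather than Buildbot's source: `coalesce` is a hypothetical function, time is given in plain seconds, and a build request is reduced to a (time, revision) pair.

```python
def coalesce(changes, tree_stable_timer):
    """Simulate a scheduler with a tree-stable timer.

    changes: list of (arrival_time_seconds, revision), sorted by time.
    Returns a list of (time_of_build_request, revision).
    With tree_stable_timer=None every change is built immediately.
    """
    if tree_stable_timer is None:
        return [(arrival, revision) for arrival, revision in changes]

    requests = []
    pending = None  # (deadline, revision) of the change waiting for the timer
    for arrival, revision in changes:
        if pending is not None and arrival >= pending[0]:
            # The timer ran out before this change arrived: build what we had.
            requests.append(pending)
        # The newest change replaces the old one and restarts the timer.
        pending = (arrival + tree_stable_timer, revision)
    if pending is not None:
        requests.append(pending)
    return requests


# Three commits arrive within 20 seconds, a fourth one much later.
pushes = [(0, "a"), (10, "b"), (20, "c"), (200, "d")]
with_timer = coalesce(pushes, 60)
without_timer = coalesce(pushes, None)
```

With a 60-second timer the first three commits collapse into a single request for "c", while "d" gets a request of its own; without a timer all four commits are built.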

This is how the logic of building only the last commit in a push is implemented. Scheduler setup example:

    c['schedulers'] = [schedulers.AnyBranchScheduler(
        name = 'My Scheduler',
        treeStableTimer = None,  # no timer: every change is built immediately
        change_filter = util.ChangeFilter(project = 'My Project'),
        builderNames = ['My Builder']
    )]

Build requests, strange as it may sound, go to the input of the builders. The builder's job is to run the build on an available worker. A worker is a build environment, for example stretch64 or ubuntu1804x64. The list of workers is passed through the workernames argument. All workers in the list must be equivalent (the names differ, of course, but the environment inside is the same), because the builder is free to choose any available one. Listing several values here is for load balancing, not for building in different environments. Through the factory argument, the builder receives the sequence of steps for building the project. I will describe this in detail below.
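Why the workers of one builder must be interchangeable is easiest to see in a toy model. The class below is a sketch, not Buildbot code: `SketchBuilder` and its methods are hypothetical, and it simply takes the first idle worker, whereas the choice made by a real builder is an implementation detail.

```python
class SketchBuilder:
    """Toy model of a builder that balances load across equivalent workers."""

    def __init__(self, name, workernames):
        self.name = name
        self.workernames = list(workernames)
        self.busy = set()   # workers currently running a build
        self.queue = []     # revisions waiting for a free worker

    def submit(self, revision):
        """Start the build on any idle worker; queue it if all are busy.

        Returns the chosen worker name, or None if the request was queued.
        """
        for worker in self.workernames:
            if worker not in self.busy:
                self.busy.add(worker)
                return worker
        self.queue.append(revision)
        return None

    def finish(self, worker):
        """Free a worker and hand it the next queued revision, if any."""
        self.busy.discard(worker)
        if self.queue:
            revision = self.queue.pop(0)
            self.busy.add(worker)
            return (worker, revision)
        return None


builder = SketchBuilder("My Builder", ["stretch64-a", "stretch64-b"])
first = builder.submit("a1")
second = builder.submit("b2")
third = builder.submit("c3")              # both workers are busy
resumed = builder.finish("stretch64-a")   # the freed worker picks up c3
```

The caller never chooses the worker, so a build may land on either machine. That is harmless only if both provide the same environment.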

An example of setting up a builder:

    c['builders'] = [util.BuilderConfig(
        name = 'My Builder',
        workernames = ['stretch32'],
        factory = factory
    )]

So, the project has been built. The last step in Buildbot is notification about the build. Reporters are responsible for this. The classic example is the MailNotifier class, which sends an email with the build results. An example of connecting MailNotifier:

    c['services'] = [reporters.MailNotifier(
        fromaddr = 'buildbot@example.com',
        relayhost = 'mail.example.com',
        smtpPort = 25,
        extraRecipients = ['devel@example.com'],
        sendToInterestedUsers = False
    )]

Well, it is time to move on to full-fledged examples. I will note that Buildbot itself is written with the Twisted framework, so familiarity with it will make writing and understanding Buildbot scripts much easier. Our guinea pig will be a project called Pet Project. Let it be written in C++, built with CMake, with its source code in a Git repository. We were not even too lazy to write tests for it, which are run with the ctest command. Recently we read this article and realized that we want to apply the fresh knowledge to our project.

Example one: making it work

Here is the configuration file:

100 lines of Python code

    from buildbot.plugins import *

    # shortcut
    c = BuildmasterConfig = {}

    # create workers
    c['workers'] = [worker.Worker('stretch32', 'example_password')]

    # general settings
    c['title'] = 'Buildbot: test'
    c['titleURL'] = 'https://buildbot.example.com/'
    c['buildbotURL'] = 'https://buildbot.example.com/'

    # setup database
    c['db'] = { 'db_url': 'sqlite:///state.sqlite' }

    # port to communicate with workers
    c['protocols'] = { 'pb': { 'port': 9989 } }

    # make buildbot developers a little bit happier
    c['buildbotNetUsageData'] = 'basic'

    # webserver setup
    c['www'] = dict(plugins = dict(waterfall_view={}, console_view={}, grid_view={}))
    c['www']['authz'] = util.Authz(
        allowRules = [util.AnyEndpointMatcher(role = 'admins')],
        roleMatchers = [util.RolesFromUsername(roles = ['admins'], usernames = ['root'])]
    )
    c['www']['auth'] = util.UserPasswordAuth([('root', 'root_password')])

    # mail notification
    c['services'] = [reporters.MailNotifier(
        fromaddr = 'buildbot@example.com',
        relayhost = 'mail.example.com',
        smtpPort = 25,
        extraRecipients = ['devel@example.com'],
        sendToInterestedUsers = False
    )]

    c['change_source'] = [changes.GitPoller(
        repourl = 'git@git.example.com:pet-project',
        project = 'Pet Project',
        branches = True,
        pollInterval = 60
    )]

    c['schedulers'] = [schedulers.AnyBranchScheduler(
        name = 'Pet Project Scheduler',
        treeStableTimer = None,
        change_filter = util.ChangeFilter(project = 'Pet Project'),
        builderNames = ['Pet Project Builder']
    )]

    factory = util.BuildFactory()

    factory.addStep(steps.Git(
        repourl = util.Property('repository'),
        workdir = 'sources',
        haltOnFailure = True,
        submodules = True,
        progress = True)
    )

    factory.addStep(steps.ShellSequence(
        name = 'create builddir',
        haltOnFailure = True,
        hideStepIf = lambda results, s: results == util.SUCCESS,
        commands = [
            util.ShellArg(command = ['rm', '-rf', 'build']),
            util.ShellArg(command = ['mkdir', 'build'])
        ])
    )

    factory.addStep(steps.CMake(
        workdir = 'build',
        path = '../sources',
        haltOnFailure = True)
    )

    factory.addStep(steps.Compile(
        name = 'build project',
        workdir = 'build',
        haltOnFailure = True,
        warnOnWarnings = True,
        command = ['make'])
    )

    factory.addStep(steps.ShellCommand(
        name = 'run tests',
        workdir = 'build',
        haltOnFailure = True,
        command = ['ctest'])
    )

    c['builders'] = [util.BuilderConfig(
        name = 'Pet Project Builder',
        workernames = ['stretch32'],
        factory = factory
    )]

With these lines we get an automatic build on every push to the repository, a nice web interface, email notifications and the other attributes of any self-respecting CI. Most of it should be clear: the schedulers, builders and other objects are configured just as in the earlier examples, and the meaning of most parameters is intuitive. I will go into detail only on the creation of the factory, as I promised earlier.

A factory consists of the build steps that Buildbot must perform for the project. As with the other classes, there are many ready-made ones. Our factory consists of five steps. As a rule, the first step is to fetch the current state of the repository, and we will make no exception here. For this we use the standard Git class:

First step

    factory = util.BuildFactory()
    factory.addStep(steps.Git(
        repourl = util.Property('repository'),
        workdir = 'sources',
        haltOnFailure = True,
        submodules = True,
        progress = True)
    )

Next, we need to create the directory in which the project will be built: we will do a full out-of-source build. Before that, we must not forget to delete the directory if it already exists. So we need to execute two commands. The ShellSequence class will help us with this:

Second step

    factory.addStep(steps.ShellSequence(
        name = 'create builddir',
        haltOnFailure = True,
        hideStepIf = lambda results, s: results == util.SUCCESS,
        commands = [
            util.ShellArg(command = ['rm', '-rf', 'build']),
            util.ShellArg(command = ['mkdir', 'build'])
        ])
    )

Now we need to run CMake. It makes sense to use one of two classes for this, ShellCommand or CMake. We will use the latter, but the difference is minimal: it is a simple wrapper around the former that makes passing CMake-specific arguments a little more convenient.

Third step

    factory.addStep(steps.CMake(
        workdir = 'build',
        path = '../sources',
        haltOnFailure = True)
    )

Time to compile the project. As in the previous case, you could use ShellCommand. Likewise, there is a Compile class that wraps ShellCommand. This wrapper is smarter, though: the Compile class tracks warnings during compilation and neatly shows them in a separate log. That is why we will use the Compile class:

Fourth step

    factory.addStep(steps.Compile(
        name = 'build project',
        workdir = 'build',
        haltOnFailure = True,
        warnOnWarnings = True,
        command = ['make'])
    )

Finally, we run our tests. Here we will use the ShellCommand class mentioned earlier:

Fifth step

    factory.addStep(steps.ShellCommand(
        name = 'run tests',
        workdir = 'build',
        haltOnFailure = True,
        command = ['ctest'])
    )

Example two: pipeline as code

Here I will show how to implement a budget version of storing the testing logic alongside the project source code rather than in the CI server's configuration file. To do this, we put a .buildbot file in the repository with the code. Each of its lines consists of words: the first is interpreted as the directory in which to run the command, and the rest as the command with its arguments. For our Pet Project, the .buildbot file will look like this:

The .buildbot file with commands

    . rm -rf build
    . mkdir build
    build cmake ../sources
    build make
    build ctest

Now we need to modify the Buildbot configuration file. To parse the .buildbot file, we will have to write our own step class. This step reads the .buildbot file and then, for each line, adds a ShellCommand step with the required arguments. To add steps dynamically, we will use the build.addStepsAfterCurrentStep() method. It does not look scary at all:

Class AnalyseStep

    # steps and util come from buildbot.plugins, as in the first example
    from twisted.internet import defer
    from buildbot.process.buildstep import BuildStep, ShellMixin
    from buildbot.process.remotecommand import RemoteShellCommand

    class AnalyseStep(ShellMixin, BuildStep):
        def __init__(self, workdir, **kwargs):
            kwargs = self.setupShellMixin(kwargs, prohibitArgs = ['command', 'workdir', 'want_stdout'])
            BuildStep.__init__(self, **kwargs)
            self.workdir = workdir

        @defer.inlineCallbacks
        def run(self):
            self.stdio_log = yield self.addLog('stdio')

            # read the .buildbot file on the worker
            cmd = RemoteShellCommand(
                command = ['cat', '.buildbot'],
                workdir = self.workdir,
                want_stdout = True,
                want_stderr = True,
                collectStdout = True
            )
            cmd.useLog(self.stdio_log)
            yield self.runCommand(cmd)
            if cmd.didFail():
                defer.returnValue(util.FAILURE)

            # one ShellCommand step per line: <directory> <command> [arguments...]
            results = []
            for row in cmd.stdout.splitlines():
                lst = row.split()
                if not lst:
                    continue  # skip blank lines
                dirname = lst.pop(0)
                results.append(steps.ShellCommand(
                    name = lst[0],
                    command = lst,
                    workdir = dirname
                    )
                )
            self.build.addStepsAfterCurrentStep(results)
            defer.returnValue(util.SUCCESS)

Thanks to this approach, the builder's factory has become simpler and more universal:

Factory that parses the .buildbot file

    factory = util.BuildFactory()
    factory.addStep(steps.Git(
        repourl = util.Property('repository'),
        workdir = 'sources',
        haltOnFailure = True,
        submodules = True,
        progress = True,
        mode = 'incremental')
    )
    factory.addStep(AnalyseStep(
        name = 'Analyse .buildbot file',
        workdir = 'sources',
        haltOnFailure = True,
        hideStepIf = lambda results, s: results == util.SUCCESS)
    )

Example three: worker as code

Now let us imagine that what we need to define next to the project code is not the sequence of commands but the build environment. In essence, we define the worker. The .buildbot file might look something like this:

The .buildbot file with the environment

    {
        "workers": ["stretch32", "wheezy32"]
    }

The Buildbot configuration file becomes more complicated in this case, because we want the builds in the different environments to be linked (if an error occurs in at least one environment, the whole commit is considered broken). Two levels will help us solve this. We will have a local worker that parses the .buildbot file and starts builds on the required workers. First, as in the previous example, we write our own step for parsing the .buildbot file. To start a build on a specific worker, we use the Trigger step together with a special scheduler type, Triggerable. Our step has become a little more complex, but it is still quite understandable:

Class AnalyseStep

    # steps and util come from buildbot.plugins, as in the first example
    import json
    from twisted.internet import defer
    from buildbot.process.buildstep import BuildStep, ShellMixin
    from buildbot.process.remotecommand import RemoteShellCommand

    class AnalyseStep(ShellMixin, BuildStep):
        def __init__(self, workdir, **kwargs):
            kwargs = self.setupShellMixin(kwargs, prohibitArgs = ['command', 'workdir', 'want_stdout'])
            BuildStep.__init__(self, **kwargs)
            self.workdir = workdir

        @defer.inlineCallbacks
        def _getWorkerList(self):
            # read the .buildbot file on the worker
            cmd = RemoteShellCommand(
                command = ['cat', '.buildbot'],
                workdir = self.workdir,
                want_stdout = True,
                want_stderr = True,
                collectStdout = True
            )
            cmd.useLog(self.stdio_log)
            yield self.runCommand(cmd)
            if cmd.didFail():
                defer.returnValue([])

            # parse JSON
            try:
                payload = json.loads(cmd.stdout)
                workers = payload.get('workers', [])
            except json.decoder.JSONDecodeError as e:
                raise ValueError('Error loading JSON from .buildbot file: {}'
                    .format(str(e)))
            defer.returnValue(workers)

        @defer.inlineCallbacks
        def run(self):
            self.stdio_log = yield self.addLog('stdio')
            try:
                workers = yield self._getWorkerList()
            except ValueError as e:
                yield self.stdio_log.addStdout(str(e))
                defer.returnValue(util.FAILURE)

            # one Trigger step per requested worker
            results = []
            for worker in workers:
                results.append(steps.Trigger(
                    name = 'check on worker "{}"'.format(worker),
                    schedulerNames = ['Pet Project ({}) Scheduler'.format(worker)],
                    waitForFinish = True,
                    haltOnFailure = True,
                    warnOnWarnings = True,
                    updateSourceStamp = False,
                    alwaysUseLatest = False
                    )
                )
            self.build.addStepsAfterCurrentStep(results)
            defer.returnValue(util.SUCCESS)

We will use this step on the local worker. Note that we have assigned a tag to our "Pet Project Builder" builder. With it we can filter MailNotifier, telling it that emails should be sent only for certain builders. Without this filtering, building a commit in two environments would produce three emails.

Generic builder

    factory = util.BuildFactory()
    factory.addStep(steps.Git(
        repourl = util.Property('repository'),
        workdir = 'sources',
        haltOnFailure = True,
        submodules = True,
        progress = True,
        mode = 'incremental')
    )
    factory.addStep(AnalyseStep(
        name = 'Analyse .buildbot file',
        workdir = 'sources',
        haltOnFailure = True,
        hideStepIf = lambda results, s: results == util.SUCCESS)
    )

    c['builders'] = [util.BuilderConfig(
        name = 'Pet Project Builder',
        tags = ['generic_builder'],
        workernames = ['local'],
        factory = factory
    )]

It remains to add the builders and those same Triggerable schedulers for all our real workers:

Builders in the required environments

    # allWorkers and specific_factory are not shown here; they are
    # expected to be defined elsewhere in the configuration
    for worker in allWorkers:
        c['schedulers'].append(schedulers.Triggerable(
            name = 'Pet Project ({}) Scheduler'.format(worker),
            builderNames = ['Pet Project ({}) Builder'.format(worker)])
        )
        c['builders'].append(util.BuilderConfig(
            name = 'Pet Project ({}) Builder'.format(worker),
            workernames = [worker],
            factory = specific_factory)
        )

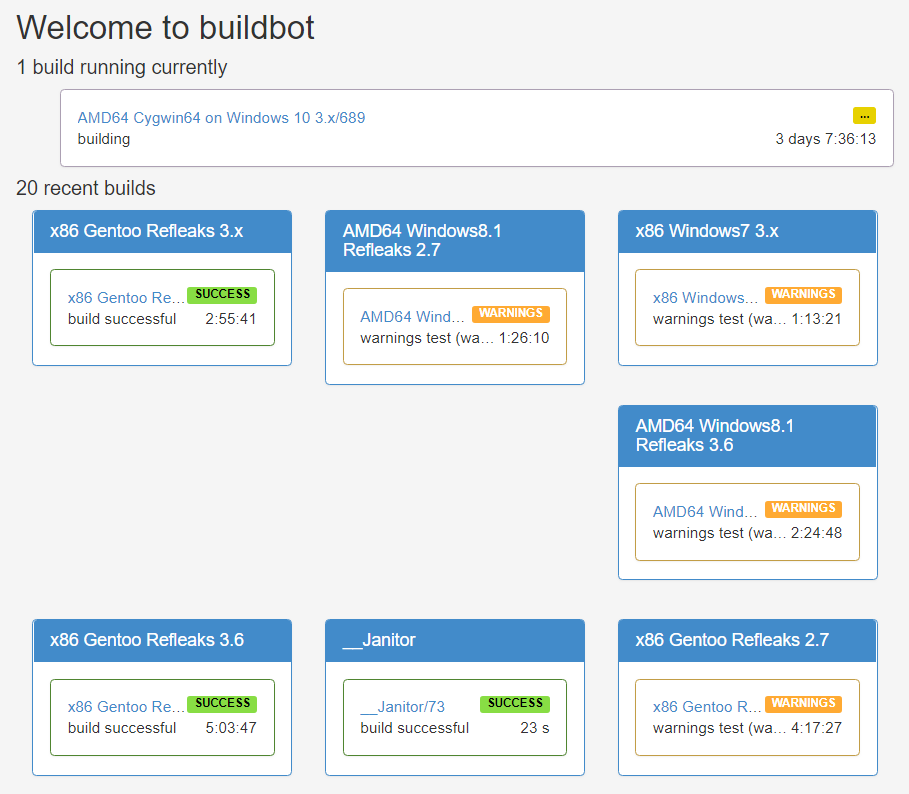

(build page of our project in two environments)
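The two properties this scheme relies on, one overall verdict per commit and one email per commit, can be modeled without Buildbot. The sketch below is an illustration under stated assumptions: `run_parent_build` and `mails_to_send` are hypothetical helpers, results are plain strings, and in a real configuration the tag filter would be an option of the mail reporter whose exact name should be checked against the documentation of your Buildbot version.

```python
SUCCESS, FAILURE = "success", "failure"


def run_parent_build(workers, run_on_worker):
    """Run one child build per worker and stop at the first failure.

    Mirrors a chain of triggered builds that wait for completion and
    halt on failure. Returns (overall_result, per_worker_results).
    """
    results = {}
    for worker in workers:
        results[worker] = run_on_worker(worker)
        if results[worker] == FAILURE:
            return FAILURE, results
    return SUCCESS, results


def mails_to_send(finished_builds, wanted_tags):
    """Pick the builds that produce an email.

    finished_builds: list of (builder_name, builder_tags, result).
    Only builders carrying at least one wanted tag are reported.
    """
    wanted = set(wanted_tags)
    return [name for name, tags, _result in finished_builds
            if wanted & set(tags)]


# Pretend the build passes on stretch32 and fails on wheezy32.
fake_results = {"stretch32": SUCCESS, "wheezy32": FAILURE}
overall, per_worker = run_parent_build(
    ["stretch32", "wheezy32"], lambda worker: fake_results[worker])

finished = [
    ("Pet Project Builder", ["generic_builder"], overall),
    ("Pet Project (stretch32) Builder", [], per_worker["stretch32"]),
    ("Pet Project (wheezy32) Builder", [], per_worker["wheezy32"]),
]
mailed = mails_to_send(finished, ["generic_builder"])
```

Three builds finish, but only the tagged generic builder is reported, and its result already reflects the failure in one of the environments.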

Example four: one email for multiple commits

If you use any of the examples above, you may notice one unpleasant feature. Because one email is generated per commit, pushing a branch with 20 new commits produces 20 emails. To avoid this, two levels will help us again, as in the previous example. We also need to modify the class that receives changes. Instead of creating a set of change objects, we will create just one, whose properties carry the list of all commits. A quick-and-dirty version looks like this:

Class MultiGitHubHandler

    from buildbot.www.hooks.github import GitHubHandler

    class MultiGitHubHandler(GitHubHandler):
        def getChanges(self, request):
            new_changes = GitHubHandler.getChanges(self, request)
            if not new_changes:
                return ([], 'git')

            # keep only the last change, but make it describe the whole range
            change = new_changes[-1]
            change['revision'] = '{}..{}'.format(
                new_changes[0]['revision'], new_changes[-1]['revision'])
            commits = [item['revision'] for item in new_changes]
            change['properties']['commits'] = commits
            return ([change], 'git')

    c['www']['change_hook_dialects'] = {
        'base': { 'custom_class': MultiGitHubHandler }
    }

To work with such an unusual change object, we need our own special step, which dynamically creates steps that build a specific commit:

Class GenerateCommitSteps

    from buildbot.process.buildstep import BuildStep

    class GenerateCommitSteps(BuildStep):
        def run(self):
            commits = self.getProperty('commits')
            # one Trigger step per commit listed in the change properties
            results = []
            for commit in commits:
                results.append(steps.Trigger(
                    name = 'Checking commit {}'.format(commit),
                    schedulerNames = ['Pet Project Commits Scheduler'],
                    waitForFinish = True,
                    haltOnFailure = True,
                    warnOnWarnings = True,
                    sourceStamp = {
                        'branch': util.Property('branch'),
                        'revision': commit,
                        'codebase': util.Property('codebase'),
                        'repository': util.Property('repository'),
                        'project': util.Property('project')
                    }
                    )
                )
            self.build.addStepsAfterCurrentStep(results)
            return util.SUCCESS

Now we add our generic builder, which only starts the builds of the individual commits. It should be marked with a tag so that email sending can be filtered by that tag.

Generic builder that sends mail

    c['schedulers'] = [schedulers.AnyBranchScheduler(
        name = 'Pet Project Branches Scheduler',
        treeStableTimer = None,
        change_filter = util.ChangeFilter(project = 'Pet Project'),
        builderNames = ['Pet Project Branches Builder']
    )]

    branches_factory = util.BuildFactory()
    branches_factory.addStep(GenerateCommitSteps(
        name = 'Generate commit steps',
        haltOnFailure = True,
        hideStepIf = lambda results, s: results == util.SUCCESS)
    )

    c['builders'] = [util.BuilderConfig(
        name = 'Pet Project Branches Builder',
        tags = ['branch_builder'],
        workernames = ['local'],
        factory = branches_factory
    )]

It remains only to add the builder for individual commits. We deliberately do not mark this builder with a tag, so no emails will be created for it.

Builder for individual commits

    c['schedulers'].append(schedulers.Triggerable(
        name = 'Pet Project Commits Scheduler',
        builderNames = ['Pet Project Commits Builder'])
    )
    c['builders'].append(util.BuilderConfig(
        name = 'Pet Project Commits Builder',
        workernames = ['stretch32'],
        factory = specific_factory)
    )

Final words

This article is in no way a substitute for reading the official documentation, so if Buildbot has caught your interest, reading it should be your next step. The full versions of the configuration files for all the examples are available on GitHub. Related links, from which most of the material for this article was taken:

Source: https://habr.com/ru/post/439096/