What would the Internet look like in EvE Online?

EvE Online is an exciting game. It is one of the few MMOs with only one “server” to log in to, which means that everyone plays in the same logical world. It has also had an exciting history of in-game events, and it remains a very visually appealing game.

The game also has an extensive world map across which all these players are spread. At its peak of popularity, EvE had 63,000 players online in a single world and 500,000 paid subscriptions. Although these numbers shrink every year, the world remains incredibly large, so getting from one side to the other takes a significant amount of time (and can be risky, depending on the player's faction).

This translation was made with the support of EDISON Software, which specializes in security and also develops electronic medical verification systems.

You travel between locations within a system using warp, and jump to other systems through jump gates.

Together, all these systems form a map of great beauty and complexity:

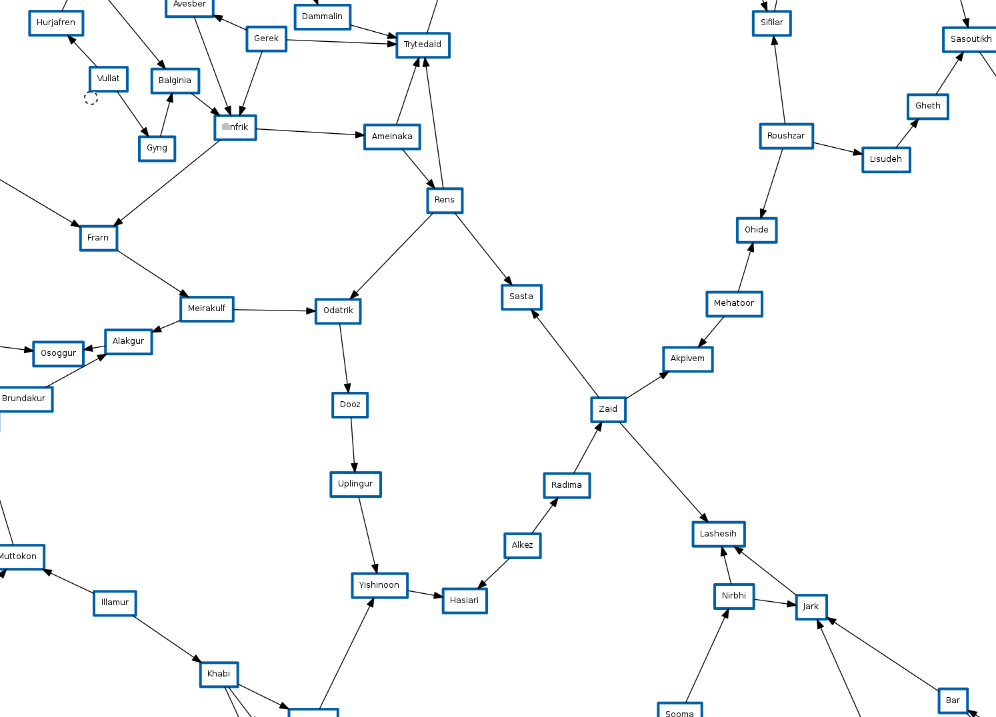

I have always seen this map as a network: a large web of interconnected systems that people travel through, most of which have more than two jump gates. That made me wonder what would happen if you took the idea of the map as a network literally. What would the EvE internet look like?

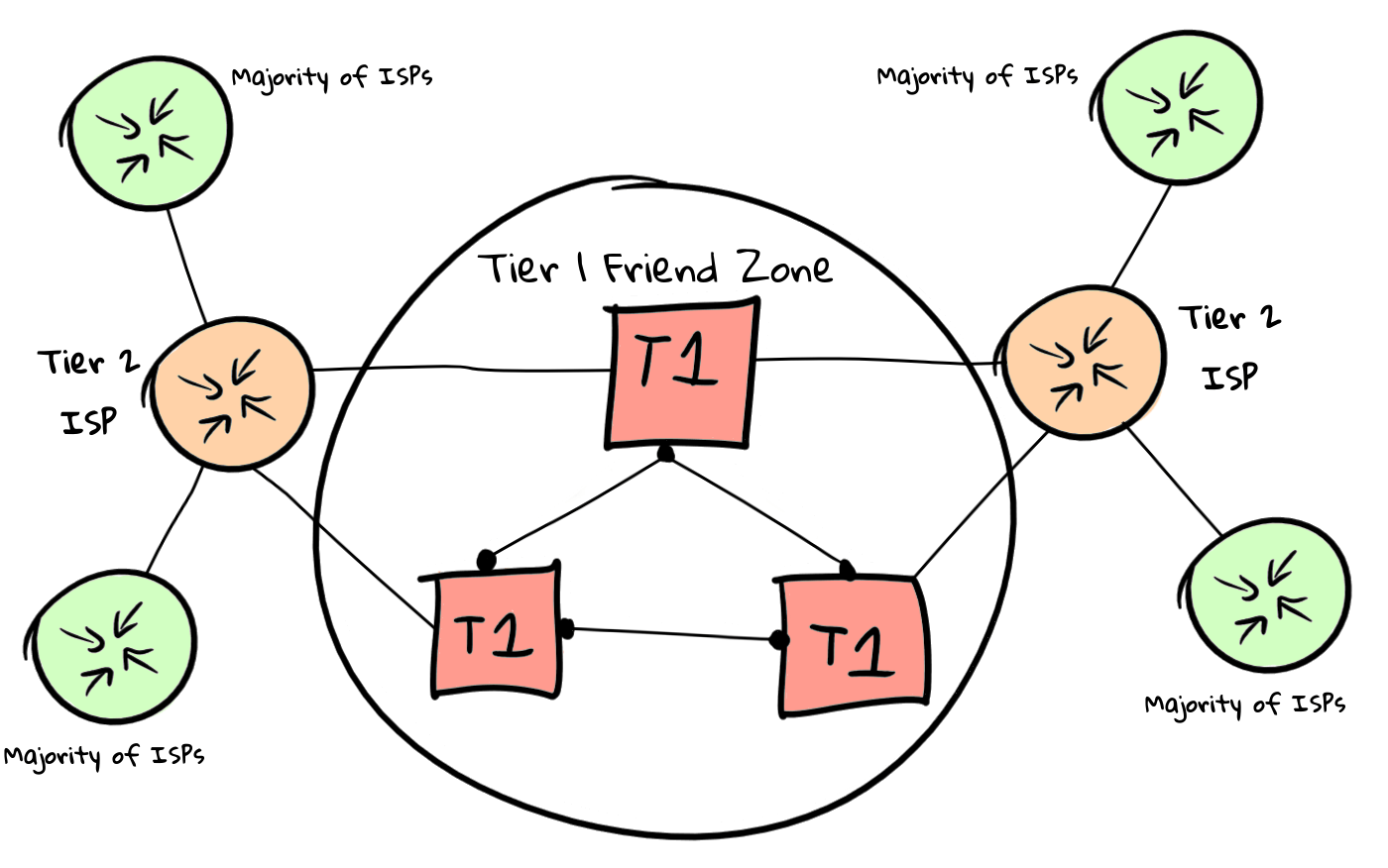

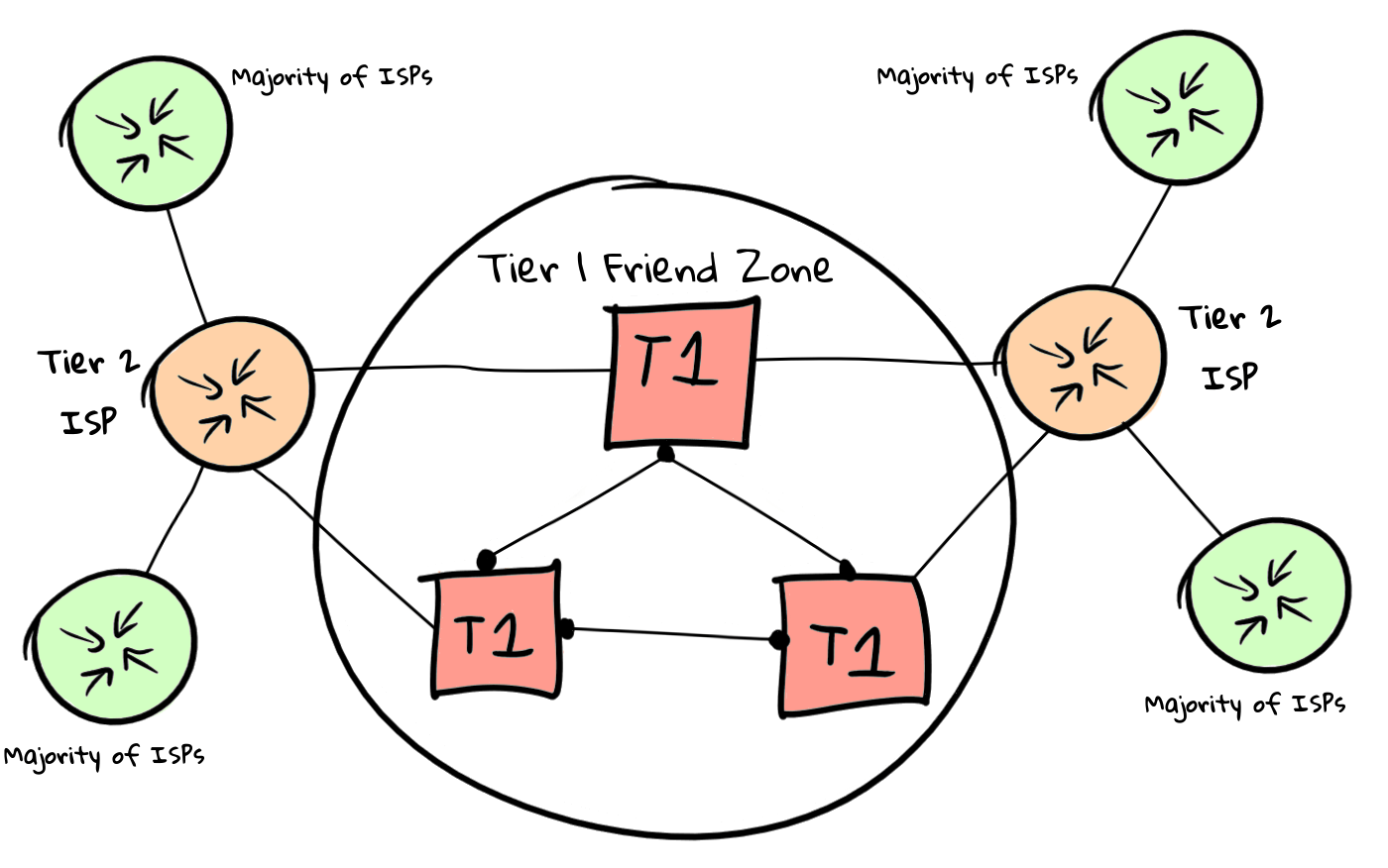

To answer that, we need to understand how the real Internet works. The Internet is a large collection of ISPs, each identified by a standardized, unique number called an autonomous system number, or ASN (AS for short). These ASes own IP address ranges and need a way to exchange routes with each other, so that each one can tell the others which IP addresses its routers can reach. For this, the world settled on the Border Gateway Protocol, or BGP.

BGP works by announcing routes to other ASes, which are known as peers.

By default, when BGP receives a route from a peer, it passes it on to all the other peers it is connected to. As a result, peers automatically share their routing tables with each other.
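To make that default behavior concrete, here is a minimal Python sketch of path-vector propagation on a toy topology with made-up ASNs. It is a model, not a BGP implementation: every AS originates its own prefix, re-announces its best route to all peers with its own ASN prepended, drops any path that already contains its ASN (BGP's loop prevention), and prefers the shortest AS path. Timers, policies and BGP's other tie-breakers are ignored; ties go to whichever route arrives first.

```python
from collections import deque


def propagate(links: dict[int, list[int]]) -> dict[int, dict[int, list[int]]]:
    """Best AS path from every AS to every origin, in a toy path-vector model.

    `links` maps each ASN to the ASNs it has sessions with (assumed symmetric).
    A path lists ASNs from the next hop to the origin, like BGP's AS_PATH.
    """
    # Each AS starts out knowing only its own prefix, with an empty AS path.
    best: dict[int, dict[int, list[int]]] = {asn: {asn: []} for asn in links}
    # Pending updates: (receiving AS, origin AS, AS path as announced).
    queue = deque((peer, asn, [asn]) for asn in links for peer in links[asn])
    while queue:
        receiver, origin, path = queue.popleft()
        if receiver in path:
            continue  # loop prevention: our own ASN is already in the path
        current = best[receiver].get(origin)
        if current is not None and len(current) <= len(path):
            continue  # keep the existing, shorter-or-equal path
        best[receiver][origin] = path
        # Default behavior: re-announce the new best route to every peer,
        # prepending our ASN (the peer it came from drops it as a loop).
        for peer in links[receiver]:
            queue.append((peer, origin, [receiver] + path))
    return best


routes = propagate({1: [2], 2: [1, 3], 3: [2]})
print(routes[1][3])  # [2, 3]
```

Even in this three-AS chain, AS 1 learns about AS 3's prefix without ever talking to AS 3 directly; that automatic sharing is what the EvE experiment scales up.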

However, this behavior is only really useful when BGP is used to distribute routes to internal routers, because on the modern Internet, networks have different kinds of (mostly commercial) relationships with each other. Rather than a flat mesh, the modern Internet is organized more like a hierarchy of providers and their customers.
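These customer, peer and provider relationships are commonly summarized by the "valley-free" (Gao-Rexford) export rule. It is not from the original post, but it shows why real ISPs do not simply announce everything to everyone: an AS announces its own and its customers' routes to all neighbors, but passes routes learned from peers or providers only to its customers. A minimal sketch, with the class and function names being our own:

```python
from enum import Enum
from typing import Optional


class Rel(Enum):
    """How a neighbor relates to the local AS."""
    CUSTOMER = "customer"  # pays the local AS for transit
    PEER = "peer"          # exchanges its customers' routes for free
    PROVIDER = "provider"  # sells transit to the local AS


def should_export(learned_from: Optional[Rel], export_to: Rel) -> bool:
    """Valley-free export rule; learned_from is None for our own prefixes."""
    if learned_from is None or learned_from is Rel.CUSTOMER:
        return True  # own and customer routes are announced to everyone
    # Routes from peers and providers are passed down to customers only.
    return export_to is Rel.CUSTOMER


print(should_export(Rel.PEER, Rel.PROVIDER))  # False: no free transit
```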

However, EvE Online is set in the future, and who knows whether the Internet will still rely on this profit-driven routing arrangement by then. Let's imagine that it doesn't, so that we can see how BGP scales in larger networks.

To do this, we need to simulate real BGP routers and the links between them. Given that EvE has a relatively small number of systems (~8,000) and a manageable ~13,800 links between them, I figured it would actually be feasible to run ~8,000 virtual machines with real BGP and real networking, and see what these systems look like when they act together as a network.
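For a sense of scale using the approximate figures above: every link is one BGP session with an end on each side, so the average system runs 2 × 13,800 / 8,000 ≈ 3.45 sessions. The helper below computes the same statistic from an edge list; the list-of-pairs format is an assumption, and systems without any jump are not counted.

```python
from collections import Counter


def degree_stats(jumps: list[tuple[int, int]]) -> tuple[float, Counter]:
    """Average gates per system and a histogram of gate counts."""
    degree: Counter = Counter()
    for a, b in jumps:
        degree[a] += 1  # each jump is one BGP session with an end on each side
        degree[b] += 1
    average = 2 * len(jumps) / len(degree)
    histogram = Counter(degree.values())  # gate count -> number of systems
    return average, histogram


# With the post's rough totals, the average is simply 2 * links / systems.
print(2 * 13_800 / 8_000)  # 3.45
```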

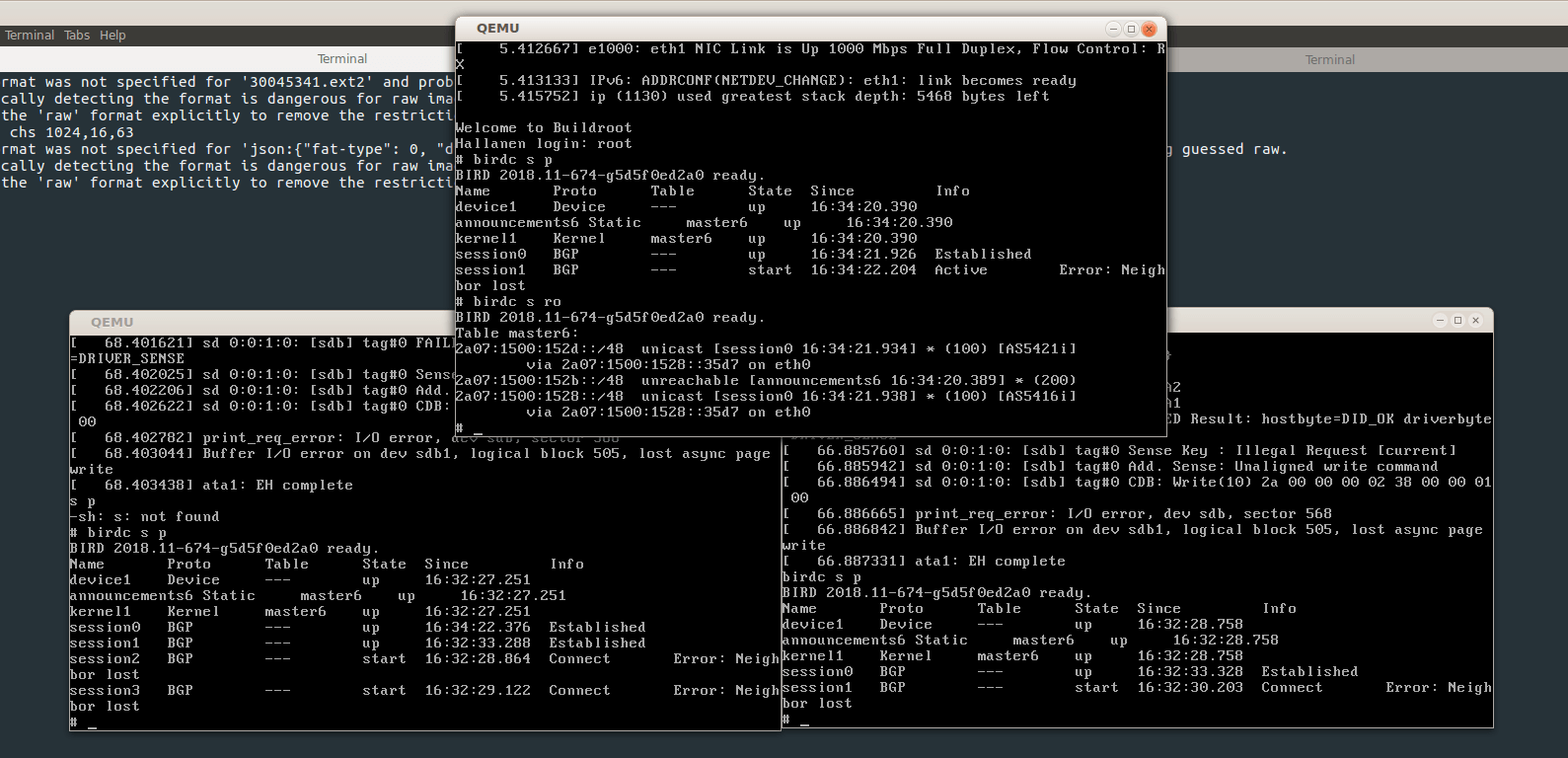

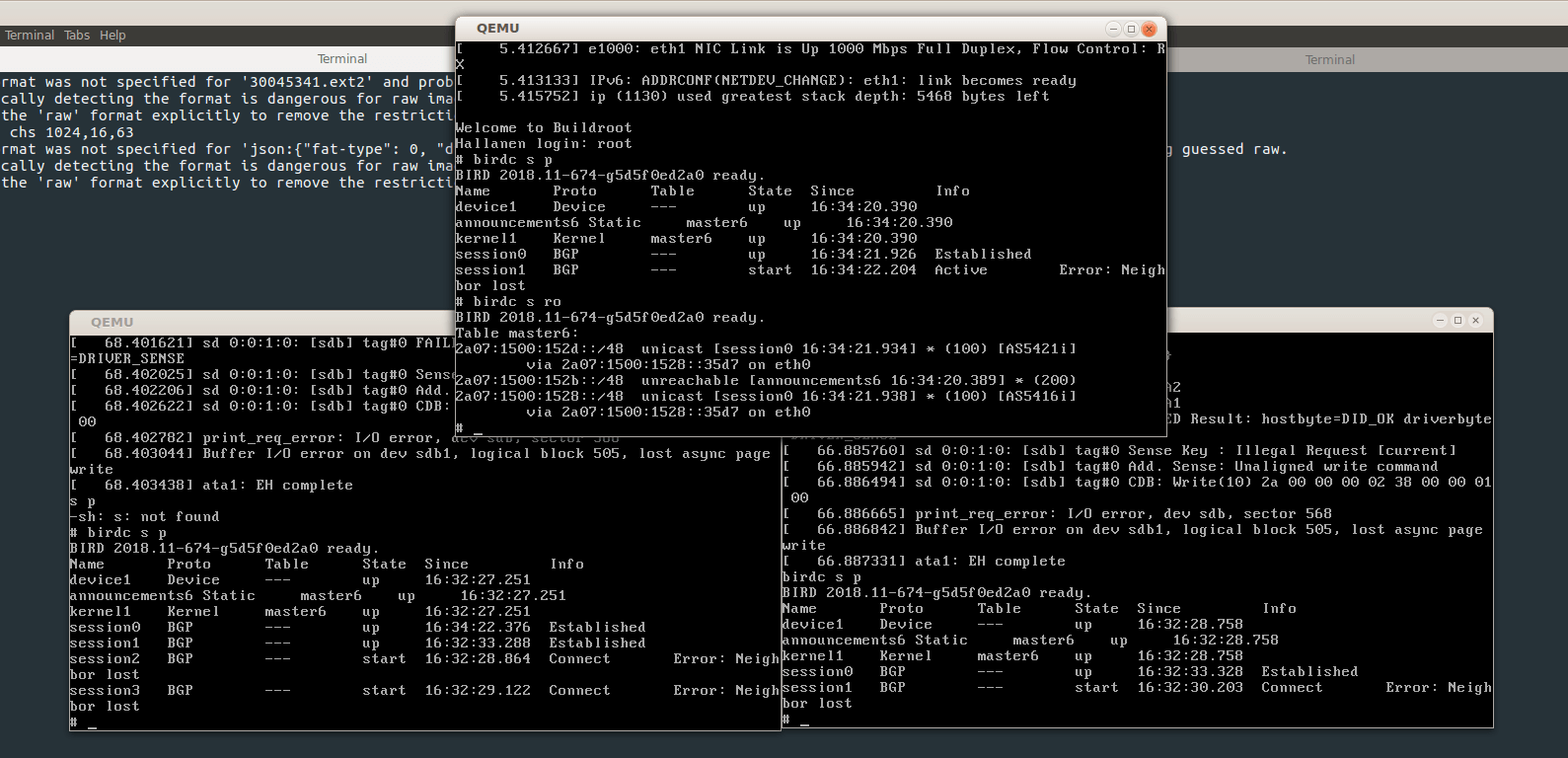

However, we do not have unlimited resources, so we will need to find a way to make the smallest Linux image, both when using disk space and when using memory. To do this, I paid attention to embedded systems, because embedded systems often have to work in environments with very low levels of resources. I came across Buildroot , and after a few hours I had a small Linux image containing only what I needed to run this project.

This image contains bootable linux, which also has: * Bird 2 BGP Daemon * tcpdump and My Traceroute (mtr) for network debugging * busybox for the base shell and system utilities

This image can be easily launched in qemu with a small number of options:

For networking, I decided to use the undocumented function from qemu (in my version), in which you can send two qemu processes to each other and use UDP sockets to transfer data between them. This is convenient, as we plan to provide a large number of links, so using the usual TUN / TAP adapter method can quickly lead to confusion.

Since this function is partly undocumented, there were some problems with its work. It took me a long time to understand that the network name on the command line should be the same for both sides of the connection. Later it turned out that this function is already well documented, as is usually the case. Changes take time to get to older versions of the distribution.

As soon as it worked, we had a couple of virtual machines that could send packets between themselves, and the hypervisor sends them as UDP datagrams. Since we will be launching a large number of such systems, we will need a quick way to configure them using the previously created configuration. To do this, we can use the convenient qemu function, which allows you to take a directory on the hypervisor and turn it into a virtual FAT32 file system. This is useful because it allows us to create a directory for each system that we plan to run, and each qemu process points to that directory, which means that we can use the same boot image for all virtual machines in the cluster.

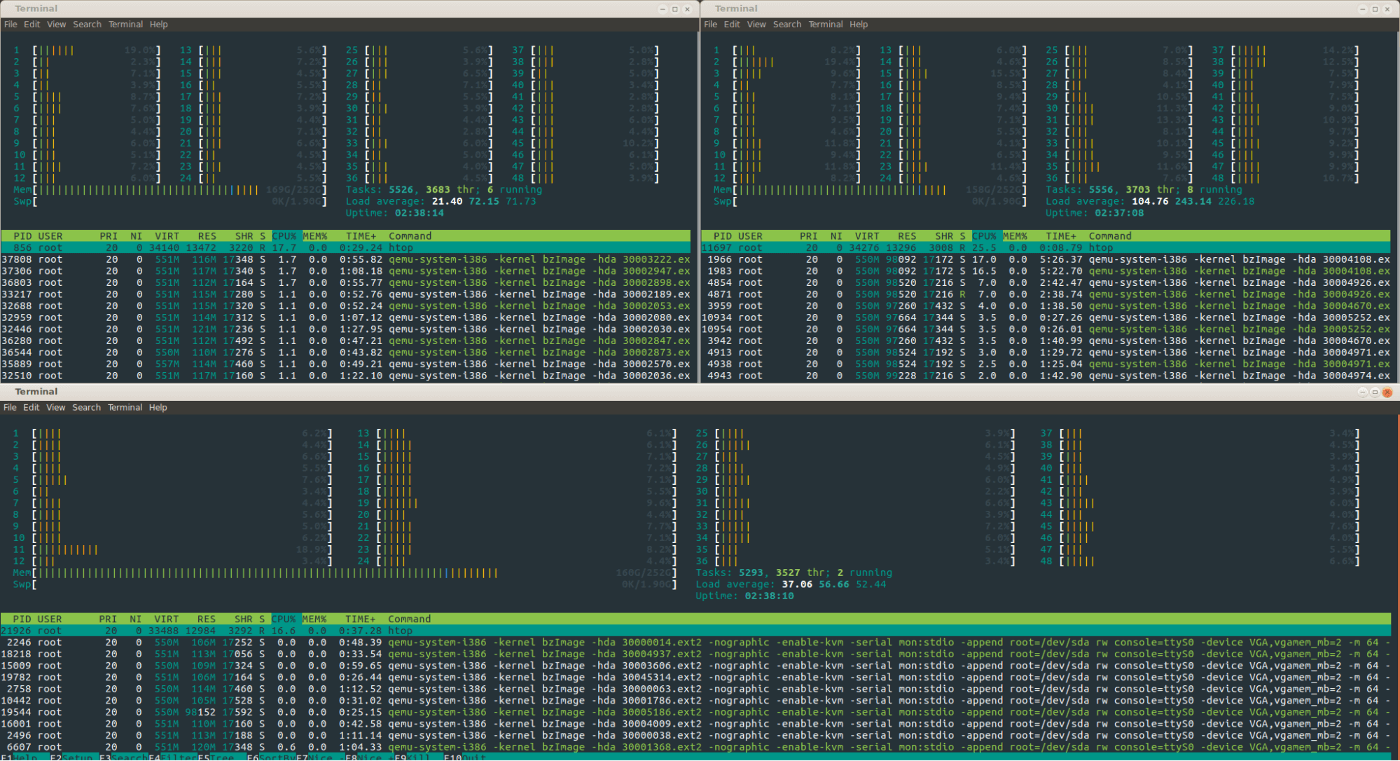

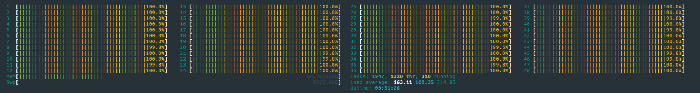

Since each system has 64 MB of RAM, and we plan to use 8000 ~ VM, we certainly need a decent amount of RAM. For this, I used 3 m2.xlarge.x86 with packet.net's, as they offer 256 GB of RAM with 2x Xeon Gold 5120, which means they have a decent amount of support.

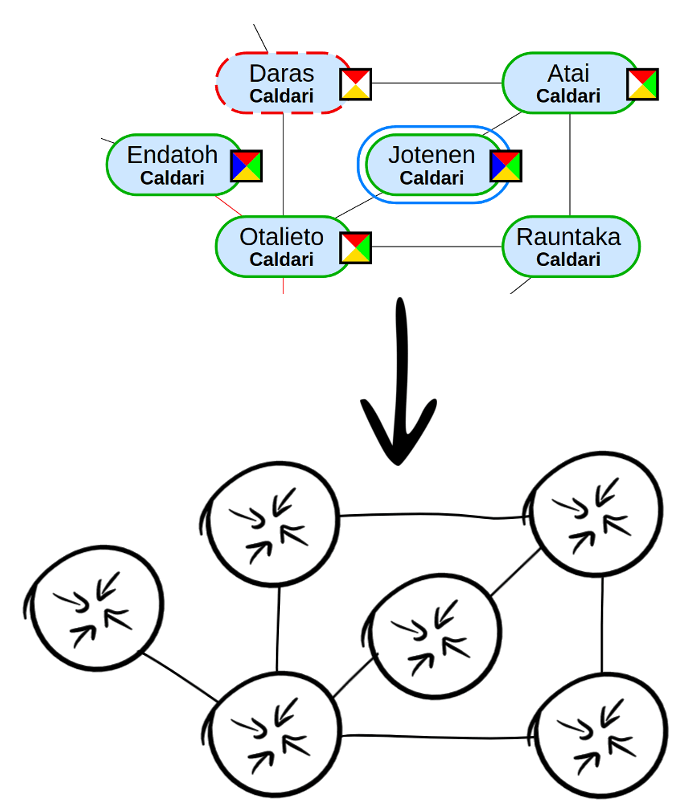

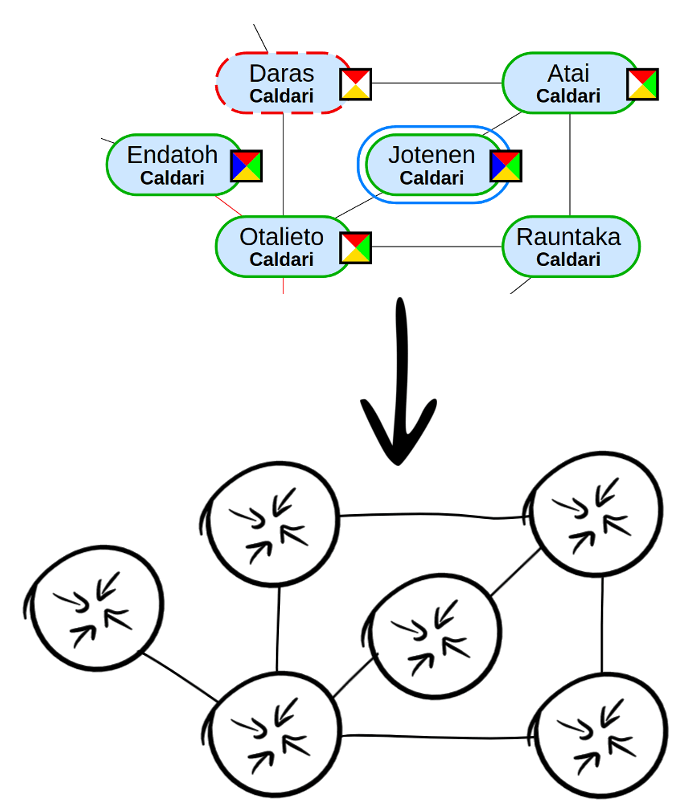

I used another open source project to create an EvE map in the form of JSON, and then created a custom configuration program based on this data. Having conducted several test runs of just a few systems, I proved that they can take configuration from VFAT and establish BGP sessions with each other about this.

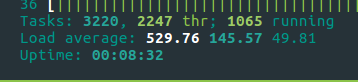

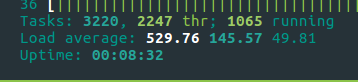

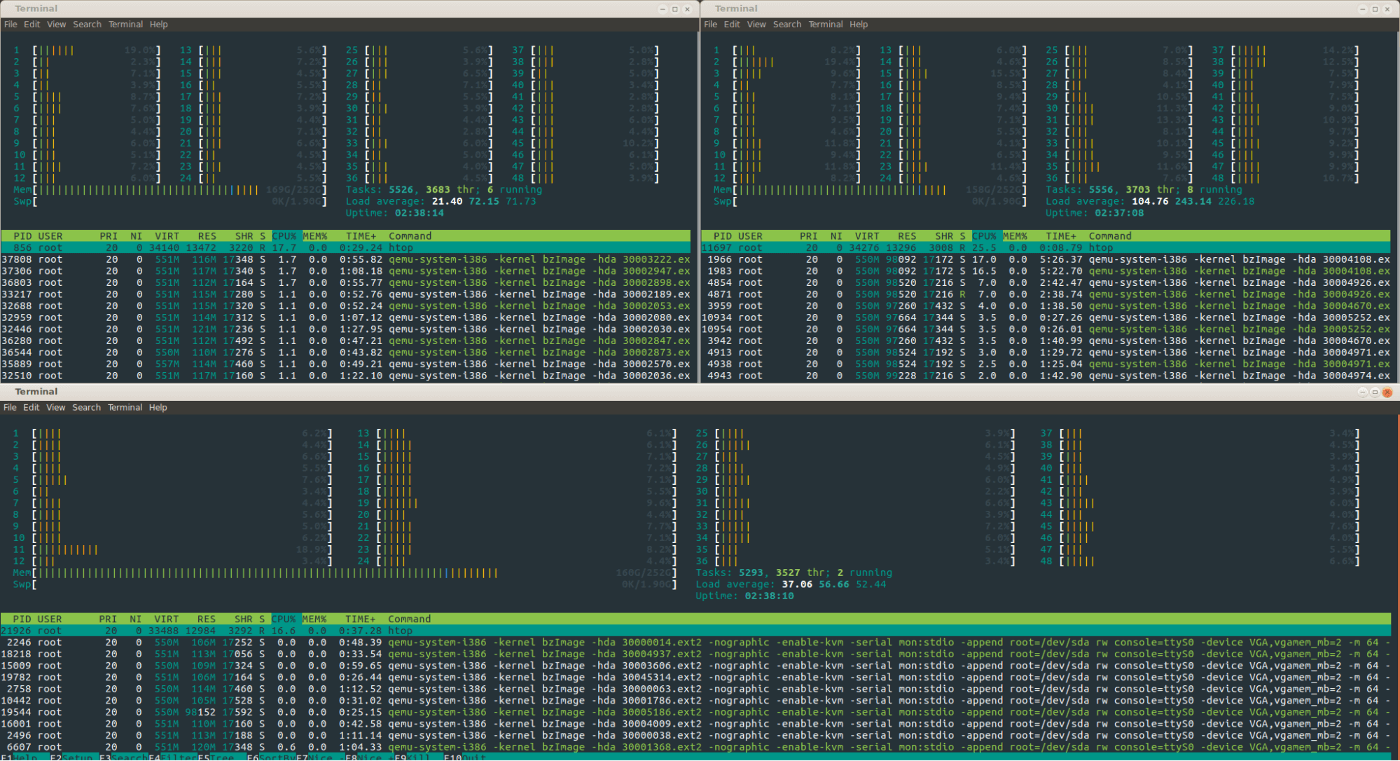

So, I took the decisive step to loading the universe:

At first, I tried to run all the systems in one big event, but, unfortunately, this led to a big bang for the system boot, so after that I switched to launching the system every 2.5 seconds and 48 system cores took care of this.

During the boot process, I found that you would see large explosions of CPU usage over all virtual machines, I later found out that these were large parts of the universe, connecting with each other, thus causing large amounts of BGP traffic on both sides of newly connected virtual networks. machines.

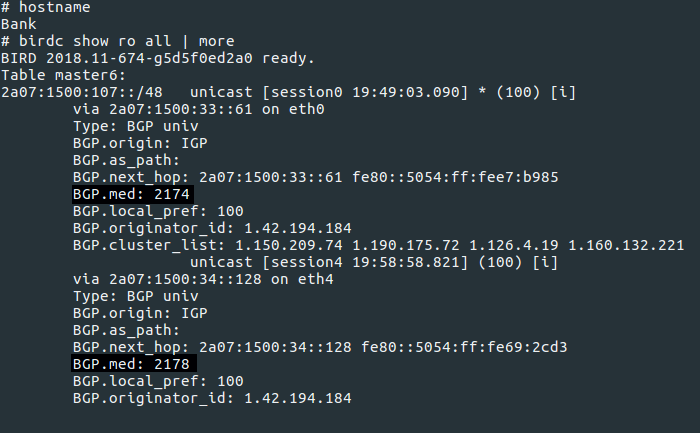

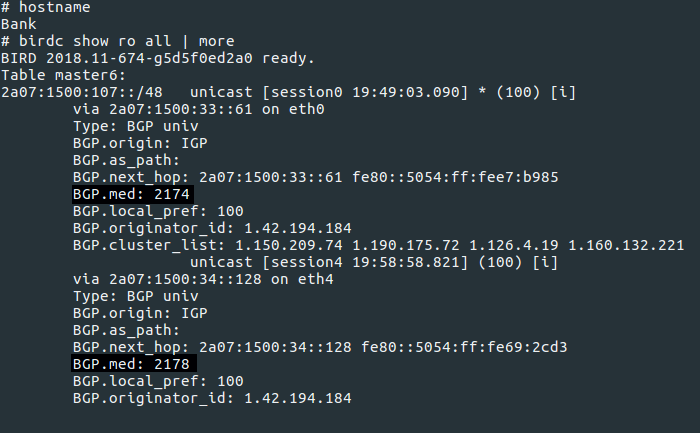

In the end, we saw some pretty awesome BGP paths, since each system advertises / 48 IPv6 addresses, you can see the routes to each system and to all other systems that it would have to go through to get there.

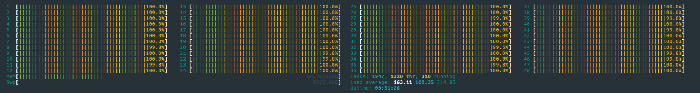

I took a snapshot of the routing table on each router in the universe, and then depicted frequently used systems to access other systems, but this image is huge. Here is a small version of this in a publication; if you click on an image, keep in mind that this image will most likely cause your device to run out of memory.

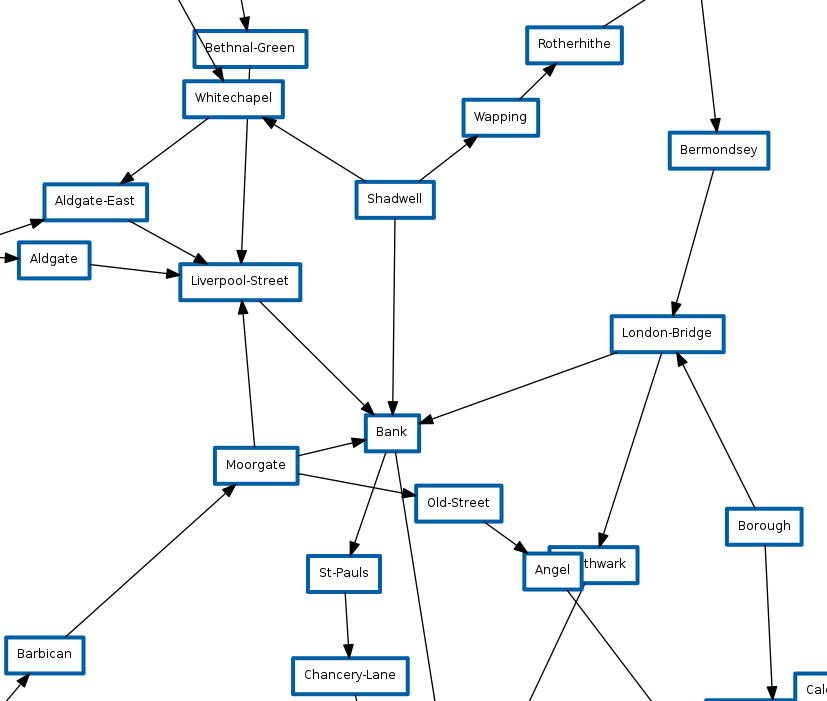

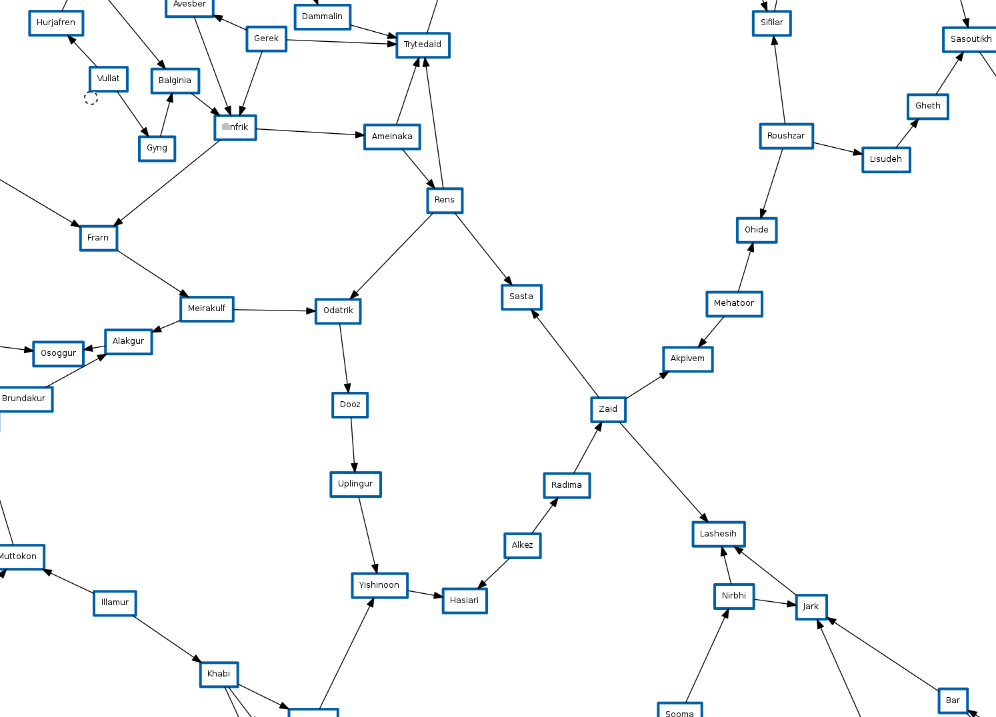

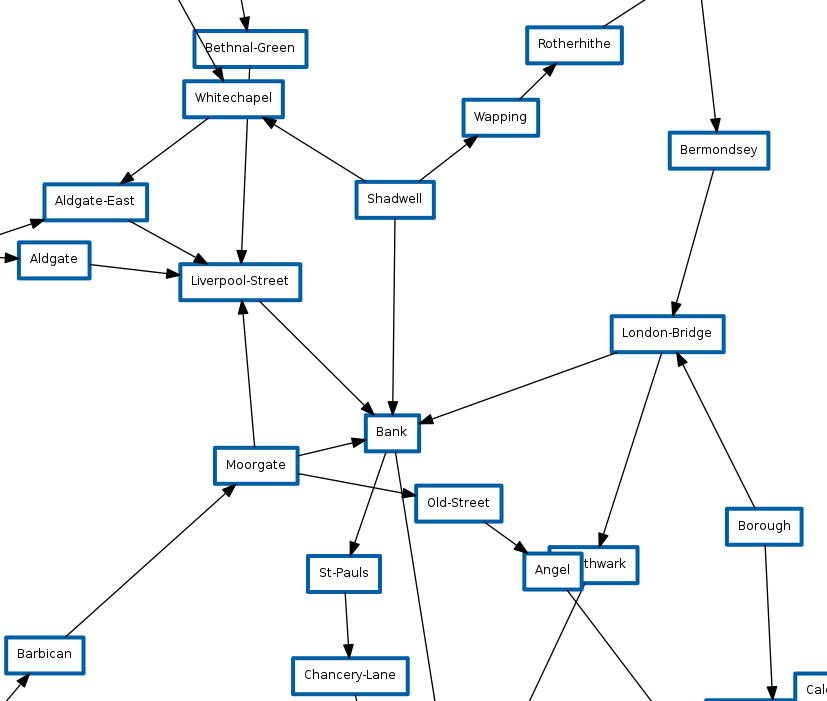

After that, I thought, what else can you display on BGP routed networks? Could you use a smaller model to test how the routing configuration works in large networks? I prepared a file that displayed the London Underground system to check this :

The TFL system is much smaller and has much more jumps that have only one direction, since most stations have only one “line” of transport, however we can extract one thing from this, we can use it to play safely with BGP MED 's.

However, there is a problem when we view the TFL card as a BGP network, in the real world the time / delay between each stop is not the same, and therefore, if we simulated this delay, we would not bypass the system as fast as we could, since we only look at the fewest stations to go.

However, thanks to the Freedom of Information Act (FOIA), the request that was sent to the TFL provided us with the time needed to move from one station to another. They were generated in a BGP router configuration, for example:

In

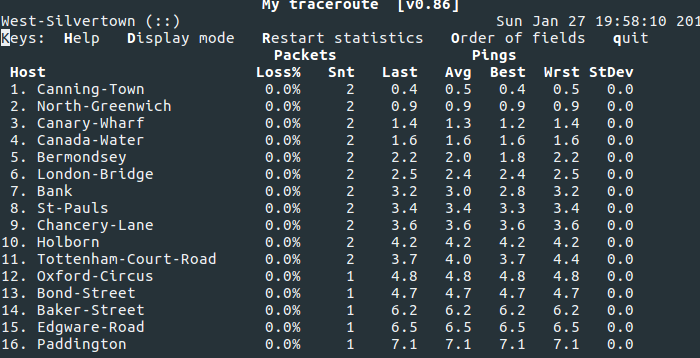

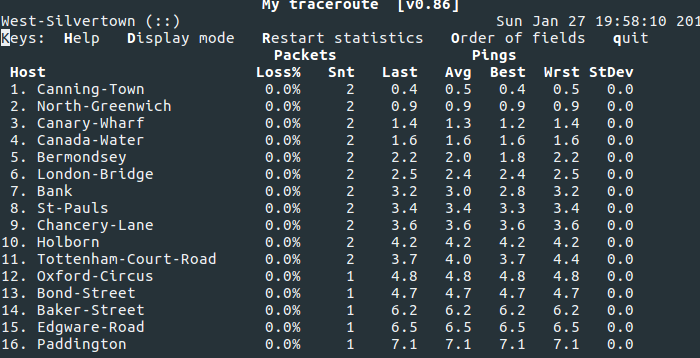

This means that traceroutes determine exactly how you can navigate around London, for example, to my station in Paddington:

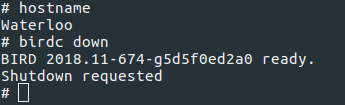

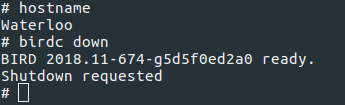

We can also have fun with BGP, simulating a maintenance or incident at Waterloo Station:

And as the entire network instantly chooses the next fastest route, not the one with the fewest passing stations.

And this is BGP MED magic in routing!

The code for all this is already available. You can create your own network structures with a fairly simple JSON schema, or simply use EvE online or TFL, as they are already in the repository.

You can find all the code for this here.

There is also an extensive map of the world on which all these players can be placed. At its peak, EvE had 63,000 online players in one world with 500,000 paid subscriptions at its peak of popularity, and although this number is getting smaller every year, the world remains incredibly large. This means that the transition from one side to the other is a significant amount of time (as well as the risk due to the player’s dependence on the faction).

The translation was made with the support of the company EDISON Software , which professionally deals with security , as well as develops electronic medical verification systems .

You travel to different areas using the warp mode (within the same system), or jump into different systems using the jump gate:

And all these systems combine to create a map of beauty and complexity:

I have always viewed this map as a network, it is a large network of systems that connect with each other so that people can pass through them, and most systems have more than two hopping gates. It made me think about what would happen if you literally took the idea of a map as a network? What will the EvE Internet system look like?

To do this, we need to understand how the real Internet works. The Internet is a large collection of ISPs that are all numerically identified using a standardized and unique ISP number called an autonomous system number or ASN (or AS for a shorter version). This AS needs a way to exchange routes with each other, since they will own IP address ranges, and they need a way to inform other ISPs that their routers can route these IP addresses. For this, the world settled on a border gateway protocol or BGP.

BGP works by “communicating” to other AS (known as a node) their routes:

The standard behavior of BGP when it receives a route from a node is to transfer it to all other nodes to which it is also connected. This means that the nodes will automatically share their routing tables with each other.

However, this behavior is only useful if you use BGP to send routes to internal routers, since the modern Internet has different logical relationships with each other. Instead of a network, the modern Internet looks like this:

However, EvE online is installed in the future. Who knows if the Internet relies on this routing scheme for profit. Let's imagine that this is not so that we can see how BGP is scaled in larger networks.

To do this, we need to simulate the actual behavior of the BGP router and the connection. Considering that EvE has a relatively low 8000 ~ number of systems, and a reasonable 13.8 thousand connections between them. I assumed that it would actually be impossible to run 8000 ~ virtual machines with real BGP and a network to figure out how these real systems look when they act together as a network.

However, we don't have unlimited resources, so we need to make the Linux image as small as possible, in terms of both disk space and memory. For this, I turned to embedded systems, since they often have to run with very few resources. I came across Buildroot, and after a few hours I had a small Linux image containing only what this project needed.

```
$ ls -alh
total 17M
drwxrwxr-x 2 ben ben 4.0K Jan 22 22:46 .
drwxrwxr-x 6 ben ben 4.0K Jan 22 22:45 ..
-rw-r--r-- 1 ben ben 7.0M Jan 22 22:46 bzImage
-rw-r--r-- 1 ben ben  10M Jan 22 22:46 rootfs.ext2
```

The image boots Linux and also includes:

* the BIRD 2 BGP daemon
* tcpdump and My Traceroute (mtr) for network debugging
* BusyBox for the base shell and system utilities

The image can be launched in qemu with just a few options:

```
qemu-system-i386 -kernel ../bzImage \
  -hda rootfs.ext2 \
  -hdb fat:./30045343/ \
  -append "root=/dev/sda rw" \
  -m 64
```

For networking, I decided to use a qemu feature that was undocumented (in my version), which lets you point two qemu processes at each other and exchange data between them over UDP sockets. This is convenient because we plan to create a large number of links, and the usual TUN/TAP adapter approach would quickly get confusing.

Since this feature was partly undocumented, getting it to work was tricky. It took me a long time to realize that the network name on the command line must be the same on both sides of the connection. It later turned out that the feature is in fact well documented; as is often the case, changes simply take time to reach the older versions shipped by distributions.

Once it worked, we had a pair of virtual machines that could send packets to each other, with the hypervisor carrying them as UDP datagrams. Since we will be launching a large number of these systems, we need a quick way to give each one its pre-generated configuration. For this, we can use a handy qemu feature that takes a directory on the hypervisor and presents it to the guest as a virtual FAT file system. This lets us create one directory per system, point each qemu process at its own directory, and use the same boot image for every virtual machine in the cluster.
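On the hypervisor side, preparing those per-system directories is simple file handling. A minimal sketch, assuming one directory per system ID and a hypothetical `bird.conf` file name (how the guest mounts and reads the FAT disk is not described in the post):

```python
import tempfile
from pathlib import Path


def prepare_system_dir(root: Path, system_id: int, files: dict[str, str]) -> str:
    """Write one system's config files into root/<system_id>/.

    Returns the matching qemu -hdb value, in the same form as the
    "fat:./30045343/" option shown earlier.
    """
    directory = root / str(system_id)
    directory.mkdir(parents=True, exist_ok=True)
    for name, content in files.items():
        (directory / name).write_text(content)
    return f"fat:{directory}/"


root = Path(tempfile.mkdtemp())
print(prepare_system_dir(root, 30045343, {"bird.conf": "# generated\n"}))
```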

Since each system has 64 MB of RAM and we plan to run ~8,000 VMs, we need a decent amount of RAM. For this, I used three m2.xlarge.x86 servers from packet.net, which offer 256 GB of RAM and 2x Xeon Gold 5120 CPUs, so they also have plenty of processing power.
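A quick back-of-the-envelope check with these numbers: spread over three hosts, ~8,000 VMs at 64 MB each need about 167 GiB of guest RAM per host, comfortably below the 256 GB each machine has. The helper below only counts the memory given to guests with `-m`; qemu's own per-VM overhead is not measured in the post and is left out.

```python
import math


def ram_plan(systems: int, hosts: int, guest_mb: int) -> tuple[int, float]:
    """VMs per host (rounded up) and the guest RAM they need, in GiB."""
    per_host = math.ceil(systems / hosts)
    return per_host, per_host * guest_mb / 1024


per_host, gib = ram_plan(systems=8000, hosts=3, guest_mb=64)
print(per_host, gib)  # 2667 166.6875
```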

I used another open-source project to get the EvE map as JSON, and then wrote a custom configuration generator based on that data. After a few test runs with just a handful of systems, I confirmed that they could pick up their configuration from the VFAT disk and establish BGP sessions with each other based on it.
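The post does not show the generator, so here is a hedged sketch of what one could look like. Several parts are hypothetical: the JSON schema (`{"systems": [...], "jumps": [[a, b], ...]}`), using each system's 1-based position as its ASN, and giving every jump its own /64 under `2a07:1500:ffff::` (which would only clash with ASN 65535). The per-system `/48` layout mirrors the birdc output later in this post, where `2a07:1500:b4f::/48` is originated by AS 2895 (0xb4f). A real BIRD config would likely also need a `router id` and a `protocol device`, and the guest interfaces would need the link addresses; those are left out.

```python
import json

PREFIX = "2a07:1500"  # the address block visible in the post's birdc output


def system_prefix(asn: int) -> str:
    """Per-system /48, with the ASN written in hex (0xb4f = 2895)."""
    return f"{PREFIX}:{asn:x}::/48"


def link_addr(link_no: int, side: int) -> str:
    """Hypothetical point-to-point addressing: one /64 per jump."""
    return f"{PREFIX}:ffff:{link_no:x}::{side + 1}"


def render_configs(map_json: str) -> dict[int, str]:
    """Return BIRD 2 config text for every system in the map."""
    data = json.loads(map_json)
    # Hypothetical: a system's ASN is its 1-based position in the list.
    asn = {sys_id: i + 1 for i, sys_id in enumerate(data["systems"])}
    # Every system announces its own /48.
    parts: dict[int, list[str]] = {
        s: [f"protocol static {{\n  ipv6;\n  route {system_prefix(n)} unreachable;\n}}\n"]
        for s, n in asn.items()
    }
    # One eBGP session on each end of every jump.
    for link_no, (a, b) in enumerate(data["jumps"]):
        for side, (local, remote) in enumerate(((a, b), (b, a))):
            parts[local].append(
                f"protocol bgp link{link_no} {{\n"
                f"  neighbor {link_addr(link_no, 1 - side)} as {asn[remote]};\n"
                f"  source address {link_addr(link_no, side)};\n"
                f"  local as {asn[local]};\n"
                "  ipv6 { import all; export all; };\n"
                "}\n"
            )
    return {s: "".join(p) for s, p in parts.items()}


example = json.dumps({"systems": [30045343, 30045344],  # second ID is made up
                      "jumps": [[30045343, 30045344]]})
print(render_configs(example)[30045343])
```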

So I took the plunge and booted the whole universe.

At first, I tried to boot all the systems at once, but unfortunately that caused a “big bang” of boot load, so I switched to starting one system every 2.5 seconds, and the 48 cores took care of the rest.
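The fix is easy to express as a loop: start one VM, wait 2.5 seconds, start the next. A minimal sketch; the function name and the injectable `start`/`sleep` hooks are our own, added so the schedule can be checked without booting anything. Note that a single launcher starting ~8,000 VMs at this pace would need about 8,000 × 2.5 s = 20,000 s, roughly 5.6 hours.

```python
import subprocess
import time
from typing import Callable, Sequence


def launch_staggered(commands: Sequence[list[str]], interval: float = 2.5,
                     start: Callable = subprocess.Popen,
                     sleep: Callable[[float], None] = time.sleep) -> list:
    """Start each command in turn, pausing `interval` seconds between starts."""
    handles = []
    for i, argv in enumerate(commands):
        if i:
            sleep(interval)  # avoid a "big bang" of simultaneous boots
        handles.append(start(argv))
    return handles


# Dry run: record the commands instead of starting qemu.
planned = launch_staggered([["echo", "vm1"], ["echo", "vm2"]],
                           start=lambda argv: argv, sleep=lambda s: None)
print(planned)  # [['echo', 'vm1'], ['echo', 'vm2']]
```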

During boot, I noticed large spikes of CPU usage across all the virtual machines. I later worked out that these happened when large parts of the universe connected to each other, causing large amounts of BGP traffic on both sides of the newly connected virtual machines.

```
root@evehyper1:~/147.75.81.189# ifstat -i bond0,lo
       bond0                   lo
 KB/s in  KB/s out    KB/s in  KB/s out
  690.46    157.37   11568.95  11568.95
  352.62    392.74   20413.64  20413.64
  468.95    424.58   21983.50  21983.50
```

In the end, we got some pretty awesome BGP paths. Since each system announces an IPv6 /48, you can see the route to every system, along with all the systems it has to pass through to get there:

```
$ birdc6 s ro all
2a07:1500:b4f::/48   unicast [session0 18:13:15.471] * (100) [AS2895i]
	via 2a07:1500:d45::2215 on eth0
	Type: BGP univ
	BGP.origin: IGP
	BGP.as_path: 3397 3396 3394 3385 3386 3387 2049 2051 2721 2720 2719 2692 2645 2644 2643 145 144 146 2755 1381 1385 1386 1446 1448 849 847 862 867 863 854 861 859 1262 1263 1264 1266 1267 2890 2892 2895
	BGP.next_hop: 2a07:1500:d45::2215 fe80::5054:ff:fe6e:5068
	BGP.local_pref: 100
```

I took a snapshot of the routing table on every router in the universe and then plotted which systems are most frequently used to reach other systems. The result is huge: a small version is included in this post, but if you open the full-size image, be aware that it will most likely make your device run out of memory.
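The analysis described above boils down to reading AS paths and counting how often each system appears as transit. A hedged sketch: it only parses the `BGP.as_path:` attribute in the format shown above, and the function names are ours. The output also suggests that each /48 encodes its origin ASN in hex (0xb4f = 2895, and the next hop `2a07:1500:d45::` belongs to the first-hop AS 3397 = 0xd45); `origin_in_prefix` checks that inferred pattern rather than a documented rule.

```python
import re
from collections import Counter


def as_path(birdc_output: str) -> list[int]:
    """Extract the ASNs from the BGP.as_path attribute of birdc output."""
    match = re.search(r"BGP\.as_path:\s*([\d ]+)", birdc_output)
    return [int(asn) for asn in match.group(1).split()] if match else []


def transit_counts(paths: list[list[int]]) -> Counter:
    """Count how often each AS carries traffic for others (origin excluded)."""
    counts: Counter = Counter()
    for path in paths:
        counts.update(path[:-1])  # the last ASN is the origin, not transit
    return counts


def origin_in_prefix(prefix: str, path: list[int]) -> bool:
    """Check the inferred pattern 2a07:1500:<origin ASN in hex>::/48."""
    return prefix.split(":")[2] == format(path[-1], "x")


SAMPLE = ("BGP.as_path: 3397 3396 3394 3385 3386 3387 2049 2051 2721 2720 2719 "
          "2692 2645 2644 2643 145 144 146 2755 1381 1385 1386 1446 1448 849 847 "
          "862 867 863 854 861 859 1262 1263 1264 1266 1267 2890 2892 2895")
path = as_path(SAMPLE)
print(len(path), path[-1], origin_in_prefix("2a07:1500:b4f::/48", path))  # 40 2895 True
```

Feeding `transit_counts` the paths from every router's table gives the "most frequently used systems" that the plot visualizes.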

After that, I wondered what else could be modeled as a BGP-routed network. Could a smaller model be used to test how routing configurations behave in large networks? To check, I prepared a file describing the London Underground.

The TfL network is much smaller, and many more of its stations have only a single way through them, since most stations are served by only one “line”. However, it does give us one thing: a safe way to play with BGP MEDs (Multi-Exit Discriminators).

However, there is a problem with treating the TfL map as a BGP network: in the real world, the time between stops is not uniform, so if we only counted the fewest stations to pass through, we would not route through the system as quickly as we could.

Fortunately, a Freedom of Information request sent to TfL provided the travel time between each pair of adjacent stations. These times were turned into BGP router configuration, for example:

```
protocol bgp session1 {
	neighbor 2a07:1500:34::62 as 1337;
	source address 2a07:1500:34::63;
	local as 1337;
	enable extended messages;
	enable route refresh;
	ipv6 {
		import filter {
			bgp_med = bgp_med + 162;
			accept;
		};
		export all;
	};
}

protocol bgp session2 {
	neighbor 2a07:1500:1a::b3 as 1337;
	source address 2a07:1500:1a::b2;
	local as 1337;
	enable extended messages;
	enable route refresh;
	ipv6 {
		import filter {
			bgp_med = bgp_med + 486;
			accept;
		};
		export all;
	};
}
```

In session1, the travel time between the two stations is 1.62 minutes (stored as 162, i.e. minutes × 100); the other path from this station takes 4.86 minutes (486). This value is added to the route's MED at every station/router it passes through, so every router/station in the network knows how long it takes to reach every other station via each route.
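The generator that produced these sessions is not shown in the post. Here is a hedged Python sketch of one that reproduces the shape of the two sessions above, assuming travel times arrive in minutes and are scaled by 100, as the examples imply; the function name and signature are ours.

```python
def session_config(name: str, neighbor: str, source: str, minutes: float,
                   asn: int = 1337) -> str:
    """Render one BIRD 2 session whose import filter adds the travel time."""
    med = round(minutes * 100)  # 1.62 minutes -> MED increment of 162
    return (
        f"protocol bgp {name} {{\n"
        f"\tneighbor {neighbor} as {asn};\n"
        f"\tsource address {source};\n"
        f"\tlocal as {asn};\n"
        "\tenable extended messages;\n"
        "\tenable route refresh;\n"
        "\tipv6 {\n"
        f"\t\timport filter {{ bgp_med = bgp_med + {med}; accept; }};\n"
        "\t\texport all;\n"
        "\t};\n"
        "}\n"
    )


print(session_config("session1", "2a07:1500:34::62", "2a07:1500:34::63", 1.62))
```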

As a result, a traceroute shows exactly how to get around London, for example to my station at Paddington.

We can also have fun with BGP by simulating maintenance or an incident at Waterloo station.

The entire network instantly switches to the next-fastest route, rather than the one with the fewest stations.
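Ignoring convergence timing and the rest of BGP's decision process, this behavior can be modeled as a shortest-path search over accumulated MED: each router keeps the route with the lowest total, and the total is the sum of per-hop travel times, so Dijkstra's algorithm finds the same routes an idealized converged network would. The sketch below uses made-up stations and times (not TfL data) to compare the lowest-MED route, the fewest-hops route, and the route after a station is "closed".

```python
import heapq


def best_routes(links: dict[str, dict[str, int]], source: str,
                down: frozenset = frozenset(),
                by_hops: bool = False) -> dict[str, tuple[int, list[str]]]:
    """Lowest-cost path from `source` to every reachable station.

    links[a][b] is the MED increment (minutes x 100) for the hop a -> b.
    Stations in `down` are skipped, like a station closed for maintenance.
    With by_hops=True every hop costs 1, i.e. "fewest stations".
    """
    dist = {source: (0, [source])}
    heap = [(0, source, [source])]
    done = set()
    while heap:
        cost, station, path = heapq.heappop(heap)
        if station in done:
            continue
        done.add(station)
        for nxt, med in links.get(station, {}).items():
            if nxt in down or nxt in done:
                continue
            new_cost = cost + (1 if by_hops else med)
            if nxt not in dist or new_cost < dist[nxt][0]:
                dist[nxt] = (new_cost, path + [nxt])
                heapq.heappush(heap, (new_cost, nxt, path + [nxt]))
    return dist


def symmetric(edges: list[tuple[str, str, int]]) -> dict[str, dict[str, int]]:
    """Build a two-way link table from (a, b, med) tuples."""
    links: dict[str, dict[str, int]] = {}
    for a, b, med in edges:
        links.setdefault(a, {})[b] = med
        links.setdefault(b, {})[a] = med
    return links


# Made-up stations and times, purely illustrative.
net = symmetric([("A", "B", 500), ("B", "D", 500),
                 ("A", "C", 100), ("C", "E", 100), ("E", "F", 100), ("F", "D", 100)])
print(best_routes(net, "A")["D"])                         # (400, ['A', 'C', 'E', 'F', 'D'])
print(best_routes(net, "A", by_hops=True)["D"])           # (2, ['A', 'B', 'D'])
print(best_routes(net, "A", down=frozenset({"E"}))["D"])  # (1000, ['A', 'B', 'D'])
```

The lowest-MED route passes more stations but is faster; closing station E makes the slower two-hop route the best remaining option.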

And that is the magic of BGP MEDs in routing!

The code for all of this is available. You can describe your own network topologies with a fairly simple JSON schema, or just use the EvE Online or TfL maps, which are already in the repository.

You can find all the code for this here.

Source: https://habr.com/ru/post/439298/