QLC-based SSDs: a hard drive killer? Not really

SSDs have long since left the category of expensive, unreliable exotica and become a familiar component of computers at all levels, from budget office “typewriters” to powerful servers.

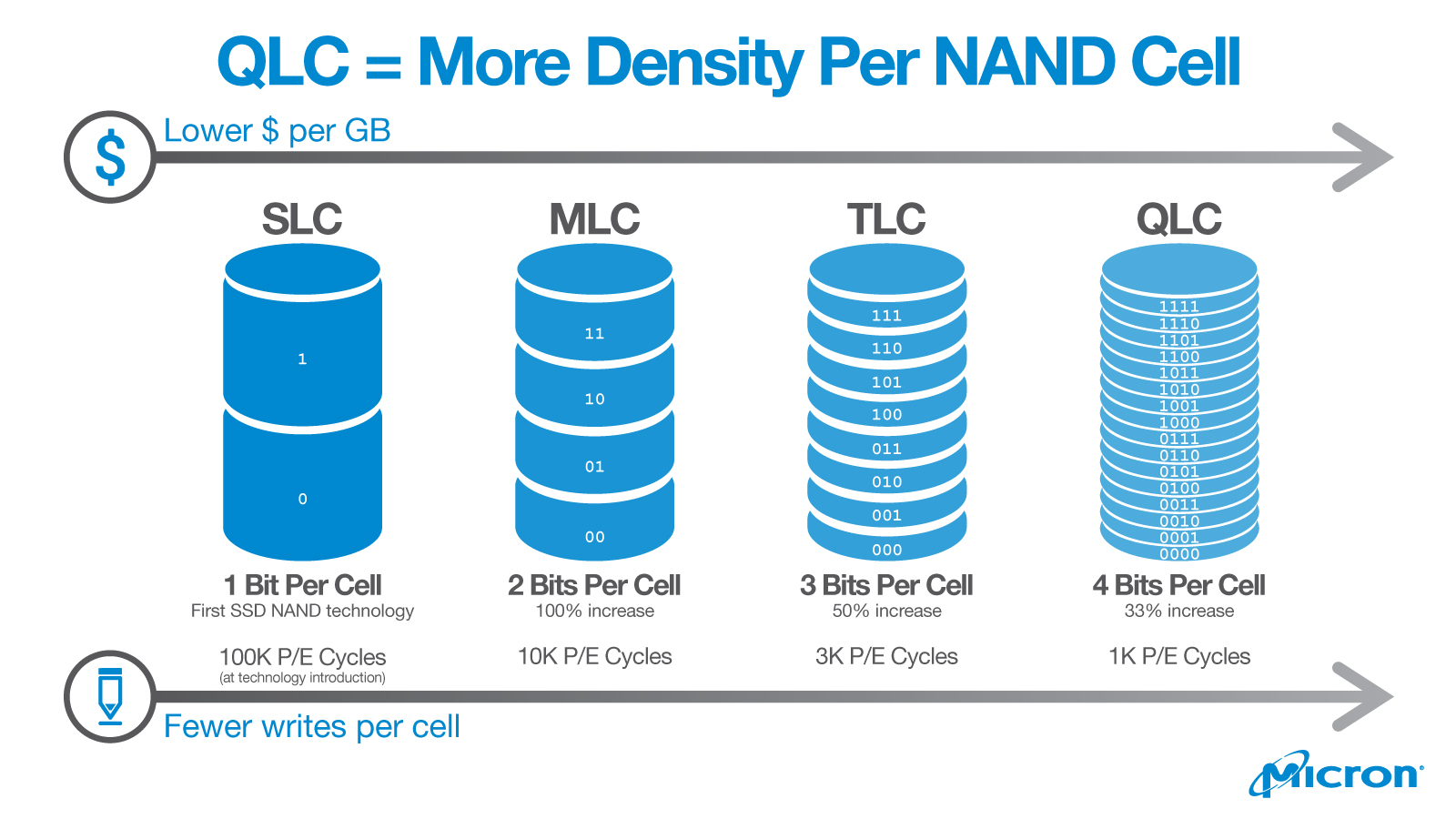

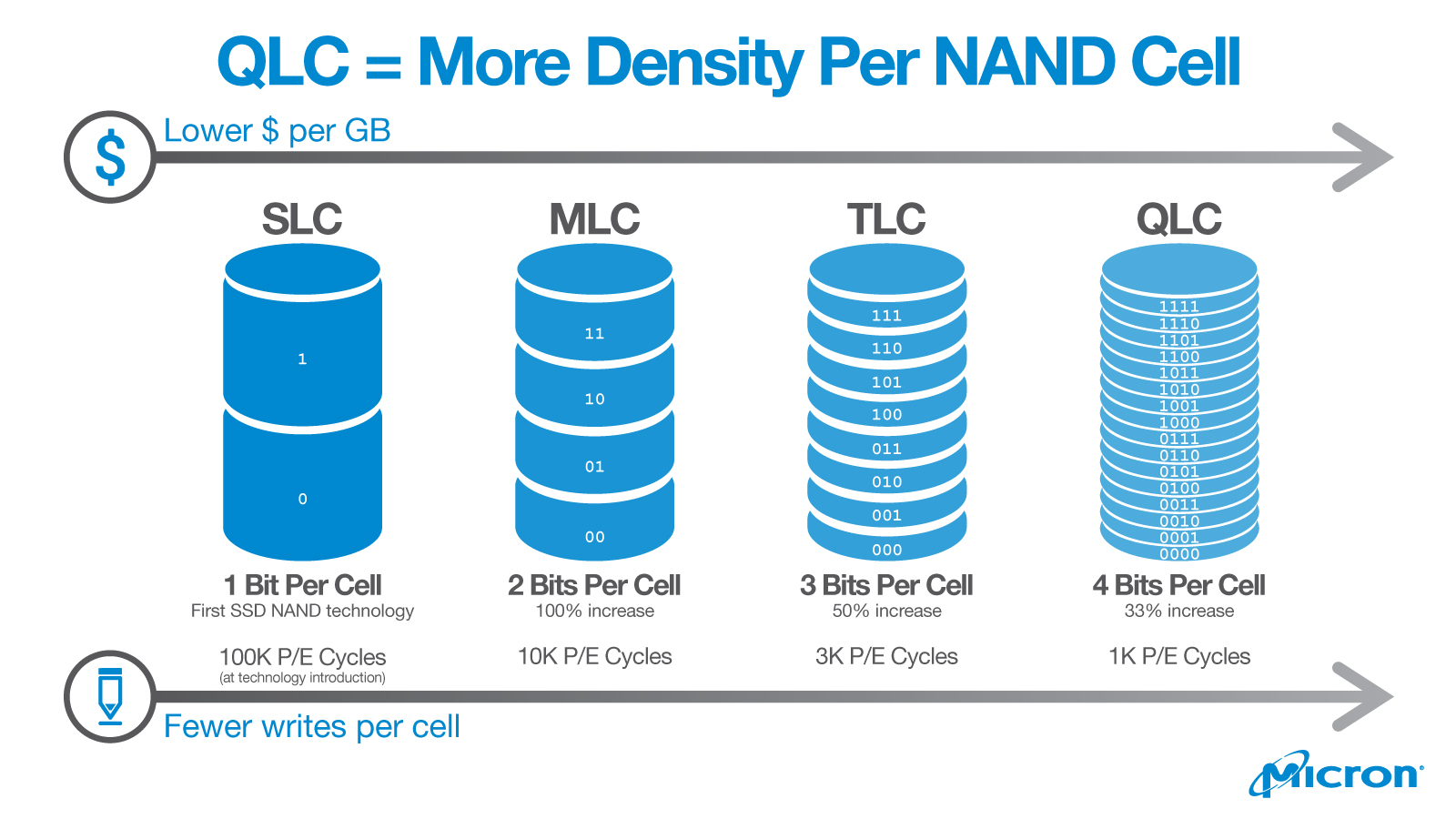

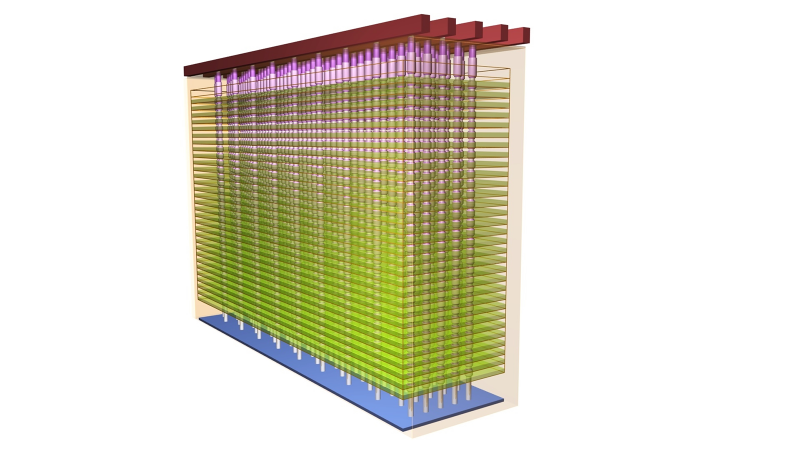

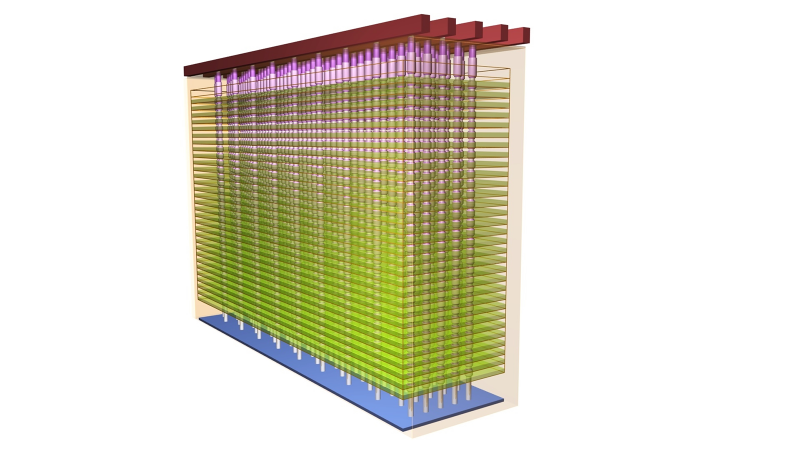

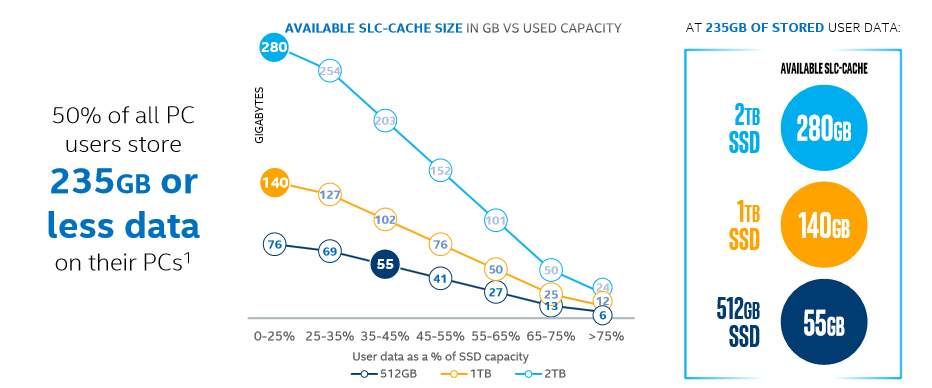

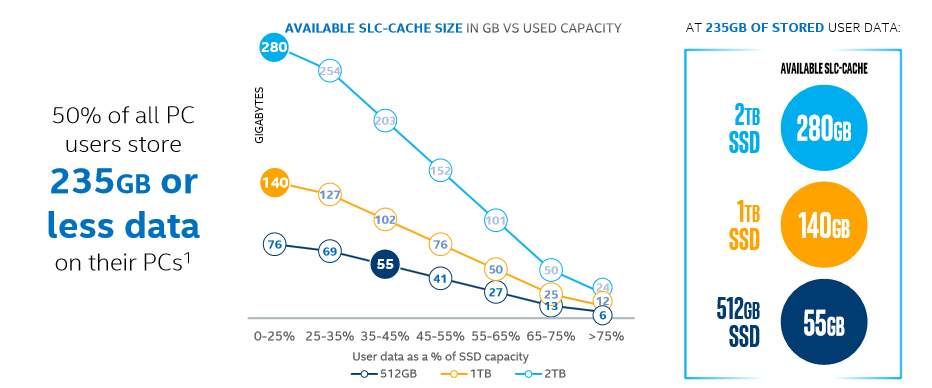

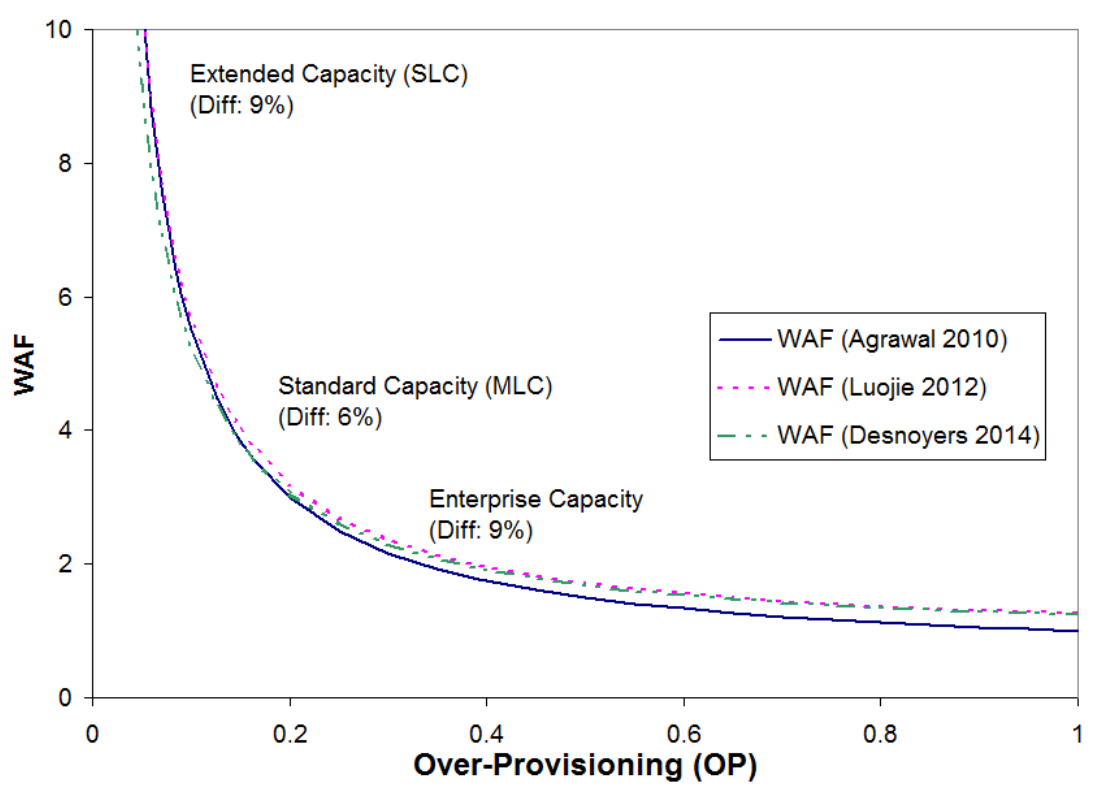

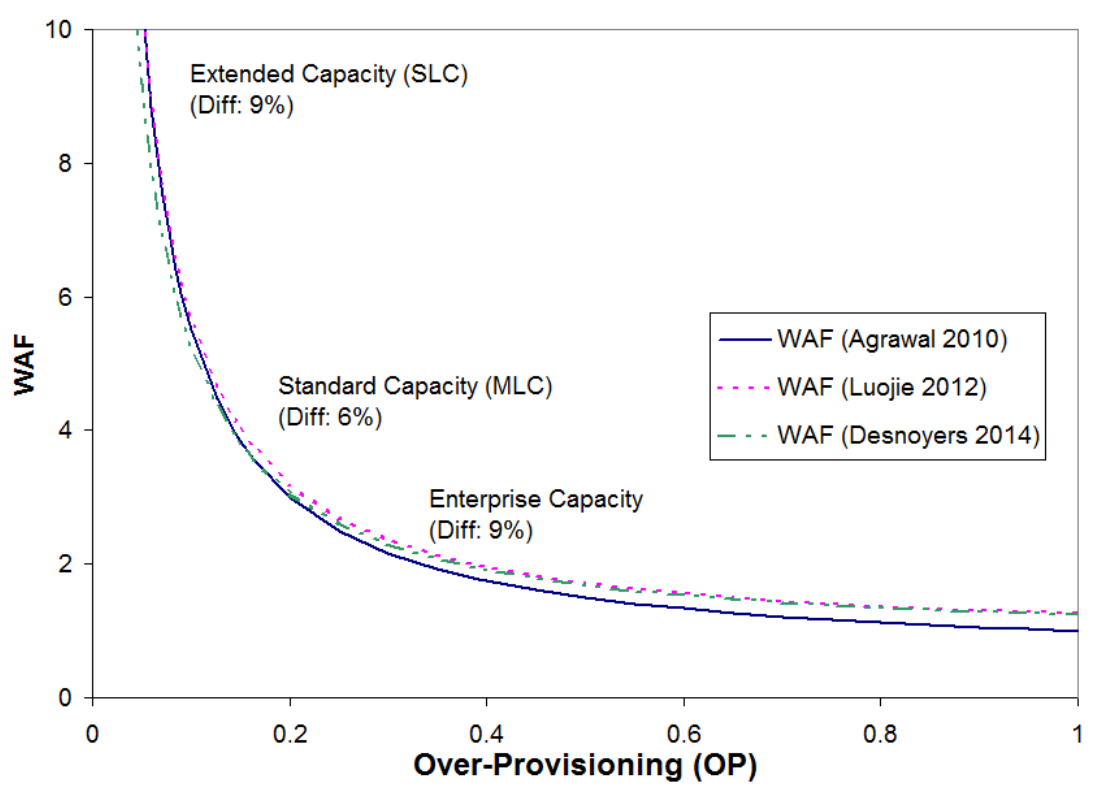

In this article we discuss the next stage in SSD evolution: another increase in the number of bits stored per NAND cell. Quad-level cells (QLC) store 4 bits each. Drives built on this technology have higher storage density, which makes larger capacities easier to reach, and they cost less than SSDs with “traditional” MLC and TLC cells.

As one would expect, the transition to a new technology raised many problems during development. The giant companies are coping with them successfully, while small Chinese firms still lag behind: their drives are less technologically advanced, but cheaper.

Below we explain how this came about, whether a new “HDD killer” has appeared, and whether it is time to run to the store and replace all your HDDs and older SSDs with new ones.

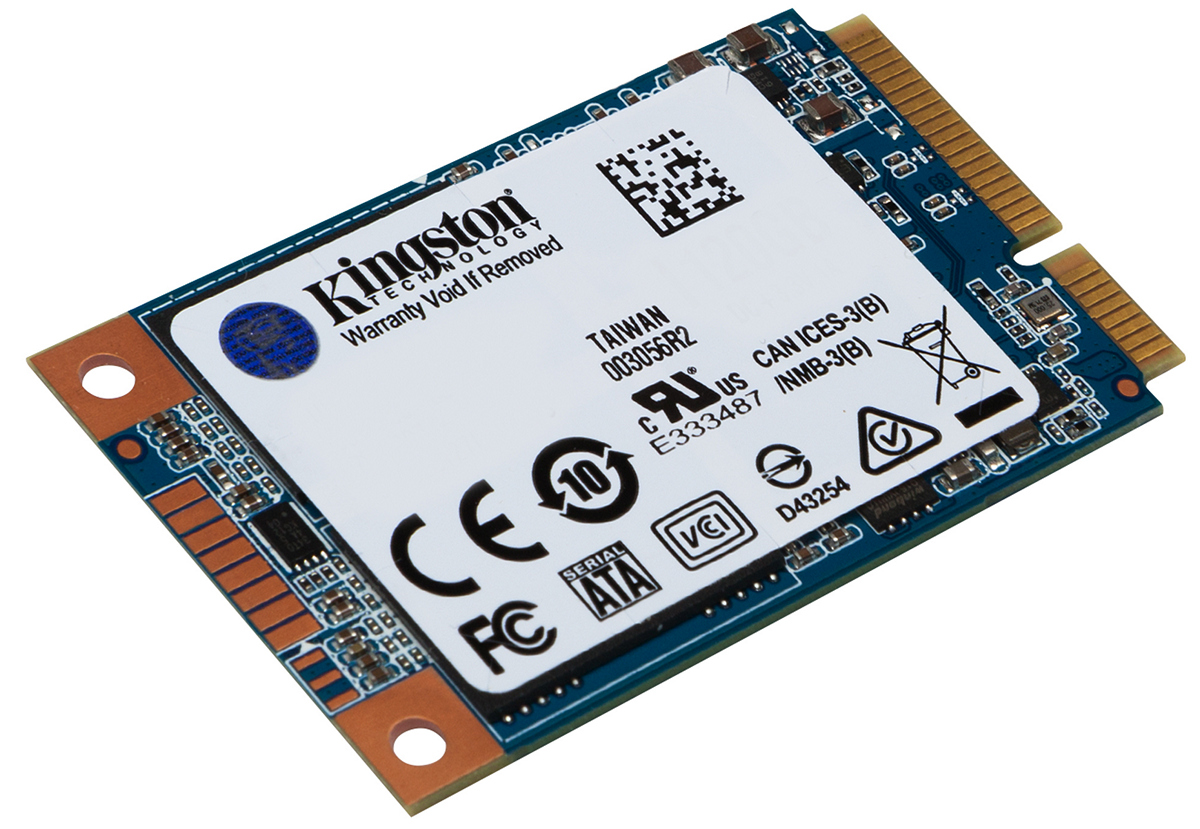

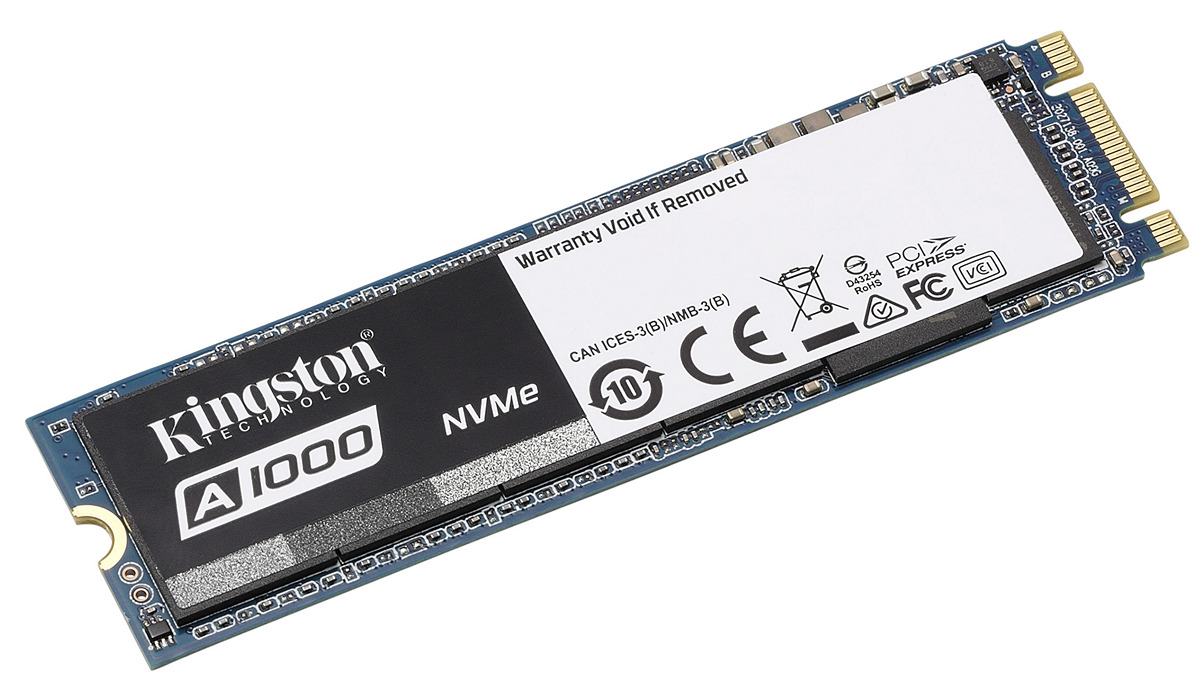

As drives evolved, the way information is stored changed, the manufacturing process node shrank, and recording density grew both per cell and per chip. Controller algorithms improved; write speed first approached read speed, and then both began to grow rapidly. Today wear leveling across NAND cells has reached a certain optimum, and the reliability of data storage has increased many times over and is almost equal to that of traditional HDDs. Along the way, SSDs began to appear in a variety of form factors.

The market now offers a huge selection of drives, both from first-tier A-brands and from Chinese companies that have made sure there are enough SSDs for everyone.

What does QLC technology offer us?

The number of bits stored in one NAND cell is determined by how many charge levels its floating-gate transistor can distinguish. The more levels, the more bits one transistor can store: $n$ bits require $2^n$ levels. This is the main difference between QLC and the “previous” TLC technology: the number of bits per cell has grown from three to four, and the number of charge levels from 8 to 16.
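The relationship between bits per cell and charge levels can be illustrated with a small sketch. The `relative_window` helper is a hypothetical simplification that assumes evenly spaced levels; real NAND voltage distributions are not that regular.

```python
# Bits stored per cell for each NAND cell type.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell: int) -> int:
    """n bits per cell require 2**n distinguishable charge levels."""
    return 2 ** bits_per_cell


def relative_window(bits_per_cell: int) -> float:
    """Share of the voltage range left for one level.

    Assumption for illustration only: levels are evenly spaced.
    """
    return 1 / charge_levels(bits_per_cell)


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s), {charge_levels(bits)} levels, "
          f"window {relative_window(bits):.4f} of the range")
```

Under this simplified model each QLC level gets half the margin of a TLC level, which is one way to see why reads become slower and more error-prone.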

As the number of charge levels grows, the drive's characteristics change considerably: access speed drops and storage reliability decreases, but capacity increases and the price per gigabyte becomes more attractive to buyers. Accordingly, QLC chips are cheaper than previous-generation TLC chips, which store three bits per cell. At the same time QLC is less reliable, because the probability of cell failure rises significantly with each added level.

Beyond the difficulties of a single cell, others arise. Memory chips are made with 3D NAND technology: they are three-dimensional arrays of cells packed tightly one above another, and cells on neighboring “floors” interfere with each other. In addition, modern chips contain more layers than earlier generations. For example, one way to increase memory density is to raise the number of layers in a die from 48 to 64. Another approach bonds two 48-layer dies together for a total of 96 layers, which places very high demands on aligning the boundaries in this “sandwich”; there are more points of failure, and the reject rate grows. Despite the complexity, this approach is more cost-effective than growing more layers in a single die, because rejects grow nonlinearly with layer count and a low yield of good chips would be too expensive. In fairness, only top-tier companies can afford such development. Some Chinese chip makers have not yet moved to 64-layer dies, and only electronics giants such as Intel and Micron have the technology for “gluing” two 48-layer dies.

3D NAND

Another innovation in the new generation of A-brand drives is moving the control and power circuitry underneath the cell array. This shrank the die area and made it possible to fit four memory banks where only two fit before. That, in turn, allowed requests to be processed in parallel and increased memory speed. The smaller die area also made it possible to increase storage capacity.

The increased cell density also helps to combat the faster memory degradation. That problem was tackled head-on, with even greater redundancy in the cell array.

Prototypes of QLC chips were shown last summer, and the first promises of SSDs based on the new technology were made at the beginning of this year. In the summer, almost all drive manufacturers announced that they were ready for mass production and named the new models, their prices, and specifications. SSDs with QLC chips can now be bought. Most models come in M.2 and 2.5" form factors, with capacities of 512 GB, 1 TB, and 2 TB.

Positioning of QLC drives

To begin with, it is fair to admit that drives built on the new QLC technology are categorically unsuitable for serious or critical tasks. The reason is a whole series of technical difficulties that engineers at both the large corporations that invented the technology and their Chinese “followers” have to solve.

For example, on Intel's site the new SSDs are offered only in the segment for mid-range home computers. They are especially justified in low-powered netbooks, which are not used for games or database work and where cost, on the contrary, matters a great deal. Such “typewriters” are becoming increasingly popular. For the Enterprise segment, only drives with MLC and TLC chips are offered.

If we compare the specifications of branded SSDs (there is no point in considering cheap Chinese ones, since cheap controllers ruin every characteristic), the average price of QLC drives is about 20-30% lower than that of MLC drives of the same form factor and capacity.

Access speed. For a model with QLC chips it is up to 1500 MB/s for reading and up to 1000 MB/s for writing. For a model with TLC chips it is 3210 MB/s and 1625 MB/s, respectively. The QLC drive's write speed is about one and a half times lower, and its read speed about two times lower. The difference is significant, but for surfing the internet and editing text this is more than enough.

TBW (Total Bytes Written). A critical parameter that characterizes SSD endurance. It indicates the maximum number of terabytes that can be written to the drive. The higher the TBW, the more durable the drive and the longer it can work without failures. For the 512 GB model of the 760p series (TLC) the rating is 288 TBW, and for the 512 GB 660p (QLC) only 100 TBW: almost a threefold difference.

DWPD (Drive Writes Per Day). This endurance metric indicates how many times per day the entire drive can be overwritten during the warranty period, and is calculated by the formula:

$$\mathrm{DWPD} = \frac{\mathrm{TBW}}{0.512 \times 365 \times 5}$$

where 0.512 is the capacity of the drive in terabytes;

365 is the number of days per year;

5 is the warranty period in years.
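As a quick check of the formula, here is a minimal sketch that plugs in the TBW ratings quoted above (100 and 288 TBW for 512 GB drives, with a 5-year warranty). The function names are illustrative and not taken from any vendor tool.

```python
def dwpd(tbw_tb: float, capacity_tb: float, warranty_years: float = 5) -> float:
    """Drive writes per day: rated TBW spread evenly over the warranty period."""
    return tbw_tb / (capacity_tb * 365 * warranty_years)


def daily_write_budget_gb(tbw_tb: float, capacity_tb: float,
                          warranty_years: float = 5) -> float:
    """Gigabytes that can be written per day without exceeding the rated TBW."""
    return dwpd(tbw_tb, capacity_tb, warranty_years) * capacity_tb * 1000


qlc = dwpd(100, 0.512)   # QLC model, 100 TBW
tlc = dwpd(288, 0.512)   # TLC model, 288 TBW
print(f"QLC DWPD: {qlc:.3f}, TLC DWPD: {tlc:.3f}")
print(f"QLC daily budget: {daily_write_budget_gb(100, 0.512):.1f} GB")
```

With these inputs the QLC drive comes out at roughly 0.1 DWPD and the TLC drive at roughly 0.3, which corresponds to a daily write budget of about 50 GB for the QLC model.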

DWPD is a more objective metric, because the calculation takes into account the period during which the manufacturer undertakes to fix drive problems for free. For the QLC model, DWPD is about 0.1, and for the TLC model about 0.3. In other words, in this example the QLC drive can have about 50 GB written to it every day; that is its normal operating mode. Given that QLC drives offer more capacity than MLC at the same price, the average user of an “internet typewriter” is unlikely to exhaust this endurance.

These two devices vividly illustrate the technical difficulties engineers face, which show up more sharply in QLC than in TLC. In particular, QLC has lower read and write speeds, lower endurance, and a higher WAF (more on that below). Let's take a closer look at the main difficulties and how they are solved.

Access speed

Let's start with one of the features most noticeable to a QLC SSD user: the drop in write speed when the drive's cache fills up. Since QLC access speed is relatively low to begin with, manufacturers try to raise it with caching. The SSD uses part of its own cell array, switched to single-bit mode (SLC), as the cache.

There are several caching schemes. Often a small, fixed part of the drive's own capacity is set aside for the cache: from 2 to 16 GB on average, and up to several tens of gigabytes in some models. The drawback is that under intensive data exchange a small cache can fill up quickly, and the read/write speed will drop sharply.

More technologically advanced companies use controllers that can dynamically switch part of the cells to the fast SLC mode; in this case the cache size depends on the total capacity of the drive and can reach 10% of it. Modern SSDs use both methods: a relatively small static cache is supplemented by a dynamically allocated one that is many times larger. The more free space, the larger the cache and the harder it is to exhaust. Logically, a larger drive has a larger cache, so its dynamic cache works more efficiently.

Dependence of the SLC cache size on the drive's capacity and the free space on it.
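How the cache might shrink as the drive fills up can be sketched with a toy model. Everything here is an assumption for illustration: the 6 GB static cache, the rule that each gigabyte of SLC cache consumes four gigabytes of free QLC space, and the function itself. Only the 10% ceiling comes from the text; real controllers use their own vendor-specific policies.

```python
QLC_BITS_PER_CELL = 4  # a cell in SLC mode stores 1 bit instead of 4


def slc_cache_gb(capacity_gb: float, used_gb: float,
                 static_gb: float = 6.0, max_fraction: float = 0.10) -> float:
    """Toy estimate of the total SLC cache (static + dynamic) in gigabytes.

    Hypothetical model: the dynamic part is limited both by a share of the
    total capacity and by the free space, since caching 1 GB in SLC mode
    occupies cells that would hold 4 GB in QLC mode.
    """
    free_gb = max(capacity_gb - used_gb, 0.0)
    dynamic_limit_gb = capacity_gb * max_fraction
    dynamic_gb = min(free_gb / QLC_BITS_PER_CELL, dynamic_limit_gb)
    return static_gb + dynamic_gb


for used in (0, 256, 400, 512):
    print(f"512 GB drive, {used} GB used: "
          f"cache about {slc_cache_gb(512, used):.1f} GB")
```

In this model an empty 512 GB drive gets the full dynamic cache, while a full one falls back to the static part alone, which matches the qualitative behavior described above.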

Read errors

The added complexity of QLC compared with TLC has also increased the number of read errors. To correct them, the use of ECC (error-correcting code) algorithms became mandatory. With them, the controller corrects almost all read errors on its own. Developing effective correction algorithms is one of the hardest tasks in building QLC drives: it is necessary not only to achieve high correction capability (expressed as the number of corrected bits per 1 KB of data) but also to access the memory cells as rarely as possible in order to preserve their endurance. To this end manufacturers introduce more powerful controllers, but above all they apply heavy scientific and statistical machinery to create and refine the algorithms.

Endurance

The features of the QLC architecture not only reduce reliability but also aggravate the phenomenon of write amplification (WA). “Write multiplication” would be a more accurate name, but “amplification” is the established term.

What is WA? An SSD physically performs many more read and write operations on its cells than the amount of data received from the operating system requires. Unlike traditional HDDs, which have a very small “quantum” of rewritable data, an SSD stores data in fairly large “pages”, usually 4 KB each. There is also the notion of a “block”: the smallest group of pages that can be erased, and therefore rewritten, at once. A block usually contains 128 to 512 pages.

For example, a rewrite cycle in an SSD consists of several operations:

- move the pages from the block to be erased to a temporary storage location,

- erase the space occupied by the block,

- update the temporary copy of the block by adding the new pages,

- write the updated block back to the old location,

- clear the space used for temporary storage.

As you can see, this operation repeatedly reads and erases relatively large amounts of data in several different areas of the drive, even if the operating system only wants to change a few bytes. This seriously increases cell wear. In addition, the “extra” read and write operations significantly reduce the flash memory's effective bandwidth.

The degree of write amplification is expressed by the write amplification factor (WAF): the ratio of the amount of data actually written to flash to the amount the host asked to write. Ideally, when compression is not used, WAF equals 1. Real values depend heavily on various factors, such as the size of the blocks being rewritten and the algorithms used in the controller.
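The rewrite cycle and the WAF ratio described above can be sketched as a naive simulation. This is a worst-case illustration, not how a real controller works: the `Block` class and `rewrite_page` function are hypothetical, the temporary storage is assumed to live in RAM (so it adds no flash writes), and the block size of 256 pages is simply picked from the 128-512 range given in the text.

```python
PAGE_SIZE = 4096        # bytes; "usually 4 KB" per the text
PAGES_PER_BLOCK = 256   # assumed value within the 128-512 range


class Block:
    """A hypothetical flash block: a list of pages plus an erase counter."""

    def __init__(self) -> None:
        self.pages = [b"\x00" * PAGE_SIZE for _ in range(PAGES_PER_BLOCK)]
        self.erase_count = 0


def rewrite_page(block: Block, page_index: int, new_data: bytes) -> int:
    """Naive read-modify-write of one page.

    Returns the number of bytes physically written to flash.
    """
    assert len(new_data) == PAGE_SIZE
    temp = list(block.pages)                 # 1. copy pages to temporary storage
    block.pages = [b""] * PAGES_PER_BLOCK    # 2. erase the block
    block.erase_count += 1
    temp[page_index] = new_data              # 3. update the temporary copy
    block.pages = temp                       # 4. write the block back
    # 5. the temporary copy is released when the function returns
    return PAGES_PER_BLOCK * PAGE_SIZE


def waf(nand_bytes: int, host_bytes: int) -> float:
    """Write amplification factor: flash writes divided by host writes."""
    return nand_bytes / host_bytes


block = Block()
host_bytes = PAGE_SIZE                       # the host changes a single page
nand_bytes = rewrite_page(block, 7, b"\x01" * PAGE_SIZE)
print(f"WAF = {waf(nand_bytes, host_bytes):.0f}")
```

In this deliberately pessimistic model, changing one 4 KB page costs a full block write, so the WAF equals the number of pages per block. Real controllers avoid this by writing updated pages to already erased blocks and remapping addresses.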

And since QLC cells are much more sensitive to the number of rewrite cycles, WAF matters much more for QLC than for TLC and MLC.

What other factors affect WAF in QLC drives?

- Garbage collection. The algorithm looks for partially filled blocks that contain both empty and filled pages and rewrites them so that each block contains only empty or only filled pages. This costs extra writes up front but reduces the number of operations that lead to WA later.

- Wear leveling. Blocks that the system accesses often are regularly swapped with blocks that are less in demand, so that all memory cells in the drive wear evenly. As a result, though, the drive's endurance gradually decreases even if it is used as archival storage.

Here is an example of write amplification caused by the wear leveling and garbage collection mechanisms:

- The error correction mechanism (ECC) also affects WAF. As already mentioned, its contribution to write amplification can be reduced by improving the algorithms, for example with LDPC codes.

- With enough free space on the SSD, some controllers can switch part of the NAND cells to a mode with fewer levels: from QLC to SLC. This greatly speeds up the drive and improves its reliability. But when free space shrinks, the cells are rewritten again in the mode with the maximum number of levels. The more free space on the SSD, the faster and more efficiently it works, provided its controller is sufficiently advanced and supports this function. Keeping some of the most actively used cells in SLC mode increases the overall WAF but reduces wear.
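The garbage collection and wear leveling items above can be illustrated with two simple selection policies. This is a sketch under stated assumptions: `BlockInfo` and both functions are hypothetical, and the greedy "fewest valid pages" and "lowest erase count" rules are common textbook policies, not necessarily what any particular controller implements.

```python
from dataclasses import dataclass


@dataclass
class BlockInfo:
    """Hypothetical per-block bookkeeping kept by a controller."""
    block_id: int
    valid_pages: int
    erase_count: int
    is_free: bool = False


def pick_gc_victim(blocks: list[BlockInfo]) -> BlockInfo:
    """Greedy garbage collection: clean the used block with the fewest
    valid pages, since those pages must be copied elsewhere first."""
    used = [b for b in blocks if not b.is_free]
    return min(used, key=lambda b: b.valid_pages)


def pick_write_target(blocks: list[BlockInfo]) -> BlockInfo:
    """Wear leveling: direct new writes to the least-erased free block."""
    free = [b for b in blocks if b.is_free]
    return min(free, key=lambda b: b.erase_count)


blocks = [
    BlockInfo(0, valid_pages=200, erase_count=10),
    BlockInfo(1, valid_pages=30, erase_count=12),
    BlockInfo(2, valid_pages=0, erase_count=50, is_free=True),
    BlockInfo(3, valid_pages=0, erase_count=8, is_free=True),
]

victim = pick_gc_victim(blocks)
target = pick_write_target(blocks)
print(f"clean block {victim.block_id} (copy {victim.valid_pages} pages), "
      f"write into block {target.block_id}")
```

The pages copied out of the victim block are writes the host never requested, which is exactly how these background mechanisms add to WAF.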

Manufacturers fight WAF growth in QLC with various methods.

One example is over-provisioning (OP): reserving part of the capacity for internal needs, inaccessible to the user.

$$\mathrm{OP} = \frac{\text{physical capacity} - \text{user-available capacity}}{\text{user-available capacity}}$$

The larger the reserved area, the more freedom the controller has and the faster its algorithms work. For example, the difference between “real” and “marketing” gigabytes used to be set aside for OP, that is, the difference between $2^{30}$ = 1 073 741 824 bytes and $10^9$ = 1 000 000 000 bytes, which is 7.37% of the user-available capacity. There are other ways to allocate service space as well. For example, modern controllers can dynamically use all of the drive's currently free space as OP.
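The 7.37% figure follows directly from the OP formula. A minimal check, assuming a hypothetical drive with 512 GiB of physical flash sold as 512 GB:

```python
GIB = 2 ** 30   # "real" binary gigabyte
GB = 10 ** 9    # "marketing" decimal gigabyte


def over_provisioning(physical_bytes: int, user_bytes: int) -> float:
    """OP ratio: reserved capacity relative to user-available capacity."""
    return (physical_bytes - user_bytes) / user_bytes


op = over_provisioning(512 * GIB, 512 * GB)
print(f"OP = {op:.2%}")
```

The ratio does not depend on the drive size, since the same factor of $2^{30} / 10^9$ applies to every gigabyte.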

Approximate dependence of WAF on the size of OP:

Separating static and dynamic data also reduces WAF. The controller determines which data is overwritten often and which is mostly read or never changes, and groups the data blocks on the drive accordingly.

Other tools for reducing WAF in QLC drives include sequential write techniques (very roughly comparable to the familiar HDD defragmentation). The algorithm identifies blocks that likely belong to one large file and do not need processing by the garbage collector. If the operating system issues a command to delete or modify that file, its blocks are erased or overwritten as a whole, without entering the WA cycle, which raises speed and wears the memory cells less. Finally, compressing data before writing and deduplication also help to fight WA.
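The effect of compression and deduplication on the amount of data physically written can be sketched as follows. This is an illustration only: the `write_pages` function and the dictionary used as a store are hypothetical, and SHA-256 with zlib stand in for whatever hashing and compression a real controller might use.

```python
import hashlib
import zlib

PAGE_SIZE = 4096  # bytes


def write_pages(pages: list[bytes], store: dict[bytes, bytes]) -> int:
    """Store each unique page once, compressed.

    Returns the number of bytes physically written.
    """
    written = 0
    for page in pages:
        digest = hashlib.sha256(page).digest()
        if digest in store:
            continue                      # duplicate: nothing new to write
        compressed = zlib.compress(page)  # compress before writing
        store[digest] = compressed
        written += len(compressed)
    return written


store: dict[bytes, bytes] = {}
pages = [b"A" * PAGE_SIZE, b"A" * PAGE_SIZE, b"B" * PAGE_SIZE]
physical = write_pages(pages, store)
host = sum(len(p) for p in pages)
print(f"host wrote {host} bytes, flash received {physical} bytes, "
      f"{len(store)} unique pages stored")
```

When the data compresses well or repeats, the flash receives fewer bytes than the host sent, which is how WAF can fall below 1.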

As you have gathered, the reliability and endurance of QLC drives depend not only on the memory chips used but also on controller performance and, most importantly, on how advanced the algorithms built into the controller are. Many companies, even large ones, buy controllers from firms that specialize in making them. Small Chinese firms use cheap, simple controllers of past generations, guided by price rather than by the quality and novelty of the algorithms. Large companies do not skimp on hardware for their SSDs and choose controllers that give the drive a long life and higher performance. The leaders among SSD controller makers change constantly. But besides sophisticated controllers, firmware algorithms play a huge role; large manufacturers develop these in-house rather than entrusting this important job to third parties.

Conclusions

The main advantage of QLC over TLC- and MLC-based drives is that even more memory fits into the same physical volume. That alone means QLC will not push the earlier technologies out of the market, and still less will it become a competitor to HDDs.

The speed difference between QLC and TLC will be noticeable when running heavy programs and under intensive data exchange. The average user may not notice it, though, because on the class of computers for which QLC drives are recommended, programs spend more time waiting for user input than working with data.

We can safely say that QLC has successfully occupied the niche of inexpensive drives for low-performance computers, where there is no point in overpaying for higher reliability or maximum read and write speeds. In such computers a QLC SSD can be the only drive, holding the system, the necessary programs, and user data. In the enterprise, however, no revolution took place: as before, the more reliable TLC and the slow but undemanding HDD will be preferred.

Technology does not stand still, however. Already this year manufacturers promise to begin the transition to a 7 nm process, and in the longer term, in 2021 and later, to 5 nm and 3 nm. Controller algorithms are being improved, some firms promise “smart” SSDs that will be several times faster in certain specific use cases, and further development of 3D NAND technologies is planned.

So, let's wait a couple of years and see what else manufacturers can offer us.

For more information on Kingston products, visit the company's official website.

Source: https://habr.com/ru/post/439568/