How to monitor a website the easy way

To monitor server health remotely, professionals use dedicated software systems such as Zabbix or Icinga. But if you are a novice owner or administrator of one or two lightly loaded websites, you do not need a large monitoring system. The main question is whether the site serves its users quickly. So you can monitor the site with a simple program running on any computer connected to the Internet.

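Let's write such a script now: the simplest possible monitor of a site's availability and speed. It can run on a home computer, a smartphone, and so on. The program has only two functions:

- it shows on the screen how long your site takes to serve a page to users;

- if the site responds slowly or with errors, it writes the data to a file (a "log", or log file). This data is worth checking from time to time so that problems can be fixed as soon as they begin. We will therefore take care to record these logs in a clear, easy-to-scan form.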

I will describe each step in some detail, so that even a beginner who is only slightly familiar with writing command files (bat and cmd on DOS and Windows, sh on UNIX-like systems) can follow along without much trouble and adapt the script to their own needs. But please do not use this script thoughtlessly: used incorrectly, it may give misleading results and, on top of that, gobble up a lot of traffic.

I will describe a script for the Linux operating system and its use on a home computer. The same principles apply on other platforms. And for those who are just eyeing Linux, this may be one more interesting example of what a simple and powerful tool its scripts are.

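1. Organization first

Let's set up a separate folder for this program and create three files in it. For example, my folder is /home/me/Progs/iNet/monitor (here me is my user name, Progs/iNet is my folder for Internet-related programs, and monitor is the name of this program, from the verb "to monitor", that is, to observe. Since I am the only user of this computer, I keep such files among my personal folders under /home, on a separate disk partition, which preserves them when the system is reinstalled). The folder will contain these files:

- README.txt - a description (in case memory fails): what the program is, background information on it, and so on.

- mon.sh - the program that polls the site.

- server.log - the file where the program records the status of the site. In our case this is just the date, the time and the duration of the site's response (plus additional information if the server answers our request with an error).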

(For convenient editing and, if need be, file recovery, you can put this folder under the Git version control system; I will not describe that here.)

2. Persistent and relaxed

We will run mon.sh at regular short intervals, for example every 60 seconds. For this I used a standard tool provided by the operating system (more precisely, by the desktop environment).

My desktop environment is Xfce, one of the most popular options on Linux. I like Xfce because it lets you customize almost the whole environment to be just the way you find comfortable. At the same time, Xfce is somewhat smaller and faster than the two other well-known environments, GNOME and KDE. (Other options are interesting too: for example, LXDE is even faster and lighter than Xfce, but not yet as rich in features.)

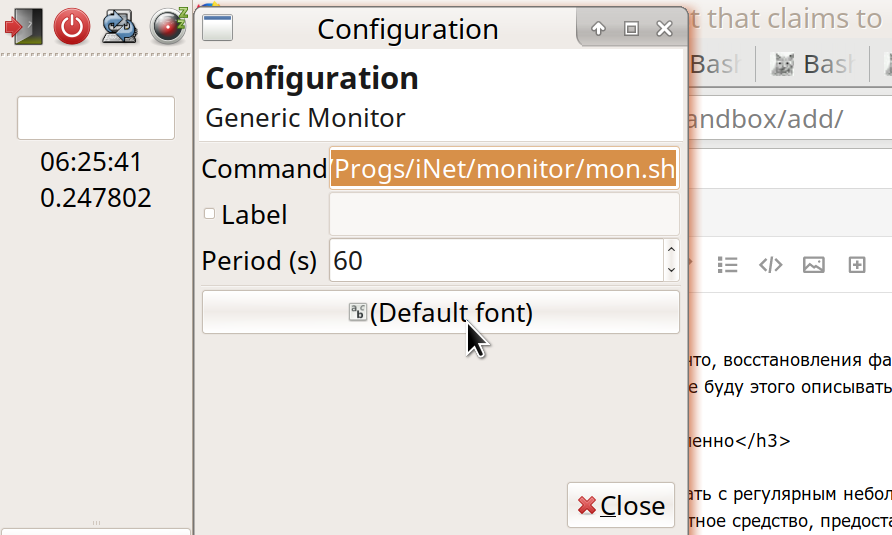

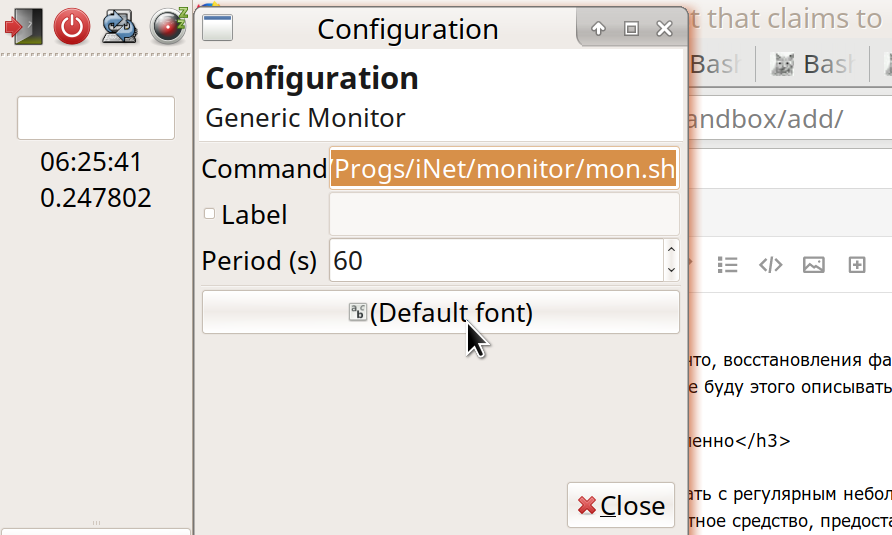

The tool we need, in the case of Xfce, is the "Generic Monitor" panel plug-in. It is usually already installed; if not, the package installer will easily find it as "Genmon" (package: xfce4-genmon-plugin). Once the plug-in is added to the panel, its settings let you specify (1) the command to execute and (2) how often to execute it, in seconds. In my case the command is:

/home/me/Progs/iNet/monitor/mon.sh

(or, equivalently, ~/Progs/iNet/monitor/mon.sh).

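3. When the program is not there yet, the error is already there.

If you have done all these steps (created the files and added the plug-in to the panel), you now see the result of running our program there: an error message saying that the file mon.sh is not a program. To make the file executable, go to our folder and give it permission to run:

- either in the file manager: Properties - Permissions - Allow this file to run as a program;

- or with a command from the terminal: chmod 755 mon.sh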
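A minimal sketch of the terminal route. It runs in a throwaway directory created with mktemp so that it touches nothing real; that directory and the one-line placeholder script are assumptions for illustration, not the article's actual mon.sh.

```bash
# Work in a temporary directory (an assumption for this demo;
# in the article the folder is ~/Progs/iNet/monitor).
dir=$(mktemp -d)
cd "$dir" || exit 1

# Create the three files; mon.sh gets a one-line placeholder body.
touch README.txt server.log
printf '#!/bin/bash\necho "mon.sh works"\n' > mon.sh

# Give the script permission to run: rwx for the owner, r-x for everyone else.
chmod 755 mon.sh

# Now the file can be started directly, as the panel plug-in will do.
out=$(./mon.sh)
echo "$out"
```

Without the chmod line, the last command fails with "Permission denied", which is the same problem the panel plug-in reports.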

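And in the file itself, we write these first lines:

    #!/bin/bash
    # Monitor server responses (run this every 60 seconds):
    #
    info=$(curl -I -o /dev/stdout -w '%{time_total}эяю' --url https://example.ru/ -m 9 -s)
    errr=$(echo $?)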

Instead of "example.ru", substitute the name of the site whose state you will be watching. And if it works over http, put http instead of https.

The line #!/bin/bash means that this is a script to be run by the Bash program (bash is probably the most common script interpreter on Linux). To use another command interpreter, name it there instead of Bash. The other lines that begin with a hash sign (#) are comments.

The first actual line of code is info=$(...), with the curl command and its parameters inside the parentheses. This construction, something=$(command), means "execute the command in parentheses and assign its output to the variable on the left". In this case I called the variable "info". The command in parentheses, curl, sends a request over the network to the specified address and returns the server's response to us. (A friend of mine explained it this way: "like a crane that calls out and gets an answer from some other crane in the sky.") Consider the options:

    curl -I -o /dev/stdout -w '%{time_total}эяю' --url https://example.ru/ -m 9 -s

-I means that we request not the whole page but only its HTTP headers. We do not need the entire page every time just to make sure that the site works. Since we will check the site frequently, it is important to keep the amount of transmitted data as small as possible. This saves traffic, electricity, and load on the server - and on wildlife.

By the way, pay attention to how much spare traffic (unused per month) your hosting plan leaves you. Below you will see how much data is transferred per check, so you can calculate whether your reserve is sufficient; if it is not, the check interval can be increased to 5, 10, or even 30-60 minutes. The picture will then be less complete, and short interruptions may go unnoticed; but in general, when monitoring a site, detecting long-lasting problems matters more than catching single interruptions. Also consider how much traffic you can afford on the computer that makes the requests. In my case, a desktop PC with unlimited traffic, this hardly matters; but for a mobile device or a metered plan it is worth doing the arithmetic and, possibly, increasing the check interval.
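To make the estimate concrete, here is a small back-of-the-envelope calculation. The figure of 500 bytes per response is an assumption (roughly the size of a short set of headers); measure your own server's output and substitute it. Only the response headers are counted, not the request or the connection overhead, so the real traffic will be higher.

```bash
bytes_per_check=500     # assumed size of one header-only response
interval=60             # seconds between checks
days=30                 # length of the billing month

checks_per_day=$(( 24 * 60 * 60 / interval ))
bytes_per_month=$(( bytes_per_check * checks_per_day * days ))

echo "checks per day:  $checks_per_day"      # 1440
echo "bytes per month: $bytes_per_month"     # 21600000, about 21.6 MB
```

Raising interval to 600 (ten minutes) divides both numbers by ten.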

4. Step follows step, except the first step.

Let's go further: -o /dev/stdout means "send the response curl received from the server to such-and-such a file". In this case it is not a file on disk but /dev/stdout, the standard output device. Usually standard output is our screen, where we see the results of a program. But in a script we often redirect standard output for further processing (here we save it in the info variable). From now on we will, more often than not, either store the results of commands in variables or pass them on to the next command in a chain. Chains of commands are built with the pipe operator, written as a vertical bar ("|").
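Both mechanisms can be tried without any network access. The sketch below uses made-up sample text (an assumption for illustration) in place of a server response.

```bash
# Command substitution: run the command inside $(...) and keep its output.
lines=$(printf 'first\nsecond\nthird\n')

# A pipe: echo writes the text to stdout, grep reads it from there
# and keeps only the lines that contain "second".
picked=$(echo "$lines" | grep second)

echo "$picked"
```

Here the outer $(...) captures what the last command in the chain prints, which is the pattern the script uses for all of its variables.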

-w '%{time_total}эяю': here -w means "format this additional information and write it to standard output". Specifically, we are interested in time_total: how long the whole request-response exchange between us and the server took. You probably know that there is a simpler command, ping, for quickly querying a server and getting an answer (a "pong") from it. But ping only checks that the hosting server is alive and how long the signal takes to travel there and back. That shows the best-case access speed, but it does not tell us how quickly the site produces real content. Ping can respond quickly while the site itself is slow or not working at all, because of high load or some internal problem. That is why we use curl and measure the actual time the server takes to answer our request.

(This parameter also lets you judge whether the server is powerful enough for your tasks and whether its users get a comfortable response time. This is a real concern: my sites, for example, slowed down over time on many hosting providers, and I had to look for another host.)

Did you notice the strange letters "эяю" after %{time_total}? I added them as a unique label that will surely never occur in the headers the server sends us (although making assumptions about what will happen and what your users will have is a bad habit and a road to the abyss. Do not do that!.. Or if you do, at least be ashamed of it). With this label we can (hopefully) easily pull the piece of information we need out of the whole heap of lines in the info variable. So curl -w '%{time_total}эяю' (with the other parameters set correctly) will give us something like:

0.215238эяю

This is the number of seconds it took to access the site, plus our label. (Besides this value, what interests us most in the info variable is the status code, or, put simply, the server's response code. Usually, when the server delivers the requested file, the code is 200. When the page is not found, it is the famous 404. When there is an error on the server, it is most often 500-something.)

5. Creative approach - father of self-test

The rest of the parameters for our curl are:

    --url https://example.ru/ -m 9 -s

which means: request this address, and wait at most 9 seconds for a response (you can set less, because hardly any user will wait that long for a site to answer; they will decide much sooner that it is not working). And -s means "silent": curl will not print extra details.

By the way, there is usually little room on the desktop panel, so for debugging it is better to launch the script from a terminal (in its folder, with the command ./mon.sh). And for the panel plug-in we will set a long pause between launches for now, say 3600 seconds.

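For debugging, we can run this curl without the surrounding $( ) and see what it prints. (From this you can also calculate the traffic consumption.) What we see is mostly a set of HTTP headers, such as:

    HTTP/1.1 302 Found
    Server: QRATOR
    Date: Sun, 11 Feb 2019 08:06:57 GMT
    Content-Type: text/html; charset=UTF-8
    Connection: keep-alive
    Keep-Alive: timeout=15
    X-Powered-By: PHP/7.2.14-1+ubuntu16.04.1+deb.sury.org+1
    Location: https://habr.com/ru/
    X-Frame-Options: SAMEORIGIN
    X-Content-Type-Options: nosniff
    Strict-Transport-Security: max-age=63072000; includeSubDomains; preload
    X-Cache-Status: HIT
    0.033113эяю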

The first line of such a response, for example "HTTP/1.1 302 Found", means that the HTTP/1.1 transfer protocol is used and that the server's response code is "302 Found". For another page it could be "301 Moved Permanently", and so on. In what follows, the normal response of my server is "HTTP/2 200". If your server normally responds differently, substitute its response for "HTTP/2 200" in this script.

In the last line of its output, curl reports how many seconds the server's response took, with our label attached.

Interestingly, we could set up the site so that, in reply to these requests (and only these!), it reports additional information about its state, for example in a custom HTTP header: say, the CPU load, the amount of free memory and disk space, and whether the database responds quickly. (For this, of course, the site must be dynamic, that is, requests must be handled by a program written in PHP, Node.js, etc. Another option is to use special software on the server.) But we probably do not need these details yet. The purpose of this script is simply to check the overall health of the site regularly; if problems appear, we will diagnose them by other means. So for now we are writing the simplest script, one that works for any site, even a static one, with no additional configuration on the server. Later the script can be extended if desired; for now, let's lay the foundation.

The line errr=$(echo $?) means: write the output of the command "echo $?" to the errr variable. The echo command prints text to standard output (stdout), and the characters "$?" stand for the exit code left behind by the previous command. So if our curl cannot reach the server, it returns a non-zero exit code, and we will learn about it by checking the errr variable.
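The behaviour of $? can be checked offline. In the sketch below, the standard command false (which always fails with code 1) stands in for a failing curl; that substitution is an assumption made for the demo. It also shows that a plain assignment from $? yields the same value as the echo form.

```bash
# "false" does nothing and exits with code 1, like a command that failed.
out=$(false)
errr=$(echo $?)      # the form used in this article
echo "via echo: $errr"

out=$(false)
direct=$?            # the same value, read without the extra echo
echo "direct:   $direct"
```

In both cases the assignment out=$(...) itself passes on the exit code of the command inside the parentheses, which is why $? on the next line still describes that command.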

6. Expediency

Here I want to make a small digression from technical matters, to make the text more interesting to read. (If you disagree, skip three paragraphs.) I will put it this way: all human activity is expedient in its own way. Even when a person deliberately beats his forehead against a wall (or the floor... or the keyboard...), this has its own expediency. For example, it gives an outlet to emotional energy, maybe not in the "best" way, but as best he can. (And the very notion of "best" is meaningless from the standpoint of this moment in this place, because better and worse are possible only in comparison with something else.)

I am writing this text now, instead of things that seem more important to me. Why? First of all, to sum up, review and mentally sort out what I have learned about writing scripts over these two days... To unload it from RAM and put it into storage. Secondly, it is a way of resting a little... Thirdly, I hope that this thoroughly chewed explanation will be useful to someone else (and perhaps, someday, to me) as a reminder on the topic of scripts and server requests: maybe not the best one, but a clear one.

Someone, perhaps, will correct me on something, and the result will be even better. Someone will pick up a piece of useful information. Is it expedient? Perhaps... it is what I can do and am doing now. Tomorrow, maybe, I will have slept and will re-evaluate today's actions... but even that re-evaluation will yield something useful for the rest of my life.

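So, once the request has been executed, we have two pieces of data:

- the main block of information from the server (if, of course, a response arrived), which we stored in the info variable;

- the exit code of the curl command (0 if there were no errors), which we stored in the errr variable.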

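It is precisely because both results will be needed, the info block and the error code, that I stored them in variables instead of passing curl's output straight to other commands through a pipe. But now it is time for the script to climb through the pipes:

    code=$(echo "$info" | grep HTTP | grep -v 'HTTP/2 200')
    date=$(echo "$info" | grep -i 'date:')
    dlay=$(echo "$info" | grep эяю | sed -e 's/эяю//')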

Each of these lines fills one more variable, saving the result of executing commands. And in each case it is not a single command but a chain. The first link is the same everywhere: echo "$info" writes to standard output (stdout) the same block of information that we received from curl and saved. The pipe then carries this stream to the grep command, which selects from all the lines only those that match the pattern.

As you can see, in the first case we select the line containing "HTTP". This line, pulled out of the heap, is passed further along the pipe to the command grep -v 'HTTP/2 200'. Here the -v option inverts the selection: it filters out the lines in which the pattern is found. As a result, the code variable contains the line with the server's response code (such as "HTTP/1.1 302 Found"), but only if it is not 'HTTP/2 200'. In this way I filter out the line my site sends in the normal case and keep only the "anomalous" responses. (If your server's normal response is different, substitute it there.)

Similarly, in the date variable we store the line in which the server reported its current date and time. It looks something like "date: Sun, 11 Feb 2019 08:06:57 GMT" (usually in the GMT time zone, also known as UTC). We need the date in order to write it (but only once a day!) to our log file, server.log (the "server status log"). We will also show this time on the screen. We could log the date and time of our own computer instead, but for convenience we take the server's, since it sends them anyway.

Our third variable, dlay (from the English word "delay"), is filled in a similar way. Using our label "эяю", we select the line that holds the time spent waiting for the server's response. With the sed command we delete the label, which is no longer needed, and save the result.
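To see the three chains at work without touching the network, one can feed them a stored response. In this sketch the info variable is filled by hand with a shortened sample built from values that appear in this article; in the real script it comes from curl.

```bash
# A hand-made stand-in for curl's output (assumption for this demo).
info='HTTP/1.1 302 Found
Server: QRATOR
Date: Sun, 11 Feb 2019 08:06:57 GMT
Location: https://habr.com/ru/
0.033113эяю'

# The status line, unless it is the normal 'HTTP/2 200'.
code=$(echo "$info" | grep HTTP | grep -v 'HTTP/2 200')
# The header with the server's date and time (case-insensitive match).
date=$(echo "$info" | grep -i 'date:')
# The line carrying our label, with the label stripped off.
dlay=$(echo "$info" | grep эяю | sed -e 's/эяю//')

echo "code=$code"
echo "date=$date"
echo "dlay=$dlay"
```

One difference from live data: header lines received over the network end with a carriage return, which this sample lacks. If that stray character gets in the way, passing the text through tr -d '\r' removes it.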

Now we can print these variables for verification, for example by temporarily adding echo lines for errr, code, date and dlay to our script. In the normal case the output shows, in this order: the curl error code, which is zero (meaning curl worked fine); the server status code, which is empty (because the response was the normal one); the date and time; and the duration of the response. What remains is to display the right things on the screen and, when necessary, to write them to the file.
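A minimal sketch of such a check. The values are set by hand to mimic a normal response (an assumption for the demo; in mon.sh they come from curl and the pipes), and the labels in front of each value are my own addition for readability.

```bash
# Sample values as they would look after a normal response.
errr=0
code=''
date='date: Sun, 11 Feb 2019 08:06:57 GMT'
dlay='0.033113'

# Temporary debugging output; remove these lines once the script works.
echo "errr: $errr"
echo "code: $code"
echo "date: $date"
echo "dlay: $dlay"
```

The empty value after "code:" is the expected picture: it means the status line was the normal one and was filtered out.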

This is the most interesting part: what to record, under what conditions, and how?

So as not to bore you with more details, I will be brief (there are plenty of good references for all these commands on the Internet). Brevity, by the way, is one of the main goals for this log as well. A short log is convenient to scan, and it will never take up much disk space (unlike other logs, which grow by megabytes per day).

First, let's make sure that the date is written to the log only once a day, not on every line. To do this, we split our date variable into the current date (curr) and the time (time).
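One possible way to do the split, given as a sketch rather than the script's actual lines: it assumes the header has the form shown earlier, with fields separated by single spaces, and uses cut to pick them.

```bash
date='date: Sun, 11 Feb 2019 08:06:57 GMT'   # sample header line

# Space-separated fields: 1 is "date:", 2-5 are the calendar date,
# 6 is the time of day, 7 is the time zone.
curr=$(echo "$date" | cut -d ' ' -f 2-5)
time=$(echo "$date" | cut -d ' ' -f 6)

echo "curr=$curr"
echo "time=$time"
```

The field positions stay stable because HTTP dates pad the day of the month to two digits.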

We also read the lines containing dates from the log file and take the last of those dates (prev).
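A sketch of how prev could be obtained. The log layout used here is an assumption: each day opens with a date line such as "Sun, 11 Feb 2019", and the lines after it start with the time of day. A temporary file stands in for server.log.

```bash
log=$(mktemp)       # temporary stand-in for server.log

# Assumed log layout: a date line, then entries that start with a time.
cat > "$log" <<'EOF'
Sat, 10 Feb 2019
23:59:01 0.412
Sun, 11 Feb 2019
00:00:02 0.231
EOF

# Date lines start with a three-letter weekday and a comma; keep the last one.
prev=$(grep -E '^[A-Z][a-z][a-z], ' "$log" | tail -n 1)

echo "prev=$prev"
rm -f "$log"
```

If the log is empty, grep finds nothing and prev stays empty, so it will differ from any real date.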

If the current date (curr) differs from the previous one (prev, taken from the file), then a new day has begun (or, say, the log file was empty), so we write the new date to the file. At the same time we record the current information: the time, the delay in receiving the site's response, and the site's response code (if it is not the normal one).
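The new-day check might look like the sketch below. The sample values and the layout of the log line (time, delay and status code separated by spaces) are assumptions for illustration; a temporary file stands in for server.log.

```bash
log=$(mktemp)                      # temporary stand-in for server.log
echo 'Sat, 10 Feb 2019' > "$log"   # the log currently ends on the previous day

prev='Sat, 10 Feb 2019'            # sample values, assumed for this demo
curr='Sun, 11 Feb 2019'
time='00:00:02'
dlay='0.231'
code=''                            # empty: the response was the normal one

if [ "$curr" != "$prev" ]; then
    echo "$curr" >> "$log"                 # the date, once per day
    echo "$time $dlay $code" >> "$log"     # the first measurement of the day
fi

cat "$log"
```

The >> operator appends to the file instead of overwriting it, so earlier days stay in the log.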

This helps with orientation: it shows how fast the site was when a given day began. Otherwise, let's not clutter the file with unnecessary records. We will, of course, make a record whenever we receive an unusual server status code.
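Since code is empty for a normal response, the test reduces to "is the variable non-empty?". A sketch with assumed sample values and a temporary file in place of server.log:

```bash
log=$(mktemp)                 # temporary stand-in for server.log
time='08:06:57'               # sample values, assumed for this demo
dlay='0.033113'
code='HTTP/1.1 302 Found'     # would be empty for the normal response

# -n is true when the string is not empty, i.e. the status was unusual.
if [ -n "$code" ]; then
    echo "$time $dlay $code" >> "$log"
fi

cat "$log"
```

The quotes around "$code" matter: without them an empty value would make the test malformed.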

We also make a record when the server takes longer than usual to respond. My site usually answered in 0.23-0.25 seconds, so I log responses that took longer than 0.3 seconds.
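The shell's own test command compares only whole numbers, so a fractional threshold needs outside help. The sketch below delegates the comparison to awk; this is one possible technique, not necessarily the one the original script uses, and the helper name is_slow is hypothetical.

```bash
limit=0.3     # threshold in seconds, as in the article

# Succeeds (exit status 0) when the delay in $1 exceeds the limit.
is_slow() {
    awk -v d="$1" -v max="$limit" 'BEGIN { exit !(d > max) }'
}

# Try it on a typical delay and on a slow one.
for dlay in 0.231 0.452; do
    if is_slow "$dlay"; then
        echo "$dlay slow"
    else
        echo "$dlay ok"
    fi
done
```

In mon.sh the body of the "slow" branch would append a line to server.log instead of printing.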

Finally, once an hour I simply record the time received from the server, as a sign that it is alive and, at the same time, as a kind of markup for the file.
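One way to get an hourly mark is to log the first check of every hour. The sketch below compares the hour of the current time with the hour of the last time-stamped line in the log. Both this mechanism and the log layout are assumptions, and a temporary file stands in for server.log.

```bash
log=$(mktemp)                        # temporary stand-in for server.log
printf '07:59:58 0.412\n' > "$log"   # the last entry was made in hour 07

time='08:00:03'                      # sample value, assumed for this demo

hour=$(echo "$time" | cut -c 1-2)
# Hour of the last logged line that starts with a time of day.
last=$(grep -E '^[0-9][0-9]:' "$log" | tail -n 1 | cut -c 1-2)

if [ "$hour" != "$last" ]; then
    echo "$time" >> "$log"           # hourly mark: the server is alive
fi

cat "$log"
```

With a 60-second check interval this adds at most one short line per hour.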

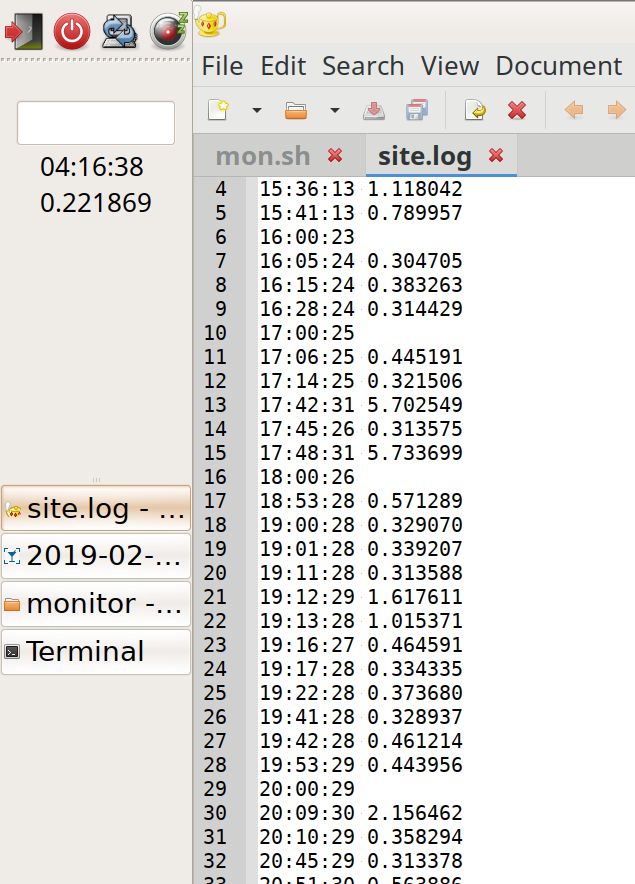

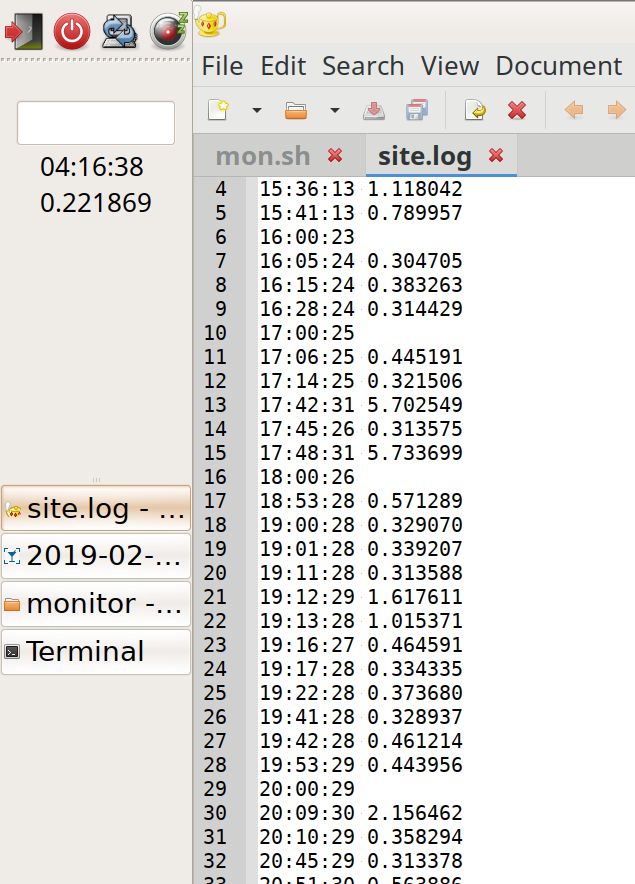

We end up with a file in which the hourly entries act as markup: at a glance, without reading, you can see when the load was higher or lower (the more entries per hour, the higher the load).

And finally, we display the information on the screen. Also, if curl failed, we print and log a message about it (and at the same time run ping and log its result, to check whether the server is alive at all). In the ping command, use the real IP address of your site.
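A sketch of this last step. The function name report, the message texts and the log layout are hypothetical, and 192.0.2.1 is a placeholder address from the range reserved for documentation; replace it with your site's IP. Only the normal path is exercised here, so the example makes no network requests.

```bash
log=$(mktemp)        # temporary stand-in for server.log
ip=192.0.2.1         # placeholder address; put your site's real IP here

# $1 is curl's exit code, $2 is the measured delay.
report() {
    if [ "$1" -ne 0 ]; then
        # Show the failure on the screen and append it to the log.
        echo "curl error $1" | tee -a "$log"
        # One echo request, to see whether the host answers at all.
        if ping -c 1 "$ip" > /dev/null 2>&1; then
            echo "ping ok" >> "$log"
        else
            echo "ping failed" >> "$log"
        fi
    else
        echo "$2"    # normal case: just show the delay on the panel
    fi
}

report 0 0.231
```

In mon.sh the call would be report "$errr" "$dlay"; whatever the script prints to standard output is what the panel plug-in displays.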

The result of the work: on the left you can see the time in UTC and the site's current response time. On the right is the log: even when scrolling through it quickly, you can see at what times the load was higher or lower. You can also see abnormally slow responses (peaks, although it is not yet clear where they come from).

That's all. The script has turned out simple and crude, and it can be improved: there is room to work on optimization, portability, better alerts and display, and handling of proxies and caches...

But even in this form, the program can probably give you an idea of the state of your site. And may that site be wisely expedient, useful to people and to all creatures!

P.S. I have fixed a few small slips in the text: the -e option is not needed in these grep commands, since each uses a single plain pattern; and the -i option was added in one place so that the comparison is case-insensitive.

Let's write such a script now - the simplest monitoring of the availability and speed of the site. This program can be run on a home computer, smartphone, and so on. The program has only two functions:

- shows on the screen the time during which your site gives users a page,

- in case of slow website responses or errors, the program writes data to a file (“log”, or log file). This data is worth checking from time to time to correct problems when they begin. Therefore, we will take care of recording these logs in a visual, easy-to-view form.

I will describe each step in some detail, so that even a beginner, who is only slightly familiar with writing command files (bat and cmd on DOS and Windows systems, sh on UNIX-like systems), figured out without any particular problems and could adapt the script to his needs. But please do not use this script thoughtlessly, because if used incorrectly, it may not give proper results and, in addition, gobble up a lot of traffic .

I will describe a script for an operating system such as "Linux" and its use on a home computer. By the same principles, this can be done on other platforms. And for those who are just eyeing the possibilities of Linux, another example might be interesting, which simple and powerful tool are its scripts.

1. Organization first

Let's get a separate folder for this program and create 3 files in it. For example, I have a folder / home / me / Progs / iNet / monitor (here me is the name of my user, Progs / iNet is my folder for programs related to the Internet, and monitor is the name of this program, from the word monitor, that is , observe. As I am the only user of this computer, I keep such files among personal folders (/ home), in a separate disk partition, which allows you to save them when you reinstall the system). In this folder there will be files:

- README.txt - here is a description (in case of sclerosis): what kind of program, background information to it, etc.

- mon.sh - there will be a program that polls the site.

- server.log - here it will record the status of the site. In our case, it’s just the date, time and duration of the site’s response (plus additional information if the server responds to our request with an error).

(For convenience of editing and, if that, file recovery, you can include this folder in the Git version control system; here I will not describe this).

2. Persistent and relaxed

We will run the mon.sh file at regular short intervals, for example, 60 seconds. I used for this the standard tool provided by the operating system (more precisely, the desktop environment).

My desktop environment is Xfce. This is one of the most popular options for Linux. I like Xfce because it allows you to almost completely customize the whole environment - just the way you feel comfortable. At the same time, Xfce is somewhat smaller and faster than the other two well-known systems - Gnome and KDE. (Other options are also interesting - for example, the LXDE environment is even faster and easier than Xfce, but not as rich in features as yet).

The tool we need, in the case of Xfce, is a plug-in for the “Generic Monitor” working panel. It is usually already installed, but if not, the Installer will easily find it: “Genmon” (description: xfce4-genmon-plugin ). This is a plugin that can be added to the panel and set it in the settings: (1) the command being executed and (2) the frequency of its execution in seconds. In my case, the command:

/home/me/Progs/iNet/monitor/mon.sh

(or, equivalently, ~ / Progs / iNet / monitor / mon.sh).

3. When the program is not there yet, the error is already there.

If you have done all these steps now - you have created the files and launched the plugin on the panel - then you see the result of running our program there (error message: the mon.sh file is not a program). Then, to make the file executable, go into our folder and give it permission to run -

- or file manager: Properties - Permissions - Allow to run as a program (Properties - Permissions - Allow this file to run as a program);

- or a command from the terminal: chmod 755 mon.sh

And in the file itself, we write these first lines:

#!/bin/bash # Monitor server responses (run this every 60 seconds): # info=$(curl -I -o /dev/stdout -w '%{time_total}эяю' --url https://example.ru/ -m 9 -s) errr=$(echo $?) Instead of "example.ru" substitute the name of your site, the state of which you will be watching. And, if it works on http, put http instead of https. The line #! / Bin / bash means that it is a script to run by the Bash program ( bash is probably the most common script to run on Linux). To work through another command interpreter, it is indicated instead of Bash. The remaining lines with a sharp first are comments. The first actual line of code is info = $ () and in these brackets the curl command with parameters. Such a construction - something = $ (something) - means “execute the command in brackets and assign the result to a variable on the left”. In this case, I called the variable "info". This command in parentheses - curl (in the jargon called by some "Kurl") - sends a request to the network at the specified address and returns to us the server response. (My friend said, explaining: “How the crane grumbles — and gets an answer from some other crane in the sky”). Consider the options:

curl -I -o /dev/stdout -w '%{time_total}эяю' --url https://example.ru/ -m 9 -s -I means that we do not request the whole page, but only its "HTTP headers". We do not need the entire page every time to make sure that the site works. Since we will check the site frequently, it is important to keep the size of the transmitted data as small as possible. This saves both traffic, and electricity, and the load on the server - and on wildlife.

By the way, pay attention to how much spare traffic you have (not used per month) on the hosting. Below you will see how much data is being transferred and you can calculate if you have enough reserves; if anything, the site verification period can be increased to 5, 10, or even 30-60 minutes. Although in this case the picture will not be so complete - and minor interruptions may go unnoticed; but, in general, when monitoring a site, it is more important to detect long-term problems than single interruptions. Also, what traffic can you afford on the calling computer? In my case - a desktop PC with unlimited traffic - this is not so important; but for a mobile device or a tariff with limits it is worth counting and, possibly, increasing the check intervals.

4. Step follows step, except the first step.

Let's go further: -o / dev / stdout means "the answer received by Kurl from the server should be sent to such and such file". And in this case, it is not a file on disk, but / dev / stdout - Standard output device. Usually “Standard Output Device” is our screen, where we can see the results of the program. But in the script we often send this “standard output” for further processing (now we save it into the info variable). And then we will, more often than not, either send the results of the commands to variables, or pass them on to the next commands in a chain. To create chains of commands, use the pipe operator ("Pipe"), depicted as a vertical bar ("|").

-w '% {time_total} eyayu' : here -w means "format and issue such additional information to the standard output device". Specifically, we are interested in time_total - how much time it took the transmission of the request-response between us and the server. You probably know that there is a simpler command, ping (“Ping”), to quickly query the server and get an answer from it, “Pong.” But Ping only checks that the hosting server is alive, and the signal goes back and forth for so many times. This shows the maximum access speed, but still does not tell us how quickly the site produces real content. Ping can work quickly, and at the same time the site can slow down or not work at all - due to high load or some internal problems. Therefore, we use exactly Kurla and get the actual time the server issues our content.

(And according to this parameter, you can judge whether the server is productive enough for your tasks, if its users have a convenient response time. It’s not a problem - for example, my sites on many hosts slow down over time, and you had to look for another hosting).

Did you notice strange letters "eya" after {time_total}? I added them as a unique label, which surely will not be in the headers sent to us from the server (although you will have no idea what will happen and what your users will have - bad ways and the road to the abyss. Do not do that! .. Or when you do , at least be ashamed of you). For this label (hopefully) we can then easily pull out the piece of information we need from a whole heap of lines in the info variable. So, curl -w '% {time_total} eyayu' (with the other parameters correct) will give us something like:

0.215238

This is the number of seconds it took to access the site, plus our label. (In addition to this parameter, in the info variable we will be interested mainly in the "Status code" - the status code, or, in a simple way, the server response code. Usually, when the server issues the requested file, the code is "200". When the page is not found , this is the famous 404. When there are errors on the server, it is, most often, 500 with something).

5. Creative approach - father of self-test

The rest of the parameters for our curl are:

--url https://example.ru/ -m 9 -s which means: to request such an address; The maximum time to wait for a response is 9 seconds (you can set less, because a rare user will wait for a response from the site for so long, he will quickly find the site not working). And "-s" means silent, that is, curl will not tell us the extra details.

By the way, there is usually little space on the desktop panel, so to debug a script, it is better to launch it from the terminal (in its folder, with the command ./mon.sh). And for our panel plug-in, we will put a long pause between command launches for now - say, 3,600 seconds.

For debugging, we can run this curl without framing parentheses and see what it produces. (From this and calculate the consumption of traffic). We will see, basically, a set of HTTP headers, such as:

HTTP/1.1 302 Found Server: QRATOR Date: Sun, 11 Feb 2019 08:06:57 GMT Content-Type: text/html; charset=UTF-8 Connection: keep-alive Keep-Alive: timeout=15 X-Powered-By: PHP/7.2.14-1+ubuntu16.04.1+deb.sury.org+1 Location: https://habr.com/ru/ X-Frame-Options: SAMEORIGIN X-Content-Type-Options: nosniff Strict-Transport-Security: max-age=63072000; includeSubDomains; preload X-Cache-Status: HIT 0.033113эяю The first line here is the “HTTP / 1.1 302 Found” - which means: the “HTTP / 1.1” data transfer protocol is used, the server response code is “302 Found”. When requesting another page, this could be “301 Moved Permanently”, etc. In the following example, the normal response of my server is “HTTP / 2,200”. If your server normally responds differently, substitute this response instead of “HTTP / 2 200” in this script.

The last line, as we see, Kurl gives out, in how many seconds we got the server response, with our assigned label.

Interesting: we can customize the site so that it reports on these requests (and only on them!) Additional information about its state - for example, in the “self-made” HTTP header. Say, what is the load on the site processors, how much free memory and disk space it has, and whether the database is fast. (Of course, for this site should be dynamic, that is, requests should be processed by the program - in PHP, Node.JS, etc. Another option is to use special software on the server). But, perhaps, we do not really need these details yet. The purpose of this script - just regularly monitor the overall health of the site. In case of problems, we will already deal with diagnostics by other means. So now we are writing the simplest script that will work for any site, even static, and without additional settings on the server. In the future, if desired, it will be possible to expand the capabilities of the script; for now let's do the foundation.

The string errr = $ (echo $?) Means: write the result of the “echo $?” Command to the errr variable. The echo command means displaying some text on the Standard Output Device (stdout), and the characters "$?" - This is the current error code (the remainder of the previous command). So, if our curl suddenly cannot reach the server, it will produce a non-zero error code, and we will find out about it by checking the errr variable.

6. Expediency

Here I want to make a small digression from technical matters, to make the text more interesting to read. (If you disagree, skip three paragraphs.) I will put it this way: all human activity is expedient in its own way. Even when a person deliberately bangs his forehead against a wall (or the floor... or the keyboard...), there is a point to it. For example, it gives an outlet to emotional energy, maybe not in the “best” way, but as best he can. (And the very concept of “best” is meaningless from the point of view of this moment in this place, because better and worse exist only in comparison with something else.)

Why am I writing this text now, instead of attending to matters that seem more important? First, to sum up, review and sort out in my head what I learned about writing scripts during these two days... to unload it from RAM and put it into storage. Second, this is a way for me to rest a little... Third, I hope that this thoroughly chewed explanation will be useful to someone else (and perhaps, someday, to me), as a reminder on the topic of scripts and server requests that may not be the best, but is at least clear.

Someone may correct me on something, and the result will be even better. Someone will pick up a piece of useful information. Is it expedient? Perhaps... it is what I can do and am doing now. Tomorrow, maybe, I will catch up on sleep and re-evaluate today's actions... but even that re-evaluation will yield something useful for the rest of my life.

So, once the request has been executed, we have two pieces of data:

- the main block of information from the server (if a response arrived at all), saved in the info variable;

- the exit code of the curl command (0 if there were no errors), saved in the errr variable.

Both results will be needed, info and the exit code, which is exactly why I stored them in variables instead of piping curl's output straight into other commands. But now it is time for this script to crawl through the pipes:

    code=$(echo "$info" | grep HTTP | grep -v 'HTTP/2 200')
    date=$(echo "$info" | grep -i 'date:')
    dlay=$(echo "$info" | grep эяю | sed -e 's/эяю//')

Each of these lines sets one more variable, saving the result of running some commands. And in each case it is not a single command but a chain. The first link is the same everywhere: echo "$info" sends to standard output (stdout) the same block of information that we received from curl and saved. The pipe passes this stream on to the grep command, which selects only the lines that match a pattern. In the first case we select the line containing “HTTP”. This line, pulled out of the heap, travels further down the pipe to the command grep -v 'HTTP/2 200'. The -v option inverts the match: it discards the lines in which the pattern is found. As a result, the code variable will hold the line with the server's response status (something like “HTTP/1.1 302 Found”), but only if it is not 'HTTP/2 200'. This way I filter out the status my site sends in the normal case and keep only the “anomalous” responses. (If your server normally responds differently, substitute its response there.)
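The status filter can be tried without touching the network. The sketch below feeds two canned header blocks (invented for the illustration, not real server output) through the same grep chain. The function name `anomalous_status` and the variable names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: prints the status line unless it is the expected one.
# $1 = header block, $2 = status line regarded as normal
anomalous_status() {
    printf '%s\n' "$1" | grep HTTP | grep -v "$2"
}

# Canned header blocks; header lines end with CR LF, as in a real response.
ok_headers=$'HTTP/2 200 \r\ndate: Mon, 11 Feb 2019 12:46:18 GMT\r\n'
moved_headers=$'HTTP/1.1 302 Found\r\nLocation: https://example.ru/\r\n'

code_ok=$(anomalous_status "$ok_headers" 'HTTP/2 200')
code_moved=$(anomalous_status "$moved_headers" 'HTTP/2 200')

# The captured line keeps its trailing carriage return; strip it for display.
printf 'normal:   [%s]\n' "$code_ok"
printf 'redirect: [%s]\n' "${code_moved%$'\r'}"
```

For the normal response the variable stays empty, and for the redirect it holds the whole status line, which is the behaviour the log relies on.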

Similarly, the date variable receives the line in which the server reported its current date and time. It looks something like “date: Mon, 11 Feb 2019 08:06:57 GMT” (usually in the GMT time zone, also known as UTC). We need to write the date (but only once a day!) to our log file, the “server status log”, site.log. We will also show this time on the screen. The log could just as well use the date and time of your own computer, but it is convenient to take the server's, since it sends them anyway.

The third variable, dlay (from the English word delay), is filled in the same way. Using our made-up marker “эяю”, we select the line that holds the time spent waiting for the server's response. The marker is no longer needed, so we delete it with the sed command and save the result.
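A small offline sketch of the date and delay extraction. The text in `info` is canned and only imitates the shape that curl output is assumed to have with these options: headers ending in CR LF, an empty line, then the timing followed by the marker.

```shell
#!/bin/bash
# Canned text imitating curl -I output with -w '%{time_total}эяю' appended.
info=$'HTTP/2 200 \r\ndate: Mon, 11 Feb 2019 12:46:18 GMT\r\n\r\n0.236549эяю'

date=$(printf '%s\n' "$info" | grep -i 'date:')
dlay=$(printf '%s\n' "$info" | grep 'эяю' | sed -e 's/эяю//')

# Header lines keep their trailing carriage return; strip it for display.
date=${date%$'\r'}

printf '%s\n' "$date"
printf 'dlay: %s\n' "$dlay"
```

The trailing carriage return is easy to overlook: it is invisible on the screen, but it is part of the captured header line and has to be accounted for in any later pattern that anchors on the end of the line.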

Now, if we print these variables as a check, for example by adding these lines to the script:

    printf 'errr: %s\n' "$errr"
    printf 'code: %s\n' "$code"
    printf '%s\n' "$date"
    printf 'dlay: %s\n' "$dlay"

the output should look something like this:

    errr: 0
    code:
    date: Mon, 11 Feb 2019 12:46:18 GMT
    dlay: 0.236549

In summary: the curl exit code is zero (so the request worked fine); the server status line was not recorded (because it was the normal one); then come the date and time and the duration of the response. What remains is to display the right things on the screen and, when necessary, write them to the file.

This is the most interesting part: what to record, under which conditions, and how?

7. Cunning conclusions

So as not to bore you with more details, I will be brief (there are plenty of good references for all these commands on the Internet). Brevity, by the way, is also one of the main goals for the log itself. It will then be easy to look through and will never take up much disk space (unlike other logs, which grow by megabytes per day).

First, let's make sure the date is written to the log only once, not on every line. To do this, we split our date variable into the current date (curr) and the time (time):

    curr=$(echo "$date" | sed -e 's/\(20[0-9][0-9]\).*$/\1/')
    time=$(echo "$date" | sed -e 's/^.*\ \([0-9][0-9]:.*\)\ GMT\r$/\1/')

We also read the lines with dates from the log file and take the last one (prev):

    prev=$(cat /home/me/Progs/iNet/monitor/site.log | grep -e 'date:' | tail -1)

If the current date (curr) is not equal to the previous one (prev, from the file), a new day has begun (or, say, the log file was empty); in that case we write the new date to the file:

    if [[ $curr != "$prev" ]]; then
      printf '%s\n' "$curr" >>/home/me/Progs/iNet/monitor/site.log

... and along with it we record the current data: the time, the delay in receiving the response, and the site's response status (if it is not the normal one):

      printf '%s %s %s\n' "$time" "$dlay" "$code" >>/home/me/Progs/iNet/monitor/site.log

This gives a point of reference: the day began with such-and-such a speed of the site. In the remaining cases we will not clutter the file with unnecessary records. We will certainly make a record if we received an unusual server status:

    elif [[ -n $code ]]; then
      printf '%s %s %s\n' "$time" "$dlay" "$code" >>/home/me/Progs/iNet/monitor/site.log

We also make a record if the server took longer than usual to respond. My site usually answered in 0.23-0.25 seconds, so I log responses that took longer than 0.3 seconds:

    elif (( $(echo "$dlay > 0.3" | bc -l) )); then
      printf '%s %s\n' "$time" "$dlay" >>/home/me/Progs/iNet/monitor/site.log

Finally, once an hour I simply record the time received from the server, as a sign that it is alive and, at the same time, as a kind of markup for the file:

    else
      echo "$time" | grep -e :00: | cat >>/home/me/Progs/iNet/monitor/site.log
    fi

The result is a file in which the hourly entries serve as markup and let you see at a glance, without reading, when the load was higher or lower (the more entries per hour, the higher the load):

    19:42:28 0.461214
    19:53:29 0.443956
    20:00:29
    20:09:30 2.156462
    20:10:29 0.358294
    20:45:29 0.313378
    20:51:30 0.563886
    20:54:30 0.307219
    21:00:30 0.722343
    21:01:30 0.310284
    21:09:30 0.379662
    21:10:31 1.305779
    21:12:35 5.799455
    21:23:31 1.054537
    21:24:31 1.230391
    21:40:31 0.461266
    21:42:37 7.140093
    22:00:31
    22:12:37 5.724768
    22:14:31 0.303500
    22:42:37 5.735173
    23:00:32
    23:10:32 0.318207
    date: Mon, 11 Feb 2019
    00:00:34 0.235298
    00:01:33 0.315093
    01:00:34
    01:37:41 5.741847
    02:00:36
    02:48:37 0.343234
    02:56:37 0.647698
    02:57:38 1.670538
    02:58:39 2.327980
    02:59:37 0.663547
    03:00:37
    03:40:38 0.331613
    04:00:38
    04:11:38 0.217022
    04:50:39 0.313566
    04:55:45 5.719911
    05:00:39

And finally, we display the information on the screen. In addition, if curl failed, we print and log a message about it (and also run ping and log its output, to check whether the server is alive at all):

    printf '%s\n%s\n%s' "$time" "$dlay" "$code"
    if (( $errr != 0 )); then
      date >>/home/me/Progs/iNet/monitor/site.log
      date
      printf 'CURL Request failed. Error: %s\n' "$errr" >>/home/me/Progs/iNet/monitor/site.log
      printf 'CURL Request failed. Error: %s\n' "$errr"
      pung=$(ping -c 1 178.248.237.68)
      printf 'Ping: %s\n----\n' "$pung" >>/home/me/Progs/iNet/monitor/site.log
      printf 'Ping: %s\n' "$pung"
    fi

Replace the IP address in the ping command with the real IP address of your site.
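The logging rules of this section can be exercised offline before pointing the script at a real site. The sketch below is not the script itself. It wraps the same chain of conditions in a hypothetical function, `log_lines`, that prints what would be appended to the log instead of writing to a file. Two substitutions are made for the sake of a self-contained example: awk does the floating-point comparison in place of bc, and a shell pattern match replaces the grep for ":00:". All sample values are invented.

```shell
#!/bin/bash
# Hypothetical helper: prints the line(s) that would be appended to the log.
# Arguments: curr prev time dlay code [threshold in seconds, default 0.3]
log_lines() {
    local curr=$1 prev=$2 time=$3 dlay=$4 code=$5 limit=${6:-0.3}
    if [[ $curr != "$prev" ]]; then
        # New day: the date line, then the first measurement of the day.
        printf '%s\n' "$curr"
        printf '%s %s %s\n' "$time" "$dlay" "$code"
    elif [[ -n $code ]]; then
        # Unusual status line.
        printf '%s %s %s\n' "$time" "$dlay" "$code"
    elif awk -v d="$dlay" -v t="$limit" 'BEGIN { exit !(d + 0 > t + 0) }'; then
        # Slow response; awk exits with 0 when the delay exceeds the limit.
        printf '%s %s\n' "$time" "$dlay"
    elif [[ $time == *:00:* ]]; then
        # Hourly "still alive" mark (minutes equal to 00).
        printf '%s\n' "$time"
    fi
}

day='date: Mon, 11 Feb 2019'
new_day=$(log_lines "$day" 'date: Sun, 10 Feb 2019' 00:00:34 0.235298 '')
slow=$(log_lines "$day" "$day" 01:37:41 5.741847 '')
hourly=$(log_lines "$day" "$day" 02:00:36 0.240000 '')
quiet=$(log_lines "$day" "$day" 02:15:36 0.240000 '')

printf '%s\n' "$new_day" "$slow" "$hourly" "[$quiet]"
```

A fast, normal response in the middle of an hour produces nothing at all, which is what keeps the log short.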

8. Afterword

The result of all this work:

On the left you can see the time in UTC and the current response time of the site. On the right is the log: even when scrolling through it quickly, you can see at a glance at what times the load was higher or lower. You can also see abnormally slow responses (peaks; it is not yet clear where they come from).
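What the eye picks up while scrolling can also be put into numbers. The sketch below counts log entries per hour; it is an optional extra, not part of the script. The function name `entries_per_hour` is hypothetical, and the sample lines are copied from the log excerpt above into a temporary file.

```shell
#!/bin/bash
# Hypothetical helper: counts log entries per hour.
# Lines that do not start with HH:MM:SS (date lines, error messages) are skipped.
entries_per_hour() {
    awk '/^[0-9][0-9]:[0-9][0-9]:[0-9][0-9]/ { n[substr($1, 1, 2)]++ }
         END { for (h in n) print h, n[h] }' "$1" | sort
}

# A few lines in the log format, written to a temporary file.
sample=$(mktemp)
printf '%s\n' \
    '21:00:30 0.722343' '21:12:35 5.799455' '21:42:37 7.140093' \
    '22:00:31' '22:12:37 5.724768' \
    'date: Mon, 11 Feb 2019' '00:00:34 0.235298' >"$sample"

summary=$(entries_per_hour "$sample")
rm -f "$sample"

printf '%s\n' "$summary"
```

The counts include the hourly marks, and the same hour on different days is added together, so on a long log it makes sense to cut out a single day first.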

That's all. The script turned out simple and crude, and there is room for improvement: optimization, portability, better alerts and displays, taking proxies and caches into account...

But even in this form the program can probably give you an idea of the state of your site. And may that site be wisely expedient, useful to people and all creatures!

The full text of the script with comments. Do not forget to make the necessary changes!

    #!/bin/bash
    # Monitor server responses (run this every 60 seconds):

    info=$(curl -I -o /dev/stdout -w '%{time_total}эяю' --url https://example.ru/ -m 9 -s)
    errr=$(echo $?)
    # errr = CURL error code https://curl.haxx.se/libcurl/c/libcurl-errors.html

    code=$(echo "$info" | grep HTTP | grep -v 'HTTP/2 200')
    date=$(echo "$info" | grep -i 'date:')
    dlay=$(echo "$info" | grep эяю | sed -e 's/эяю//')
    # code = Response code = 200?
    #   => empty, otherwise response code string
    #
    # date = from HTTP Header of the server responded, like:
    #   Date: Sun, 10 Feb 2019 05:01:50 GMT
    #
    # dlay = Response delay ("time_total") from CURL, like:
    #   0.25321

    #printf 'errr: %s\n' "$errr"
    #printf 'code: %s\n' "$code"
    #printf '%s\n' "$date"
    #printf 'dlay: %s\n' "$dlay"

    curr=$(echo "$date" | sed -e 's/\(20[0-9][0-9]\).*$/\1/')
    time=$(echo "$date" | sed -e 's/^.*\ \([0-9][0-9]:.*\)\ GMT\r$/\1/')
    prev=$(cat /home/me/Progs/iNet/monitor/site.log | grep -e 'date:' | tail -1)
    # = Previously logged date, like:
    #   date: Sun, 10 Feb 2019

    # Day logged before vs day returned by the server; usually the same
    if [[ $curr != "$prev" ]]; then
      # Write date etc., at the beginning of every day:
      printf '%s\n' "$curr" >>/home/me/Progs/iNet/monitor/site.log
      printf '%s %s %s\n' "$time" "$dlay" "$code" >>/home/me/Progs/iNet/monitor/site.log
    elif [[ -n $code ]]; then
      # If the response had HTTP error code - log it:
      printf '%s %s ? %s\n' "$time" "$dlay" "$code" >>/home/me/Progs/iNet/monitor/site.log
    elif (( $(echo "$dlay > 0.3" | bc -l) )); then
      # If the response delay was large - log it:
      printf '%s %s %s\n' "$time" "$dlay" "$code" >>/home/me/Progs/iNet/monitor/site.log
    else
      # If it's the start of an hour - just log the time
      echo "$time" | grep -e :00: | cat >>/home/me/Progs/iNet/monitor/site.log
    fi

    # To screen:
    printf '%s\n%s\n%s' "$time" "$dlay" "$code"

    # On CURL error:
    if (( $errr != 0 )); then
      date >>/home/me/Progs/iNet/monitor/site.log
      date
      printf 'CURL Request failed. Error: %s\n' "$errr" >>/home/me/Progs/iNet/monitor/site.log
      printf 'CURL Request failed. Error: %s\n' "$errr"
      pung=$(ping -c 1 178.248.237.68)
      printf 'Ping: %s\n----\n' "$pung" >>/home/me/Progs/iNet/monitor/site.log
      printf 'Ping: %s\n' "$pung"
    fi

P.S. Fixed some small slips in the text: the -e option of grep is not needed when a single simple pattern is given; and the -i option was added in one place to make the match case-insensitive.

Source: https://habr.com/ru/post/439894/