Java pointer compression

This article discusses how pointer compression is implemented in the 64-bit Java Virtual Machine. Compression is controlled by the UseCompressedOops option and has been enabled by default on 64-bit systems since Java SE 6u23.

Description of the problem

In a 64-bit JVM, pointers take up twice as much memory (surprise, surprise) as in a 32-bit one. This can increase the size of the data by a factor of about 1.5 compared with the same code on a 32-bit architecture. On the other hand, a 32-bit architecture can address only 2^32 bytes (4 GB), which is quite small by modern standards.

Let's write a small program and look at how many bytes Integer objects occupy:

```java
import java.util.stream.IntStream;
import java.util.stream.Stream;

class HeapTest {
    public static void main(String... args) throws Exception {
        // Box one million ints into Integer objects
        Integer[] x = IntStream.range(0, 1_000_000).boxed().toArray(Integer[]::new);
        // Sleep long enough to inspect the heap from another terminal
        Thread.sleep(6000000);
        // Use the array afterwards so that its creation is not skipped
        Stream.of(x).forEach(System.out::println);
    }
}
```

Here we allocate a million Integer objects and then sleep for a long time. The last line is there so that the compiler does not optimize away the creation of the array (although on my machine the objects are created even without it).

Compile and run the program with pointer compression disabled:

```
> javac HeapTest.java
> java -XX:-UseCompressedOops HeapTest
```

Using the jcmd utility, we look at the memory allocation:

```
> jps
45236 HeapTest
...
> jcmd 45236 GC.class_histogram
```

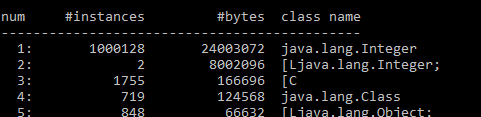

The picture shows that the total number of objects is 1000128 and that they occupy 24003072 bytes of memory. That is, 24 bytes per object (why exactly 24 is explained below).

And here is the memory usage of the same program with the UseCompressedOops flag enabled:

Now each object takes 16 bytes.

The advantages of compression are obvious =)

Solution

How does the JVM compress pointers? The technique is called Compressed Oops. Oop stands for "ordinary object pointer", i.e. a normal pointer to an object.

The trick is that on a 64-bit system objects in memory are aligned to the machine word, i.e. to 8 bytes. As a result, every object address ends in three zero bits.

If the address is shifted 3 bits to the right when the pointer is stored (this operation is called encode) and shifted 3 bits back to the left before it is used (decode), then 35 bits of address fit into 32 bits, i.e. up to 32 GB (2^35 bytes) can be addressed.
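The shift arithmetic can be illustrated with a small sketch. This is not HotSpot's actual code: the class and method names are hypothetical, and the sketch assumes the heap starts at address zero, whereas a real JVM may also add a heap base address when decoding.

```java
// Illustrative sketch of compressed-oop arithmetic (hypothetical names,
// assumes a heap that starts at address 0 and 8-byte object alignment).
class CompressedOopSketch {
    static final int SHIFT = 3; // log2(8): three low bits are always zero

    // encode: squeeze an 8-byte-aligned address below 32 GB into 32 bits
    static int encode(long address) {
        if ((address & ((1L << SHIFT) - 1)) != 0) {
            throw new IllegalArgumentException("address is not 8-byte aligned");
        }
        if (address < 0 || address >= (1L << (32 + SHIFT))) {
            throw new IllegalArgumentException("address is outside the 32 GB range");
        }
        return (int) (address >>> SHIFT);
    }

    // decode: restore the full address; the int is treated as unsigned
    static long decode(int compressed) {
        return Integer.toUnsignedLong(compressed) << SHIFT;
    }

    public static void main(String... args) {
        long address = 0x7_FFFF_FFF8L; // last 8-byte slot below 32 GB
        int compressed = encode(address);
        System.out.println(Integer.toHexString(compressed));      // ffffffff
        System.out.println(Long.toHexString(decode(compressed))); // 7fffffff8
    }
}
```

The highest aligned address below 32 GB maps to the largest unsigned 32-bit value, which is why the limit sits exactly at 2^35 bytes.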

If the heap for your program is larger than 32 GB, compression stops working and all pointers become 8 bytes wide.
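One way to check whether compression is actually in effect for a given heap size is to ask the running JVM for the value of the flag. The sketch below assumes a 64-bit HotSpot JVM, where `HotSpotDiagnosticMXBean` is available; the class name `OopsFlagCheck` and the helper `vmOption` are hypothetical. On a JVM that does not know the option, `getVMOption` throws an `IllegalArgumentException`.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Sketch: read the effective value of a HotSpot flag at run time.
// Assumes a 64-bit HotSpot JVM; the names here are illustrative only.
class OopsFlagCheck {
    static String vmOption(String name) {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        return bean.getVMOption(name).getValue();
    }

    public static void main(String... args) {
        System.out.println("UseCompressedOops = " + vmOption("UseCompressedOops"));
        System.out.println("Max heap (bytes)  = " + Runtime.getRuntime().maxMemory());
    }
}
```

Running it with an -Xmx value comfortably below 32 GB and then with one above 32 GB should show the flag switching from true to false.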

When the UseCompressedOops option is enabled, the following pointer types are compressed:

- The class pointer in the header of every object

- Object-reference fields of every object

- Elements of object arrays.

Pointers to the JVM's own internal objects are never compressed. Note that compression happens at the level of the virtual machine, not at the level of bytecode.

More about object layout in memory

Now let's use the JOL (Java Object Layout) utility to take a closer look at how much memory our Integer occupies in different JVMs:

```
> java -jar jol-cli-0.9-full.jar estimates java.lang.Integer

***** 32-bit VM: **********************************************************
java.lang.Integer object internals:
 OFFSET  SIZE   TYPE DESCRIPTION                               VALUE
      0     8        (object header)                           N/A
      8     4    int Integer.value                             N/A
     12     4        (loss due to the next object alignment)
Instance size: 16 bytes
Space losses: 0 bytes internal + 4 bytes external = 4 bytes total

***** 64-bit VM: **********************************************************
java.lang.Integer object internals:
 OFFSET  SIZE   TYPE DESCRIPTION                               VALUE
      0    16        (object header)                           N/A
     16     4    int Integer.value                             N/A
     20     4        (loss due to the next object alignment)
Instance size: 24 bytes
Space losses: 0 bytes internal + 4 bytes external = 4 bytes total

***** 64-bit VM, compressed references enabled: ***************************
java.lang.Integer object internals:
 OFFSET  SIZE   TYPE DESCRIPTION                               VALUE
      0    12        (object header)                           N/A
     12     4    int Integer.value                             N/A
Instance size: 16 bytes
Space losses: 0 bytes internal + 0 bytes external = 0 bytes total

***** 64-bit VM, compressed references enabled, 16-byte align: ************
java.lang.Integer object internals:
 OFFSET  SIZE   TYPE DESCRIPTION                               VALUE
      0    12        (object header)                           N/A
     12     4    int Integer.value                             N/A
Instance size: 16 bytes
Space losses: 0 bytes internal + 0 bytes external = 0 bytes total
```

The difference between "64-bit VM" and "64-bit VM, compressed references enabled" is that the object header shrinks by 4 bytes. In addition, without compression another 4 bytes of padding are needed to align the object in memory.

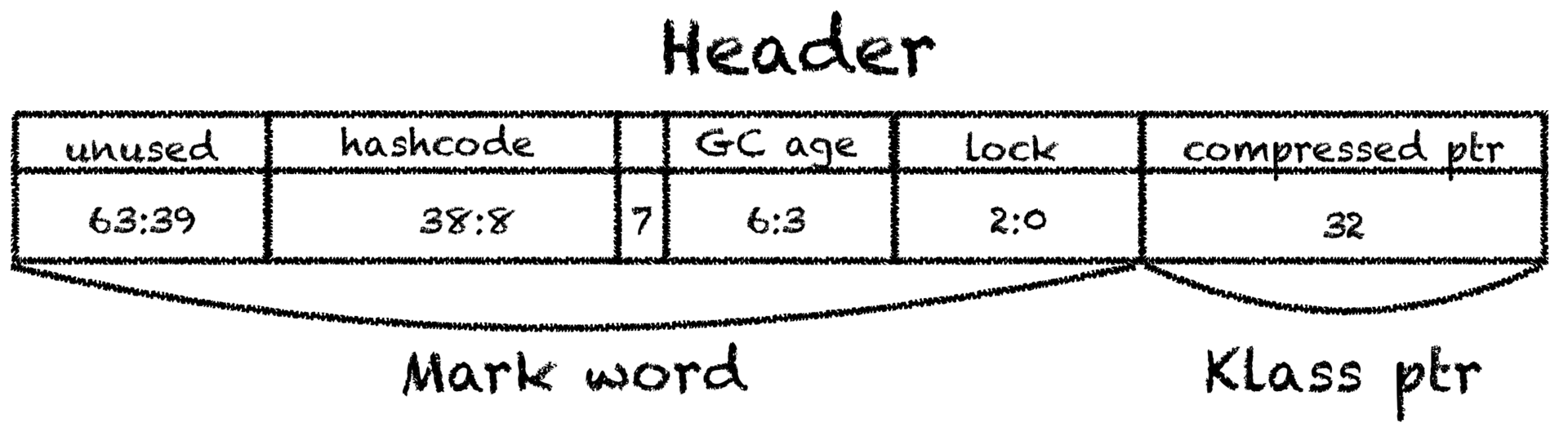

What is this object header? Why did it decrease by 4 bytes?

The image shows a 12-byte object header, i.e. the layout with the UseCompressedOops option enabled. The header consists of some internal JVM flags and a pointer to the class of the object. The class pointer takes 32 bits here. Without compression it would take 64 bits, and the object header would be 16 bytes.
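The instance sizes reported by JOL follow from simple arithmetic: header size plus field size, rounded up to the object alignment. Here is a minimal sketch of that calculation. The class and method names are hypothetical, and it covers only a single-field object such as Integer; real layouts with several fields also involve field ordering and internal padding.

```java
// Sketch of the size arithmetic behind the JOL output for java.lang.Integer.
// Simplified: one field only, no internal padding rules.
class InstanceSizeSketch {
    // Round size up to the next multiple of alignment (a power of two)
    static long alignUp(long size, long alignment) {
        return (size + alignment - 1) & -alignment;
    }

    static long instanceSize(int headerBytes, int fieldBytes, int alignment) {
        return alignUp(headerBytes + fieldBytes, alignment);
    }

    public static void main(String... args) {
        int intField = 4; // Integer.value
        System.out.println(instanceSize(8, intField, 8));   // 32-bit VM: 16
        System.out.println(instanceSize(16, intField, 8));  // 64-bit VM: 24
        System.out.println(instanceSize(12, intField, 8));  // compressed: 16
        System.out.println(instanceSize(12, intField, 16)); // compressed, 16-byte align: 16
    }
}
```

With a 16-byte header the int field pushes the object to 20 bytes, which is padded to 24. With a 12-byte header the field fills the object to exactly 16 bytes and no padding is needed.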

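By the way, the output also contains a variant with 16-byte alignment (in HotSpot the alignment is set with the -XX:ObjectAlignmentInBytes option). In that case addresses end in four zero bits instead of three, so the addressable memory grows to 64 GB (2^36 bytes).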
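The relation between object alignment and the maximum heap that a 32-bit compressed pointer can cover can be sketched as follows. The class and method names are hypothetical, and the calculation assumes the alignment is a power of two.

```java
// Sketch: how far a 32-bit compressed pointer reaches for a given alignment.
class AddressableHeapSketch {
    static long maxAddressableBytes(int alignmentBytes) {
        // Number of always-zero low bits, e.g. 3 for 8-byte alignment
        int shift = Integer.numberOfTrailingZeros(alignmentBytes);
        return 1L << (32 + shift);
    }

    public static void main(String... args) {
        for (int alignment : new int[] {8, 16}) {
            long gigabytes = maxAddressableBytes(alignment) >> 30;
            System.out.println(alignment + "-byte alignment: " + gigabytes + " GB");
        }
        // 8-byte alignment: 32 GB
        // 16-byte alignment: 64 GB
    }
}
```

The larger range is not free: with coarser alignment, more objects need padding, so the average object size can grow.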

Disadvantages of pointer compression

Pointer compression has, of course, an obvious disadvantage: the cost of the encode and decode operations on every access through a pointer. The exact numbers depend on the specific application.

For example, here is a chart of garbage collector pauses with compressed and uncompressed pointers, taken from the article "Java GC in Numbers - Compressed OOPs".

The chart shows that GC pauses last longer when compression is on. More details can be found in the article itself (it is rather old, from 2013).

References:

Compressed oops in the Hotspot JVM

How does the JVM allocate objects

CompressedOops: Introduction to compressed references in Java

Trick behind JVM's compressed oops

Java HotSpot Virtual Machine Performance Enhancements

Source: https://habr.com/ru/post/440166/