A new approach to understanding how machines think

Neural networks are notoriously opaque: a computer can give a good answer but cannot explain what led it to that conclusion. Been Kim is developing a “translator for humans” so that if artificial intelligence breaks down, we can understand why.

Been Kim, a researcher at Google Brain, is developing a way to question a machine learning system about the decisions it has made.

If a doctor tells you that you need surgery, you will want to know why, and you will expect the explanation to make sense to you even if you never went to medical school. Been Kim, a researcher at Google Brain, believes we should be able to expect the same from artificial intelligence (AI). She is a specialist in “interpretable” machine learning (ML), and she wants to build AI that can explain its actions to anyone.

Since the neural network technology behind AI began to spread about ten years ago, it has transformed processes ranging from email sorting to drug discovery, thanks to its ability to learn from data and find patterns in it. But this ability comes with an inexplicable catch: the very complexity that lets modern deep learning networks successfully learn to drive a car or spot insurance fraud makes it almost impossible, even for experts, to understand how they work. If a neural network is trained to identify patients at risk of liver cancer or schizophrenia (and such a system, called Deep Patient, was launched at Mount Sinai Hospital in New York in 2015), there is no way to tell which features of the data the network is “paying attention” to. This “knowledge” is spread across many layers of artificial neurons, each connected to hundreds or thousands of other neurons.

As more and more industries try to automate or improve their decision-making with AI, this “black box” problem looks less like a technological quirk and more like a fundamental flaw. DARPA's XAI project (short for “explainable AI”) is actively studying the problem, and interpretability has moved from the fringes of ML research closer to its center. “AI is at that critical moment when we, humanity, are trying to decide whether this technology is good for us,” says Kim. “If we do not solve the interpretability problem, I think we will not be able to move forward with this technology, and we may simply give up on it.”

Kim and her colleagues at Google Brain recently developed a system called Testing with Concept Activation Vectors (TCAV), which she describes as a “translator for humans” and which lets a user ask a black-box AI how much a particular high-level concept contributed to its decision. For example, if an ML system is trained to find zebras in images, a person could ask TCAV how much the concept of “stripes” contributes to the decision.

TCAV was initially tested on models trained to recognize images, but it also works with models designed for text processing or for certain kinds of data visualizations, such as EEG plots. “It's general and simple: you can plug it into many different models,” says Kim.

Quanta magazine talked with Kim about what interpretability means, who needs it and why it matters.

In your career, you have focused on “interpretability” for ML. But what exactly does this term mean?

Interpretability has two branches. One is interpretability for science: if we treat a neural network as an object of study, we can run scientific experiments on it to really understand everything about the model, the reasons for its responses, and so on.

The second branch, which is where I mainly focus, is interpretability for responsible AI. You do not need to understand every little detail of the model. But our goal is to understand enough that the tool can be used safely.

But how can you trust a system if you do not fully understand how it works?

I will give you an analogy. Suppose there is a tree in my yard that I want to cut down. I have a chainsaw for the job. I do not understand exactly how a chainsaw works. But the manual says, “Here is what you need to be careful with so that you do not cut yourself.” Given the manual, I would rather use the chainsaw than a hand saw: the hand saw is easier to understand, but it would take me five hours to cut down the tree.

You understand what “cutting” is, even if you do not know everything about the mechanism that makes it possible.

Yes. The goal of the second branch of interpretability is this: can we understand a tool well enough to use it safely? And we can build that understanding by confirming that useful human knowledge is reflected in the tool.

How does the “reflection of human knowledge” make the black box of AI more understandable?

Here is another example. If a doctor uses an ML model to diagnose cancer, the doctor will want to know that the model is not just picking up on some random correlation in the data that we do not want. One way to make sure of this is to confirm that the ML model is doing roughly what the doctor would do. That is, to show that the doctor's diagnostic knowledge is reflected in the model.

For example, if a doctor is examining a cell specimen to diagnose cancer, they will look for something called “fused glands”. They will also take into account factors such as the patient's age and whether the patient has had chemotherapy in the past. These factors, or concepts, are what a doctor trying to diagnose cancer would consider. If we can show that the ML model also pays attention to them, the model becomes more understandable, because it reflects the doctors' human knowledge.

So this is what TCAV does: it shows which high-level concepts an ML model uses to make decisions?

Yes. Before this, interpretability methods explained what a neural network does only in terms of “input features”. What does that mean? If you have an image, each pixel is an input feature. Yann LeCun (a deep learning pioneer and director of AI research at Facebook) has said he considers these models to be already highly interpretable, because you can look at every node of the neural network and see the numerical values for each input feature. That may be fine for computers, but people do not think that way. I am not going to tell you, “Look at pixels 100 to 200, their RGB values are 0.2 and 0.3.” I say, “This is a picture of a very shaggy dog.” That is how people communicate: with concepts.

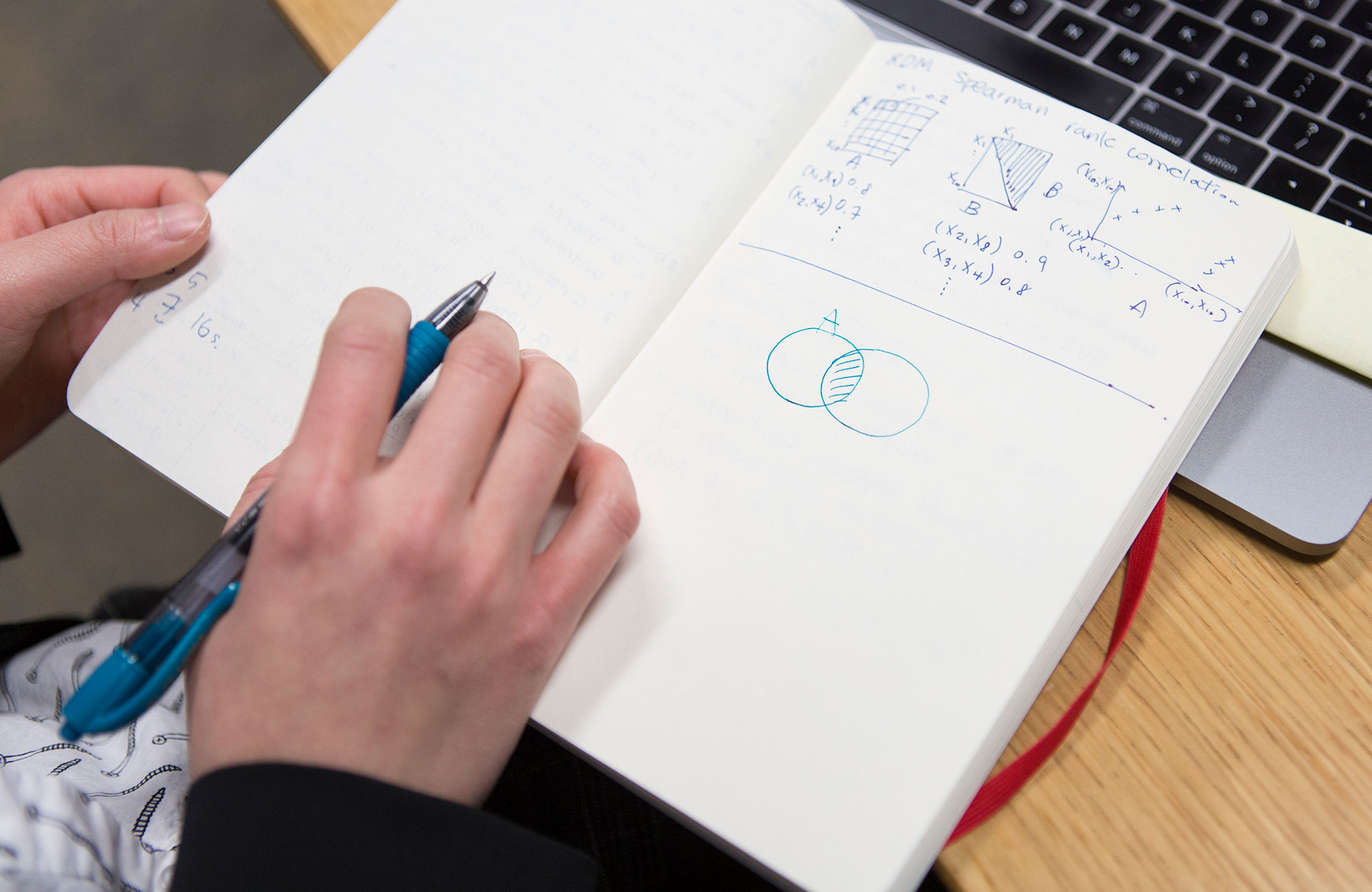

How does TCAV translate between input features and concepts?

Let's go back to the example of a doctor using an ML model that has already been trained to classify images of cell samples as potentially cancerous. You, as the doctor, want to know how much the concept of “fused glands” mattered to the model when it made positive cancer predictions. First, you collect, say, 20 images that show examples of fused glands. Then you feed these labeled examples into the model.

Then TCAV internally runs what is called “sensitivity testing”. When we add these labeled images of fused glands, how much does the probability of a positive cancer prediction increase? The answer can be expressed as a number from 0 to 1, and that is your TCAV score. If the probability increased, the concept was important to the model. If not, the concept was not important.
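
The sensitivity test described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not the TCAV implementation: the concept direction is taken as a difference of mean activations (a stand-in for a trained linear classifier), the “model” is a toy function whose class logit depends on a single hidden unit, and all names (`concept_direction`, `class_logit_gradients`, `tcav_style_score`) are hypothetical. The score here is the fraction of class examples whose logit would rise if their activations were nudged toward the concept, which gives a number between 0 and 1.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 8  # size of the toy hidden layer (assumption)

def concept_direction(concept_acts, random_acts):
    """Unit vector from the mean random activation toward the mean concept activation."""
    direction = concept_acts.mean(axis=0) - random_acts.mean(axis=0)
    return direction / np.linalg.norm(direction)

def class_logit_gradients(acts, weights):
    """Gradient of the toy logit sum(weights * tanh(acts)) w.r.t. each activation."""
    return weights * (1.0 - np.tanh(acts) ** 2)

def tcav_style_score(gradients, direction):
    """Fraction of examples whose logit increases along the concept direction."""
    directional_derivatives = gradients @ direction
    return float(np.mean(directional_derivatives > 0))

# Hypothetical activations: 20 "stripes" images push unit 0 up, 20 random images do not.
stripes_acts = rng.normal(size=(20, DIM))
stripes_acts[:, 0] += 3.0
random_acts = rng.normal(size=(20, DIM))

# Toy classifier head: the "zebra" logit depends only on unit 0.
zebra_weights = np.zeros(DIM)
zebra_weights[0] = 2.0

# Activations of 50 hypothetical zebra images, and the logit gradient for each.
zebra_acts = rng.normal(size=(50, DIM))
grads = class_logit_gradients(zebra_acts, zebra_weights)

stripes_direction = concept_direction(stripes_acts, random_acts)
score = tcav_style_score(grads, stripes_direction)
print(f"score for 'stripes': {score:.2f}")
```

In this toy setup the logit depends only on the unit that the stripes examples activate, so every zebra example responds positively to the concept direction.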

“Concept” is a fuzzy term. Are there concepts that will not work with TCAV?

If you cannot describe a concept with a subset of your data, it will not work. If your ML model is trained on images, the concept must be something that can be expressed visually. If, for example, I want to express the concept of love visually, that would be quite hard.

We also carefully verify the concept. We have a statistical testing procedure that rejects a concept vector if its effect on the model is indistinguishable from that of a random vector. If your concept does not pass this test, TCAV will say, “I don’t know, this concept does not look like something important to the model.”
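
One way to picture this kind of check, as a sketch rather than the procedure TCAV actually uses: compute scores for the concept several times, compute scores for randomly chosen directions the same way, and ask whether the two groups differ by more than chance. The example below uses a simple permutation test for that comparison. The choice of test, the function name `permutation_p_value`, the 0.05 threshold, and all score values are illustrative assumptions, not data from the article.

```python
import numpy as np

def permutation_p_value(concept_scores, random_scores, n_permutations=5000, seed=0):
    """Two-sided permutation test on the difference of mean scores."""
    rng = np.random.default_rng(seed)
    concept_scores = np.asarray(concept_scores, dtype=float)
    random_scores = np.asarray(random_scores, dtype=float)
    observed = abs(concept_scores.mean() - random_scores.mean())
    pooled = np.concatenate([concept_scores, random_scores])
    n = len(concept_scores)
    hits = 0
    for _ in range(n_permutations):
        shuffled = rng.permutation(pooled)
        diff = abs(shuffled[:n].mean() - shuffled[n:].mean())
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (hits + 1) / (n_permutations + 1)

# Made-up scores from repeated runs (illustrative only).
random_scores = [0.48, 0.55, 0.51, 0.46, 0.53, 0.50, 0.49, 0.52]
stripes_scores = [0.91, 0.88, 0.95, 0.90, 0.93, 0.89, 0.92, 0.94]
noise_scores = [0.52, 0.47, 0.50, 0.54, 0.49, 0.51, 0.45, 0.53]

ALPHA = 0.05  # significance threshold (assumption)
p_stripes = permutation_p_value(stripes_scores, random_scores)
p_noise = permutation_p_value(noise_scores, random_scores)

for name, p in [("stripes", p_stripes), ("noise", p_noise)]:
    verdict = "keep concept" if p < ALPHA else "reject: indistinguishable from random"
    print(f"{name}: p = {p:.4f} -> {verdict}")
```

With these made-up numbers the “stripes” scores are clearly separated from the random baseline, while the “noise” scores are not, so only the first concept would be reported as meaningful.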

Is the TCAV project more about establishing trust in AI than about a general understanding of it?

No, and I will explain why, because the distinction is quite subtle.

From many studies in cognitive science and psychology, we know that people are very gullible. This means it is very easy to fool a person into believing something. The goal of ML interpretability is the opposite: to tell a person when a particular system is not safe to use. The goal is to uncover the truth. So “trust” is not the right word.

So the goal of interpretability is to uncover potential flaws in AI reasoning?

Yes it is.

How can it expose flaws?

TCAV can be used to ask a model about concepts that are unrelated to the domain. Let's return to the example of doctors using AI to predict the likelihood of cancer. The doctors might suddenly think: “It looks like the machine gives positive cancer predictions for many images that have a slight bluish tint. We do not think that factor should be taken into account.” And if they get a high TCAV score for “blue”, they have found a problem in their ML model.

TCAV is designed to be bolted onto existing AI systems that cannot be interpreted. Why not simply build interpretable systems instead of black boxes?

There is a branch of interpretability research that focuses on building inherently interpretable models that reflect how a person reasons. But here is how I see it: right now we already have ready-made AI models that are being used to solve important problems, and interpretability was not considered when they were built. That is just how it is. Many of them are running at Google! You could say, “Interpretability is so useful that we should build you another model to replace the one you have.” Well, good luck with that.

So what do we do? We still need to get through this critical moment of deciding whether this technology is good for us or not. That is why I work on “post-training” interpretability methods. If someone hands you a model and you cannot change it, how do you go about generating explanations of its behavior so that you can use it safely? That is what TCAV does.

TCAV lets people ask an AI about the importance of certain concepts. But what if we don’t know what to ask? What if we want the AI to simply explain itself?

Right now we are working on a project that can automatically find concepts for you. We call it DTCAV, for discovery TCAV. But I think the heart of interpretability is keeping people in the loop and enabling people and machines to communicate.

In many cases, in high-stakes applications, domain experts already have a list of concepts they care about. We at Google Brain see this again and again in medical applications of AI. They do not want to be handed a set of concepts; they want to tell the model which concepts interest them. We work with a doctor who treats diabetic retinopathy, an eye disease, and when we told her about TCAV she was very excited, because she already had a whole list of hypotheses about what the model might be doing, and now she can test exactly those questions. This is a huge plus, and a very user-centric way of doing collaborative machine learning.

You have said that without interpretability, humanity may simply abandon AI technology. Given what it is capable of, do you really think that is a realistic possibility?

Yes. That is what happened with expert systems. In the 1980s, we established that they were cheaper than people for certain tasks. But who uses expert systems today? Nobody. And after that came an AI winter.

Right now it does not seem likely, given how much hype and money is going into AI. But in the long run, I think humanity might decide, perhaps out of fear, perhaps for lack of evidence, that this technology is not for us. It is possible.

Source: https://habr.com/ru/post/440426/