Serverless CI/CD on AWS

It would be nice if copy-paste deployments were a thing of the past, but, alas, they are not. Some developers still prefer to ship this way. I could write a whole article about why that is a bad idea, but you already know the reasons without me. With the trend toward serverless architecture, CI/CD pipelines play an important role in application delivery. I also wrote about them in the article "3 best tips for your next serverless project".

I have been interested in CI and CD for quite a while; I first ran into the topic a few years ago thanks to TeamCity. We still use TeamCity for most of our CI/CD pipelines. It works fine and I have nothing against it, but I always strive to improve how I work. For example, it would be good to define pipelines as code, and this is one area where TeamCity is not so strong.

I looked at the AWS integration and delivery tools some time ago, and although we use CodeDeploy for another project that runs on EC2, I had not yet deployed a serverless project with them. Reviewing the tools again, I noticed two things: there is now built-in integration for deploying CloudFormation and Lambda, presumably based on AWS SAM; and we use the Serverless Framework, which generates CloudFormation templates but does not work with the AWS tools out of the box.

Preparation

I use Docker and the following AWS services: EC2, ECR, S3, IAM, CodeBuild, CodePipeline, CloudWatch, CloudTrail. To follow along, you need at least a basic understanding of what each of them does.

I mostly write server-side code in .NET, and this guide is built around that platform. None of the ready-made CodeBuild images includes both the .NET and NodeJS runtimes (NodeJS is needed for the Serverless Framework). If your Lambda functions are written in NodeJS, setting up the deployment pipeline is much simpler, because NodeJS is then the only runtime the Docker image needs (if that is your case, you can skip most of this tutorial). Incidentally, this was my first experience with containers, and I was glad to learn something new.

I assume your code lives in some kind of repository, for example git. For this guide, we simply upload a file containing the packaged code to S3; how you produce that file is up to you. Taking my work as a starting point, you can always go further and connect your pipeline to a repository such as GitHub or CodeCommit.

1. Create an EC2 instance and install Docker

Launch a standard Amazon Linux 2 EC2 instance; this should need no explanation. Log in and install Docker with the following commands:

    sudo yum update -y
    sudo amazon-linux-extras install docker
    sudo service docker start

You also need to add ec2-user to the docker group so that Docker commands can be run without sudo:

    sudo usermod -a -G docker ec2-user

When the command completes, log out and log back in to your EC2 instance so that ec2-user picks up the new permissions. Make sure ec2-user can run Docker commands without sudo:

    docker info

2. Create a Docker image and place it in ECR

Assuming the previous step was successful, the next step is to build a Docker image and push it to ECR. AWS publishes the base images for CodeBuild on GitHub, which makes it easy to build your own.

I also published the image on GitHub, in case you don't want to go through the steps below to build your own: https://github.com/effectivedigital/serverless-deployment-image

Clone the repository and go to the .NET Core 2.1 directory:

    git clone https://github.com/aws/aws-codebuild-docker-images.git
    cd aws-codebuild-docker-images
    cd ubuntu/dot-net/core-2.1/

Open the Dockerfile in your preferred text editor:

    nano Dockerfile

Add the commands that install NodeJS and the Serverless Framework after the commands already in the Dockerfile. I took most of them from the NodeJS Docker image in the same AWS repository:

    # Install Node Dependencies
    ENV NODE_VERSION="10.14.1"

    # gpg keys listed at https://github.com/nodejs/node#release-team
    RUN set -ex \
     && for key in \
          94AE36675C464D64BAFA68DD7434390BDBE9B9C5 \
          B9AE9905FFD7803F25714661B63B535A4C206CA9 \
          77984A986EBC2AA786BC0F66B01FBB92821C587A \
          56730D5401028683275BD23C23EFEFE93C4CFFFE \
          71DCFD284A79C3B38668286BC97EC7A07EDE3FC1 \
          FD3A5288F042B6850C66B31F09FE44734EB7990E \
          8FCCA13FEF1D0C2E91008E09770F7A9A5AE15600 \
          C4F0DFFF4E8C1A8236409D08E73BC641CC11F4C8 \
          DD8F2338BAE7501E3DD5AC78C273792F7D83545D \
          4ED778F539E3634C779C87C6D7062848A1AB005C \
          A48C2BEE680E841632CD4E44F07496B3EB3C1762 \
        ; do \
          gpg --keyserver hkp://p80.pool.sks-keyservers.net:80 --recv-keys "$key" || \
          gpg --keyserver hkp://ipv4.pool.sks-keyservers.net --recv-keys "$key" || \
          gpg --keyserver hkp://pgp.mit.edu:80 --recv-keys "$key" ; \
        done

    RUN set -ex \
     && wget "https://nodejs.org/download/release/v$NODE_VERSION/node-v$NODE_VERSION-linux-x64.tar.gz" -O node-v$NODE_VER$
     && wget "https://nodejs.org/download/release/v$NODE_VERSION/SHASUMS256.txt.asc" -O SHASUMS256.txt.asc \
     && gpg --batch --decrypt --output SHASUMS256.txt SHASUMS256.txt.asc \
     && grep " node-v$NODE_VERSION-linux-x64.tar.gz\$" SHASUMS256.txt | sha256sum -c - \
     && tar -xzf "node-v$NODE_VERSION-linux-x64.tar.gz" -C /usr/local --strip-components=1 \
     && rm "node-v$NODE_VERSION-linux-x64.tar.gz" SHASUMS256.txt.asc SHASUMS256.txt \
     && ln -s /usr/local/bin/node /usr/local/bin/nodejs \
     && rm -fr /var/lib/apt/lists/* /tmp/* /var/tmp/*

    RUN npm set unsafe-perm true

    CMD [ "node" ]

    # Install Serverless Framework
    RUN set -ex \
     && npm install -g serverless

Now build and tag the image:

    docker build -t aws/codebuild/dot-net .

When the build finishes, run the image to make sure that everything works and that the Serverless Framework is installed correctly:

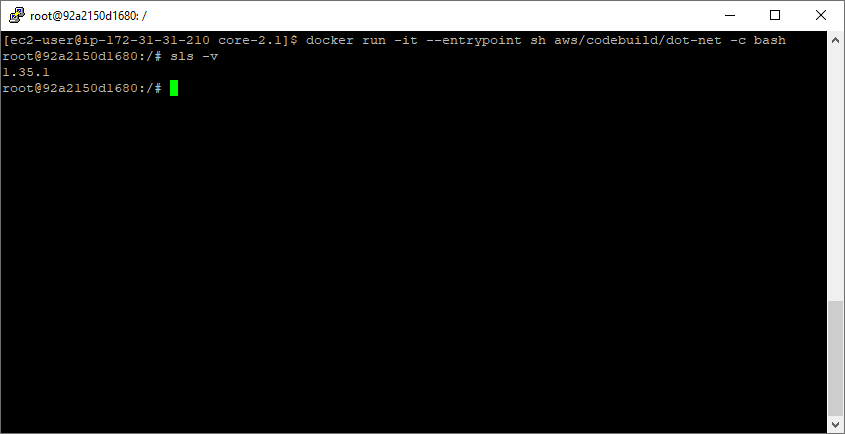

    docker run -it --entrypoint sh aws/codebuild/dot-net -c bash
    sls -v

Running sls -v inside a new container

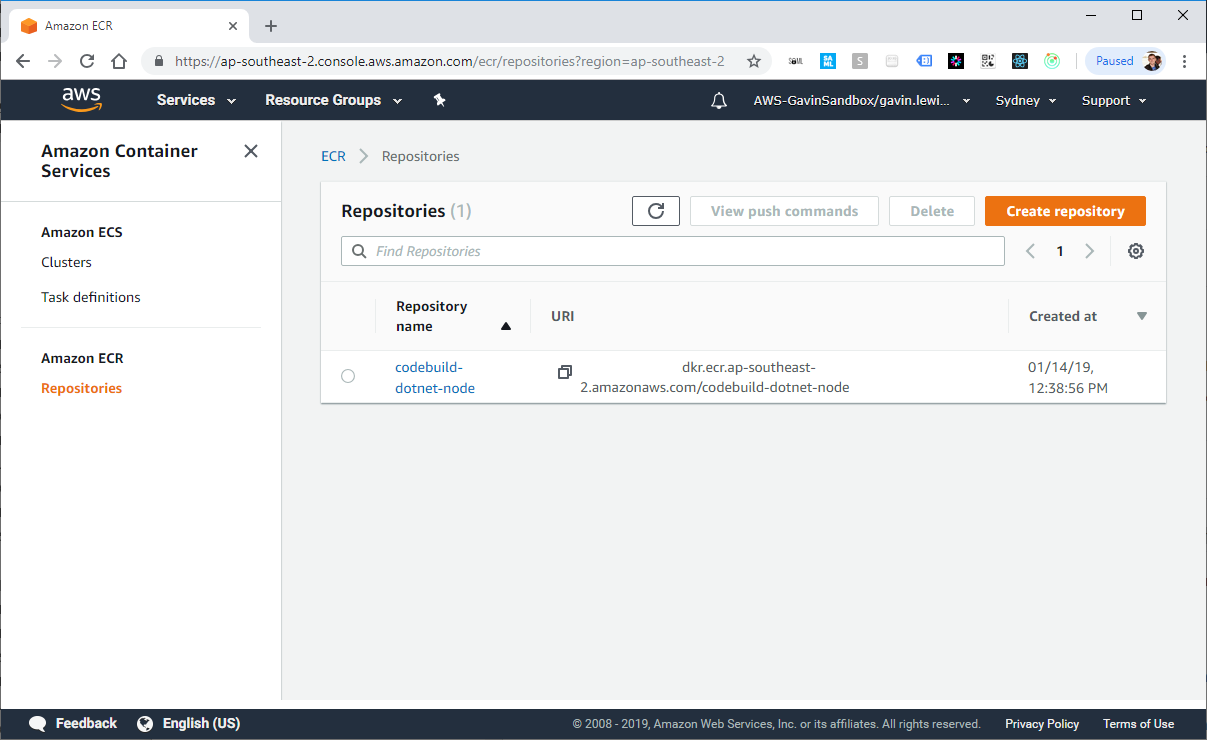

Then create a repository in ECR using the AWS CLI. Once the command has run, the new repository appears in the AWS console:

    aws ecr create-repository --repository-name codebuild-dotnet-node

AWS CLI response to creating a repository in ECR
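The value needed for the next step is the repositoryUri field of that response. As a small sketch of where it sits, the Python below parses a sample response and builds the matching docker tag command. The sample JSON uses a placeholder account ID, and the helper names are my own, not part of any AWS tool.

```python
import json

# Sample response in the shape the AWS CLI prints for `aws ecr create-repository`.
# The account ID 123456789012 is a placeholder, not a real account.
SAMPLE_RESPONSE = """
{
  "repository": {
    "repositoryArn": "arn:aws:ecr:ap-southeast-2:123456789012:repository/codebuild-dotnet-node",
    "registryId": "123456789012",
    "repositoryName": "codebuild-dotnet-node",
    "repositoryUri": "123456789012.dkr.ecr.ap-southeast-2.amazonaws.com/codebuild-dotnet-node"
  }
}
"""


def repository_uri(cli_output):
    """Extract repositoryUri from the JSON printed by create-repository."""
    return json.loads(cli_output)["repository"]["repositoryUri"]


def docker_tag_command(local_image, uri):
    """Build the argument list for `docker tag <local_image> <uri>`."""
    return ["docker", "tag", local_image, uri]


uri = repository_uri(SAMPLE_RESPONSE)
print(" ".join(docker_tag_command("aws/codebuild/dot-net", uri)))
```

In a real script you would feed the actual CLI output into repository_uri instead of the sample string.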

Now tag the aws/codebuild/dot-net image created earlier with the repositoryUri value from the previous step:

    docker tag aws/codebuild/dot-net <ACCOUNTID>.dkr.ecr.ap-southeast-2.amazonaws.com/codebuild-dotnet-node

Run the get-login command to obtain the docker login command for authenticating with the container registry:

    aws ecr get-login --no-include-email

Run the docker login command returned by get-login in the previous step:

    docker login -u AWS -p eyJwYXlsb2FkIjoiNGZnd0dSaXM1L2svWWRLMmhJT1c0WWpOZEcxamJFeFJOK2VvT0Y5[...] https://<ACCOUNTID>.dkr.ecr.ap-southeast-2.amazonaws.com

If the login succeeds, push the Docker image to the repository created in ECR. This may take several minutes, depending on the size of the image.

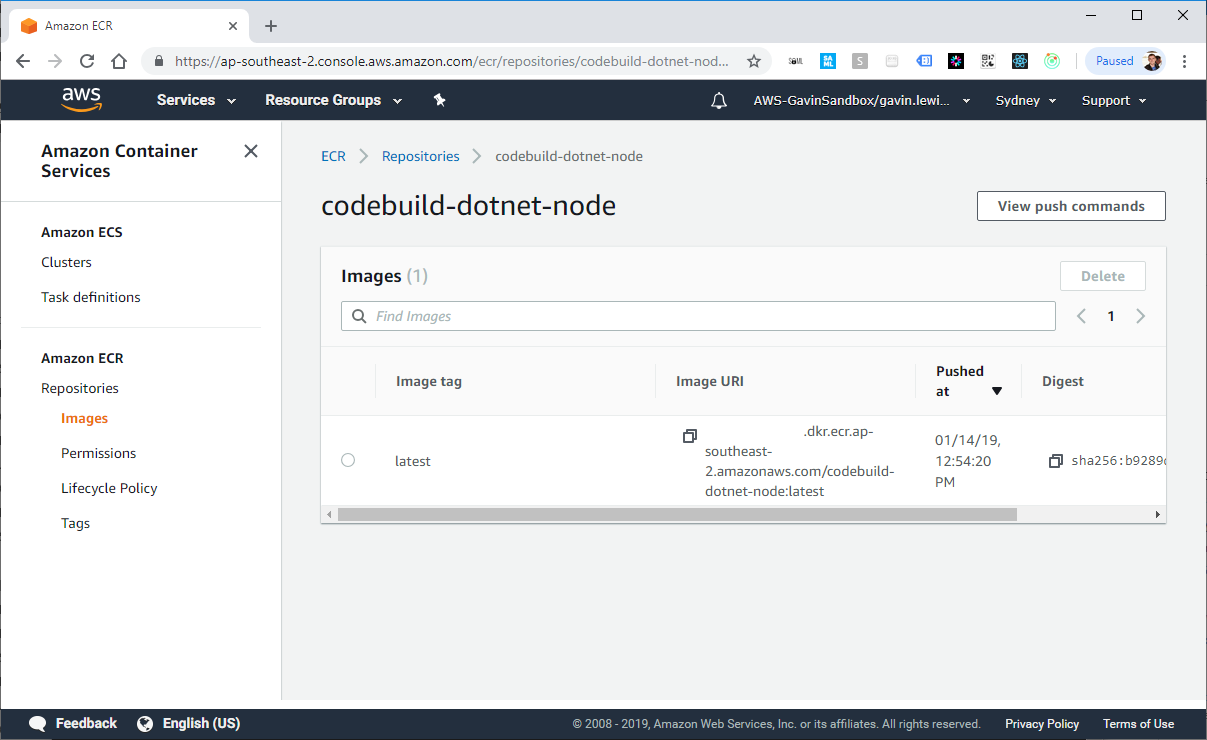

    docker push <ACCOUNTID>.dkr.ecr.ap-southeast-2.amazonaws.com/codebuild-dotnet-node

Docker on EC2 creates our image
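One caveat about the login step: aws ecr get-login exists only in version 1 of the AWS CLI. Version 2 replaces it with aws ecr get-login-password, whose output is piped into docker login --password-stdin. Below is a minimal sketch of that variant in Python, assuming the AWS CLI and Docker are installed and credentials are configured. The function names and the placeholder account ID are hypothetical.

```python
import subprocess


def registry_host(account_id, region):
    """Host name of the private ECR registry for an account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def password_command(region):
    """AWS CLI call that prints a temporary registry password."""
    return ["aws", "ecr", "get-login-password", "--region", region]


def login_command(account_id, region):
    """docker login call that reads the password from standard input."""
    return ["docker", "login", "--username", "AWS", "--password-stdin",
            registry_host(account_id, region)]


def ecr_login(account_id, region):
    """Pipe the password from the AWS CLI into docker login.

    The password never appears on a command line, unlike `docker login -p`.
    """
    token = subprocess.run(password_command(region), check=True,
                           capture_output=True).stdout
    subprocess.run(login_command(account_id, region), input=token, check=True)


# Usage (needs the AWS CLI, Docker, and valid credentials):
#   ecr_login("123456789012", "ap-southeast-2")
```

The tag and push commands stay the same; only the way the credentials reach Docker changes.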

Next, we can open access to the image in ECR to anyone. In a production environment this permission should be locked down, but for this example we will leave it open. Go to the Permissions tab in the AWS console, select "Edit JSON Policy" and paste in the following policy:

    {
      "Version": "2008-10-17",
      "Statement": [
        {
          "Sid": "EnableAccountAccess",
          "Effect": "Allow",
          "Principal": "*",
          "Action": [
            "ecr:BatchCheckLayerAvailability",
            "ecr:BatchGetImage",
            "ecr:DescribeImages",
            "ecr:DescribeRepositories",
            "ecr:GetAuthorizationToken",
            "ecr:GetDownloadUrlForLayer",
            "ecr:GetRepositoryPolicy",
            "ecr:ListImages"
          ]
        }
      ]
    }

3. Create the pipeline

It is time to assemble the pipeline. To simplify the process, make it repeatable to deploy, and stay true to the serverless approach, I built the pipeline with the Serverless Framework. The same result can be achieved with plain CloudFormation.

I will not reproduce my entire serverless.yml file here; copy it from GitHub: https://github.com/effectivedigital/serverless-deployment-pipeline

Review the serverless template to see exactly what it does; in brief, it sets up the following resources:

- 3 S3 buckets

- 1 bucket policy

- 3 IAM roles

- 1 CodeBuild project

- 1 CodePipeline pipeline

- 1 CloudWatch event rule

- 1 CloudTrail trail

Then update DockerImageArn to point to your image in ECR. If you want your deployment packages to be named something other than Deployment.zip, update DeploymentFilename as well:

    DockerImageArn: <ACCOUNTID>.dkr.ecr.ap-southeast-2.amazonaws.com/codebuild-dotnet-node:latest
    DeploymentFilename: Deployment.zip

That's it, the pipeline is ready to deploy. Run the serverless deploy command and wait for the stack to be created:

    sls deploy -v

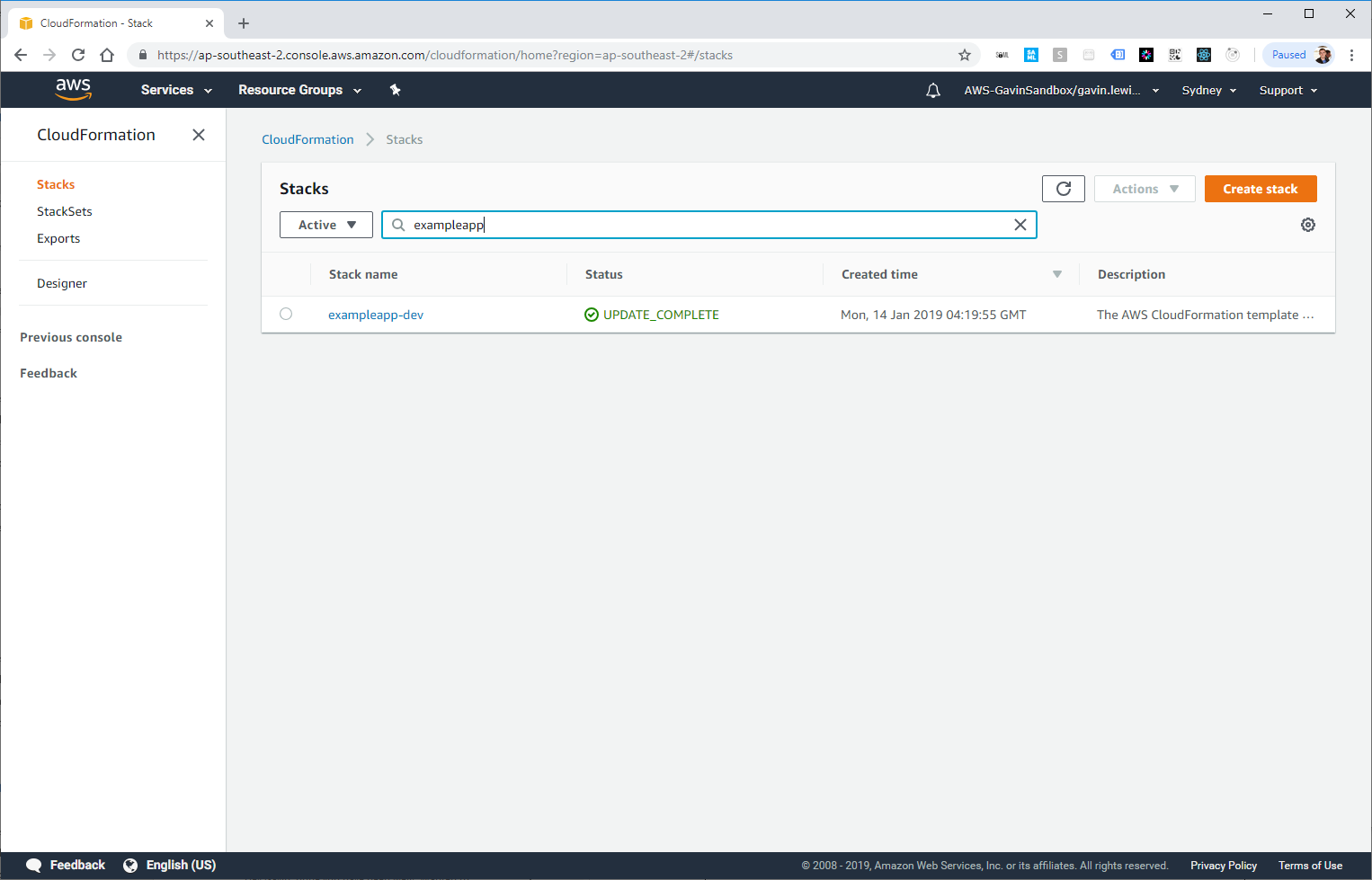

The CloudFormation stack created by the Serverless Framework

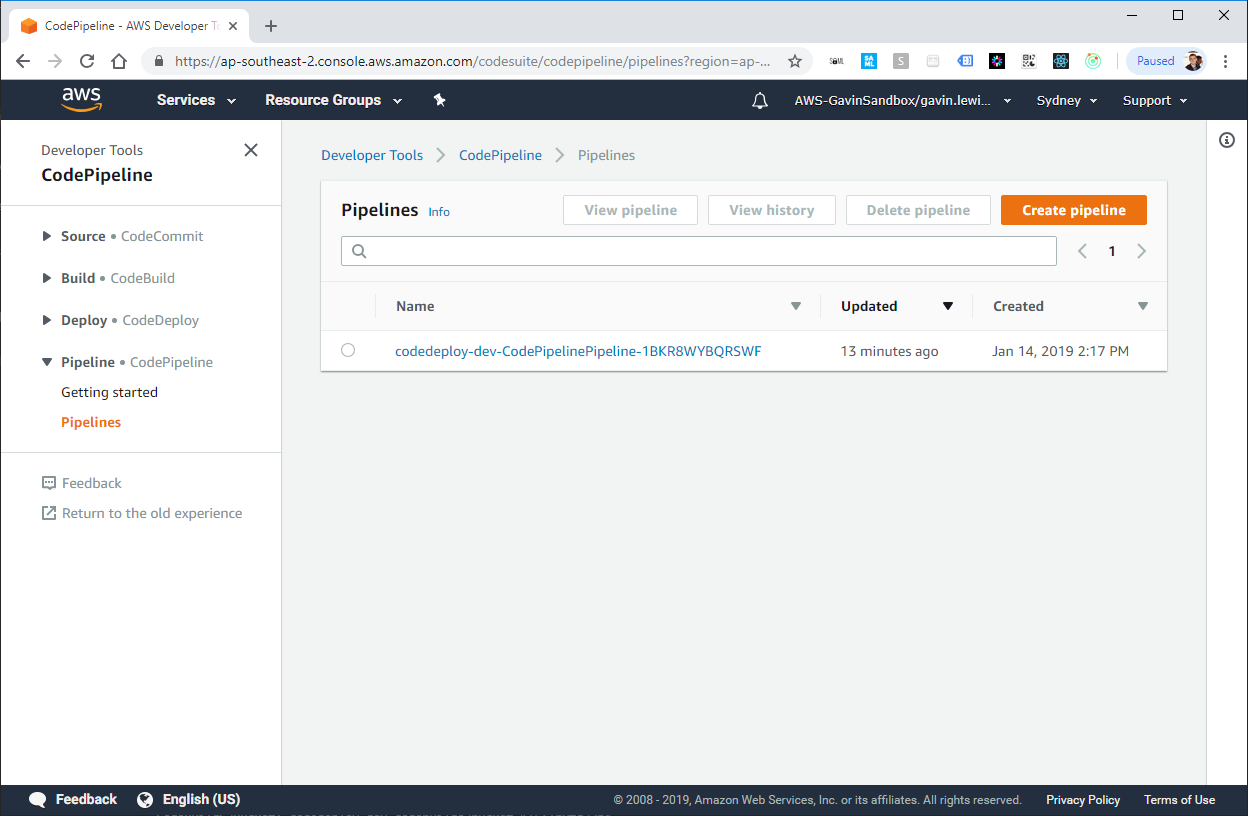

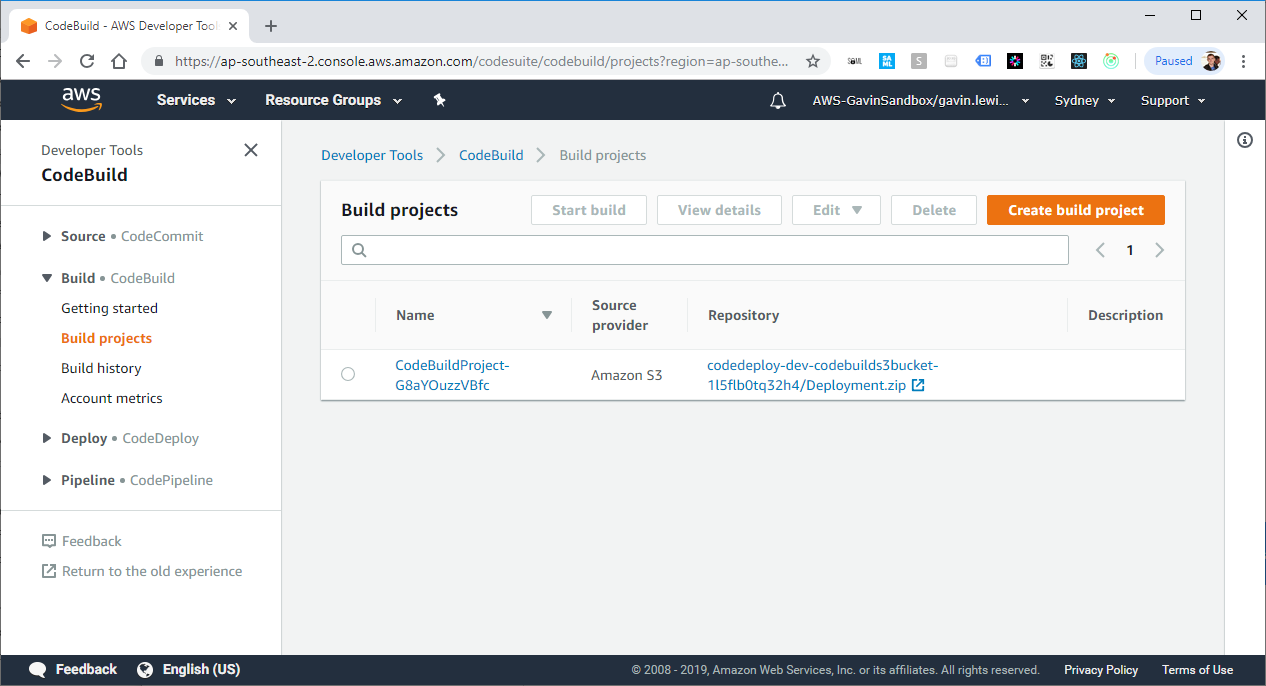

The CodePipeline pipeline created by the Serverless Framework

The CodeBuild project created by the Serverless Framework

4. Add buildSpec.yml to your application

When CodePipeline detects a change to the deployment file in S3, it tells CodeBuild to start, build, and deploy the application. CodeBuild, however, also needs to know which commands to run to build and deploy the application; buildSpec.yml contains the instructions it will follow.
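To make the shape of those instructions concrete: a buildspec groups shell commands into named phases, and CodeBuild runs the phases in a fixed order (install, pre_build, build, post_build). The Python sketch below models a buildspec as a plain dictionary and lists its commands in that order. The dictionary and the helper are purely illustrative and are not how CodeBuild itself is implemented.

```python
# Phase names in the order CodeBuild runs them.
PHASE_ORDER = ["install", "pre_build", "build", "post_build"]

# Illustrative buildspec, written as a dictionary instead of YAML.
EXAMPLE_BUILDSPEC = {
    "version": 0.2,
    "phases": {
        "post_build": {"commands": ["sls deploy -v -s $STAGE"]},
        "build": {"commands": ["./build.sh"]},
        "pre_build": {"commands": ["chmod a+x *"]},
    },
}


def planned_commands(buildspec):
    """Return (phase, command) pairs in execution order.

    The order comes from PHASE_ORDER, not from the order of keys in the
    file, and phases that are not defined are simply skipped.
    """
    plan = []
    phases = buildspec.get("phases", {})
    for phase in PHASE_ORDER:
        for command in phases.get(phase, {}).get("commands", []):
            plan.append((phase, command))
    return plan


for phase, command in planned_commands(EXAMPLE_BUILDSPEC):
    print(f"{phase}: {command}")
```

Splitting the work this way keeps preparation, the build itself, and the deployment visibly separate in the CodeBuild logs.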

I created a very simple "Hello world" application that includes an example buildSpec.yml file that you can use: https://github.com/effectivedigital/serverless-deployment-app

Or create a buildSpec.yml file in your existing application and fill it in as shown below:

    version: 0.2
    phases:
      pre_build:
        commands:
          - chmod a+x *
      build:
        commands:
          - ./build.sh
      post_build:
        commands:
          - sls deploy -v -s $STAGE

5. Test the pipeline

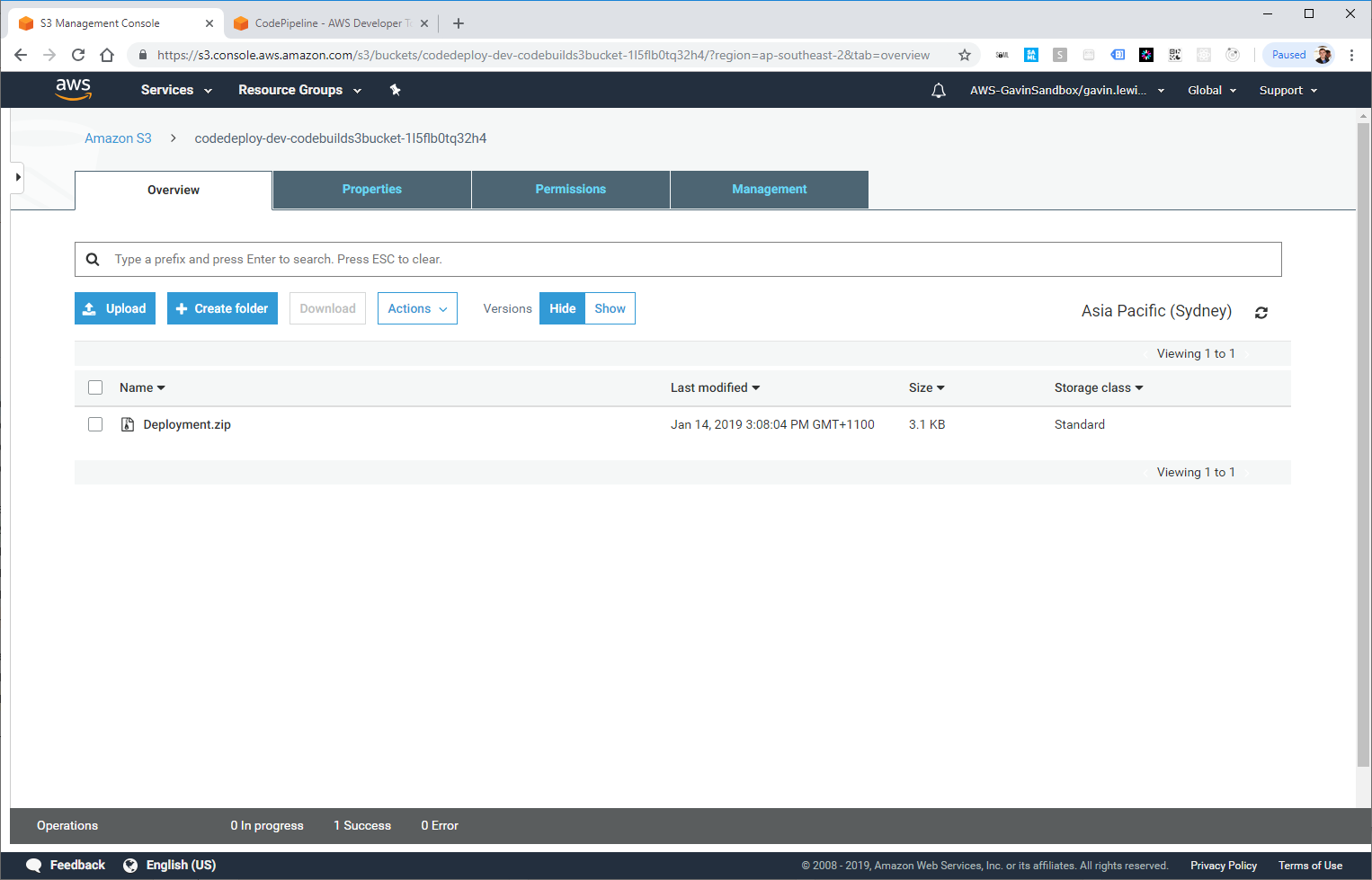

Now everything is ready for the first run of your pipeline. Create a package called Deployment.zip; it should include all the files for your serverless application as well as the buildSpec.yml file. Then upload it to S3.
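How you build the package is up to you. As one possible approach, the sketch below zips an application directory with Python's standard zipfile module. It assumes buildSpec.yml sits at the root of that directory and refuses to build the archive if the file is missing; the function name and that check are my own choices.

```python
import zipfile
from pathlib import Path


def build_deployment_package(app_dir, zip_path="Deployment.zip"):
    """Zip every file under app_dir, keeping paths relative to app_dir."""
    app_dir = Path(app_dir)
    zip_path = Path(zip_path)
    # Assumption: the buildspec is expected at the root of the package.
    if not (app_dir / "buildSpec.yml").is_file():
        raise FileNotFoundError("buildSpec.yml not found in the application root")
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(app_dir.rglob("*")):
            # Skip directories and the archive itself if it lives in app_dir.
            if path.is_file() and path.resolve() != zip_path.resolve():
                archive.write(path, path.relative_to(app_dir).as_posix())
    return zip_path


# Usage (hypothetical directory name):
#   build_deployment_package("my-serverless-app", "Deployment.zip")
```

Storing paths relative to the application root means the files land at the top level of the archive rather than inside an extra folder.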

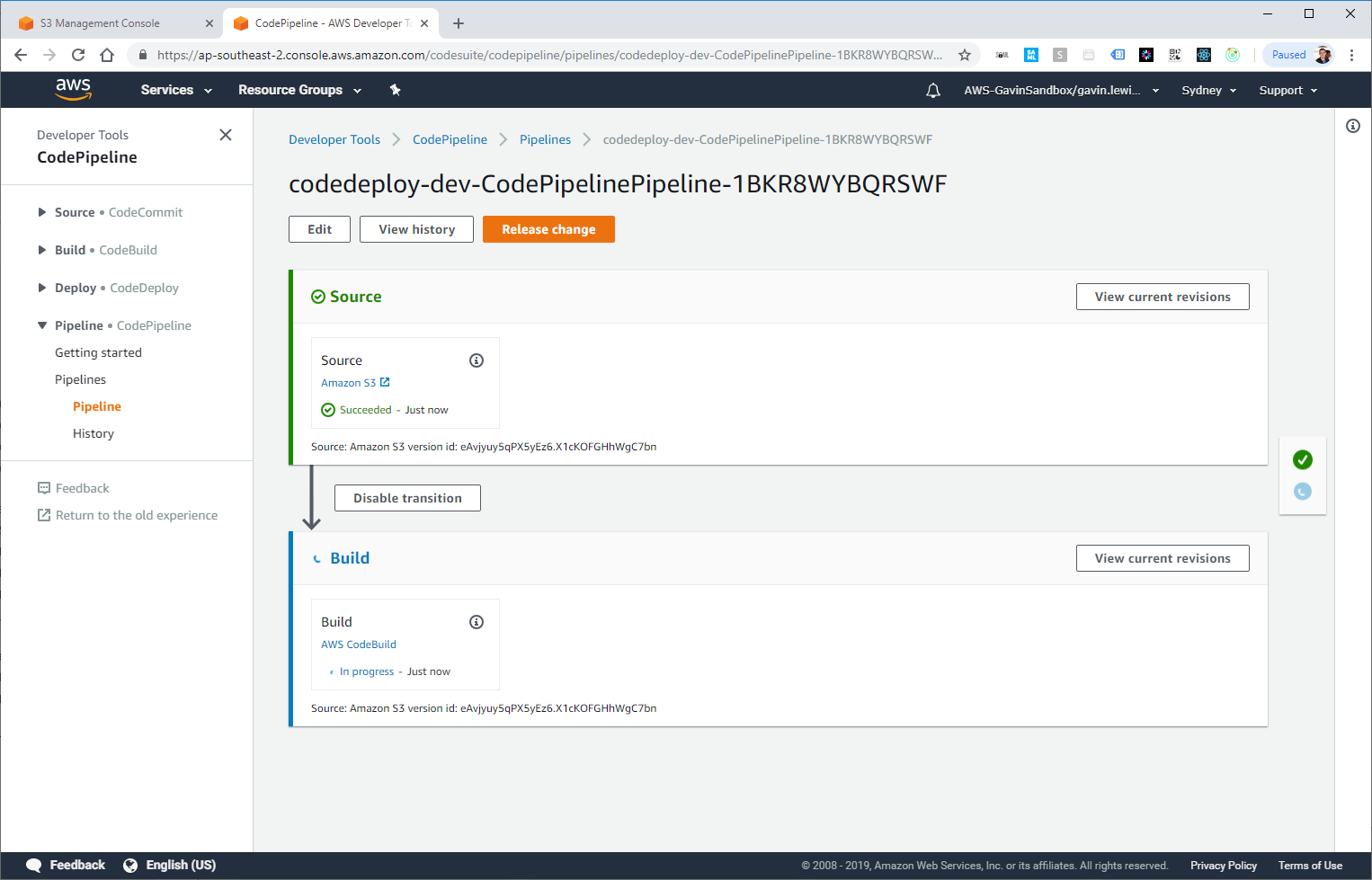

After a few moments, CloudTrail should log the PutObject event and trigger the CloudWatch event rule, which in turn starts CodePipeline.
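To illustrate how such a rule picks out the right upload, the sketch below shows an event pattern of the general shape used for S3 API calls recorded by CloudTrail, plus a much simplified matcher. The bucket name is a placeholder, the matcher covers only exact-value matching, and the actual pattern in serverless.yml may differ.

```python
# Event pattern: every listed field must be present in the event, and its
# value must be one of the values in the list.
EVENT_PATTERN = {
    "source": ["aws.s3"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["s3.amazonaws.com"],
        "eventName": ["PutObject"],
        "requestParameters": {
            "bucketName": ["example-deployment-bucket"],  # placeholder name
            "key": ["Deployment.zip"],
        },
    },
}


def matches(pattern, event):
    """Simplified exact-match check of an event against a pattern."""
    for field, expected in pattern.items():
        if field not in event:
            return False
        actual = event[field]
        if isinstance(expected, dict):
            # Nested pattern: descend into the nested part of the event.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def sample_event(key):
    """Hand-written event with only the fields the pattern looks at."""
    return {
        "source": "aws.s3",
        "detail-type": "AWS API Call via CloudTrail",
        "detail": {
            "eventSource": "s3.amazonaws.com",
            "eventName": "PutObject",
            "requestParameters": {
                "bucketName": "example-deployment-bucket",
                "key": key,
            },
        },
    }


print(matches(EVENT_PATTERN, sample_event("Deployment.zip")))
print(matches(EVENT_PATTERN, sample_event("Notes.txt")))
```

Filtering on the object key is what keeps unrelated uploads to the same bucket from starting the pipeline.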

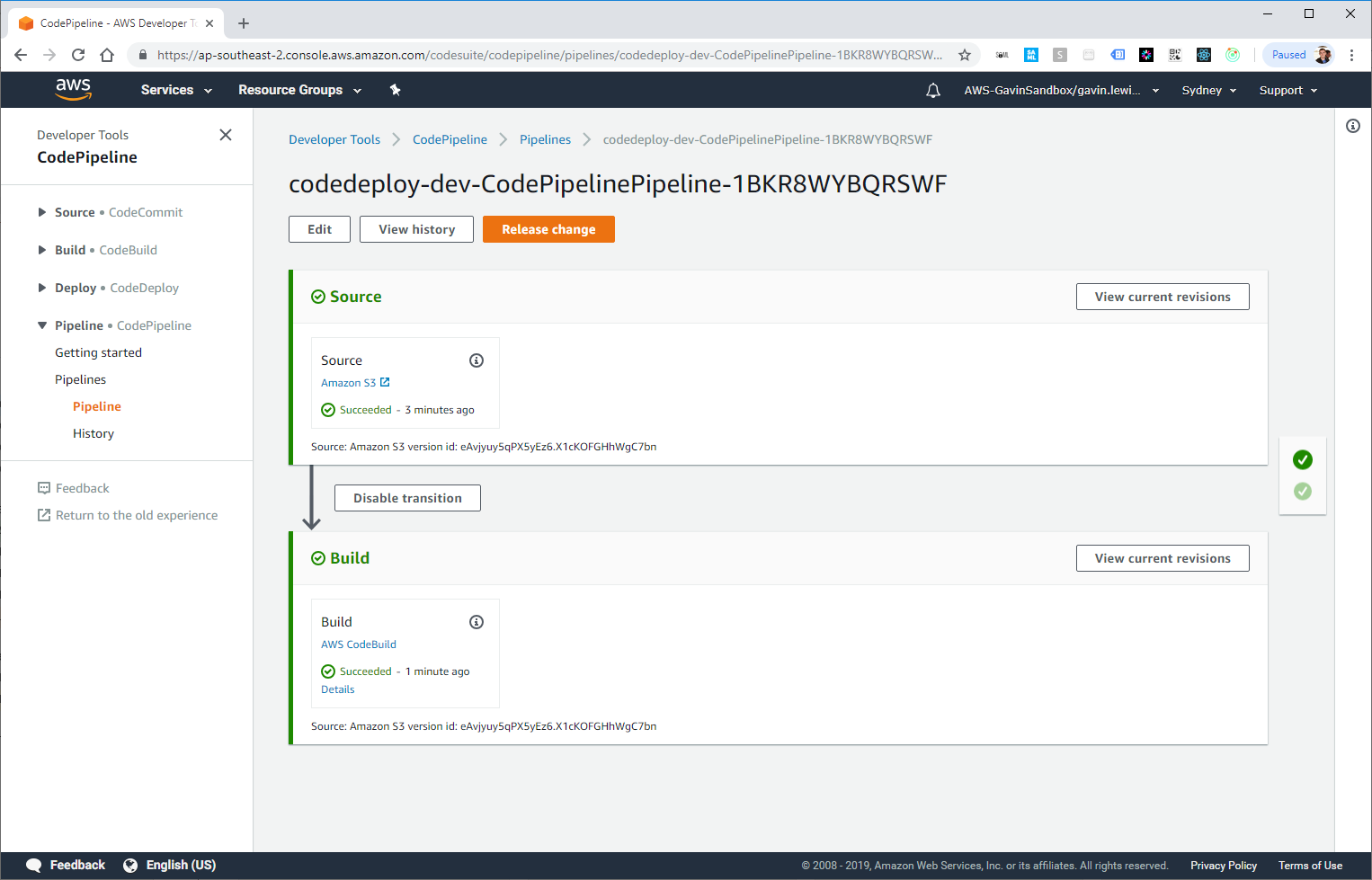

CodePipeline has started and the build has passed.

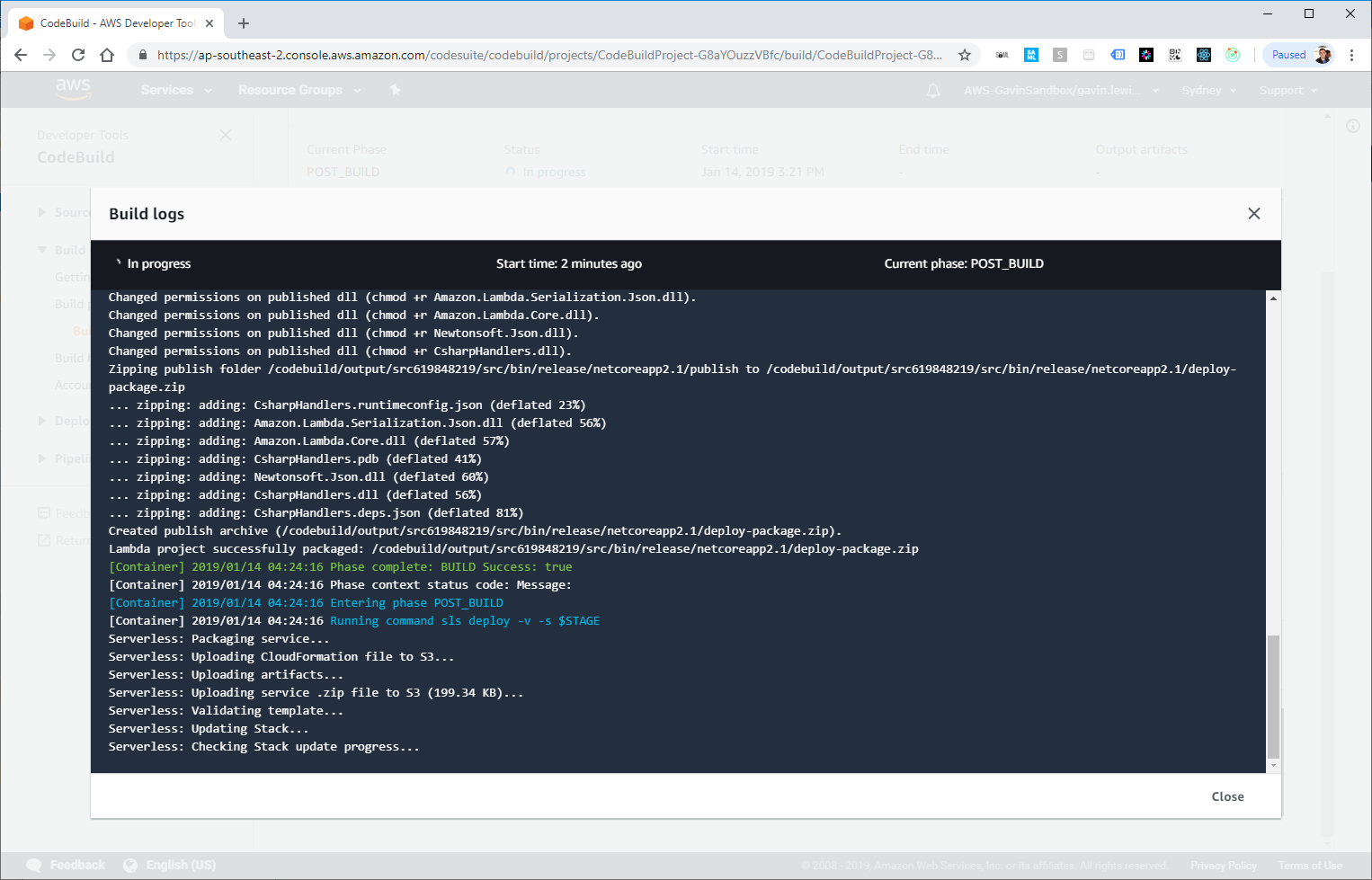

If we go to the details of the AWS CodeBuild step, we can see the build and deployment process:

CodeBuild output from the Docker image that builds and deploys the application

The new application deployed by our pipeline also appears in CloudFormation:

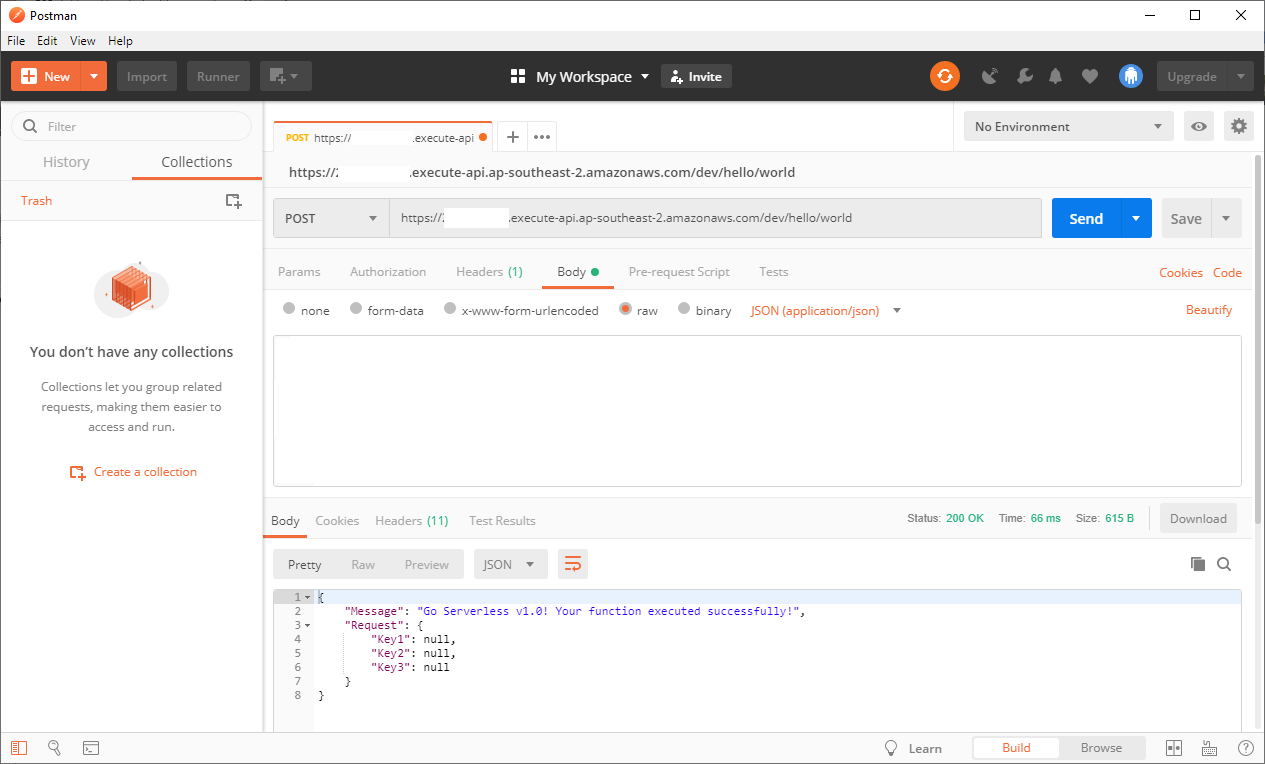

You can test the API endpoint created by our simple application (the URL is in the CodeBuild output or in API Gateway) and make sure the application works.

Brief conclusions

CodePipeline lets you create a scalable, flexible, and inexpensive CI/CD pipeline and helps solve some of the problems associated with traditional pipelines built on servers.

I would like to go further and add unit testing after deployment, but that topic deserves a separate article, and that is a reason to keep reading us in the future!

Source: https://habr.com/ru/post/440756/