Audio AI: isolating vocals from music using convolutional neural networks

Hacking music to democratize derivative content

What if we could go back to 1965, knock on the front door of Abbey Road Studios with an all-access pass, walk in, and hear the real voices of Lennon and McCartney? Well, let's try. Input: a mid-quality MP3 of The Beatles' We Can Work It Out. The top track is the input mix; the bottom track is the isolated vocal extracted by our neural network.

Formally, this problem is known as audio source separation or signal separation. It consists of recovering or reconstructing one or more source signals that have been mixed with other signals through a linear or convolutive process. The field has many practical applications, including speech enhancement and noise removal, music remixing, spatial audio, and remastering. Sound engineers sometimes call this technique demixing. There is a large body of material on the topic, from blind source separation with independent component analysis (ICA), through semi-supervised non-negative matrix factorization, to more recent neural network approaches. Good information on the first two can be found in these mini-tutorials from CCRMA, which I found very useful at the time.

But before diving into the design, a little philosophy of applied machine learning ...

I was working on signal and image processing long before the slogan “deep learning solves everything” took hold, so I can present the solution as a feature engineering journey and show why a neural network is the best approach for this particular problem. Why? Because very often I see people writing something like this:

“With deep learning, you no longer need to worry about feature engineering; it will do it for you.”

or worse ...

“The difference between machine learning and deep learning [wait a minute ... deep learning is still machine learning!] is that in ML you extract the features yourself, whereas in deep learning it happens automatically inside the network.”

Such generalizations probably come from the fact that DNNs can be very effective at learning good latent spaces. But you cannot generalize like that. It really upsets me when recent graduates and practitioners buy into these misconceptions and adopt the “deep learning solves everything” approach, as if it were enough to throw a pile of raw data at a network (perhaps after a little preprocessing) and everything would work as it should. In the real world you have to care about things such as performance and real-time execution. With these misconceptions, you will be stuck in experimentation mode for a very long time ...

Feature engineering remains a very important discipline when designing artificial neural networks. As with any other ML technique, in most cases it is precisely what separates effective production-level solutions from failed or inefficient experiments. A deep understanding of your data and its nature still matters a great deal ...

From A to Z

OK, sermon over. Now let's get to why we are here! As with any data processing problem, let's first see what the data looks like. Take a look at the following vocal excerpt from an original studio recording.

Studio vocals of 'One Last Time', Ariana Grande

Not too interesting, right? That is because we are visualizing the signal in the time domain, where all we see is amplitude changing over time. You could extract all sorts of other things, such as amplitude envelopes, root-mean-square (RMS) values, or the zero-crossing rate (how often the amplitude changes sign), but these features are too primitive and not discriminative enough to help with our problem. If we want to extract vocals from an audio signal, we first need to somehow expose the structure of human speech. Fortunately, the short-time Fourier transform (STFT) comes to the rescue.
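To make those time-domain descriptors concrete, here is a minimal sketch of RMS and zero-crossing rate using only the Python standard library. The function names and the synthetic sine frame are illustrative assumptions, not code from the original project.

```python
import math

def rms(frame):
    """Root-mean-square level of a frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose sign differs."""
    crossings = sum(
        1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(frame) - 1)

# Synthetic frame: 5 full cycles of a sine wave over 1000 samples
frame = [math.sin(2 * math.pi * 5 * n / 1000) for n in range(1000)]
frame_rms = rms(frame)
frame_zcr = zero_crossing_rate(frame)
```

Both numbers summarize a whole frame in a single value, which is exactly why they say so little about harmonic structure.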

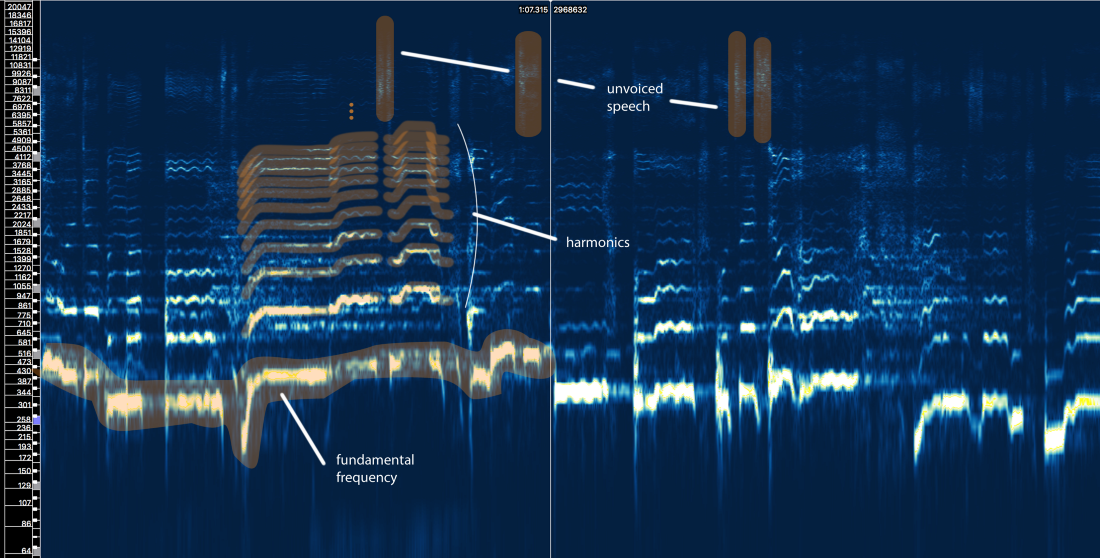

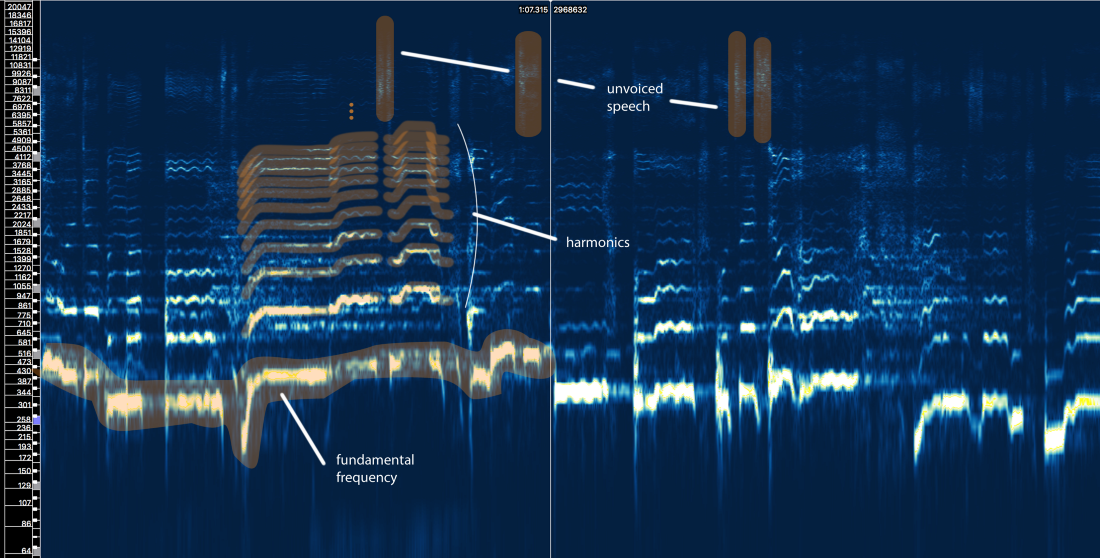

STFT magnitude spectrum: window size = 2048, overlap = 75%, logarithmic frequency scale [Sonic Visualiser]
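A magnitude spectrogram with the same settings can be approximated in a few lines of NumPy. This is a minimal sketch assuming a Hann window and a synthetic 440 Hz tone in place of the vocal recording; `stft_magnitude` is a hypothetical helper, and the figure itself was produced with Sonic Visualiser, not with this code.

```python
import numpy as np

def stft_magnitude(signal, window_size=2048, hop_size=512):
    """Magnitude STFT of a 1-D signal, shape (freq_bins, frames).

    hop_size = window_size / 4 corresponds to 75% overlap.
    """
    window = np.hanning(window_size)
    n_frames = 1 + (len(signal) - window_size) // hop_size
    frames = np.stack([
        signal[i * hop_size:i * hop_size + window_size] * window
        for i in range(n_frames)
    ])
    # rfft keeps only the non-negative frequencies: window_size // 2 + 1 bins
    return np.abs(np.fft.rfft(frames, axis=1)).T

fs = 22050                                  # assumed sampling rate
t = np.arange(fs) / fs                      # one second of audio
tone = np.sin(2 * np.pi * 440.0 * t)        # stand-in for a sung note
mag = stft_magnitude(tone)
peak_hz = int(mag[:, 0].argmax()) * fs / 2048
```

The strongest bin of the first frame lands within one bin width (about 10.8 Hz) of 440 Hz, which is the kind of structure the time-domain view hides.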

Although I love speech processing and would happily play with source-filter modeling, cepstrum, quefrencies, LPC, MFCC and so on, we will skip all of that and focus on the elements that matter for our problem, so that the article is accessible to as many people as possible, not just signal processing specialists.

So, what does the structure of human speech tell us?

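Well, we can identify three main elements here:

- The fundamental frequency (f0), which is determined by the vibration frequency of our vocal cords. In this case, Ariana sings in the range of 300-500 Hz.

- A series of harmonics above f0, which follow a similar shape or pattern. These harmonics appear at integer multiples of f0.

- Unvoiced speech, which includes consonants such as 't', 'p', 'k', 's' (which are not produced by vocal cord vibration), breaths, etc. All of this shows up as short bursts in the high-frequency region.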

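The first attempt using the rules

Let's forget about machine learning for a second. Can a vocal extraction method be built purely on our knowledge of the signal? Let me try ...

Naive vocal isolation V1.0:

1. Identify the sections that contain vocals. There is a lot going on in the original signal. We want to focus on the regions that actually contain vocal content and ignore everything else.

2. Distinguish between voiced and unvoiced speech. As we have seen, they are very different and probably need to be handled differently.

3. Estimate how the fundamental frequency changes over time.

4. Based on step 3, apply some kind of mask to capture the harmonics.

5. Do something about the fragments of unvoiced speech ...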

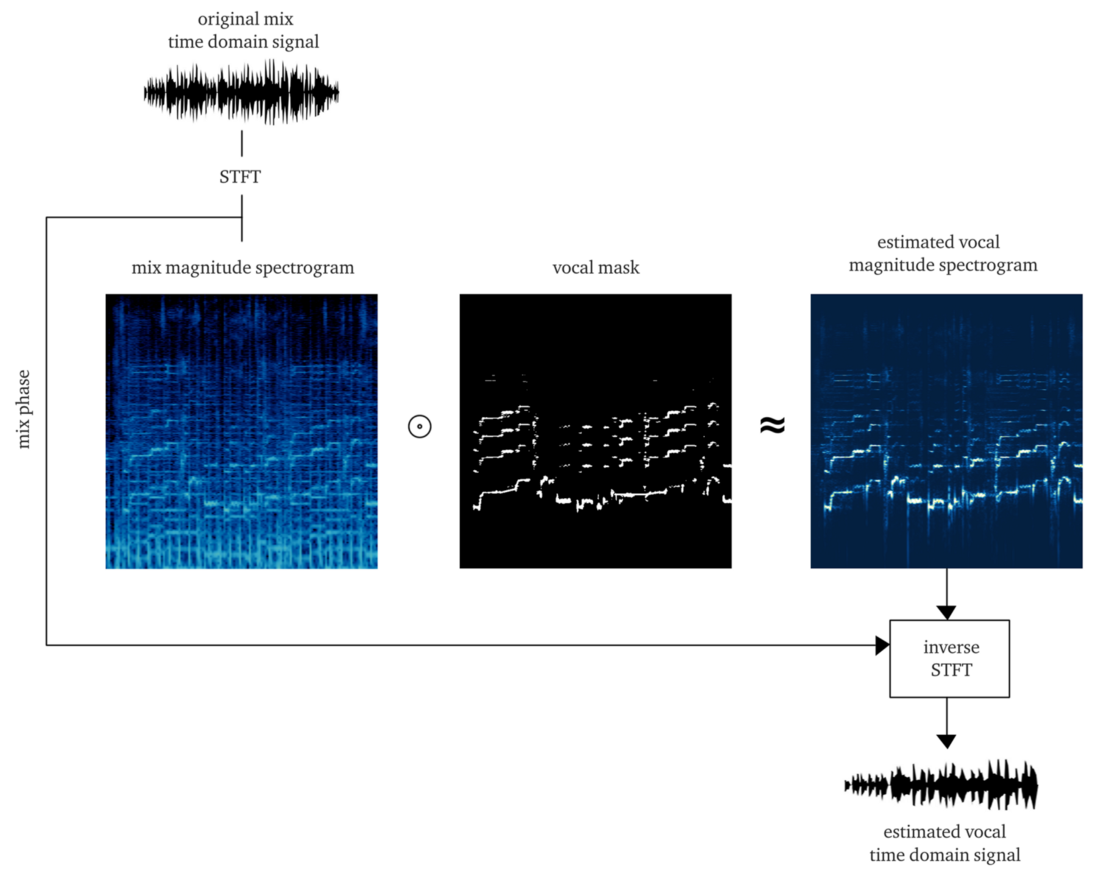

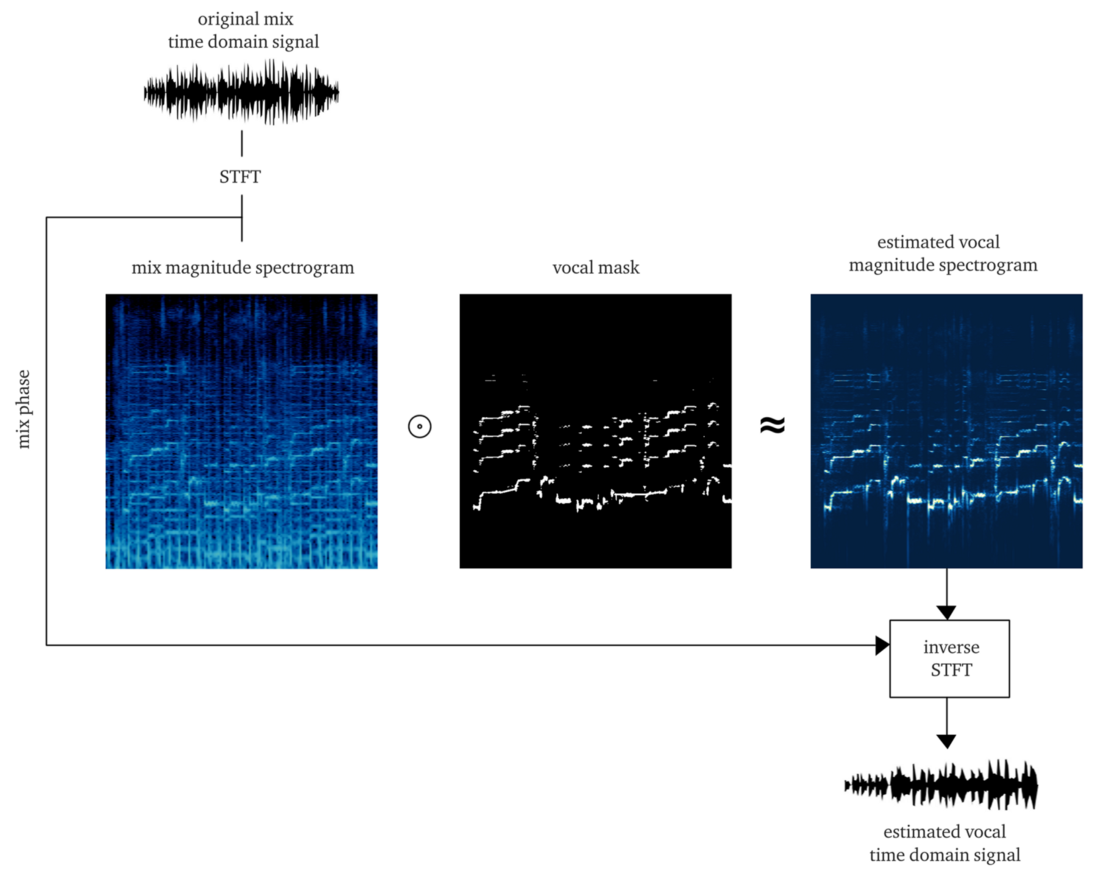
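One way to picture a mask that captures the harmonics of an estimated fundamental frequency is a simple comb over the STFT bins. The sketch below is an illustration under assumptions (22050 Hz sampling rate, 1024-point FFT, ten harmonics, one bin of tolerance on each side); `harmonic_mask` is a hypothetical helper, not the method used later in the article.

```python
import numpy as np

def harmonic_mask(f0_hz, fs=22050, n_fft=1024, n_harmonics=10, width_bins=1):
    """Binary mask of shape (bins, frames) marking bins near k * f0.

    f0_hz holds one f0 estimate per frame; 0 means unvoiced or silent.
    """
    n_bins = n_fft // 2 + 1
    bin_hz = fs / n_fft
    mask = np.zeros((n_bins, len(f0_hz)), dtype=np.float32)
    for t, f0 in enumerate(f0_hz):
        if f0 <= 0:
            continue  # nothing to capture in this frame
        for k in range(1, n_harmonics + 1):
            center = int(round(k * f0 / bin_hz))
            if center >= n_bins:
                break  # harmonic is above the Nyquist frequency
            lo = max(center - width_bins, 0)
            hi = min(center + width_bins + 1, n_bins)
            mask[lo:hi, t] = 1.0
    return mask

# Two frames: one unvoiced, one voiced at 400 Hz
mask = harmonic_mask(np.array([0.0, 400.0]))
```

Even in this toy form the fragility is visible: the mask is only as good as the f0 track feeding it, and it ignores unvoiced sounds entirely.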

If we do a decent job, the result should be a soft or binary mask which, applied to the STFT magnitude of the mix (element-wise multiplication), gives an approximate reconstruction of the STFT magnitude of the vocals. We then combine this vocal magnitude with the phase of the original signal, compute the inverse STFT, and obtain the time-domain signal of the reconstructed vocals.

Doing this from scratch is a lot of work. For the sake of demonstration, let's use an implementation of the pYIN algorithm. Although it is designed to solve step 3, with the right settings it does a reasonable job of steps 1 and 2 as well, tracking the vocal fundamental even in the presence of music. The example below contains the output of this algorithm, with no handling of unvoiced speech.

So what ...? It seems to have done all the work, yet the quality is nowhere near good. Perhaps by spending more time, energy, and money we could improve this method ...
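The mask-then-invert recipe can be sketched with NumPy alone. Everything here is an assumption made for illustration: the Hann window, the 1024/256 STFT settings, the hand-rolled `stft`/`istft` helpers, and the two sine tones standing in for "music" and "vocals". The mask is a crude frequency cutoff rather than anything learned.

```python
import numpy as np

WIN, HOP = 1024, 256  # assumed STFT settings for this sketch

def stft(x):
    """Complex STFT with a Hann window, shape (bins, frames)."""
    w = np.hanning(WIN)
    n_frames = 1 + (len(x) - WIN) // HOP
    frames = np.stack([x[i * HOP:i * HOP + WIN] * w for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1).T

def istft(spec):
    """Weighted overlap-add inverse of `stft` (the edges are not exact)."""
    w = np.hanning(WIN)
    frames = np.fft.irfft(spec.T, n=WIN, axis=1)
    out = np.zeros(HOP * (len(frames) - 1) + WIN)
    norm = np.zeros_like(out)
    for i, frame in enumerate(frames):
        out[i * HOP:i * HOP + WIN] += frame * w
        norm[i * HOP:i * HOP + WIN] += w ** 2
    return out / np.maximum(norm, 1e-8)

def apply_mask(mix_spec, mask):
    """Mask the mix magnitude element-wise and reuse the mix phase."""
    magnitude, phase = np.abs(mix_spec), np.angle(mix_spec)
    return (magnitude * mask) * np.exp(1j * phase)

fs = 22050
t = np.arange(fs) / fs
music = np.sin(2 * np.pi * 200.0 * t)          # stand-in for the backing track
vocal = 0.5 * np.sin(2 * np.pi * 3000.0 * t)   # stand-in for the vocal
mix_spec = stft(music + vocal)

# Toy binary mask: keep every bin above 1500 Hz, in every frame
freqs = np.fft.rfftfreq(WIN, d=1.0 / fs)
mask = (freqs > 1500.0).astype(float)[:, np.newaxis]

vocal_est = istft(apply_mask(mix_spec, mask))
```

Away from the edges, `vocal_est` matches the 3000 Hz tone closely because the two sources do not overlap in frequency. Real vocals and instruments overlap heavily, which is why choosing the mask is the hard part.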

But let me ask you ...

What happens when several voices appear on the track, which is the case in at least 50% of modern professionally produced tracks?

What happens when the vocals are processed with reverb, delays, and other effects? Let's take a look at the last chorus of this Ariana Grande song.

Are you feeling the pain yet ...? I am.

Methods built on hard rules like these very quickly turn into a house of cards. The problem is too complex: too many rules, too many exceptions, and too many varying conditions (effects and mixing settings). A multi-step approach also means that errors in one step propagate to the next. Improving each step would be very costly: it would take a large number of iterations to get everything right. And last but not least, we would likely end up with a very resource-intensive pipeline, which in itself can negate all the effort.

In a situation like this, it is time to start thinking about a more end-to-end approach and let ML figure out some of the underlying processes and operations needed to solve the problem. But we still have to bring our expertise and do feature engineering, and you will see why.

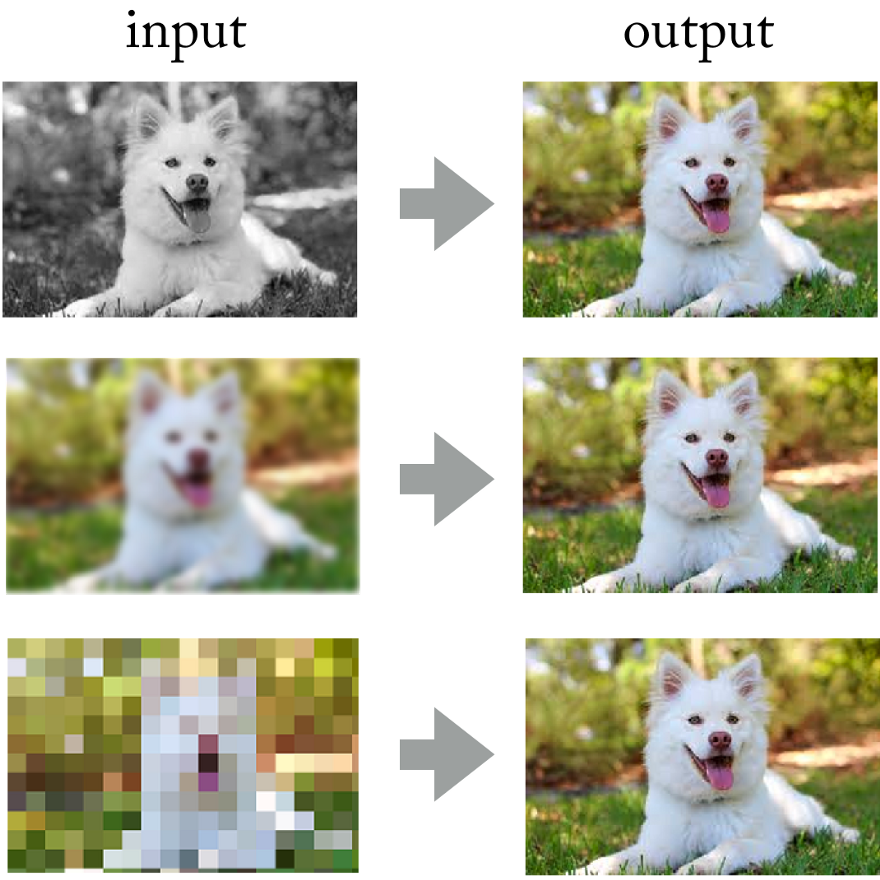

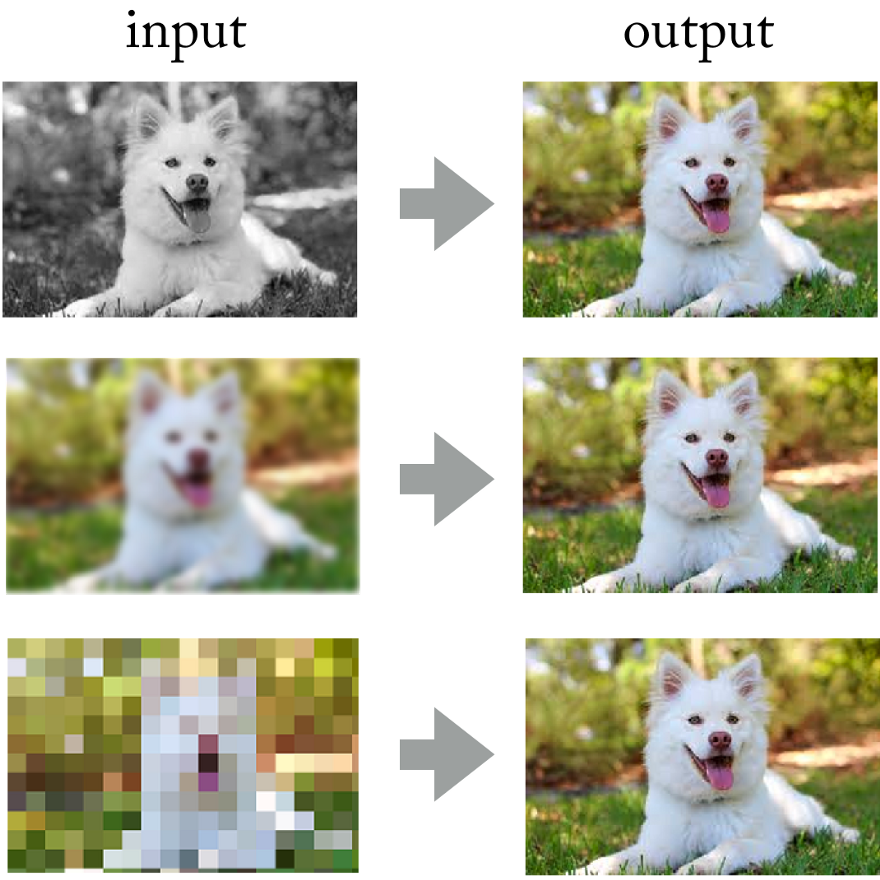

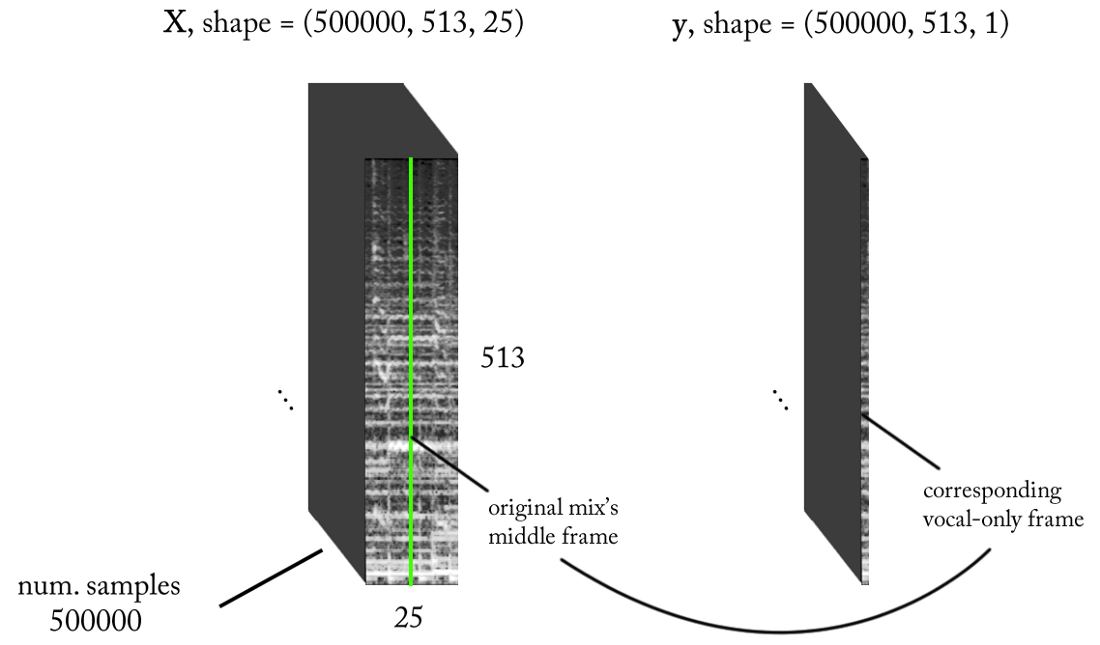

Hypothesis: use a neural network as a transfer function that translates mixes into vocals

Looking at what convolutional neural networks have achieved in photo processing, why not apply the same approach here?

Neural networks successfully handle tasks such as image colorization, sharpening, and super-resolution

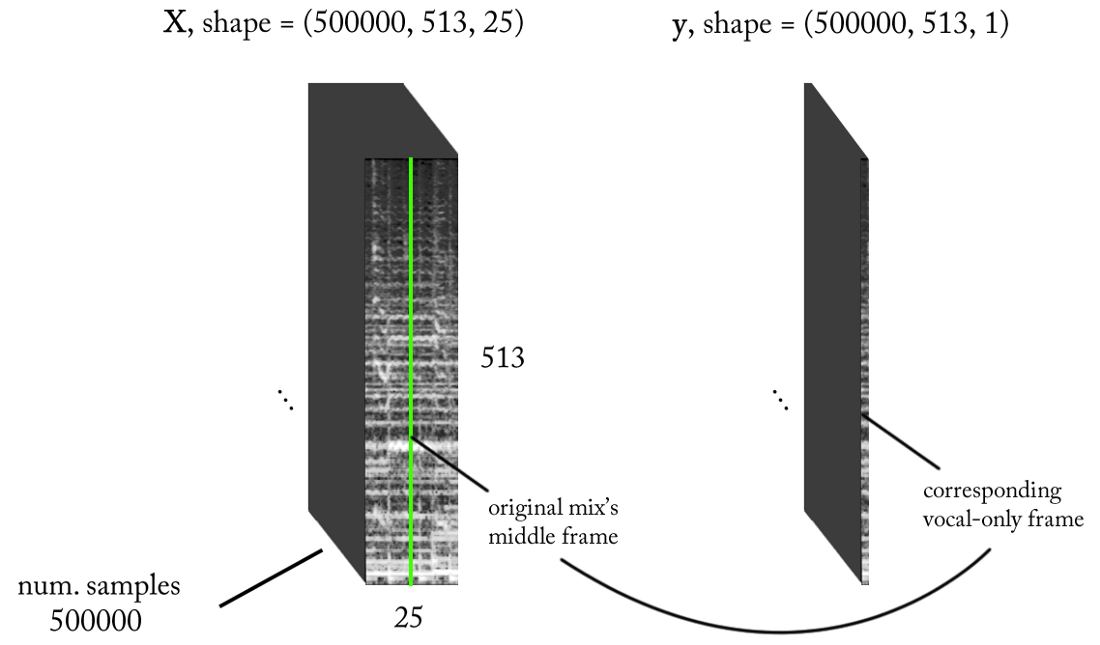

After all, you can also represent an audio signal “as an image” using the short-time Fourier transform, right? Although these audio images do not follow the statistical distribution of natural images, they still contain spatial patterns (in time and frequency) on which the network can be trained.

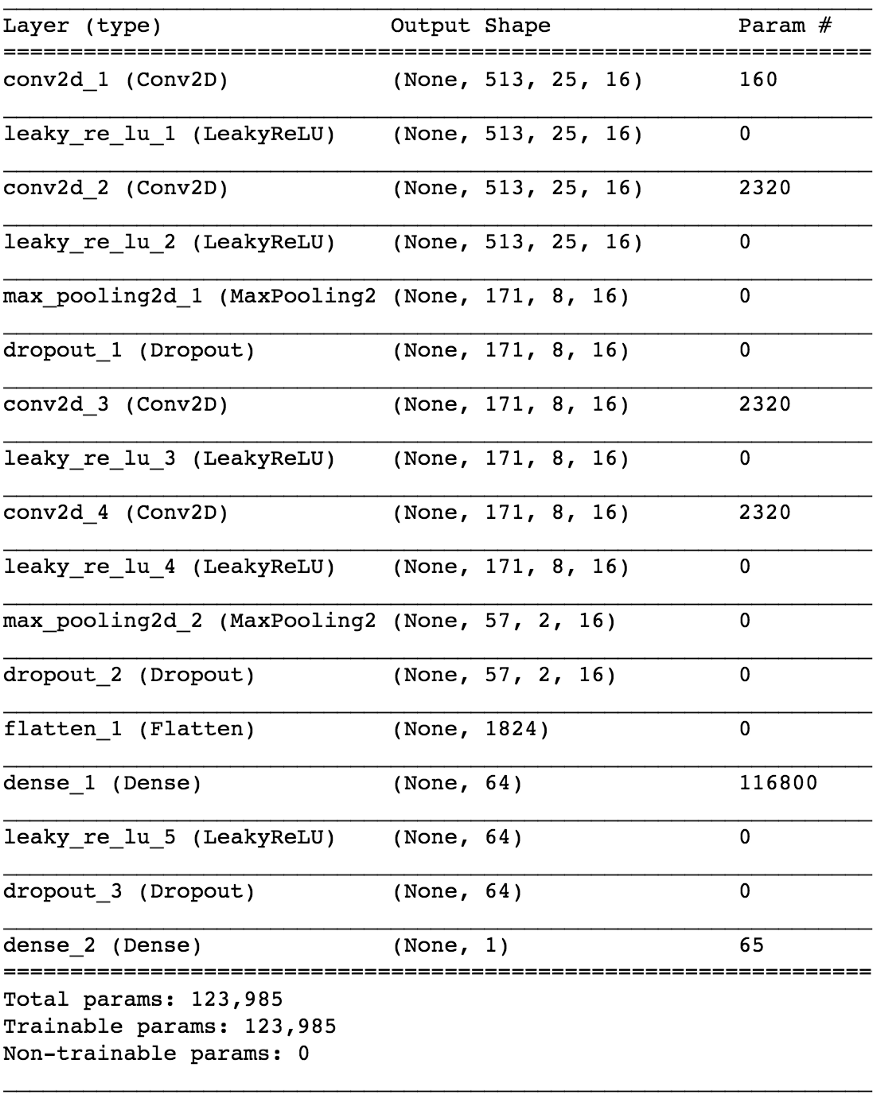

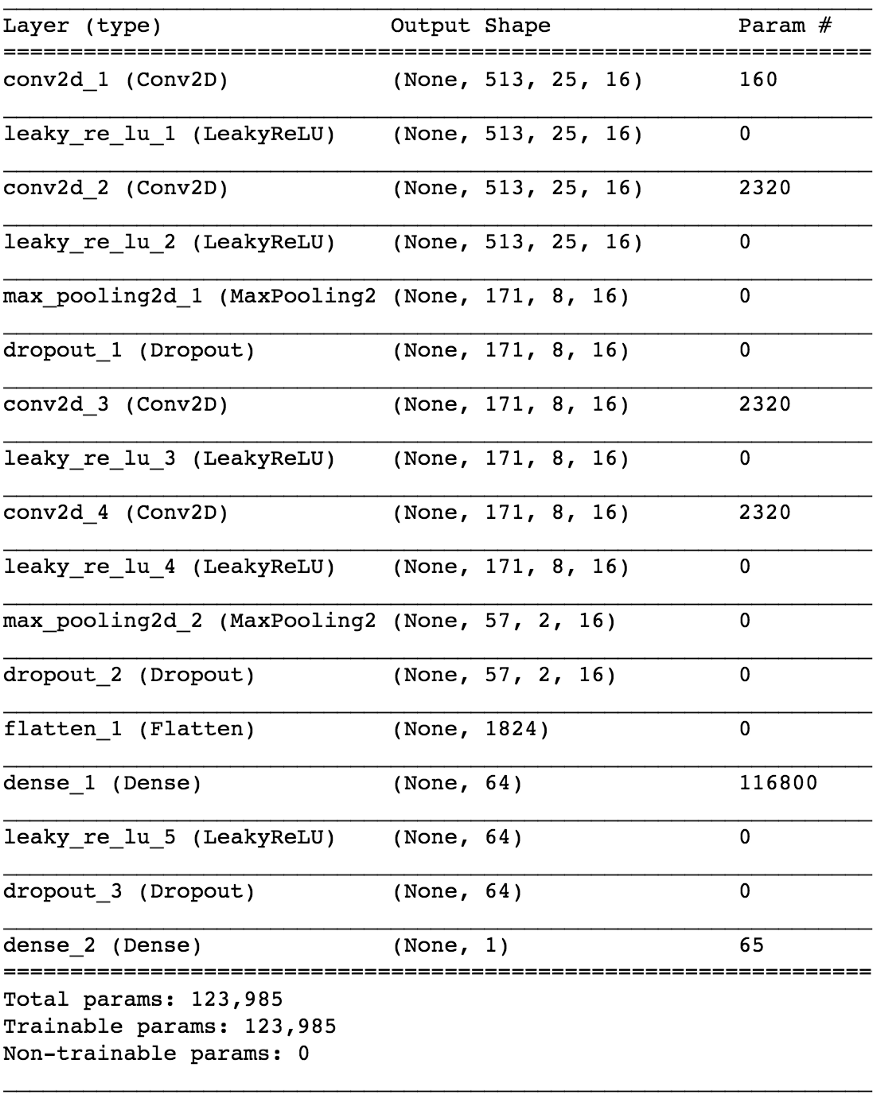

Left: drum beat and bassline at the bottom, a few synthesizer sounds in the middle, all mixed with vocals. Right: vocals only

Running such an experiment would be costly, because the required training data is hard to obtain or generate. In applied research I always try to take the following approach: first, identify a simpler problem that validates the same principles but does not require much work. This lets you evaluate the hypothesis, iterate faster, and correct the model at minimal cost if it does not work as expected.

The underlying assumption is that the neural network must understand the structure of human speech. A simpler problem could be this: can a neural network detect the presence of speech in an arbitrary fragment of an audio recording? We are talking about a robust voice activity detector (VAD) implemented as a binary classifier.

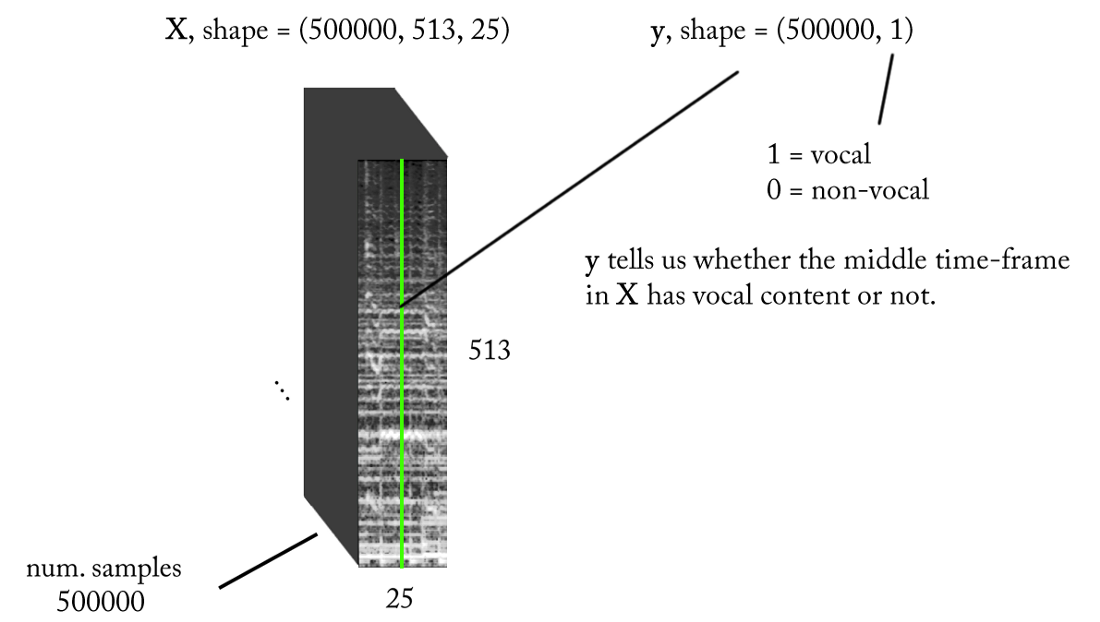

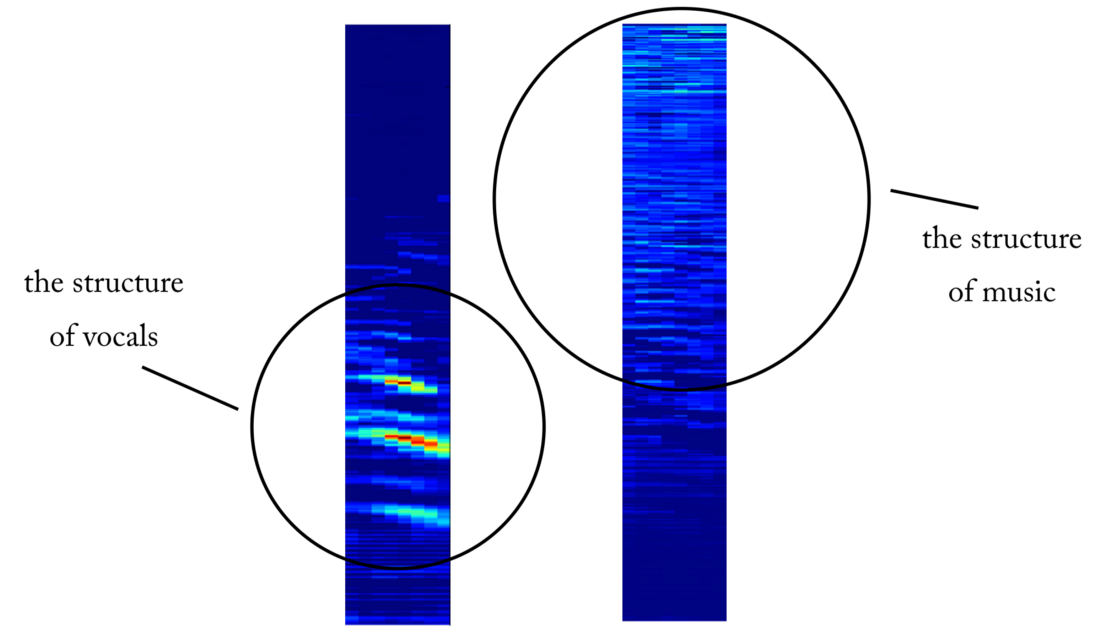

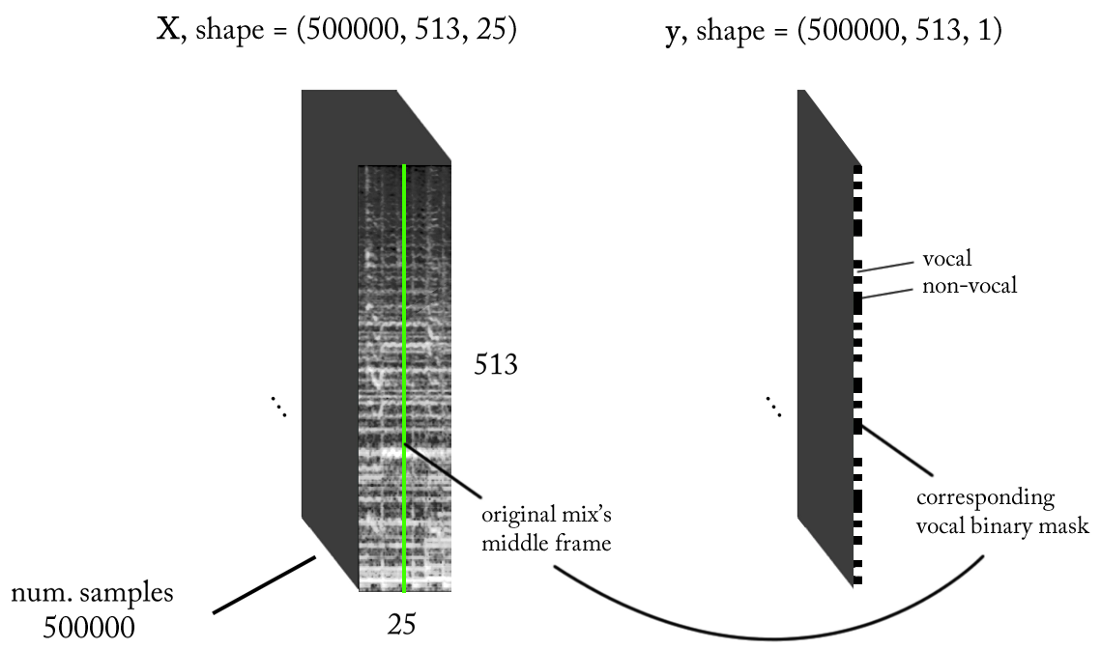

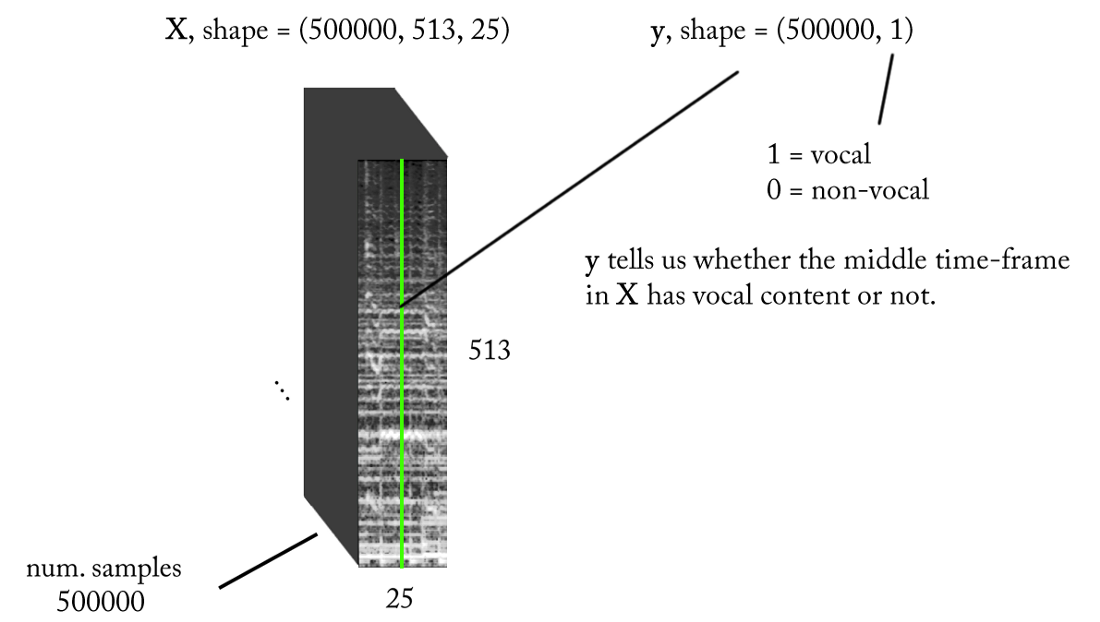

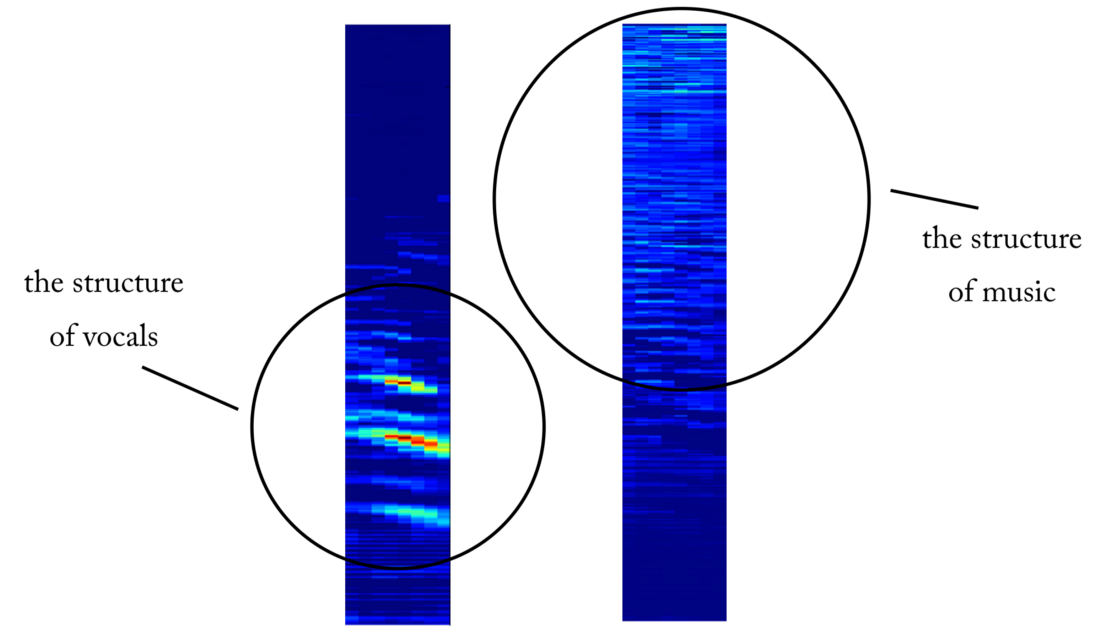

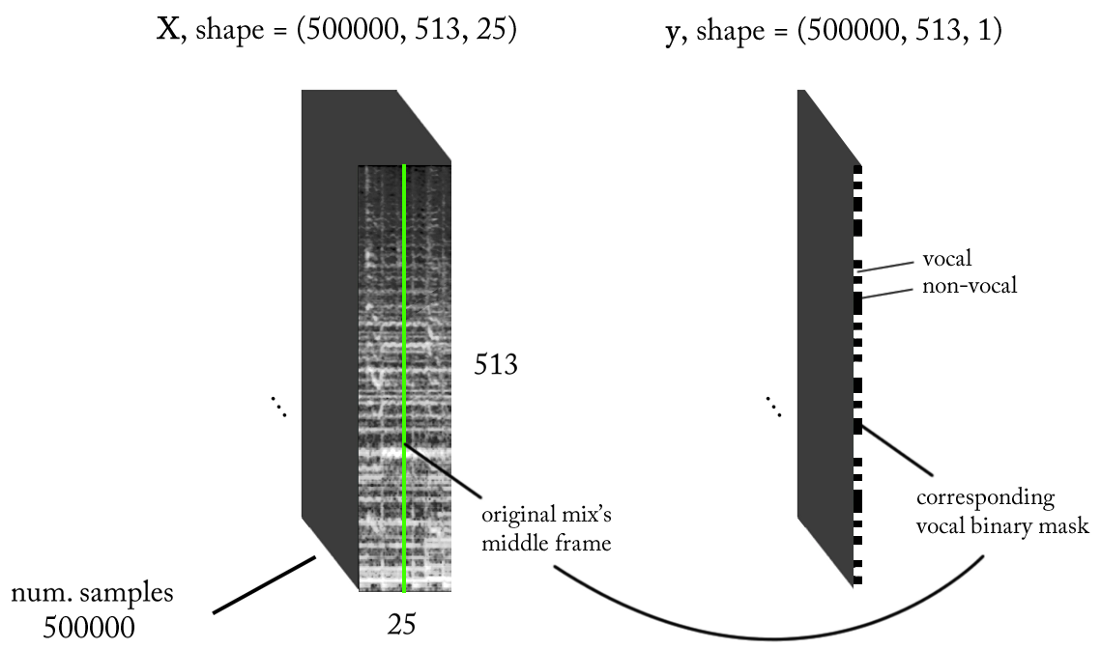

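Designing the feature space

We know that audio signals such as music and human speech are built on temporal dependencies. Simply put, nothing happens in isolation at a given instant. If I want to know whether a voice is present in a particular fragment of audio, I need to look at the neighboring regions. This temporal context provides useful information about what is happening in the region of interest. At the same time, we want to classify in very small time steps, so that the human voice is detected with the highest possible time resolution.

Let's do some math ...

- Sampling rate (fs): 22050 Hz (we downsample from 44100 to 22050)

- STFT design: window size = 1024, hop size = 256, mel-scale interpolation for a perceptual weighting filter. Since our input data is real-valued, we can work with half of the STFT (the explanation is beyond the scope of this article ...) while keeping the DC component (optional), which gives us 513 frequency bins.

- Target classification resolution: one STFT frame (~11.6 ms = 256 / 22050)

- Target temporal context: ~300 ms = 25 STFT frames

- Target number of training examples: 500 thousand

- Assuming a sliding window with a stride of one STFT frame to generate the training data, we need about 1.6 hours of labeled audio to generate 500 thousand examples

Given the above requirements, the input and output of our binary classifier are as follows: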

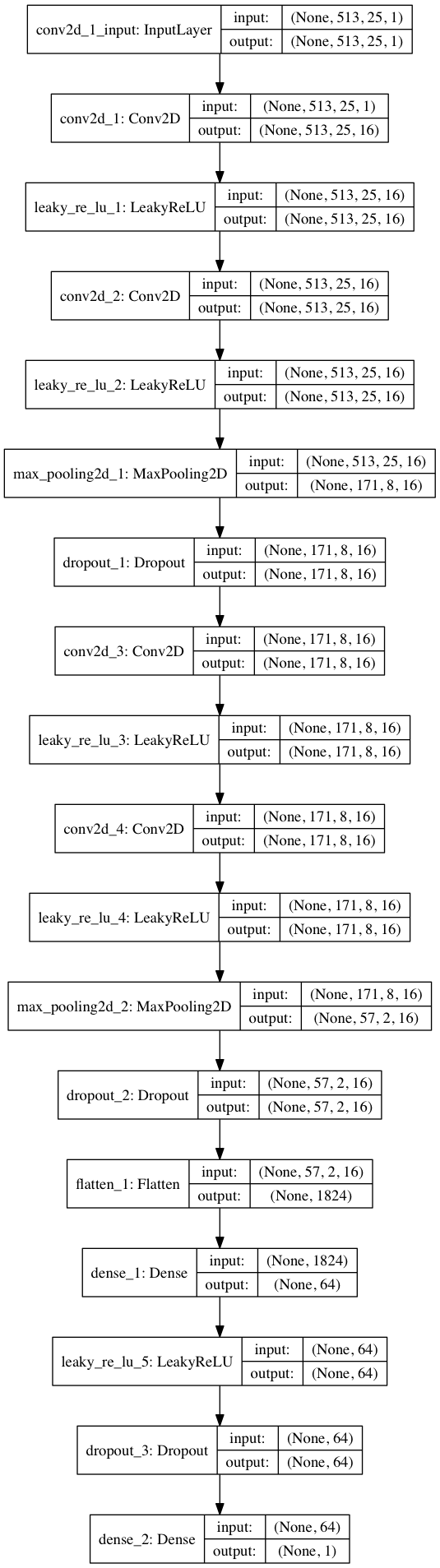

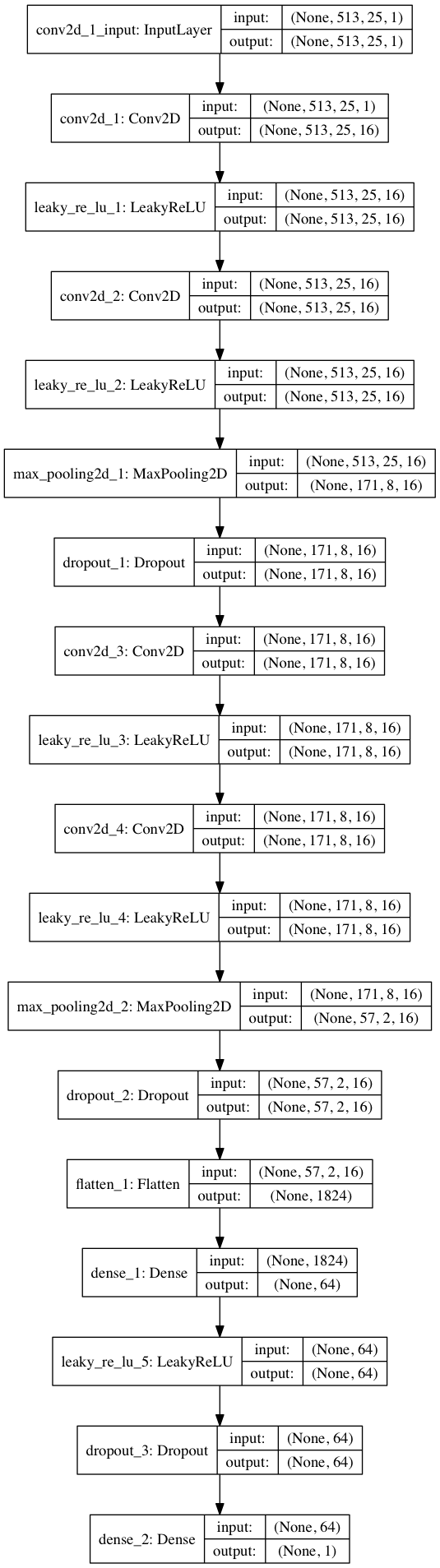
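The figures quoted in this section follow directly from the sampling rate, the STFT settings, and the number of examples. As a quick sanity check, here is the arithmetic in plain Python; the variable names are mine, and the input shape assumes a single-channel magnitude spectrogram.

```python
fs = 22050                  # sampling rate after downsampling, in Hz
window_size = 1024          # STFT window length, in samples
hop_size = 256              # STFT hop, in samples
context_frames = 25         # temporal context fed to the classifier
target_examples = 500_000   # one example per STFT frame

freq_bins = window_size // 2 + 1                  # half spectrum plus DC
frame_ms = 1000 * hop_size / fs                   # time step of one frame
context_ms = context_frames * frame_ms            # span of the context
hours = target_examples * hop_size / fs / 3600    # labeled audio required
input_shape = (freq_bins, context_frames, 1)      # classifier input
```

This gives 513 bins, about 11.6 ms per frame, roughly 290 ms of context (the "~300 ms" above), and about 1.6 hours of audio.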

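Model

Using Keras, we build a small neural network model to test our hypothesis.

    import keras
    from keras.models import Sequential
    from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D
    from keras.layers import LeakyReLU
    from keras.optimizers import SGD

    # Input: 513 frequency bins x 25 STFT frames x 1 channel
    model = Sequential()
    model.add(Conv2D(16, (3, 3), padding='same', input_shape=(513, 25, 1)))
    model.add(LeakyReLU())
    model.add(Conv2D(16, (3, 3), padding='same'))
    model.add(LeakyReLU())
    model.add(MaxPooling2D(pool_size=(3, 3)))
    model.add(Dropout(0.25))

    model.add(Conv2D(16, (3, 3), padding='same'))
    model.add(LeakyReLU())
    model.add(Conv2D(16, (3, 3), padding='same'))
    model.add(LeakyReLU())
    model.add(MaxPooling2D(pool_size=(3, 3)))
    model.add(Dropout(0.25))

    # Classifier head: a single sigmoid unit (vocals present or not)
    model.add(Flatten())
    model.add(Dense(64))
    model.add(LeakyReLU())
    model.add(Dropout(0.5))
    model.add(Dense(1, activation='sigmoid'))

    # Keras 2 arguments; newer releases spell `lr` as `learning_rate`
    # and replace `decay` with learning-rate schedules.
    sgd = SGD(lr=0.001, decay=1e-6, momentum=0.9, nesterov=True)
    model.compile(loss=keras.losses.binary_crossentropy, optimizer=sgd, metrics=['accuracy'])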

With an 80/20 train/test split, after ~50 epochs we reach a test accuracy of ~97%. This is sufficient evidence that our model can discriminate between musical fragments with vocals and fragments without them. Inspecting some feature maps from the 4th convolutional layer, we can conclude that the network seems to have optimized its kernels for two tasks: filtering out the music and filtering out the vocals ...

An example of feature maps at the output of the 4th convolutional layer. Apparently, the output on the left is the result of kernel operations that try to preserve vocal content while ignoring the music. The high values resemble the harmonic structure of human speech. The feature map on the right appears to be the result of the opposite task.

From voice detector to source separation

Having solved the simpler classification problem, how do we get to actually separating vocals from music? Well, looking back at the first naive method, we still want to somehow obtain the magnitude spectrogram of the vocals. This now becomes a regression task. What we want is, for a given time frame of the STFT of the original signal, that is, the mix (with sufficient temporal context), to compute the corresponding magnitude spectrum of the vocals in that time frame.

What about the training data set? (you may be asking at this point)

Damn ... fair question. I was going to cover that at the end of the article so as not to get sidetracked!

If our model learns well, then for inference all we need is a simple sliding window over the STFT of the mix. After each prediction, we move the window one time frame to the right, predict the next vocal frame, and concatenate it with the previous predictions. As for the model, let's take the same model used for the voice detector and make small changes: the output shape is now (513, 1), with linear activation at the output and MSE as the loss function. Now we start training.

Don't get too excited yet ...

Although this input/output representation makes sense, after training our model several times with different parameters and data normalizations, the results are not there. It seems we are asking for too much ...

We have moved from a binary classifier to a regression on a 513-dimensional vector. Although the network does learn the task to some extent, the reconstructed vocals still contain obvious artifacts and interference from other sources. Even after adding more layers and increasing the number of model parameters, the results do not change much. So the question becomes: how do we “trick” the network by simplifying the task while still achieving the desired results?

What if, instead of estimating the STFT magnitude of the vocals, we trained the network to produce a binary mask which, when applied to the STFT of the mix, gives us a simplified but perceptually acceptable magnitude spectrogram of the vocals?
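The sliding-window inference loop can be sketched as follows. Several things are assumptions on my part: the window is centered on the frame being predicted, the edges are zero-padded, and `predict_frame` stands in for a wrapper around the trained model. The demo uses a dummy predictor that simply returns the center frame, so the code runs without Keras.

```python
import numpy as np

def separate_by_sliding_window(mix_mag, predict_frame, context=25):
    """Predict one vocal frame per step and concatenate the results.

    mix_mag: (bins, frames) magnitude STFT of the mix.
    predict_frame: callable mapping a (1, bins, context, 1) batch to a
        (bins, 1) vocal frame, e.g. a wrapper around a trained model.
    """
    half = context // 2
    # Zero-pad in time so every frame has a full context window around it
    padded = np.pad(mix_mag, ((0, 0), (half, half)))
    predicted = []
    for t in range(mix_mag.shape[1]):
        window = padded[:, t:t + context]   # slide by one frame per step
        predicted.append(predict_frame(window[np.newaxis, :, :, np.newaxis]))
    return np.concatenate(predicted, axis=1)

def center_frame(batch):
    """Dummy predictor: returns the middle frame of the context window."""
    return batch[0, :, batch.shape[2] // 2, :]

mix_mag = np.random.default_rng(0).random((513, 40))
vocal_mag = separate_by_sliding_window(mix_mag, center_frame)
```

With a real model, calling the predictor once per frame is slow; stacking all the windows into one batch and predicting in a single call is the usual optimization.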

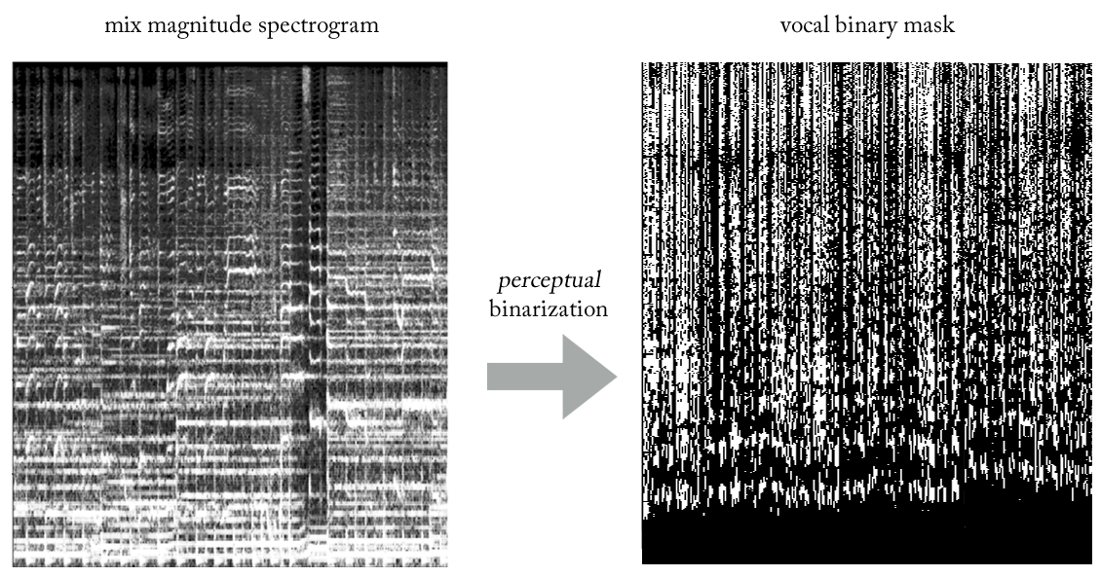

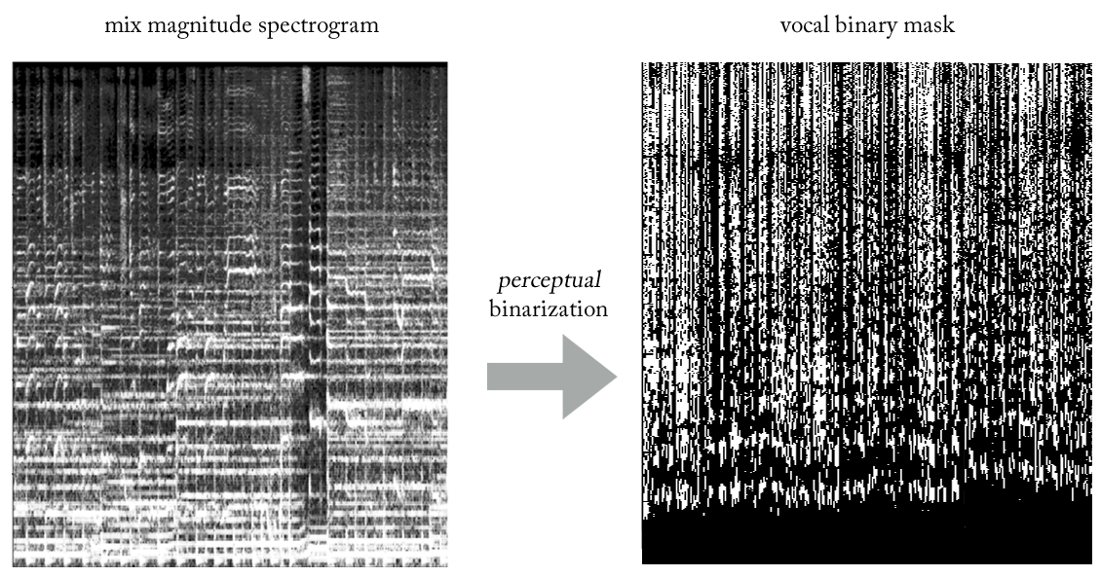

By experimenting with various heuristics, we came up with a very simple (and, of course, unorthodox from a signal processing standpoint ...) way of extracting vocals from mixes using binary masks. Without going into detail, the essence is as follows. Think of the output as a binary image, where a value of '1' indicates the predominant presence of vocal content at a given frequency and time frame, and a value of '0' indicates the predominant presence of music at that location. We can call it perceptual binarization, just to give it a name. Visually it looks pretty ugly, to be honest, but the results are surprisingly good.

Our problem now becomes a kind of regression-classification hybrid (very roughly speaking ...). We ask the model to “classify pixels” at the output as vocal or non-vocal, although conceptually (and also in terms of the MSE loss function used) the task is still regression.
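The article does not disclose how its binarization is computed, so the following is not the author's method. As a point of reference, a common textbook target is the ideal binary mask, which marks a time-frequency bin as vocal when the vocal magnitude exceeds the accompaniment magnitude. The sketch assumes the isolated vocal and accompaniment spectrograms are available at training time; the tiny arrays are made up for illustration.

```python
import numpy as np

def binary_vocal_mask(vocal_mag, accompaniment_mag):
    """1.0 where the vocal magnitude dominates, 0.0 elsewhere."""
    return (vocal_mag > accompaniment_mag).astype(np.float32)

# Toy spectrograms: 3 frequency bins x 2 time frames
vocal_mag = np.array([[0.9, 0.1],
                      [0.2, 0.0],
                      [0.5, 0.7]])
accompaniment_mag = np.array([[0.1, 0.4],
                              [0.6, 0.3],
                              [0.4, 0.2]])

mask = binary_vocal_mask(vocal_mag, accompaniment_mag)

# Crude stand-in for the mix magnitude (ignores phase interactions)
mix_mag = vocal_mag + accompaniment_mag
masked_mix = mix_mag * mask
```

Each output "pixel" is either kept or discarded, which is what makes the target so much more constrained than a full 513-value magnitude regression.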

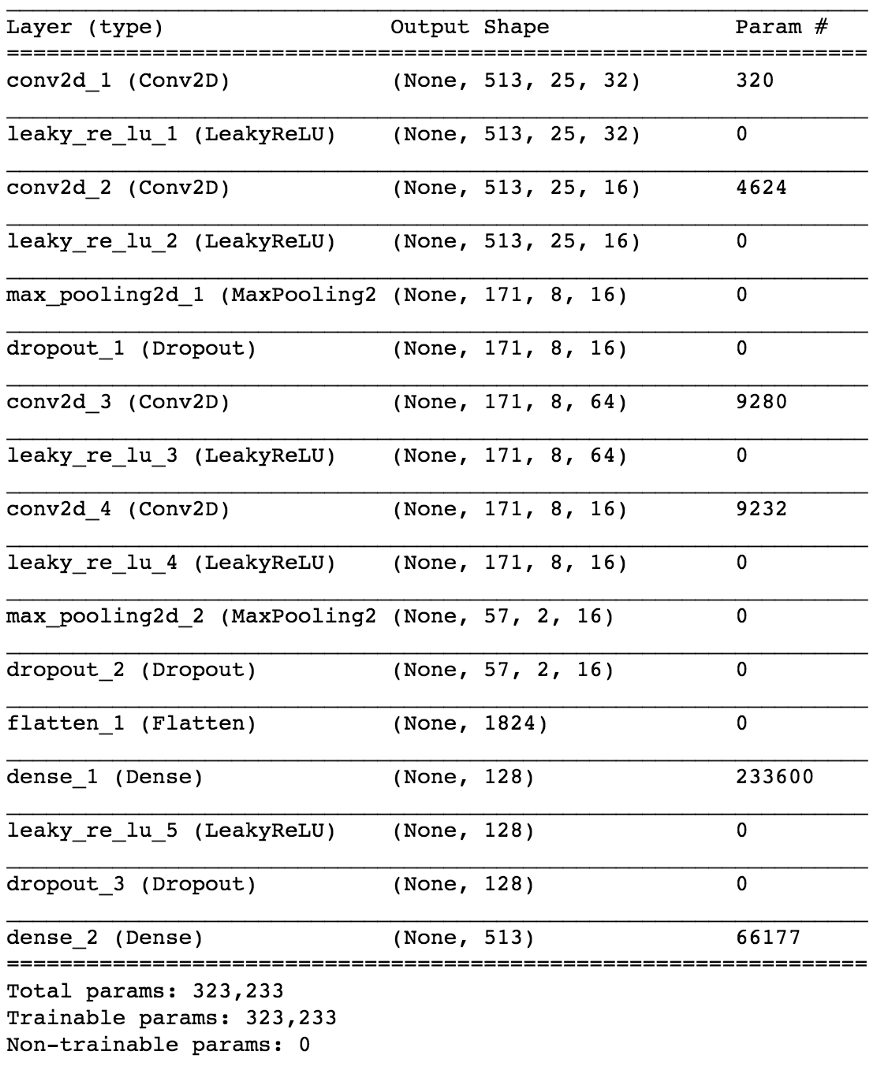

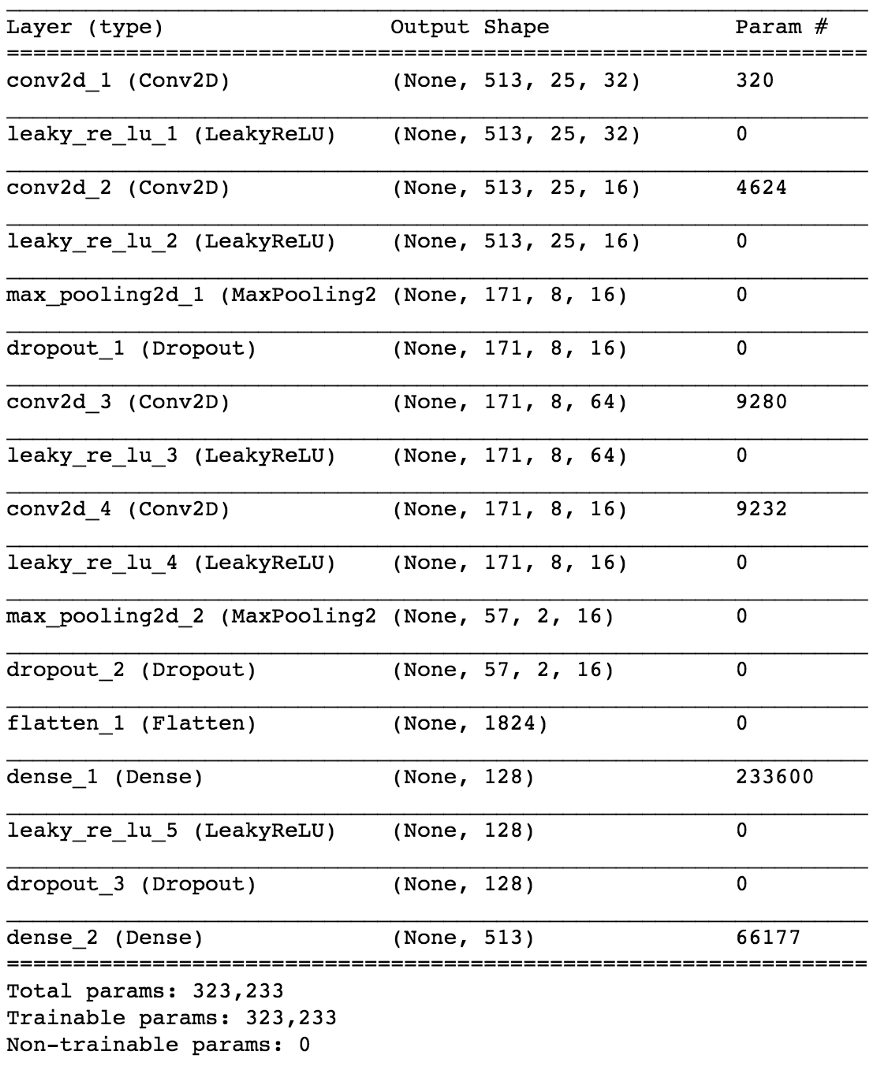

Although this distinction may seem irrelevant to some, it actually makes a big difference to the model's ability to learn the task, the second formulation being simpler and more constrained. At the same time, it lets us keep the model relatively small in terms of parameter count given the complexity of the task, which is highly desirable for real-time operation, a design requirement in this case. After some minor tweaks, the final model looks like this.

How to restore the time-domain signal?

Essentially, as in the naive method. In this case, on each pass we predict one time frame of the binary vocal mask. Again, by implementing a simple sliding window with a stride of one time frame, we keep estimating and concatenating successive time frames, which eventually make up the entire binary vocal mask.

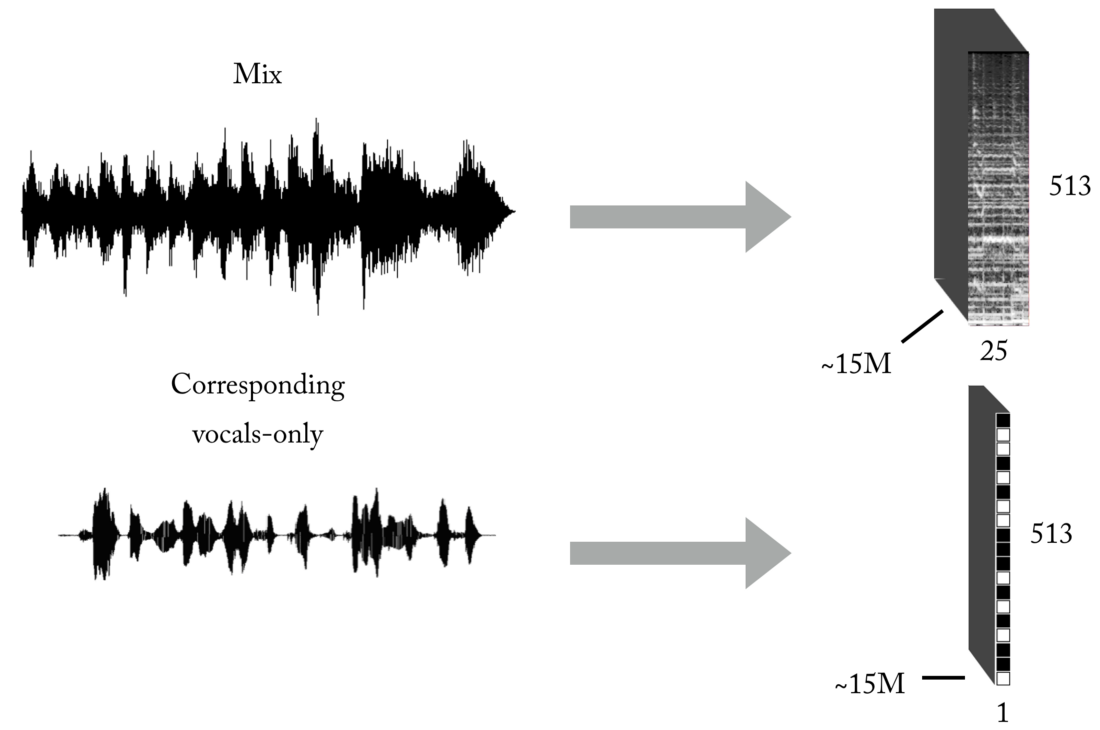

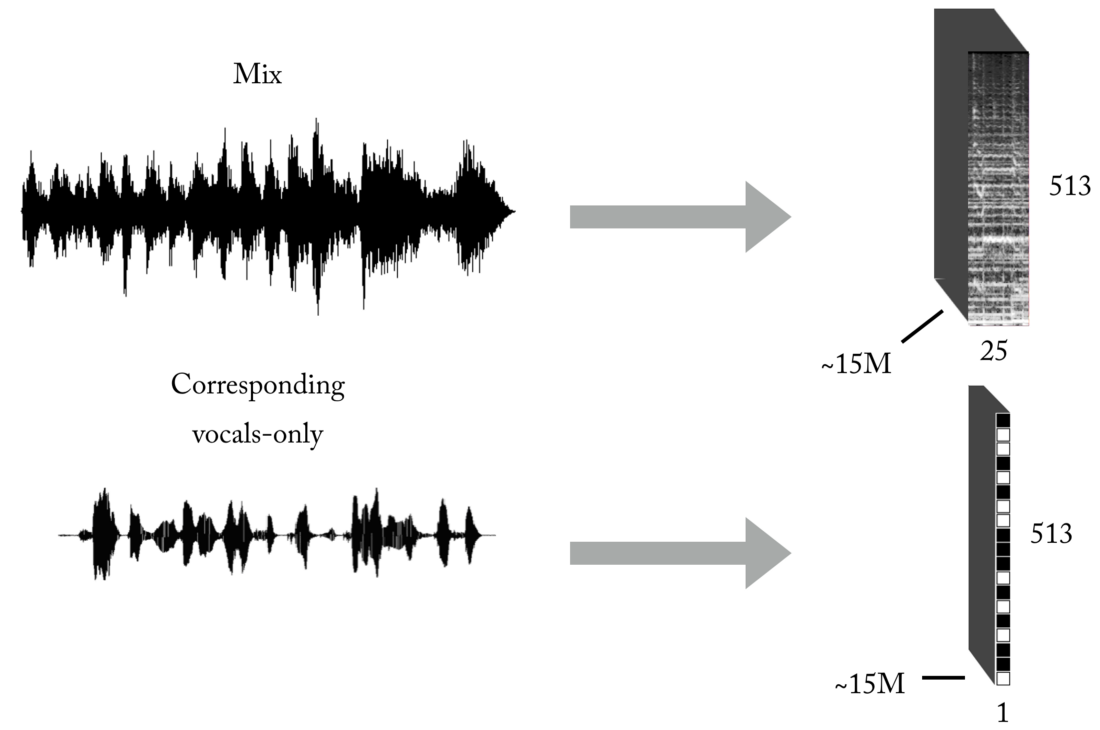

Creating a training set

As you know, one of the main challenges in supervised learning (leave aside the toy examples with ready-made datasets) is having the right data, in quantity and quality, for the specific problem you are trying to solve. Given the input and output representations described, training our model first requires a significant number of mixes and their corresponding vocal tracks, perfectly aligned and normalized. Such a set can be built in several ways, and we used a combination of strategies, from manually creating [mix <-> vocals] pairs out of a few a cappellas found on the Internet, to hunting down musical material from rock bands and scraping YouTube. Just to give you an idea of how time-consuming and painful the process is, part of the project was developing a tool for automatic [mix <-> vocals] pairing:

You need a really large amount of data for the neural network to learn the transfer function that maps mixes to vocals. Our final set consisted of about 15 million samples of 300 ms mixes and their corresponding binary vocal masks.

As you probably know, building an ML model for a specific task is only half the battle. In the real world, you also need to think about the software architecture, especially if you need to run in real time or close to it.

In this particular implementation, the time-domain reconstruction can happen right after the full binary vocal mask has been predicted (offline mode) or, more interestingly, in a multi-threaded mode where we acquire and process the data, reconstruct the vocals, and play back the audio, all in small segments, close to streaming and even nearly in real time, processing music that is being recorded on the fly with minimal latency. This is a whole topic in itself, and I will leave it for another article on real-time ML pipelines ...

Here you can hear some minimal noise from the drums ...

Notice how, at the very beginning, our model extracts the screams of the crowd as vocal content :). In this case there is some interference from other sources. Since this is a live recording, it seems acceptable that the extracted vocals are of lower quality than in the previous examples.

The article is already quite long, but given the work you have put in, you deserve to hear one last demo. With exactly the same logic as for vocal extraction, we can try to split stereo music into stems (drums, bass, vocals, other) by making a few changes to our model and, of course, having the appropriate training set :).

Thank you for reading. As a final note: as you can see, the actual model of our convolutional neural network is nothing special. The success of this work came down to feature engineering and a careful hypothesis-validation process, which I will write about in future articles!

Disclaimer: all intellectual property, projects and methods described in this article are disclosed in patents US10014002B2 and US9842609B2.

I wish to return to 1965, knock on the front door of the Abbey Road studio with a pass, go inside - and hear the real voices of Lennon and McCartney ... Well, let's try. Input: The Beatles' Mid-Quality MP3 Song We Can Work it Out . The top track is the input mix, the bottom track is the isolated vocal that our neural network allocated.

Formally, this problem is known as audio source separation or audio source separation. It consists in the restoration or reconstruction of one or several source signals that are mixed with other signals as a result of a linear or convolutional process. This area of research has many practical applications, including improving sound quality (speech) and noise elimination, musical remixes, spatial distribution of sound, remastering, etc. Sound engineers sometimes call this technique demixing. There are a large number of resources on this topic, from blind signal separation with independent component analysis (ICA) to semi-controlled factorization of non-negative matrices and ending with more recent neural network approaches. Good information on the first two points can be found in these mini-manuals from CCRMA, which at one time I was very useful.

But before diving into the development ... quite a bit of the philosophy of applied machine learning ...

I was engaged in processing signals and images even before the slogan “deep learning solves everything” was spread, so I can provide you with a solution as a travel engineering feature and show why a neural network is the best approach for this particular problem . What for? Very often, I see people writing something like this:

“With deep learning, you no longer need to worry about choosing signs; it will do it for you. ”

or worse ...

"The difference between machine learning and deep learning [wait a minute ... deep learning is still machine learning!] In ML you take out the signs yourself, but in deep learning it happens automatically within the network."

Such generalizations probably come from the fact that DNNs can be very effective in exploring good hidden spaces. But so it is impossible to generalize. It really upsets me when recent graduates and practitioners are amenable to the above misconceptions and adopt the “deep-learning-solves-all” approach. They say that it is enough to sketch a bunch of raw data (even if after a little preliminary processing) - and everything will work as it should. In the real world, you need to take care of things such as performance, execution in real time, etc. Because of such misconceptions, you will be stuck for a very long time in the experiment mode ...

Feature Engineering remains a very important discipline in the design of artificial neural networks. As in any other ML technique, in most cases it is precisely it that distinguishes effective production-level solutions from unsuccessful or ineffective experiments. A deep understanding of your data and their nature still means a lot ...

From A to Z

Ok, I finished the sermon. Now let's see why we are here! As with any data processing problem, we’ll first see how they look. Take a look at the following vocal excerpt from the original studio recording.

Studio vocals of 'One Last Time', Ariana Grande

Not too interesting, right? Well, that's because we visualize the signal in time . Here we only see the amplitude changes over time. But you can extract all sorts of other things, such as amplitude envelopes (envelope), rms values (RMS), the rate of change from positive amplitude to negative (zero-crossing rate), etc., but these signs are too primitive and not sufficiently distinctive, to help in our problem. If we want to extract vocals from an audio signal, we first need to somehow determine the structure of human speech. Fortunately, the window Fourier transform (STFT) comes to the rescue.

Amplitude spectrum STFT - window size = 2048, overlap = 75%, logarithmic frequency scale [Sonic Visualizer]

Although I love speech processing and definitely love playing with input filter modeling, kepstrom, sratottami, LPC, MFCC and so on , we’ll skip all this nonsense and focus on the main elements related to our problem so that the article is understandable to as many people as possible. not just signal processing specialists.

So, what does the structure of human speech tell us?

Well, we can define three main elements here:

- The fundamental frequency (f0), which is determined by the vibration frequency of our vocal cords. In this case, Ariana sings in the range of 300-500 Hz.

- A number of harmonics above f0, which follow a similar form or pattern. These harmonics appear at frequencies that are multiples of f0.

- Unvocalized speech, which includes consonants, such as 't', 'p', 'k', 's' (which are not produced by the vibration of the vocal cords), breathing, etc. All this manifests itself in the form of short bursts in the high-frequency region.

The first attempt using the rules

Let's forget for a second what is called machine learning. Can a vocal extraction method be developed based on our knowledge of the signal? Let me try ...

Naive vocal isolation V1.0:

- Identify sites with vocals. In the original signal a lot of things. We want to focus on those areas that really contain vocal content, and ignore everything else.

- To distinguish between voiced and unvoiced speech. As we have seen, they are very different. Probably, they need to be processed in different ways.

- Estimate the change in fundamental frequency over time.

- Based on pin 3, apply some kind of mask to capture the harmonics.

- Do something with fragments of unvoiced speech ...

If we work with dignity, the result should be a soft or bitmask , the application of which to the amplitude of the STFT (element-by-element multiplication) gives an approximate reconstruction of the amplitude of the STFT vocal. Then we combine this vocal STFT with information about the phase of the original signal, calculate the inverse STFT and get the time signal of the reconstructed vocals.

Doing it from scratch is a big job. But for the sake of demonstration, let's apply the implementation of the pYIN algorithm . Although it is designed to solve step 3, it performs steps 1 and 2 quite properly with the correct settings, tracking the vocal base even in the presence of music. The example below contains the output after processing this algorithm, without processing unvoiced speech.

So what...? He seems to have done all the work, but there is no good quality and close. Perhaps spending more time, energy and money, we will improve this method ...

But let me ask you ...

What happens if a few voices appear on the track, but this is often found in at least 50% of modern professional tracks?

What happens if vocals are processed with reverberation, delays and other effects? Let's take a look at the last chorus of Ariana Grande from this song.

Do you already feel pain ...? I am yes.

Such methods on the hard rules very quickly turn into a house of cards. The problem is too complicated. C too many rules, too many exceptions and too many different conditions (effects and details settings). The multi-step approach also implies that errors in one step spread problems to the next step. Improving each step will be very costly: it will take a large number of iterations to do everything correctly. And last but not least, it is likely that in the end we will have a very resource-intensive conveyor, which in itself can negate all efforts.

In such a situation, it’s time to start thinking about a more comprehensive approach and let ML find out some of the basic processes and operations necessary to solve the problem. But we still have to show our skills and do feature engineering, and you will see why.

Hypothesis: use neural network as a transfer function that translates mixes into vocals

Looking at the achievements of convolutional neural networks in photo processing, why not apply the same approach here?

Neural networks successfully solve such tasks as image coloring, sharpening and resolution

In the end, you can also present the sound signal "as an image" using the short-term Fourier transform, right? Although these sound images do not correspond to the statistical distribution of natural images, they still have spatial patterns (in time and frequency space) in which the network can be trained.

Left: drum beat and baseline at the bottom, a few synthesizer sounds in the middle, all this mixed with vocals. Right: vocals only

Conducting such an experiment would be costly because it is difficult to obtain or generate the necessary training data. But in applied research, I always try to apply this approach: first, to identify a simpler problem that confirms the same principles , but does not require a lot of work. This allows you to evaluate the hypothesis, to perform iterations faster and correct the model with minimal losses if it does not work as it should.

The implied condition is that the neural network must understand the structure of human speech . A simpler problem may be the following: will the neural network be able to detect the presence of speech on an arbitrary piece of sound recording . We are talking about a reliable voice activity detector (VAD) implemented in the form of a binary classifier.

Designing feature space

We know that beeps, such as music and human speech, are based on temporal dependencies. Simply put, nothing happens in isolation at a given time. If I want to know if there is a voice on a particular piece of sound recording, then I need to look at the neighboring regions. This temporal context provides good information about what is happening in the area of interest. At the same time, it is desirable to perform the classification with very small time increments in order to recognize the human voice with the highest possible time resolution.

Let's count a little ...

- Sampling frequency (fs): 22050 Hz (we lower the sampling rate from 44100 to 22050)

- STFT design: window size = 1024, hop size = 256, interpolation of the chalk scale for the weighing filter based on perception. Since our input data is real , you can work with half STFT (the explanation is beyond the scope of this article ...), keeping the DC component (optional), which gives us 513 frequency bins.

- Target resolution of classification: one STFT frame (~ 11.6 ms = 256/22050)

- Target time context: ~ 300 milliseconds = 25 STFT frames.

- Target number of teaching examples: 500 thousand

- Assuming that we use a sliding window in steps of 1 STFT timeframe to generate training data, we need about 1.6 hours of tagged sound to generate 500 thousand sample data.

With the above requirements, the input and output of our binary classifier is as follows:

Model

Using Keras, we will build a small model of a neural network to test our hypothesis.

import keras from keras.models import Sequential from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D from keras.optimizers import SGD from keras.layers.advanced_activations import LeakyReLU model = Sequential() model.add(Conv2D(16, (3,3), padding='same', input_shape=(513, 25, 1))) model.add(LeakyReLU()) model.add(Conv2D(16, (3,3), padding='same')) model.add(LeakyReLU()) model.add(MaxPooling2D(pool_size=(3,3))) model.add(Dropout(0.25)) model.add(Conv2D(16, (3,3), padding='same')) model.add(LeakyReLU()) model.add(Conv2D(16, (3,3), padding='same')) model.add(LeakyReLU()) model.add(MaxPooling2D(pool_size=(3,3))) model.add(Dropout(0.25)) model.add(Flatten()) model.add(Dense(64)) model.add(LeakyReLU()) model.add(Dropout(0.5)) model.add(Dense(1, activation='sigmoid')) sgd = SGD(lr=0.001, decay=1e-6, momentum=0.9, nesterov=True) model.compile(loss=keras.losses.binary_crossentropy, optimizer=sgd, metrics=['accuracy'])

By separating the 80/20 data into training and testing after ~ 50 epochs, we get accuracy when testing ~ 97% . This is sufficient proof that our model is able to distinguish vocals in musical sound fragments (and fragments without vocals). If you check some feature maps from the 4th convolutional layer, then we can conclude that the neural network seems to have optimized its cores for two tasks: filtering the music and filtering the vocals ...

An example of an object map at the output of the 4th convolutional layer. Apparently, the output on the left is the result of kernel operations in an attempt to save vocal content while ignoring music. High values resemble the harmonic structure of human speech. The object map on the right appears to be the result of the opposite task.

From voice detector to disconnect signal

Having solved a simpler classification problem, how do we get to the real vocal selection of music? Well, looking at the first naive method, we still want to somehow get the amplitude spectrogram for vocals. Now it becomes a regression task. What we want to do is for a specific timeframe from the STFT of the original signal, that is, a mix (with sufficient time context), to calculate the corresponding amplitude spectrum for the vocals in this timeframe.

What about the training data set? (you can ask me at this moment)

Damn ... why so. I was going to review this at the end of the article so as not to be distracted from the topic!

If our model is well trained, then for logical inference it is only necessary to implement a simple sliding window to the STFT mix. After each prediction, move the window to the right by 1 timeframe, predict the next frame with vocals and associate it with the previous prediction. As for the model, let's take the same model that was used for the voice detector and make small changes: the output waveform is now (513.1), linear activation at the output, MSE as a function of loss. Now we begin training.

Don't rejoice yet ...

Although such an I / O representation makes sense, after training our model several times, with different parameters and data normalizations, there are no results. It seems we are asking too much ...

We have moved from a binary classifier to a regression on a 513-dimensional vector. Although the network is studying the task to some extent, there are still obvious artifacts and interference from other sources in the restored vocals. Even after adding additional layers and increasing the number of model parameters, the results do not change much. And then the question arises: how to deceive the “simplify” task for the network, and at the same time achieve the desired results?

What if, instead of estimating the amplitude of the STFT vocal, to train the network in obtaining a binary mask, which, when applied to the STFT mix, gives us a simplified, but perceptually acceptable amplitude vocal spectrogram?

Experimenting with various heuristics, we came up with a very simple (and, of course, unorthodox in terms of signal processing ...) method of extracting vocals from mixes using binary masks. Without going into details, the essence is as follows. Imagine the output as a binary image, where the value '1' indicates the predominant presence of vocal content at a given frequency and timeframe, and the value '0' indicates the prevailing presence of music in a given place. We can call it the binarization of perception , just to come up with some name. Visually, it looks pretty ugly, to be honest, but the results are surprisingly good.

Now our problem becomes a peculiar hybrid of regression-classification (very roughly speaking ...). We ask the model to “classify pixels” at the output as vocal or non-vocal, although conceptually (as well as from the point of view of the MSE loss function used) the task still remains regression.

Although this distinction may seem irrelevant to some, it makes a big difference to the model's ability to learn the task, because the second formulation is simpler and more constrained. At the same time, it lets us keep the model relatively small in terms of the number of parameters, given the complexity of the task, which is very desirable for real-time operation, a design requirement in this case. After some minor tweaks, the final model looks like this.

How to restore the time domain signal?

Essentially, as in the naive method. In this case, each pass predicts one timeframe of the binary vocal mask. Again, using a simple sliding window with a stride of one timeframe, we keep predicting and concatenating successive timeframes, which eventually make up the entire binary vocal mask.
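Once the full mask is available, going back to the time domain can be sketched as follows. This is a minimal NumPy-only illustration under several assumptions: a 1024-point FFT (which yields 513 bins), a hop of 256 samples, a Hann window, a 0.5 threshold on the network output, and reuse of the phase of the mix. The `stft` and `istft` helpers are simplified stand-ins written for this example, not the pipeline's actual code:

```python
import numpy as np

N_FFT = 1024   # yields N_FFT // 2 + 1 = 513 bins, matching the text
HOP = 256      # hop size in samples (assumed value)


def stft(x, n_fft=N_FFT, hop=HOP):
    """Hann-windowed STFT; trailing samples that do not fill a frame are dropped."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack(
        [x[i * hop:i * hop + n_fft] * window for i in range(n_frames)], axis=1
    )
    return np.fft.rfft(frames, axis=0)            # shape (513, n_frames)


def istft(spec, n_fft=N_FFT, hop=HOP):
    """Weighted overlap-add inverse of the stft above."""
    window = np.hanning(n_fft)
    n_frames = spec.shape[1]
    frames = np.fft.irfft(spec, n=n_fft, axis=0)  # shape (n_fft, n_frames)
    out = np.zeros(n_fft + hop * (n_frames - 1))
    norm = np.zeros_like(out)
    for i in range(n_frames):
        start = i * hop
        out[start:start + n_fft] += frames[:, i] * window
        norm[start:start + n_fft] += window ** 2
    return out / np.maximum(norm, 1e-8)


def reconstruct_vocals(mix, predicted_mask, threshold=0.5):
    """Binarize the predicted mask, apply it to the complex mix STFT, invert."""
    mix_spec = stft(mix)                           # complex, so it carries the mix phase
    mask = (predicted_mask >= threshold).astype(float)
    return istft(mask * mix_spec)


rng = np.random.default_rng(0)
mix = rng.normal(size=22050)
pass_through = np.ones(stft(mix).shape)            # a mask that keeps every bin
vocals = reconstruct_vocals(mix, pass_through)
```

With an all-ones mask the output reproduces the mix away from the signal edges, which is a convenient sanity check before plugging in a predicted mask.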

Creating a training set

As you know, one of the main problems in supervised learning (forget those toy examples with ready-made datasets) is getting the right data, in both quantity and quality, for the specific problem you are trying to solve. Given the input and output representations described above, training our model first requires a significant number of mixes and their corresponding, ideally aligned and normalized, vocal tracks. Such a set can be created in several ways, and we used a combination of strategies, ranging from manually creating [mix <-> vocals] pairs from several a cappellas found on the Internet, to searching for musical material of rock bands and scraping YouTube. Just to give you an idea of how time-consuming and painful the process is, part of the project was the development of a tool like this for automatic [mix <-> vocals] pairing:

You need a really large amount of data in order for the neural network to learn the transfer function for translating mixes into vocals. Our final set consisted of about 15 million samples of 300 ms mixes and their corresponding vocal binary masks.
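To give a feel for how such samples might be produced, here is a hedged sketch that slices one aligned pair (mix magnitude spectrogram, binary vocal mask) into training examples. It assumes a 25-frame window, which is roughly 300 ms at a hop of 256 samples and a 22.05 kHz sample rate (25 × 256 / 22050 ≈ 0.29 s), and it assumes the target is the mask frame at the centre of the window. These values and the function name are illustrative assumptions:

```python
import numpy as np

WINDOW = 25  # frames per sample, about 300 ms under the assumptions above


def make_training_pairs(mix_mag, vocal_mask, window=WINDOW):
    """Slice an aligned (mix, mask) pair into (input window, target frame) samples."""
    n_bins, n_frames = mix_mag.shape
    half = window // 2
    inputs, targets = [], []
    for start in range(n_frames - window + 1):
        inputs.append(mix_mag[:, start:start + window])   # (n_bins, window)
        targets.append(vocal_mask[:, start + half])        # (n_bins,)
    return np.stack(inputs), np.stack(targets)


rng = np.random.default_rng(0)
mix_mag = np.abs(rng.normal(size=(513, 100)))
vocal_mask = (rng.random(size=(513, 100)) > 0.5).astype(np.float32)

X, Y = make_training_pairs(mix_mag, vocal_mask)
```

With a stride of one frame, a 100-frame excerpt already yields 76 samples, which shows how quickly the count grows over many songs.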

Pipeline architecture

As you probably know, creating an ML model for a specific task is only half the battle. In the real world, you need to think about the software architecture, especially if you need to work in real time or close to it.

In this particular implementation, the time-domain reconstruction can happen right after the full binary vocal mask has been predicted (offline mode) or, more interestingly, in multi-threaded mode, where we receive and process data, reconstruct the vocals, and play back the sound, all in small segments. This is close to streaming and even near real time, processing music that is recorded on the fly with minimal delay. In general, this is a separate topic, and I will leave it for another article on real-time ML pipelines ...
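The real pipeline is not shown in this article, so the following is only a generic sketch of the multi-threaded idea using Python's standard `queue` and `threading` modules. The stage names, the queue sizes, and the `process` callback are all hypothetical:

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream


def capture(chunks, out_q):
    """Producer: push incoming audio segments into the pipeline."""
    for chunk in chunks:
        out_q.put(chunk)
    out_q.put(SENTINEL)


def separate(in_q, out_q, process):
    """Worker: run the (hypothetical) separation step on each segment."""
    while True:
        chunk = in_q.get()
        if chunk is SENTINEL:
            out_q.put(SENTINEL)
            break
        out_q.put(process(chunk))


def run_pipeline(chunks, process):
    """Wire the stages together and collect the segments in playback order."""
    raw_q = queue.Queue(maxsize=4)     # bounded queues keep latency low
    vocal_q = queue.Queue(maxsize=4)
    workers = [
        threading.Thread(target=capture, args=(chunks, raw_q)),
        threading.Thread(target=separate, args=(raw_q, vocal_q, process)),
    ]
    for worker in workers:
        worker.start()
    played = []
    while True:
        segment = vocal_q.get()
        if segment is SENTINEL:
            break
        played.append(segment)         # a real player would write to the audio device
    for worker in workers:
        worker.join()
    return played


# Toy run: "separation" here just halves every sample.
result = run_pipeline([[1, 2], [3, 4], [5, 6]], lambda c: [v * 0.5 for v in c])
```

Each stage only ever holds a few small segments, so playback can start long before the whole track has been processed.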

I have probably said enough, so why not listen to a couple of examples!?

Daft Punk - Get Lucky (studio recording)

Here you can hear some minimal noise from the drums ...

Adele - Set Fire to the Rain (live recording!)

Notice how, at the very beginning, our model extracts the shouting of the crowd as vocal content :). In this case there is some interference from other sources. Since this is a live recording, it seems acceptable that the extracted vocals are of lower quality than in the previous example.

Oh yes, and "one more thing" ...

If the system works for vocals, why not apply it to other instruments ...?

The article is already quite long, but considering the work done, you deserve to hear one last demo. With exactly the same logic as for extracting vocals, we can try to split stereo music into its components (drums, bass, vocals, others) by making some changes to our model and, of course, having an appropriate training set :).

Thank you for reading. As a final note: as you can see, the actual model of our convolutional neural network is nothing special. The success of this work came from feature engineering and a careful hypothesis-testing process, which I will write about in future articles!

Source: https://habr.com/ru/post/441090/