Advanced Three.js: shader materials and post processing

There are plenty of introductions to the basics of Three.js, but far fewer materials on more advanced topics. One of those topics is combining shaders with scenes that contain three-dimensional models. To many novice developers these look like incompatible things from different worlds. Today, using a simple “plasma sphere” example, we will look at what ShaderMaterial is and how to use it, as well as what EffectComposer is and how quickly you can add post-processing to a rendered scene.

It is assumed that the reader is familiar with the basics of working with Three.js and understands how shaders work. If you have not come across this before, then I highly recommend reading:

- An introduction to programming shaders for web designers

- We apply Voronoi mosaic and geometric masks in shaders

- Three-dimensional presentation of products on Three.js for the smallest

But let's get started ...

ShaderMaterial - what is it?

We have already seen how a flat texture is used and how it is stretched onto a three-dimensional object. That texture was an ordinary picture. When we looked at writing fragment shaders, everything was flat there too. So if we can generate a flat image with a shader, why not use it as a texture?

This thought is the basis of the shader material. When creating a material for a three-dimensional object, we specify shaders for it instead of a texture. In its basic form, it looks like this:

```js
const shaderMaterial = new THREE.ShaderMaterial({
    uniforms: {
        // ...
    },
    vertexShader: '...',
    fragmentShader: '...'
});
```

The fragment shader will be used to create the texture of the material. And what, you will of course ask, will the vertex shader do? Will it again just do the banal coordinate recalculation? Yes, we will start with this simple option, but we can also set an offset or perform other manipulations for each vertex of a three-dimensional object. We are no longer restricted to a plane. But it is better to look at all this with an example, since words alone explain little. Create a scene and put one sphere in the center.

We will use ShaderMaterial as a material for a sphere:

```js
const geometry = new THREE.SphereBufferGeometry(30, 64, 64);
const shaderMaterial = new THREE.ShaderMaterial({
    uniforms: {
        // . . .
    },
    vertexShader: document.getElementById('sphere-vertex-shader').textContent,
    fragmentShader: document.getElementById('sphere-fragment-shader').textContent
});
const sphere = new THREE.Mesh(geometry, shaderMaterial);

SCENE.add(sphere);
```

The vertex shader will be neutral:

```glsl
void main() {
    gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
}
```

Note that Three.js passes in its own uniform variables. We do not have to do anything; they are declared implicitly. They contain the various matrices that we already have access to from JS, as well as the camera position. Imagine that something like this is inserted at the beginning of the shaders:

```glsl
// = object.matrixWorld
uniform mat4 modelMatrix;

// = camera.matrixWorldInverse * object.matrixWorld
uniform mat4 modelViewMatrix;

// = camera.projectionMatrix
uniform mat4 projectionMatrix;

// = camera.matrixWorldInverse
uniform mat4 viewMatrix;

// = inverse transpose of modelViewMatrix
uniform mat3 normalMatrix;

// = camera position in world space
uniform vec3 cameraPosition;
```

In addition, several attribute variables are passed to the vertex shader:

```glsl
attribute vec3 position;
attribute vec3 normal;
attribute vec2 uv;
```

The names make it clear what they are: the position of the current vertex, the normal to the surface at this point, and the texture coordinates that the vertex corresponds to.

Traditionally, coordinates in space are denoted (x, y, z), and coordinates on the texture plane (u, v). Hence the name of the variable; you will often see it in various examples. We need to pass these coordinates to the fragment shader in order to work with them there. Let's do that.

```glsl
varying vec2 vUv;

void main() {
    vUv = uv;

    gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
}
```

To begin with, let the fragment shader be something like this:

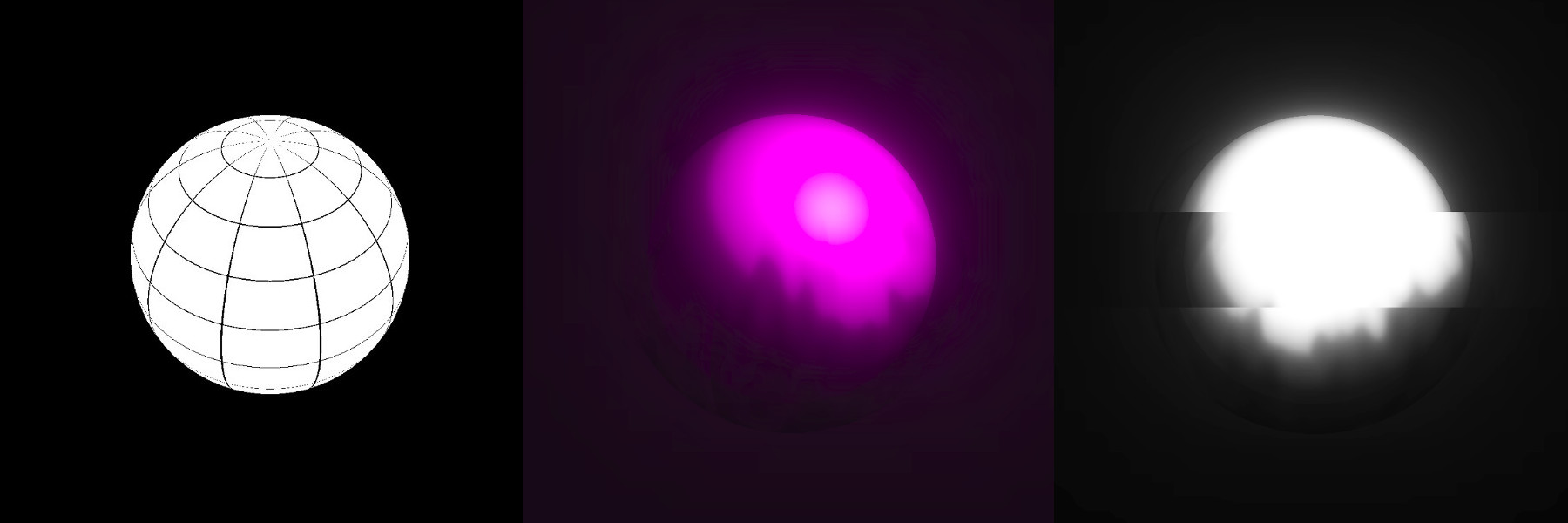

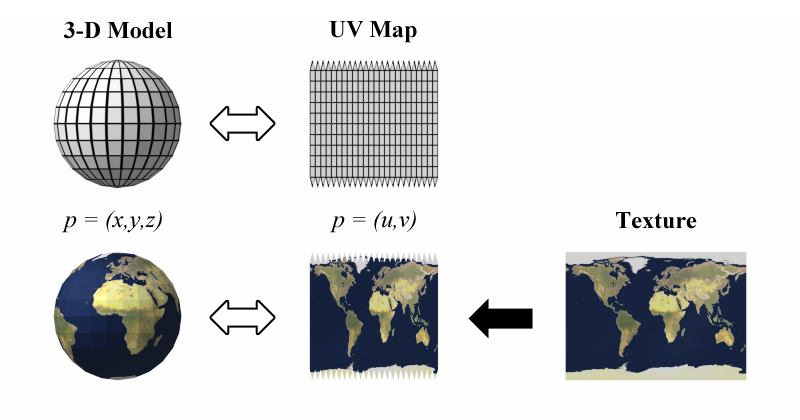

```glsl
#define EPSILON 0.02

varying vec2 vUv;

void main() {
    if ((fract(vUv.x * 10.0) < EPSILON) || (fract(vUv.y * 10.0) < EPSILON)) {
        gl_FragColor = vec4(vec3(0.0), 1.0);
    } else {
        gl_FragColor = vec4(1.0);
    }
}
```

It simply draws a grid. If you think about it, on a plane it would be just a grid of squares, but since we apply it to a sphere, it is distorted and turns into a globe. Wikipedia has a good picture illustrating what is happening:

That is, in the fragment shader we make a flat texture, as in the center of this illustration, and Three.js then stretches it onto the sphere. Very convenient.
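To get a feel for how the flat pattern lands on the sphere, here is a minimal CPU-side sketch in plain JavaScript. `isGridLine` repeats the test from the fragment shader above, and `uvToSphere` is a hypothetical helper that maps (u, v) to a point on a sphere using the usual longitude / latitude layout. The exact orientation Three.js uses for its sphere geometry may differ, so treat the mapping as an illustration rather than the library's implementation.

```javascript
// CPU-side port of the grid test from the fragment shader (illustration only).
const EPSILON = 0.02;

// Equivalent of GLSL fract(): the fractional part of x.
function fract(x) {
    return x - Math.floor(x);
}

// True where the shader would paint a black grid line.
function isGridLine(u, v, cells = 10) {
    return fract(u * cells) < EPSILON || fract(v * cells) < EPSILON;
}

// Hypothetical helper: maps texture coordinates to a point on a sphere.
// Assumes u runs around the equator and v runs from the bottom pole (0)
// to the top pole (1).
function uvToSphere(u, v, radius) {
    const phi = u * 2 * Math.PI;      // longitude
    const theta = (1 - v) * Math.PI;  // angle measured from the top pole
    return {
        x: -radius * Math.cos(phi) * Math.sin(theta),
        y: radius * Math.cos(theta),
        z: radius * Math.sin(phi) * Math.sin(theta)
    };
}

console.log(isGridLine(0.55, 0.55)); // false: middle of a cell
console.log(isGridLine(0.5, 0.55));  // true: on a vertical grid line
console.log(uvToSphere(0.25, 0.5, 30));
```

Under this mapping, lines of constant v become parallels and lines of constant u become meridians, which is why the square grid ends up looking like a globe.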

Of course, for more complex models the unwrapping will be more complex. But usually, when building design-heavy sites, we work with simple geometric shapes, and it is easy to picture the unwrapping in your head.

OK, so what can we do with this?

The main feature is that a shader material can change over time. It is not a static thing that we painted once and forgot about; we can animate it, both in color (in the fragment shader) and in shape (in the vertex shader). This is a very powerful tool.

In our example, we will make a fire enveloping the sphere. There will be two spheres: an ordinary one inside, and a second one made of shader material outside, with a larger radius. Adding the second sphere needs no comment.

To begin, add time as a uniform variable for the shaders in our material. We cannot get anywhere without time. We have already done this in pure JS, and in Three.js it is just as simple. Let the time in the shaders be called uTime, and be stored in the variable TIME:

```js
function updateUniforms() {
    SCENE.traverse((child) => {
        if (child instanceof THREE.Mesh
            && child.material.type === 'ShaderMaterial') {
            child.material.uniforms.uTime.value = TIME;
            // Uniform values are uploaded again on every render,
            // so this flag is not strictly required here.
            child.material.needsUpdate = true;
        }
    });
}
```

We update everything each time the animate function is called:

```js
function animate() {
    requestAnimationFrame(animate);

    TIME += 0.005;

    updateUniforms();
    render();
}
```

The fire

Creating fire is in fact very similar to generating a landscape, only instead of heights we have color. Or transparency, as in our case.

We have already seen the random and noise functions, so we will not analyze them in detail. All we need is to layer noise at different frequencies to add variety, and make each layer shift at a different speed. We get something like flames: the big ones move slowly, the small ones move faster:

```glsl
uniform float uTime;

varying vec2 vUv;

float rand(vec2);
float noise(vec2);

void main() {
    vec2 position1 = vec2(vUv.x * 4.0, vUv.y - uTime);
    vec2 position2 = vec2(vUv.x * 4.0, vUv.y - uTime * 2.0);
    vec2 position3 = vec2(vUv.x * 4.0, vUv.y - uTime * 3.0);

    float color = (
        noise(position1 * 5.0) +
        noise(position2 * 10.0) +
        noise(position3 * 15.0)) / 3.0;

    gl_FragColor = vec4(0.0, 0.0, 0.0, color - smoothstep(0.1, 1.3, vUv.y));
}

float rand(vec2 seed) {
    return fract(sin(dot(seed, vec2(12.9898,78.233))) * 43758.5453123);
}

float noise(vec2 position) {
    vec2 blockPosition = floor(position);

    float topLeftValue     = rand(blockPosition);
    float topRightValue    = rand(blockPosition + vec2(1.0, 0.0));
    float bottomLeftValue  = rand(blockPosition + vec2(0.0, 1.0));
    float bottomRightValue = rand(blockPosition + vec2(1.0, 1.0));

    vec2 computedValue = smoothstep(0.0, 1.0, fract(position));

    return mix(topLeftValue, topRightValue, computedValue.x)
        + (bottomLeftValue  - topLeftValue)  * computedValue.y * (1.0 - computedValue.x)
        + (bottomRightValue - topRightValue) * computedValue.x * computedValue.y;
}
```

So that the flame does not cover the entire sphere, we play with the fourth color component, transparency, and tie it to the y coordinate. In our case this option is very convenient. More generally, we overlay a transparency gradient on the noise.

At moments like this it is useful to remember the smoothstep function.
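As a reminder of what smoothstep does, here is a minimal JavaScript sketch of the formula GLSL defines for it, together with the alpha falloff from the fragment shader above. `flameAlpha` is a hypothetical helper name used only for this illustration.

```javascript
// JavaScript equivalent of GLSL smoothstep(edge0, edge1, x):
// 0 below edge0, 1 above edge1, and a smooth Hermite curve in between.
function smoothstep(edge0, edge1, x) {
    const t = Math.min(Math.max((x - edge0) / (edge1 - edge0), 0), 1);
    return t * t * (3 - 2 * t);
}

// Hypothetical helper mirroring the shader's alpha channel:
// a noise value minus a gradient that grows with the v coordinate.
function flameAlpha(noiseValue, v) {
    return noiseValue - smoothstep(0.1, 1.3, v);
}

// The same noise value fades out as v goes from 0 to 1.
for (const v of [0.0, 0.25, 0.5, 0.75, 1.0]) {
    console.log(v, flameAlpha(0.6, v).toFixed(3));
}
```

The edges 0.1 and 1.3 are the same magic numbers as in the shader; since v never exceeds 1.0, the gradient never quite reaches 1, so the strongest noise values can still show through near the top.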

In general, this approach to creating fire with shaders is a classic. You will often come across it in various places. It is worth playing with the magic numbers: in the example they were picked at random, and the look of the plasma depends on them.
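One hedged way to experiment with those numbers without touching the GPU is to port the noise functions to plain JavaScript and print a few values. The sketch below mirrors rand and noise from the fragment shader; `layeredNoise` is a hypothetical wrapper around the three-layer sum. JavaScript works in double precision while shaders typically use single precision floats, so the exact numbers will not match what the shader produces, only the overall behavior will.

```javascript
const fract = (x) => x - Math.floor(x);
const mix = (a, b, t) => a * (1 - t) + b * t;
// smoothstep(0.0, 1.0, t) for t that is already inside [0, 1)
const smooth01 = (t) => t * t * (3 - 2 * t);

// Pseudo-random value for a 2D seed, as in the shader.
function rand([x, y]) {
    return fract(Math.sin(x * 12.9898 + y * 78.233) * 43758.5453123);
}

// Value noise: random values at integer lattice points, smoothly blended.
function noise([x, y]) {
    const bx = Math.floor(x);
    const by = Math.floor(y);
    const topLeft = rand([bx, by]);
    const topRight = rand([bx + 1, by]);
    const bottomLeft = rand([bx, by + 1]);
    const bottomRight = rand([bx + 1, by + 1]);
    const cx = smooth01(fract(x));
    const cy = smooth01(fract(y));
    return mix(topLeft, topRight, cx)
        + (bottomLeft - topLeft) * cy * (1 - cx)
        + (bottomRight - topRight) * cx * cy;
}

// Hypothetical wrapper: layers at different frequencies and scroll speeds.
// The defaults are the magic numbers from the shader.
function layeredNoise(u, v, time, frequencies = [5, 10, 15], speeds = [1, 2, 3]) {
    let sum = 0;
    for (let i = 0; i < frequencies.length; i++) {
        const px = u * 4 * frequencies[i];
        const py = (v - time * speeds[i]) * frequencies[i];
        sum += noise([px, py]);
    }
    return sum / frequencies.length;
}

console.log(layeredNoise(0.3, 0.4, 0.0));
console.log(layeredNoise(0.3, 0.4, 0.1));
```

Passing other arrays for `frequencies` and `speeds` is a quick way to see how much a single coefficient changes the result before moving it back into the shader.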

To make the fire more interesting, let's move on to the vertex shader and work a little magic ...

How do we make the flame “flow” a little in space? For beginners this question can cause great difficulty, despite its simplicity. I have seen very complex approaches to this problem, but in fact we just need to smoothly move the vertices of the sphere along lines going “from its center”. Back and forth, back and forth. Three.js has already given us the current position of the vertex and the normal, so we will use them. For “back and forth”, any bounded function will do, for example sine. You can experiment, of course, but sine is the default option.

If you do not know what to take, take a sine. Better yet, a sum of sines with different frequencies.

We shift the coordinates along the normal by the obtained value and recalculate the position using the formula we already know.

```glsl
uniform float uTime;

varying vec2 vUv;

void main() {
    vUv = uv;

    vec3 delta = normal * sin(position.x * position.y * uTime / 10.0);
    vec3 newPosition = position + delta;

    gl_Position = projectionMatrix * modelViewMatrix * vec4(newPosition, 1.0);
}
```

What we get is no longer a sphere. It is ... I don't even know if this shape has a name. But, again, do not forget to play with the coefficients; they affect a lot. When creating such effects, many things are chosen by trial and error, and it is very useful to develop “mathematical intuition”: the ability to more or less understand how a particular function behaves and how it depends on each variable.
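The displacement is easy to check on the CPU. The following is a minimal JavaScript sketch of the same formula, plus a hypothetical `displaceSumOfSines` variant that follows the “sum of sines” advice; its frequencies and weights are arbitrary assumptions, not values from the example.

```javascript
// Moves a vertex along its normal, mirroring the vertex shader above.
// position and normal are [x, y, z] arrays; normal is assumed to be unit length.
function displace(position, normal, time) {
    const amount = Math.sin(position[0] * position[1] * time / 10);
    return position.map((p, i) => p + normal[i] * amount);
}

// Hypothetical variant: a weighted sum of sines with different frequencies.
// The weights add up to 1, so the offset still stays within [-1, 1].
function displaceSumOfSines(position, normal, time) {
    const phase = position[0] * position[1] * time / 10;
    const amount = 0.6 * Math.sin(phase)
        + 0.3 * Math.sin(phase * 2.3)
        + 0.1 * Math.sin(phase * 5.1);
    return position.map((p, i) => p + normal[i] * amount);
}

// A vertex on a sphere of radius 30; on a sphere the normal is position / radius.
const radius = 30;
const position = [18, 24, 0];
const normal = position.map((p) => p / radius);

for (const time of [0, 0.5, 1.0]) {
    const moved = displace(position, normal, time);
    console.log(time, Math.hypot(...moved).toFixed(3));
}
```

Because sine stays within [-1, 1] and the normal has unit length, every vertex stays within one unit of the original surface, so for a sphere of radius 30 the distance from the center remains between 29 and 31.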

At this stage, we have an interesting but slightly clumsy image. So first we will look a little at post-processing, and then move on to a live example.

Post processing

The ability to do something with the image rendered by Three.js is very useful, yet undeservedly forgotten in many tutorial series. Technically, it works as follows: the image that the renderer gave us is sent to the EffectComposer (a black box for now), which does its magic inside and outputs the final image to the canvas. That is, another module is added after the renderer. We pass parameters to this composer that say what to do with the resulting image. One such parameter is called a pass. In a sense, the composer works like Gulp: it does nothing by itself, and we give it plugins that do the actual work. Perhaps that is not an entirely accurate comparison, but the idea should be clear.
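The idea of a chain of passes can be modeled in a few lines of plain JavaScript. This is only a toy sketch of the concept: `ToyComposer` and the pass functions below are hypothetical names and do not reflect how EffectComposer is implemented, and an image here is just an array of brightness values.

```javascript
// Toy model: a composer that feeds the output of each pass into the next one.
class ToyComposer {
    constructor() {
        this.passes = [];
    }

    addPass(pass) {
        this.passes.push(pass);
    }

    // Runs every pass in order and returns the final image.
    render(initialImage) {
        return this.passes.reduce((image, pass) => pass(image), initialImage);
    }
}

// Hypothetical passes; each takes an image and returns a new one.
// The first one ignores its input, like a pass that renders the scene.
const renderScene = () => [0.1, 0.5, 0.9];
const brighten = (image) => image.map((p) => Math.min(p + 0.2, 1));
const threshold = (image) => image.map((p) => (p > 0.5 ? 1 : 0));

const composer = new ToyComposer();
composer.addPass(renderScene);
composer.addPass(brighten);
composer.addPass(threshold);

console.log(composer.render([])); // [ 0, 1, 1 ]
```

The composer itself contains no image logic at all; swapping, removing or reordering the passes changes the result, which is the plugin-like behavior described above.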

Everything that we will use from here on is not included in the core of Three.js, so we include a few dependencies, along with the dependencies of those dependencies:

```html
<script src='https://unpkg.com/three@0.99.0/examples/js/postprocessing/EffectComposer.js'></script>
<script src='https://unpkg.com/three@0.99.0/examples/js/postprocessing/RenderPass.js'></script>
<script src='https://unpkg.com/three@0.99.0/examples/js/postprocessing/ShaderPass.js'></script>
<script src='https://unpkg.com/three@0.99.0/examples/js/shaders/CopyShader.js'></script>
<script src='https://unpkg.com/three@0.99.0/examples/js/shaders/LuminosityHighPassShader.js'></script>
<script src='https://unpkg.com/three@0.99.0/examples/js/postprocessing/UnrealBloomPass.js'></script>
```

Remember that these scripts are included in the three package, so you can bundle all of this together using webpack or a similar tool.

In its basic form, a composer is created like this:

```js
COMPOSER = new THREE.EffectComposer(RENDERER);

COMPOSER.setSize(window.innerWidth, window.innerHeight);

const renderPass = new THREE.RenderPass(SCENE, CAMERA);

renderPass.renderToScreen = true;
COMPOSER.addPass(renderPass);
```

RenderPass does virtually nothing new. It simply renders what we used to get from the regular renderer. In fact, if you look at the source of RenderPass, you will find the standard renderer call there. Since rendering now takes place there, we need to replace the renderer with the composer in our script:

```js
function render() {
    // RENDERER.render(SCENE, CAMERA);

    // The composer already received the scene and camera through RenderPass,
    // so they do not need to be passed again here.
    COMPOSER.render();
}
```

Using RenderPass as the first pass is standard practice when working with EffectComposer. Usually we first need a rendered image of the scene, and only then do something with it.

Among the Three.js examples, in the postprocessing section, you can find a thing called UnrealBloomPass. It is a script ported from the Unreal engine. It adds a slight glow that can be used to create more attractive lighting. Often this is the first step towards improving the image.

```js
const bloomPass = new THREE.UnrealBloomPass(
    new THREE.Vector2(window.innerWidth, window.innerHeight),
    1.5, 1, 0.1);

bloomPass.renderToScreen = true;
COMPOSER.addPass(bloomPass);
```

Note: the renderToScreen option is set only on the last pass given to the composer, so once bloomPass is added it should no longer be set on renderPass.
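One way to keep that rule from being forgotten as passes are added is a small helper that flips the flag for you. This is a sketch, not part of Three.js: `markLastPassForScreen` is a hypothetical function, and it assumes it is given an array of pass objects, such as the composer's `passes` array.

```javascript
// Hypothetical helper: only the final pass should render to the screen.
function markLastPassForScreen(passes) {
    passes.forEach((pass, index) => {
        pass.renderToScreen = index === passes.length - 1;
    });
    return passes;
}

// Plain objects stand in for real passes in this illustration.
const passes = [
    { name: 'renderPass', renderToScreen: true },
    { name: 'bloomPass', renderToScreen: true }
];

markLastPassForScreen(passes);
console.log(passes.map((p) => `${p.name}: ${p.renderToScreen}`));
// [ 'renderPass: false', 'bloomPass: true' ]
```

With a real composer it could be called as markLastPassForScreen(COMPOSER.passes) after the last addPass call, which removes the need to set the flag by hand on each pass.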

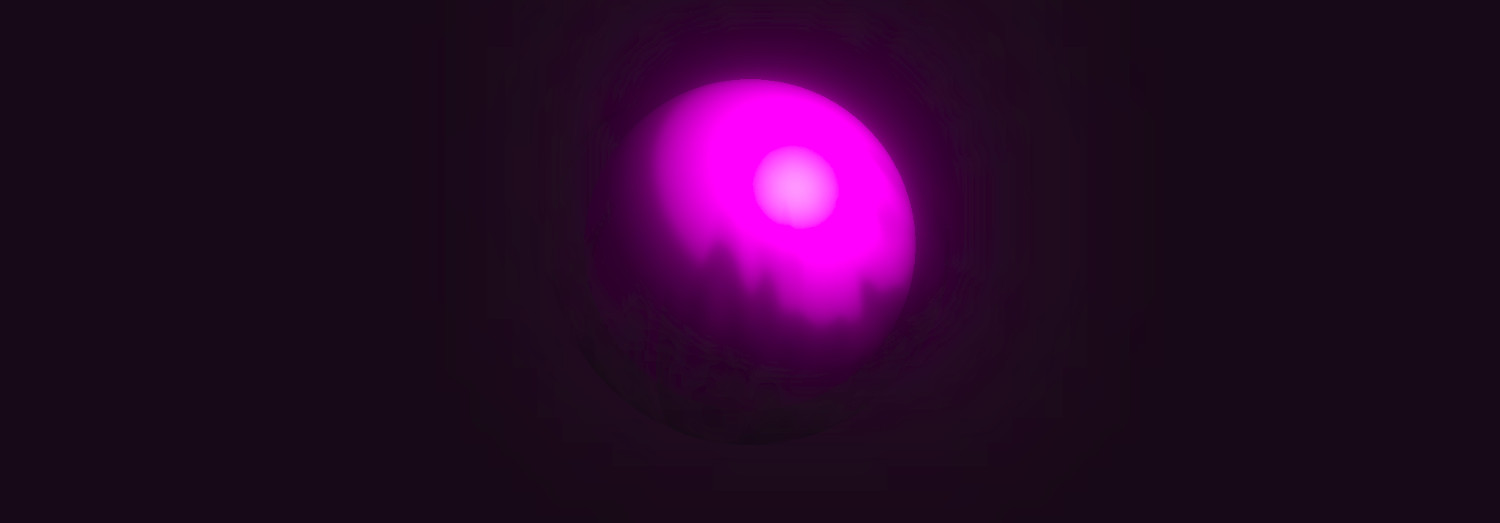

But let's see what kind of glow this bloomPass gave us and how it goes with the sphere:

You must agree, this is much more interesting than just a sphere and an ordinary light source, as they are usually shown in introductory Three.js lessons.

But we will go even further ...

More shaders for the shader god!

It is very useful to call console.log and look at the structure of the composer. In it you can find elements named renderTarget1 and renderTarget2. These are the two buffers that the passes read from and write to as they run one after another. And here it becomes clear why EffectComposer is called that. It works on the same principle as filters in SVG. Remember how the result of one filter can be used as the input of another? Here it is the same: effects can be combined.

Using console.log to understand the internal structure of Three.js objects and many other libraries is very useful. Use this approach frequently to better understand what is what.

Add another pass. This time it will be ShaderPass.

```js
const shader = {
    uniforms: {
        uRender: { value: COMPOSER.renderTarget2 },
        uTime: { value: TIME }
    },
    vertexShader: document.getElementById('postprocessing-vertex-shader').textContent,
    fragmentShader: document.getElementById('postprocessing-fragment-shader').textContent
};

const shaderPass = new THREE.ShaderPass(shader);

shaderPass.renderToScreen = true;
COMPOSER.addPass(shaderPass);
```

At this point renderTarget2 holds the result of the previous pass, bloomPass. We use it as a texture (it is in fact a flat, rendered image) and pass it to the new shader as a uniform variable.

Perhaps it is worth slowing down here to take in all the magic ...

Next we create a simple vertex shader. In most cases we do not need to do anything with the vertices at this stage; we only pass the (u, v) coordinates to the fragment shader:

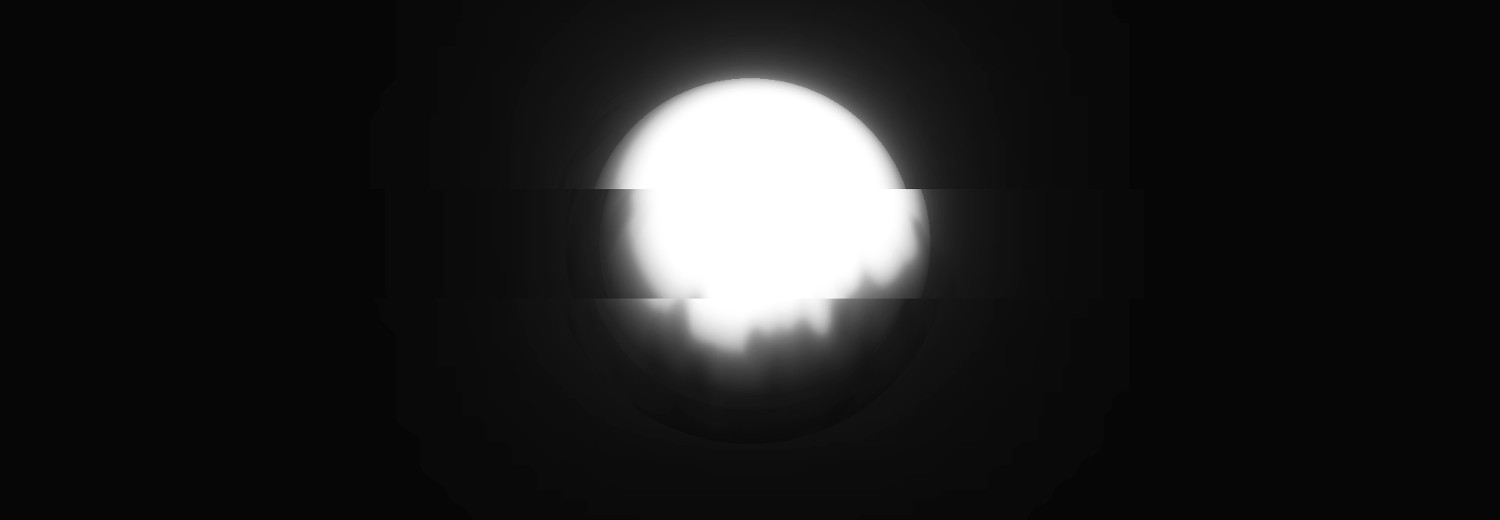

```glsl
varying vec2 vUv;

void main() {
    vUv = uv;

    gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
}
```

And in the fragment shader we can have fun to our own taste. For example, we can add a light glitch effect, make everything black and white, and play with brightness / contrast:

```glsl
uniform sampler2D uRender;
uniform float uTime;

varying vec2 vUv;

float rand(vec2);

void main() {
    float randomValue = rand(vec2(floor(vUv.y * 7.0), uTime / 1.0));

    vec4 color;

    if (randomValue < 0.02) {
        color = texture2D(uRender, vec2(vUv.x + randomValue - 0.01, vUv.y));
    } else {
        color = texture2D(uRender, vUv);
    }

    float lightness = (color.r + color.g + color.b) / 3.0;

    color.rgb = vec3(smoothstep(0.02, 0.7, lightness));

    gl_FragColor = color;
}

float rand(vec2 seed) {
    return fract(sin(dot(seed, vec2(12.9898,78.233))) * 43758.5453123);
}
```

Let's look at the result:

As you can see, the filters are applied on top of the sphere. It is still three-dimensional, nothing is broken, but on the canvas we get the processed image.
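One detail worth checking: the updateUniforms function shown earlier only walks the objects in SCENE, so it does not reach the material inside the post-processing pass, and as far as I can tell from its source, ShaderPass keeps its own copy of the uniforms it was given. If the glitch looks frozen, the pass may need its uTime updated separately. A minimal sketch, assuming the pass exposes its uniforms as a `uniforms` property; `updatePassTime` is a hypothetical helper, and a plain object stands in for the real pass here:

```javascript
// Hypothetical helper: pushes the current time into a pass's own uniforms.
function updatePassTime(pass, time) {
    if (pass.uniforms && pass.uniforms.uTime) {
        pass.uniforms.uTime.value = time;
    }
}

// A plain object standing in for shaderPass in this illustration.
const fakePass = { uniforms: { uTime: { value: 0 } } };

updatePassTime(fakePass, 1.25);
console.log(fakePass.uniforms.uTime.value); // 1.25
```

In the example it would be called from animate, next to updateUniforms(), as updatePassTime(shaderPass, TIME).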

Conclusion

Shader materials and post-processing in Three.js are two small but very powerful tools that are definitely worth adopting. There are countless ways to use them; you are limited only by your imagination. Even the simplest scenes can be changed beyond recognition with their help.

Source: https://habr.com/ru/post/436482/